2026

ConeGS: Error–Guided Densification Using Pixel Cones for Improved Reconstruction with Fewer Primitives (oral)

B. Baranowski, S. Esposito, P. Gschoßmann, A. Chen and A. Geiger

International Conference on 3D Vision (3DV), 2026

Abstract: 3D Gaussian Splatting (3DGS) achieves state-of-the-art image quality and real-time performance in novel view synthesis but often suffers from a suboptimal spatial distribution of primitives. This issue stems from cloning-based densification, which propagates Gaussians along existing geometry, limiting exploration and requiring many primitives to adequately cover the scene. We present ConeGS, an image-space-informed densification framework that is independent of existing scene geometry state. ConeGS first creates a fast Instant Neural Graphics Primitives (iNGP) reconstruction as a geometric proxy to estimate per-pixel depth. During the subsequent 3DGS optimization, it identifies high-error pixels and inserts new Gaussians along the corresponding viewing cones at the predicted depth values, initializing their size according to the cone diameter. A pre-activation opacity penalty rapidly removes redundant Gaussians, while a primitive budgeting strategy controls the total number of primitives, either by a fixed budget or by adapting to scene complexity, ensuring high reconstruction quality. Experiments show that ConeGS consistently enhances reconstruction quality and rendering performance across Gaussian budgets, with especially strong gains under tight primitive constraints where efficient placement is crucial.
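
A minimal NumPy sketch of the cone-based insertion step may help make it concrete. It is not the authors' implementation. It assumes a pinhole camera with intrinsics `K`, a 4×4 camera-to-world matrix, a per-pixel z-depth map standing in for the iNGP proxy depth, and a simple top-k rule for choosing high-error pixels. The function name `cone_insertions` and the pixel-center convention are hypothetical. Each new center is the pixel center back-projected to its predicted depth, and its size is the width of the pixel's viewing cone at that depth, roughly depth divided by focal length.

```python
import numpy as np


def cone_insertions(error, depth, K, cam_to_world, k):
    """Hypothetical sketch: centers and cone diameters for k new Gaussians.

    error, depth: (H, W) arrays; depth is z-depth along the camera axis (assumed).
    K: (3, 3) pinhole intrinsics; cam_to_world: (4, 4) rigid transform.
    """
    h, w = error.shape
    # Indices of the k highest-error pixels (simple top-k rule, an assumption).
    flat = np.argsort(error.ravel())[::-1][:k]
    v, u = np.unravel_index(flat, (h, w))
    d = depth[v, u]

    fx, fy, cx, cy = K[0, 0], K[1, 1], K[0, 2], K[1, 2]
    # Back-project pixel centers (u + 0.5, v + 0.5) to camera space at depth d.
    x = (u + 0.5 - cx) / fx * d
    y = (v + 0.5 - cy) / fy * d
    pts_cam = np.stack([x, y, d, np.ones_like(d)], axis=-1)  # homogeneous, (k, 4)
    centers = (pts_cam @ cam_to_world.T)[:, :3]

    # A pixel subtends about 1/f radians, so its cone is about d / f wide at depth d.
    diameters = d / (0.5 * (fx + fy))
    return centers, diameters
```

The returned diameters could then seed each new Gaussian's initial scale before optimization continues.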

Latex Bibtex Citation:

@inproceedings{Baranowski2026THREEDV,

author = {Bartłomiej Baranowski and Stefano Esposito and Patricia Gschoßmann and Anpei Chen and Andreas Geiger},

title = {ConeGS: Error–Guided Densification Using Pixel Cones for Improved Reconstruction with Fewer Primitives},

booktitle = {International Conference on 3D Vision (3DV)},

year = {2026}

}

2025

Transforming Science with Large Language Models: A Survey on AI-assisted Scientific Discovery, Experimentation, ...

S. Eger, Y. Cao, J. D'Souza, A. Geiger, C. Greisinger, S. Gross, Y. Hou, B. Krenn, A. Lauscher, Y. Li, et al.

Arxiv, 2025

Abstract: With the advent of large multimodal language models, science is now at a threshold of an AI-based technological transformation. Recently, a plethora of new AI models and tools has been proposed, promising to empower researchers and academics worldwide to conduct their research more effectively and efficiently. This includes all aspects of the research cycle, especially (1) searching for relevant literature; (2) generating research ideas and conducting experimentation; generating (3) text-based and (4) multimodal content (e.g., scientific figures and diagrams); and (5) AI-based automatic peer review. In this survey, we provide an in-depth overview of these exciting recent developments, which promise to fundamentally alter the scientific research process for good. Our survey covers the five aspects outlined above, indicating relevant datasets, methods and results (including evaluation) as well as limitations and scope for future research. Ethical concerns regarding shortcomings of these tools and potential for misuse (fake science, plagiarism, harms to research integrity) take a particularly prominent place in our discussion. We hope that our survey will not only become a reference guide for newcomers to the field but also a catalyst for new AI-based initiatives in the area of "AI4Science".

Latex Bibtex Citation:

@article{Eger2025ARXIV,

author = {Steffen Eger and Yong Cao and Jennifer D'Souza and Andreas Geiger and Christian Greisinger and Stephanie Gross and Yufang Hou and Brigitte Krenn and Anne Lauscher and Yizhi Li and Chenghua Lin and Nafise Sadat Moosavi and Wei Zhao and Tristan Miller},

title = {Transforming Science with Large Language Models: A Survey on AI-assisted Scientific Discovery, Experimentation, Content Generation, and Evaluation},

journal = {Arxiv},

year = {2025}

}

ReSim: Reliable World Simulation for Autonomous Driving

J. Yang, K. Chitta, S. Gao, L. Chen, Y. Shao, X. Jia, H. Li, A. Geiger, X. Yue and L. Chen

Advances in Neural Information Processing Systems (NeurIPS), 2025

Abstract: How can we reliably simulate future driving scenarios under a wide range of ego driving behaviors? Recent driving world models, developed exclusively on real-world driving data composed mainly of safe expert trajectories, struggle to follow hazardous or non-expert behaviors, which are rare in such data. This limitation restricts their applicability to tasks such as policy evaluation. In this work, we address this challenge by enriching real-world human demonstrations with diverse non-expert data collected from a driving simulator (e.g., CARLA), and building a controllable world model trained on this heterogeneous corpus. Starting with a video generator featuring a diffusion transformer architecture, we devise several strategies to effectively integrate conditioning signals and improve prediction controllability and fidelity. The resulting model, ReSim, enables Reliable Simulation of diverse open-world driving scenarios under various actions, including hazardous non-expert ones. To close the gap between high-fidelity simulation and applications that require reward signals to judge different actions, we introduce a Video2Reward module that estimates a reward from ReSim's simulated future. Our ReSim paradigm achieves up to 44% higher visual fidelity, improves controllability for both expert and non-expert actions by over 50%, and boosts planning and policy selection performance on NAVSIM by 2% and 25%, respectively.

Latex Bibtex Citation:

@inproceedings{Yang2025NEURIPS,

author = {Jiazhi Yang and Kashyap Chitta and Shenyuan Gao and Long Chen and Yuqian Shao and Xiaosong Jia and Hongyang Li and Andreas Geiger and Xiangyu Yue and Li Chen},

title = {ReSim: Reliable World Simulation for Autonomous Driving},

booktitle = {Advances in Neural Information Processing Systems (NeurIPS)},

year = {2025}

}

Latex Bibtex Citation:

@inproceedings{Yang2025NEURIPS,

author = {Jiazhi Yang and Kashyap Chitta and Shenyuan Gao and Long Chen and Yuqian Shao and Xiaosong Jia and Hongyang Li and Andreas Geiger and Xiangyu Yue and Li Chen},

title = {ReSim: Reliable World Simulation for Autonomous Driving},

booktitle = {Advances in Neural Information Processing Systems (NeurIPS)},

year = {2025}

}

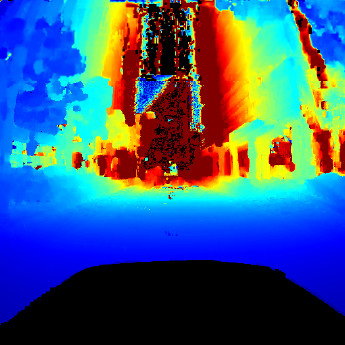

PanopticNeRF-360: Panoramic 3D-to-2D Label Transfer in Urban Scenes

X. Fu, S. Zhang, T. Chen, Y. Lu, X. Zhou, A. Geiger and Y. Liao

Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2025

Abstract: Training perception systems for self-driving cars requires substantial 2D annotations that are labor-intensive to label manually. While existing datasets provide rich annotations on pre-recorded sequences, they fall short in labeling rarely encountered viewpoints, potentially hampering the generalization ability of perception models. In this paper, we present PanopticNeRF-360, a novel approach that combines coarse 3D annotations with noisy 2D semantic cues to generate high-quality panoptic labels and images from any viewpoint. Our key insight lies in exploiting the complementarity of 3D and 2D priors to mutually enhance geometry and semantics. Specifically, we propose to leverage coarse 3D bounding primitives and noisy 2D semantic and instance predictions to guide geometry optimization, by encouraging predicted labels to match panoptic pseudo ground truth. Simultaneously, the improved geometry assists in filtering 3D and 2D annotation noise by fusing semantics in 3D space via a learned semantic field. To further enhance appearance, we combine MLPs and hash grids to yield hybrid scene features, striking a balance between high-frequency appearance and contiguous semantics. Our experiments demonstrate PanopticNeRF-360’s state-of-the-art performance over label transfer methods on the challenging urban scenes of the KITTI-360 dataset. Moreover, PanopticNeRF-360 enables omnidirectional rendering of high-fidelity, multi-view and spatiotemporally consistent appearance, semantic and instance labels.

Latex Bibtex Citation:

@article{Fu2025PAMI,

author = {Xiao Fu and Shangzhan Zhang and Tianrun Chen and Yichong Lu and Xiaowei Zhou and Andreas Geiger and Yiyi Liao},

title = {PanopticNeRF-360: Panoramic 3D-to-2D Label Transfer in Urban Scenes},

journal = {Transactions on Pattern Analysis and Machine Intelligence (TPAMI)},

year = {2025}

}

Easi3R: Estimating Disentangled Motion from DUSt3R Without Training

X. Chen, Y. Chen, Y. Xiu, A. Geiger and A. Chen

International Conference on Computer Vision (ICCV), 2025

Abstract: Recent advances in DUSt3R have enabled robust estimation of dense point clouds and camera parameters of static scenes, leveraging Transformer network architectures and direct supervision on large-scale 3D datasets. In contrast, the limited scale and diversity of available 4D datasets present a major bottleneck for training a highly generalizable 4D model. This constraint has driven conventional 4D methods to fine-tune 3D models on scalable dynamic video data with additional geometric priors such as optical flow and depths. In this work, we take an opposite path and introduce Easi3R, a simple yet efficient training-free method for 4D reconstruction. Our approach applies attention adaptation during inference, eliminating the need for from-scratch pre-training or network fine-tuning. We find that the attention layers in DUSt3R inherently encode rich information about camera and object motion. By carefully disentangling these attention maps, we achieve accurate dynamic region segmentation, camera pose estimation, and 4D dense point map reconstruction. Extensive experiments on real-world dynamic videos demonstrate that our lightweight attention adaptation significantly outperforms previous state-of-the-art methods that are trained or finetuned on extensive dynamic datasets.

Latex Bibtex Citation:

@inproceedings{Chen2025ICCV,

author = {Xingyu Chen and Yue Chen and Yuliang Xiu and Andreas Geiger and Anpei Chen},

title = {Easi3R: Estimating Disentangled Motion from DUSt3R Without Training},

booktitle = {International Conference on Computer Vision (ICCV)},

year = {2025}

}

LoftUp: Learning a Coordinate-Based Feature Upsampler for Vision Foundation Models (oral)

H. Huang, A. Chen, V. Havrylov, A. Geiger and D. Zhang

International Conference on Computer Vision (ICCV), 2025

Abstract: Vision foundation models (VFMs) such as DINOv2 and CLIP have achieved impressive results on various downstream tasks, but their limited feature resolution hampers performance in applications requiring pixel-level understanding. Feature upsampling offers a promising direction to address this challenge. In this work, we identify two critical factors for enhancing feature upsampling: the upsampler architecture and the training objective. For the upsampler architecture, we introduce a coordinate-based cross-attention transformer that integrates the high-resolution images with coordinates and low-resolution VFM features to generate sharp, high-quality features. For the training objective, we propose constructing high-resolution pseudo-groundtruth features by leveraging class-agnostic masks and self-distillation. Our approach effectively captures fine-grained details and adapts flexibly to various input and feature resolutions. Through experiments, we demonstrate that our approach significantly outperforms existing feature upsampling techniques across various downstream tasks.

Latex Bibtex Citation:

@inproceedings{Huang2025ICCV,

author = {Haiwen Huang and Anpei Chen and Volodymyr Havrylov and Andreas Geiger and Dan Zhang},

title = {LoftUp: Learning a Coordinate-Based Feature Upsampler for Vision Foundation Models},

booktitle = {International Conference on Computer Vision (ICCV)},

year = {2025}

}

MoGA: 3D Generative Avatar Prior for Monocular Gaussian Avatar Reconstruction (highlight)

Z. Dong, L. Duan, J. Song, M. Black and A. Geiger

International Conference on Computer Vision (ICCV), 2025

Abstract: We present MoGA, a novel method to reconstruct high-fidelity 3D Gaussian avatars from a single-view image. The main challenge lies in inferring unseen appearance and geometric details while ensuring 3D consistency and realism. Most previous methods rely on 2D diffusion models to synthesize unseen views; however, these generated views are sparse and inconsistent, resulting in unrealistic 3D artifacts and blurred appearance. To address these limitations, we leverage a generative avatar model that can generate diverse 3D avatars by sampling deformed Gaussians from a learned prior distribution. Due to the limited amount of 3D training data, such a 3D model alone cannot capture all image details of unseen identities. Consequently, we integrate it as a prior, ensuring 3D consistency by projecting input images into its latent space and enforcing additional 3D appearance and geometric constraints. Our novel approach formulates Gaussian avatar creation as model inversion by fitting the generative avatar to synthetic views from 2D diffusion models. The generative avatar provides a meaningful initialization for model fitting, enforces 3D regularization, and helps in refining pose estimation. Experiments show that our method surpasses state-of-the-art techniques and generalizes well to real-world scenarios. Our Gaussian avatars are also inherently animatable.

Latex Bibtex Citation:

@inproceedings{Dong2025ICCV,

author = {Zijian Dong and Longteng Duan and Jie Song and Michael J. Black and Andreas Geiger},

title = {MoGA: 3D Generative Avatar Prior for Monocular Gaussian Avatar Reconstruction},

booktitle = {International Conference on Computer Vision (ICCV)},

year = {2025}

}

CaRL: Learning Scalable Planning Policies with Simple Rewards

B. Jaeger, D. Dauner, J. Beißwenger, S. Gerstenecker, K. Chitta and A. Geiger

Conference on Robot Learning (CoRL), 2025

Abstract: We investigate reinforcement learning (RL) for privileged planning in autonomous driving. State-of-the-art approaches for this task are rule-based, but these methods do not scale to the long tail. RL, on the other hand, is scalable and, unlike imitation learning, does not suffer from compounding errors. Contemporary RL approaches for driving use complex shaped rewards that sum multiple individual rewards, e.g., progress, position, or orientation rewards. We show that PPO fails to optimize a popular version of these rewards when the mini-batch size is increased, which limits the scalability of these approaches. Instead, we propose a new reward design based primarily on optimizing a single intuitive reward term: route completion. Infractions are penalized by terminating the episode or multiplicatively reducing route completion. We find that PPO scales well with higher mini-batch sizes when trained with our simple reward, even improving performance. Training with large mini-batch sizes enables efficient scaling via distributed data parallelism. We scale PPO to 300M samples in CARLA and 500M samples in nuPlan with a single 8-GPU node. The resulting model achieves 64 DS on the CARLA longest6 v2 benchmark, outperforming other RL methods with more complex rewards by a large margin. Requiring only minimal adaptations from its use in CARLA, the same method is the best learning-based approach on nuPlan. It scores 91.3 in non-reactive and 90.6 in reactive traffic on the Val14 benchmark while being an order of magnitude faster than prior work.
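
The route-completion reward can be illustrated with a small Python sketch. This is an assumption-laden illustration, not the authors' code: the per-step reward is the increase in route completion, minor infractions scale that increase by multiplicative factors, and a terminal infraction ends the episode. The `StepInfo` fields, the penalty factors, and the zero reward on termination are hypothetical choices.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class StepInfo:
    route_completion: float  # fraction of the route completed so far, in [0, 1]
    # Hypothetical multiplicative factors in (0, 1] for minor infractions this step.
    penalty_factors: Tuple[float, ...] = ()
    # Hypothetical flag for infractions that terminate the episode (e.g. collisions).
    terminal_infraction: bool = False


def route_completion_reward(prev_completion: float, info: StepInfo) -> Tuple[float, bool]:
    """Return (reward, done) for one environment step."""
    if info.terminal_infraction:
        # Terminating forfeits all route completion that would have followed.
        return 0.0, True
    progress = max(info.route_completion - prev_completion, 0.0)
    factor = 1.0
    for f in info.penalty_factors:
        factor *= f  # minor infractions shrink the progress reward multiplicatively
    return progress * factor, False
```

Because the return is dominated by a single bounded term, its scale stays comparable across scenarios, which is one plausible reason such a reward is easier to optimize than a sum of shaped terms.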

Latex Bibtex Citation:

@inproceedings{Jaeger2025CORL,

author = {Bernhard Jaeger and Daniel Dauner and Jens Beißwenger and Simon Gerstenecker and Kashyap Chitta and Andreas Geiger},

title = {CaRL: Learning Scalable Planning Policies with Simple Rewards},

booktitle = {Conference on Robot Learning (CoRL)},

year = {2025}

}

Pseudo-Simulation for Autonomous Driving

W. Cao, M. Hallgarten, T. Li, D. Dauner, X. Gu, C. Wang, Y. Miron, M. Aiello, H. Li, I. Gilitschenski, et al.

Conference on Robot Learning (CoRL), 2025

Abstract: Existing evaluation paradigms for Autonomous Vehicles (AVs) face critical limitations. Real-world evaluation is often challenging due to safety concerns and a lack of reproducibility, whereas closed-loop simulation can face insufficient realism or high computational costs. Open-loop evaluation, while being efficient and data-driven, relies on metrics that generally overlook compounding errors. In this paper, we propose pseudo-simulation, a novel paradigm that addresses these limitations. Pseudo-simulation operates on real datasets, similar to open-loop evaluation, but augments them with synthetic observations generated prior to evaluation using 3D Gaussian Splatting. Our key idea is to approximate potential future states the AV might encounter by generating a diverse set of observations that vary in position, heading, and speed. Our method then assigns a higher importance to synthetic observations that best match the AV’s likely behavior using a novel proximity-based weighting scheme. This enables evaluating error recovery and the mitigation of causal confusion, as in closed-loop benchmarks, without requiring sequential interactive simulation. We show that pseudo-simulation is better correlated with closed-loop simulations (R² = 0.8) than the best existing open-loop approach (R² = 0.7). We also establish a public leaderboard for the community to benchmark new methodologies with pseudo-simulation.
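
One way to picture a proximity-based weighting is a Gaussian kernel over the difference between each synthetic observation's start state and the state the AV is predicted to reach. The NumPy sketch below assumes states of the form (x, y, heading, speed), hand-picked per-dimension bandwidths, and a weighted average of per-observation scores. The kernel form, bandwidths, and function names are hypothetical and not taken from the paper.

```python
import numpy as np


def proximity_weights(candidates, predicted, bandwidths):
    """Hypothetical Gaussian-kernel weights for synthetic observations.

    candidates: (n, 4) start states (x, y, heading, speed) of synthetic observations.
    predicted: (4,) state the AV is predicted to reach.
    bandwidths: (4,) per-dimension scales (assumed hyperparameters).
    """
    diff = np.asarray(candidates, dtype=float) - np.asarray(predicted, dtype=float)
    # Wrap heading differences into [-pi, pi) so that +179 deg and -179 deg count as close.
    diff[:, 2] = (diff[:, 2] + np.pi) % (2 * np.pi) - np.pi
    sq = np.sum((diff / np.asarray(bandwidths, dtype=float)) ** 2, axis=1)
    w = np.exp(-0.5 * sq)
    return w / w.sum()  # normalize so the weights sum to 1


def weighted_score(scores, weights):
    """Aggregate per-observation driving scores with the proximity weights."""
    return float(np.dot(weights, scores))
```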

Latex Bibtex Citation:

@inproceedings{Cao2025CORL,

author = {Wei Cao and Marcel Hallgarten and Tianyu Li and Daniel Dauner and Xunjiang Gu and Caojun Wang and Yakov Miron and Marco Aiello and Hongyang Li and Igor Gilitschenski and Boris Ivanovic and Marco Pavone and Andreas Geiger and Kashyap Chitta},

title = {Pseudo-Simulation for Autonomous Driving},

booktitle = {Conference on Robot Learning (CoRL)},

year = {2025}

}

UrbanGen: Urban Generation with Compositional and Controllable Neural Fields

Y. Yang, Y. Shen, Y. Wang, A. Geiger and Y. Liao

Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2025

Abstract: Despite the rapid progress in generative radiance fields, most existing methods focus on object-centric applications and are not able to generate complex urban scenes. In this paper, we propose UrbanGen, a solution for the challenging task of generating urban radiance fields with photorealistic rendering, accurate geometry, high controllability, and diverse city styles. Our key idea is to leverage a coarse 3D panoptic prior, represented by a semantic voxel grid for stuff and bounding boxes for countable objects, to condition a compositional generative radiance field. This panoptic prior simplifies the task of learning complex urban geometry, enables disentanglement of stuff and objects, and provides versatile control over both. Moreover, by combining semantic and geometry losses with adversarial training, our method faithfully adheres to the input conditions, allowing for joint rendering of semantic and depth maps alongside RGB images. In addition, we collect a unified dataset with images and their panoptic priors in the same format from three diverse real-world datasets: KITTI-360, nuScenes, and Waymo, and train a city style-aware model on this data. Our systematic study shows that UrbanGen outperforms state-of-the-art generative radiance field baselines in terms of image fidelity and geometry accuracy for urban scene generation. Furthermore, UrbanGen brings a new set of controllability features, including large camera movements, stuff editing, and city style control.

Latex Bibtex Citation:

@article{Yang2025PAMI,

author = {Yuanbo Yang and Yujun Shen and Yue Wang and Andreas Geiger and Yiyi Liao},

title = {UrbanGen: Urban Generation with Compositional and Controllable Neural Fields},

journal = {Transactions on Pattern Analysis and Machine Intelligence (TPAMI)},

year = {2025}

}

Scholar Inbox: Personalized Paper Recommendations for Scientists

M. Flicke, G. Angrabeit, M. Iyengar, V. Protsenko, I. Shakun, J. Cicvaric, B. Kargi, H. He, L. Schuler, L. Scholz, et al.

Annual Meeting of the Association for Computational Linguistics (ACL), System Demonstrations, 2025

Abstract: Scholar Inbox is a new open-access platform designed to address the challenges researchers face in staying current with the rapidly expanding volume of scientific literature. We provide personalized recommendations, continuous updates from open-access archives (arXiv, bioRxiv, etc.), visual paper summaries, semantic search, and a range of tools to streamline research workflows and promote open research access. The platform's personalized recommendation system is trained on user ratings, ensuring that recommendations are tailored to individual researchers' interests. To further enhance the user experience, Scholar Inbox also offers a map of science that provides an overview of research across domains, enabling users to easily explore specific topics. We use this map to address the cold start problem common in recommender systems, as well as an active learning strategy that iteratively prompts users to rate a selection of papers, allowing the system to learn user preferences quickly. We evaluate the quality of our recommendation system on a novel dataset of 800k user ratings, which we make publicly available, as well as via an extensive user study. https://www.scholar-inbox.com/
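
As a rough illustration of learning from ratings combined with active learning, the sketch below fits a per-user logistic-regression model on paper embeddings and then asks the user to rate the unrated papers whose predicted relevance is closest to 0.5 (uncertainty sampling). The embeddings, the linear model, and the uncertainty criterion are assumptions made for illustration; the abstract does not specify Scholar Inbox's model or selection rule.

```python
import numpy as np


def fit_user_model(X, y, lr=0.5, steps=500, l2=1e-3):
    """Logistic regression by gradient descent.

    X: (n, d) embeddings of rated papers (hypothetical features).
    y: (n,) ratings, 1 = relevant, 0 = not relevant.
    """
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))
        grad = p - y  # derivative of the log-loss w.r.t. each logit
        w -= lr * (X.T @ grad / n + l2 * w)
        b -= lr * grad.mean()
    return w, b


def predict(X, w, b):
    """Predicted probability that each paper is relevant to the user."""
    return 1.0 / (1.0 + np.exp(-(X @ w + b)))


def query_for_ratings(X_unrated, w, b, m):
    """Uncertainty sampling: indices of the m papers the model is least sure about."""
    p = predict(X_unrated, w, b)
    return np.argsort(np.abs(p - 0.5))[:m]
```

Each round of ratings collected this way would be appended to `X` and `y` and the model refit, so a few targeted questions can move the model more than ratings on papers it already classifies confidently.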

Latex Bibtex Citation:

@inproceedings{Flicke2025ACLSDT,

author = {Markus Flicke and Glenn Angrabeit and Madhav Iyengar and Vitalii Protsenko and Illia Shakun and Jovan Cicvaric and Bora Kargi and Haoyu He and Lukas Schuler and Lewin Scholz and Kavyanjali Agnihotri and Yong Cao and Andreas Geiger},

title = {Scholar Inbox: Personalized Paper Recommendations for Scientists},

booktitle = {Annual Meeting of the Association for Computational Linguistics (ACL), System Demonstrations},

year = {2025}

}

Relightable Full-Body Gaussian Codec Avatars

S. Wang, T. Simon, I. Santesteban, T. Bagautdinov, J. Li, V. Agrawal, F. Prada, S. Yu, P. Nalbone, M. Gramlich, et al.

International Conference on Computer Graphics and Interactive Techniques (SIGGRAPH), 2025

Abstract: We propose Relightable Full-Body Gaussian Codec Avatars, a new approach for modeling relightable full-body avatars with fine-grained details including face and hands. The unique challenge for relighting full-body avatars lies in the large deformations caused by body articulation and the resulting impact on appearance caused by light transport. Changes in body pose can dramatically change the orientation of body surfaces with respect to lights, resulting in both local appearance changes due to changes in local light transport functions, as well as non-local changes due to occlusion between body parts. To address this, we decompose the light transport into local and non-local effects. Local appearance changes are modeled using learnable zonal harmonics for diffuse radiance transfer. Unlike spherical harmonics, zonal harmonics are highly efficient to rotate under articulation. This allows us to learn diffuse radiance transfer in a local coordinate frame, which disentangles the local radiance transfer from the articulation of the body. To account for non-local appearance changes, we introduce a shadow network that predicts shadows given precomputed incoming irradiance on a base mesh. This facilitates the learning of non-local shadowing between the body parts. Finally, we use a deferred shading approach to model specular radiance transfer and better capture reflections and highlights such as eye glints. We demonstrate that our approach successfully models both the local and non-local light transport required for relightable full-body avatars, with a superior generalization ability under novel illumination conditions and unseen poses.
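
The rotation property of zonal harmonics can be checked numerically. A zonal harmonic lobe is symmetric about a single axis, so its value in direction ω depends only on the cosine between ω and that axis; rotating the lobe therefore only requires rotating the axis, with no per-band rotation matrices as for general spherical harmonics. The NumPy sketch below evaluates a lobe as Σ_l z_l sqrt((2l+1)/(4π)) P_l(a·ω) using `numpy.polynomial.legendre.legval`. The coefficients and the rotation are arbitrary illustrative values, and `eval_zh` and `rotation_z` are hypothetical helper names, not the paper's learned model.

```python
import numpy as np
from numpy.polynomial import legendre


def eval_zh(coeffs, axis, directions):
    """Evaluate sum_l z_l * Y_l^0 about `axis` for unit `directions` of shape (n, 3)."""
    coeffs = np.asarray(coeffs, dtype=float)
    l = np.arange(len(coeffs))
    # Y_l^0 = sqrt((2l + 1) / (4 pi)) * P_l(cos theta); fold the normalization into the coefficients.
    c = coeffs * np.sqrt((2 * l + 1) / (4 * np.pi))
    cos_theta = directions @ (axis / np.linalg.norm(axis))
    return legendre.legval(cos_theta, c)


def rotation_z(angle):
    """Rotation matrix about the z-axis."""
    ca, sa = np.cos(angle), np.sin(angle)
    return np.array([[ca, -sa, 0.0], [sa, ca, 0.0], [0.0, 0.0, 1.0]])
```

Evaluating with a rotated axis `R @ axis` at rotated directions gives the same values as the unrotated lobe at the original directions, which is why a local-frame zonal representation can follow body articulation at the cost of a single 3×3 rotation per lobe.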

Latex Bibtex Citation:

@inproceedings{Wang2025SIGGRAPH,

author = {Shaofei Wang and Tomas Simon and Igor Santesteban and Timur Bagautdinov and Junxuan Li and Vasu Agrawal and Fabian Prada and Shoou-I Yu and Pace Nalbone and Matt Gramlich and Roman Lubachersky and Chenglei Wu and Javier Romero and Jason Saragih and Michael Zollhoefer and Andreas Geiger and Siyu Tang and Shunsuke Saito},

title = {Relightable Full-Body Gaussian Codec Avatars},

booktitle = {International Conference on Computer Graphics and Interactive Techniques (SIGGRAPH)},

year = {2025}

}

Volumetric Surfaces: Representing Fuzzy Geometries with Layered Meshes

S. Esposito, A. Chen, C. Reiser, S. Bulò, L. Porzi, K. Schwarz, C. Richardt, M. Zollhöfer, P. Kontschieder and A. Geiger

Conference on Computer Vision and Pattern Recognition (CVPR), 2025

Abstract: High-quality view synthesis relies on volume rendering, splatting, or surface rendering. While surface rendering is typically the fastest, it struggles to accurately model fuzzy geometry like hair. In turn, alpha-blending techniques excel at representing fuzzy materials but require an unbounded number of samples per ray (P1). Further overheads are induced by empty space skipping in volume rendering (P2) and sorting input primitives in splatting (P3). We present a novel representation for real-time view synthesis where the (P1) number of sampling locations is small and bounded, (P2) sampling locations are efficiently found via rasterization, and (P3) rendering is sorting-free. We achieve this by representing objects as semi-transparent multi-layer meshes rendered in a fixed order. First, we model surface layers as signed distance function (SDF) shells with optimal spacing learned during training. Then, we bake them as meshes and fit UV textures. Unlike single-surface methods, our multi-layer representation effectively models fuzzy objects. In contrast to volume and splatting-based methods, our approach enables real-time rendering on low-power laptops and smartphones.
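
Because the number of layers is fixed and their order is known in advance, shading a pixel reduces to compositing a small, bounded set of samples without any sorting. The sketch below shows standard front-to-back alpha compositing over K layer samples. It assumes the fixed layer order is front to back along the viewing ray and that per-layer colors and opacities have already been fetched from the baked UV textures via rasterization; the function name `composite_layers` is hypothetical.

```python
import numpy as np


def composite_layers(colors, alphas):
    """Front-to-back alpha compositing of K layers in a fixed order.

    colors: (K, 3) RGB of each layer's sample; alphas: (K,) opacities in [0, 1].
    Returns the composited RGB and the remaining transmittance.
    """
    colors = np.asarray(colors, dtype=float)
    alphas = np.asarray(alphas, dtype=float)
    # Transmittance before layer i: product of (1 - alpha_j) over earlier layers j < i.
    trans = np.concatenate([[1.0], np.cumprod(1.0 - alphas)[:-1]])
    weights = alphas * trans
    rgb = weights @ colors
    return rgb, float(np.prod(1.0 - alphas))
```

A background color `bg` can be added as `rgb + t * bg` using the returned transmittance `t`. The loop length is bounded by K regardless of the scene, which is the property the abstract labels (P1).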

Latex Bibtex Citation:

@inproceedings{Esposito2025CVPR,

author = {Stefano Esposito and Anpei Chen and Christian Reiser and Samuel Rota Bulò and Lorenzo Porzi and Katja Schwarz and Christian Richardt and Michael Zollhöfer and Peter Kontschieder and Andreas Geiger},

title = {Volumetric Surfaces: Representing Fuzzy Geometries with Layered Meshes},

booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2025}

}

Prometheus: 3D-Aware Latent Diffusion Models for Feed-Forward Text-to-3D Scene Generation

Y. Yang, J. Shao, X. Li, Y. Shen, A. Geiger and Y. Liao

Conference on Computer Vision and Pattern Recognition (CVPR), 2025

Abstract: In this work, we introduce Prometheus, a 3D-aware latent diffusion model for text-to-3D generation at both object and scene levels in seconds. We formulate 3D scene generation as multi-view, feed-forward, pixel-aligned 3D Gaussian generation within the latent diffusion paradigm. To ensure generalizability, we build our model upon a pre-trained text-to-image generation model with only minimal adjustments, and further train it using a large number of images from both single-view and multi-view datasets. Furthermore, we introduce an RGB-D latent space into 3D Gaussian generation to disentangle appearance and geometry information, enabling efficient feed-forward generation of 3D Gaussians with better fidelity and geometry. Extensive experimental results demonstrate the effectiveness of our method in both feed-forward 3D Gaussian reconstruction and text-to-3D generation. Project page: https://freemty.github.io/project-prometheus/.

Latex Bibtex Citation:

@inproceedings{Yang2025CVPR,

author = {Yuanbo Yang and Jiahao Shao and Xinyang Li and Yujun Shen and Andreas Geiger and Yiyi Liao},

title = {Prometheus: 3D-Aware Latent Diffusion Models for Feed-Forward Text-to-3D Scene Generation},

booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2025}

}

GenFusion: Closing the Loop between Reconstruction and Generation via Videos

S. Wu, C. Xu, B. Huang, A. Geiger and A. Chen

Conference on Computer Vision and Pattern Recognition (CVPR), 2025

Abstract: Recently, 3D reconstruction and generation have demonstrated impressive novel view synthesis results, achieving high fidelity and efficiency. However, a notable conditioning gap exists between these two fields: scalable 3D scene reconstruction often requires densely captured views, whereas 3D generation typically relies on a single input view or none at all, which significantly limits their applications. We found that the source of this phenomenon lies in the misalignment between 3D constraints and generative priors. To address this problem, we propose a reconstruction-driven video diffusion model that learns to condition video frames on artifact-prone RGB-D renderings. Moreover, we propose a cyclical fusion pipeline that iteratively adds restoration frames from the generative model to the training set, enabling progressive expansion and addressing the viewpoint saturation limitations seen in previous reconstruction and generation pipelines. Our evaluation, including view synthesis from sparse-view and masked inputs, validates the effectiveness of our approach. More details at https://genfusion.sibowu.com.

Latex Bibtex Citation:

@inproceedings{Wu2025CVPR,

author = {Sibo Wu and Congrong Xu and Binbin Huang and Andreas Geiger and Anpei Chen},

title = {GenFusion: Closing the Loop between Reconstruction and Generation via Videos},

booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2025}

}

DepthSplat: Connecting Gaussian Splatting and Depth

H. Xu, S. Peng, F. Wang, H. Blum, D. Barath, A. Geiger and M. Pollefeys

Conference on Computer Vision and Pattern Recognition (CVPR), 2025

Abstract: Gaussian splatting and single-view depth estimation are typically studied in isolation. In this paper, we present DepthSplat to connect Gaussian splatting and depth estimation and study their interactions. More specifically, we first contribute a robust multi-view depth model by leveraging pre-trained monocular depth features, leading to high-quality feed-forward 3D Gaussian splatting reconstructions. We also show that Gaussian splatting can serve as an unsupervised pre-training objective for learning powerful depth models from large-scale multi-view posed datasets. We validate the synergy between Gaussian splatting and depth estimation through extensive ablation and cross-task transfer experiments. Our DepthSplat achieves state-of-the-art performance on ScanNet, RealEstate10K and DL3DV datasets in terms of both depth estimation and novel view synthesis, demonstrating the mutual benefits of connecting both tasks. In addition, DepthSplat enables feed-forward reconstruction from 12 input views (512×960 resolution) in 0.6 seconds.

Latex Bibtex Citation:

@inproceedings{Xu2025CVPR,

author = {Haofei Xu and Songyou Peng and Fangjinhua Wang and Hermann Blum and Daniel Barath and Andreas Geiger and Marc Pollefeys},

title = {DepthSplat: Connecting Gaussian Splatting and Depth},

booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2025}

}

EVolSplat: Efficient Volume-based Gaussian Splatting for Urban View Synthesis

S. Miao, J. Huang, D. Bai, X. Yan, H. Zhou, Y. Wang, B. Liu, A. Geiger and Y. Liao

Conference on Computer Vision and Pattern Recognition (CVPR), 2025

Abstract: Novel view synthesis of urban scenes is essential for autonomous driving-related applications. Existing NeRF and 3DGS-based methods show promising results in achieving photorealistic renderings but require slow, per-scene optimization. We introduce EVolSplat, an efficient 3D Gaussian Splatting model for urban scenes that works in a feed-forward manner. Unlike existing feed-forward, pixel-aligned 3DGS methods, which often suffer from issues like multi-view inconsistencies and duplicated content, our approach predicts 3D Gaussians across multiple frames within a unified volume using a 3D convolutional network. This is achieved by initializing 3D Gaussians with noisy depth predictions, and then refining their geometric properties in 3D space and predicting color based on 2D textures. Our model also handles distant views and the sky with a flexible hemisphere background model. This enables us to perform fast, feed-forward reconstruction while achieving real-time rendering. Experimental evaluations on the KITTI-360 and Waymo datasets show that our method achieves state-of-the-art quality compared to existing feed-forward 3DGS- and NeRF-based methods.

Latex Bibtex Citation:

@inproceedings{Miao2025CVPR,

author = {Sheng Miao and Jiaxin Huang and Dongfeng Bai and Xu Yan and Hongyu Zhou and Yue Wang and Bingbing Liu and Andreas Geiger and Yiyi Liao},

title = {EVolSplat: Efficient Volume-based Gaussian Splatting for Urban View Synthesis},

booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2025}

}

UrbanCAD: Towards Highly Controllable and Photorealistic 3D Vehicles for Urban Scene Simulation

Y. Lu, Y. Cai, S. Zhang, H. Zhou, H. Hu, H. Yu, A. Geiger and Y. Liao

Conference on Computer Vision and Pattern Recognition (CVPR), 2025

Abstract: Photorealistic 3D vehicle models with high controllability are essential for autonomous driving simulation and data augmentation. While handcrafted CAD models provide flexible controllability, free CAD libraries often lack the high-quality materials necessary for photorealistic rendering. Conversely, reconstructed 3D models offer high-fidelity rendering but lack controllability. In this work, we introduce UrbanCAD, a framework that generates highly controllable and photorealistic 3D vehicle digital twins from a single urban image, leveraging a large collection of free 3D CAD models and handcrafted materials. To achieve this, we propose a novel retrieval-optimization pipeline that adapts to observational data while preserving fine-grained expert-designed priors for both geometry and material. This enables realistic 360-degree rendering of vehicles, background insertion, material transfer, relighting, and component manipulation. Furthermore, given multi-view background perspective and fisheye images, we approximate environment lighting using the fisheye images and reconstruct the background with 3DGS, enabling the photorealistic insertion of optimized CAD models into rendered novel view backgrounds. Experimental results demonstrate that UrbanCAD outperforms baselines in terms of photorealism. Additionally, we show that various perception models maintain their accuracy when evaluated on UrbanCAD with in-distribution configurations but degrade when applied to realistic out-of-distribution data generated by our method. This suggests that UrbanCAD is a significant advancement in creating photorealistic, safety-critical driving scenarios for downstream applications.

Latex Bibtex Citation:

@inproceedings{Lu2025CVPR,

author = {Yichong Lu and Yichi Cai and Shangzhan Zhang and Hongyu Zhou and Haoji Hu and Huimin Yu and Andreas Geiger and Yiyi Liao},

title = {UrbanCAD: Towards Highly Controllable and Photorealistic 3D Vehicles for Urban Scene Simulation},

booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2025}

}

EMPERROR: A Flexible Generative Perception Error Model for Probing Self-Driving Planners

N. Hanselmann, S. Doll, M. Cordts, H. Lensch and A. Geiger

Robotics and Automation Letters (RA-L), 2025

Abstract: To handle the complexities of real-world traffic, learning planners for self-driving from data is a promising direction. While recent approaches have shown great progress, they typically assume a setting in which the ground-truth world state is available as input. However, when deployed, planning needs to be robust to the long tail of errors incurred by a noisy perception system, which is often neglected in evaluation. To address this, previous work has proposed drawing adversarial samples from a perception error model (PEM) mimicking the noise characteristics of a target object detector. However, these methods use simple PEMs that fail to accurately capture all failure modes of detection. In this paper, we present EMPERROR, a novel transformer-based generative PEM, apply it to stress-test an imitation learning (IL)-based planner, and show that it imitates modern detectors more faithfully than previous work. Furthermore, it is able to produce realistic noisy inputs that increase the planner's collision rate by up to 85%, demonstrating its utility as a valuable tool for a more complete evaluation of self-driving planners.

Latex Bibtex Citation:

@article{Hanselmann2025RAL,

author = {Niklas Hanselmann and Simon Doll and Marius Cordts and Hendrik P. A. Lensch and Andreas Geiger},

title = {EMPERROR: A Flexible Generative Perception Error Model for Probing Self-Driving Planners},

journal = {Robotics and Automation Letters (RA-L)},

year = {2025}

}

Is Single-View Mesh Reconstruction Ready for Robotics?

F. Nolte, A. Geiger, B. Schölkopf and I. Posner

Arxiv, 2025

Abstract: This paper evaluates single-view mesh reconstruction models for their potential in enabling instant digital twin creation for real-time planning and dynamics prediction using physics simulators for robotic manipulation. Recent single-view 3D reconstruction advances offer a promising avenue toward an automated real-to-sim pipeline: directly mapping a single observation of a scene into a simulation instance by reconstructing scene objects as individual, complete, and physically plausible 3D meshes. However, their suitability for physics simulations and robotics applications under immediacy, physical fidelity, and simulation readiness remains underexplored. We establish robotics-specific benchmarking criteria for 3D reconstruction, including handling typical inputs, collision-free and stable geometry, robustness to occlusions, and meeting computational constraints. Our empirical evaluation using realistic robotics datasets shows that despite success on computer vision benchmarks, existing approaches fail to meet robotics-specific requirements. We quantitatively examine limitations of single-view reconstruction for practical robotics implementation, in contrast to prior work that focuses on multi-view approaches. Our findings highlight critical gaps between computer vision advances and robotics needs, guiding future research at this intersection.

Latex Bibtex Citation:

@article{Nolte2025ARXIV,

author = {Frederik Nolte and Andreas Geiger and Bernhard Schölkopf and Ingmar Posner},

title = {Is Single-View Mesh Reconstruction Ready for Robotics?},

journal = {Arxiv},

year = {2025}

}

Artificial Kuramoto Oscillatory Neurons (oral)

T. Miyato, S. Löwe, A. Geiger and M. Welling

International Conference on Learning Representations (ICLR), 2025

Abstract: It has long been known in both neuroscience and AI that "binding" between neurons leads to a form of competitive learning where representations are compressed in order to represent more abstract concepts in deeper layers of the network. More recently, it was also hypothesized that dynamic (spatiotemporal) representations play an important role in both neuroscience and AI. Building on these ideas, we introduce Artificial Kuramoto Oscillatory Neurons (AKOrN) as a dynamical alternative to threshold units, which can be combined with arbitrary connectivity designs such as fully connected, convolutional, or attentive mechanisms. Our generalized Kuramoto updates bind neurons together through their synchronization dynamics. We show that this idea provides performance improvements across a wide spectrum of tasks such as unsupervised object discovery, adversarial robustness, calibrated uncertainty quantification, and reasoning. We believe that these empirical results show the importance of rethinking our assumptions at the most basic neuronal level of neural representation, and in particular show the importance of dynamical representations.

Latex Bibtex Citation:

@inproceedings{Miyato2025ICLR,

author = {Takeru Miyato and Sindy Löwe and Andreas Geiger and Max Welling},

title = {Artificial Kuramoto Oscillatory Neurons},

booktitle = {International Conference on Learning Representations (ICLR)},

year = {2025}

}

Unimotion: Unifying 3D Human Motion Synthesis and Understanding (oral)

C. Li, J. Chibane, Y. He, N. Pearl, A. Geiger and G. Pons-Moll

International Conference on 3D Vision (3DV), 2025

Abstract: We introduce Unimotion, the first unified multi-task human motion model capable of both flexible motion control and frame-level motion understanding. While existing works control avatar motion with global text conditioning or with fine-grained per-frame scripts, none can do both at once. In addition, none of the existing works can output frame-level text paired with the generated poses. In contrast, Unimotion allows users to control motion with global text, local frame-level text, or both at once, providing more flexible control. Importantly, Unimotion is the first model which by design outputs local text paired with the generated poses, allowing users to know what motion happens and when, which is necessary for a wide range of applications. We show that Unimotion opens up new applications: (1) hierarchical control, allowing users to specify motion at different levels of detail; (2) obtaining motion text descriptions for existing MoCap data or YouTube videos; and (3) editability, i.e., generating motion from text and editing the motion via text edits. Moreover, Unimotion attains state-of-the-art results for the frame-level text-to-motion task on the established HumanML3D dataset.

Latex Bibtex Citation:

@inproceedings{Li2025THREEDV,

author = {Chuqiao Li and Julian Chibane and Yannan He and Naama Pearl and Andreas Geiger and Gerard Pons-Moll},

title = {Unimotion: Unifying 3D Human Motion Synthesis and Understanding},

booktitle = {International Conference on 3D Vision (3DV)},

year = {2025}

}

2024

Renovating Names in Open-Vocabulary Segmentation Benchmarks

H. Huang, S. Peng, D. Zhang and A. Geiger

Advances in Neural Information Processing Systems (NeurIPS), 2024

Abstract: Names are essential to both human cognition and vision-language models. Open-vocabulary models utilize class names as text prompts to generalize to categories unseen during training. However, the precision of these names is often overlooked in existing datasets. In this paper, we address this underexplored problem by presenting a framework for "renovating" names in open-vocabulary segmentation benchmarks (RENOVATE). Our framework features a renaming model that enhances the quality of names for each visual segment. Through experiments, we demonstrate that our renovated names help train stronger open-vocabulary models with up to 15% relative improvement and significantly enhance training efficiency with improved data quality. We also show that our renovated names improve evaluation by better measuring misclassification and enabling fine-grained model analysis. We will provide our code and relabelings for several popular segmentation datasets (MS COCO, ADE20K, Cityscapes) to the research community.

Latex Bibtex Citation:

@inproceedings{Huang2024NEURIPS,

author = {Haiwen Huang and Songyou Peng and Dan Zhang and Andreas Geiger},

title = {Renovating Names in Open-Vocabulary Segmentation Benchmarks},

booktitle = {Advances in Neural Information Processing Systems (NeurIPS)},

year = {2024}

}

Vista: A Generalizable Driving World Model with High Fidelity and Versatile Controllability

S. Gao, J. Yang, L. Chen, K. Chitta, Y. Qiu, A. Geiger, J. Zhang and H. Li

Advances in Neural Information Processing Systems (NeurIPS), 2024

Abstract: World models can foresee the outcomes of different actions, which is of paramount importance for autonomous driving. Nevertheless, existing driving world models still have limitations in generalization to unseen environments, prediction fidelity of critical details, and action controllability for flexible application. In this paper, we present Vista, a generalizable driving world model with high fidelity and versatile controllability. Based on a systematic diagnosis of existing methods, we introduce several key ingredients to address these limitations. To accurately predict real-world dynamics at high resolution, we propose two novel losses to promote the learning of moving instances and structural information. We also devise an effective latent replacement approach to inject historical frames as priors for coherent long-horizon rollouts. For action controllability, we incorporate a versatile set of controls from high-level intentions (command, goal point) to low-level maneuvers (trajectory, angle, and speed) through an efficient learning strategy. After large-scale training, the capabilities of Vista can seamlessly generalize to different scenarios. Extensive experiments on multiple datasets show that Vista outperforms the most advanced general-purpose video generator in over 70% of comparisons and surpasses the best-performing driving world model by 55% in FID and 27% in FVD. Moreover, for the first time, we utilize the capacity of Vista itself to establish a generalizable reward for real-world action evaluation without accessing the ground truth actions.

Latex Bibtex Citation:

@inproceedings{Gao2024NEURIPS,

author = {Shenyuan Gao and Jiazhi Yang and Li Chen and Kashyap Chitta and Yihang Qiu and Andreas Geiger and Jun Zhang and Hongyang Li},

title = {Vista: A Generalizable Driving World Model with High Fidelity and Versatile Controllability},

booktitle = {Advances in Neural Information Processing Systems (NeurIPS)},

year = {2024}

}

NAVSIM: Data-Driven Non-Reactive Autonomous Vehicle Simulation and Benchmarking

D. Dauner, M. Hallgarten, T. Li, X. Weng, Z. Huang, Z. Yang, H. Li, I. Gilitschenski, B. Ivanovic, M. Pavone, et al.

Advances in Neural Information Processing Systems (NeurIPS), 2024

Abstract: Benchmarking vision-based driving policies is challenging. On one hand, open-loop evaluation with real data is easy, but these results do not reflect closed-loop performance. On the other hand, closed-loop evaluation is possible in simulation, but is hard to scale due to its significant computational demands. Further, the simulators available today exhibit a large domain gap to real data. This has resulted in an inability to draw clear conclusions from the rapidly growing body of research on end-to-end autonomous driving. In this paper, we present NAVSIM, a middle ground between these evaluation paradigms, where we use large datasets in combination with a non-reactive simulator to enable large-scale real-world benchmarking. Specifically, we gather simulation-based metrics, such as progress and time to collision, by unrolling bird's eye view abstractions of the test scenes for a short simulation horizon. Our simulation is non-reactive, i.e., the evaluated policy and environment do not influence each other. As we demonstrate empirically, this decoupling allows open-loop metric computation while being better aligned with closed-loop evaluations than traditional displacement errors. NAVSIM enabled a new competition held at CVPR 2024, where 143 teams submitted 463 entries, resulting in several new insights. On a large set of challenging scenarios, we observe that simple methods with moderate compute requirements such as TransFuser can match recent large-scale end-to-end driving architectures such as UniAD. Our modular framework can potentially be extended with new datasets, data curation strategies, and metrics, and will be continually maintained to host future challenges.

Latex Bibtex Citation:

@inproceedings{Dauner2024NEURIPS,

author = {Daniel Dauner and Marcel Hallgarten and Tianyu Li and Xinshuo Weng and Zhiyu Huang and Zetong Yang and Hongyang Li and Igor Gilitschenski and Boris Ivanovic and Marco Pavone and Andreas Geiger and Kashyap Chitta},

title = {NAVSIM: Data-Driven Non-Reactive Autonomous Vehicle Simulation and Benchmarking},

booktitle = {Advances in Neural Information Processing Systems (NeurIPS)},

year = {2024}

}

Gaussian Opacity Fields: Efficient Adaptive Surface Reconstruction in Unbounded Scenes

Z. Yu, T. Sattler and A. Geiger

Conference and Exhibition on Computer Graphics and Interactive Techniques in Asia (SIGGRAPH ASIA), 2024

Abstract: Recently, 3D Gaussian Splatting (3DGS) has demonstrated impressive novel view synthesis results, while allowing the rendering of high-resolution images in real-time. However, leveraging 3D Gaussians for surface reconstruction poses significant challenges due to the explicit and disconnected nature of 3D Gaussians. In this work, we present Gaussian Opacity Fields (GOF), a novel approach for efficient, high-quality, and adaptive surface reconstruction in unbounded scenes. Our GOF is derived from ray-tracing-based volume rendering of 3D Gaussians, enabling direct geometry extraction from 3D Gaussians by identifying their level set, without resorting to Poisson reconstruction or TSDF fusion as in previous work. We approximate the surface normal of Gaussians as the normal of the ray-Gaussian intersection plane, enabling the application of regularization that significantly enhances geometry. Furthermore, we develop an efficient geometry extraction method utilizing Marching Tetrahedra, where the tetrahedral grids are induced from 3D Gaussians and thus adapt to the scene's complexity. Our evaluations reveal that GOF surpasses existing 3DGS-based methods in surface reconstruction and novel view synthesis. Further, it compares favorably to, or even outperforms, neural implicit methods in both quality and speed.

Latex Bibtex Citation:

@inproceedings{Yu2024SIGGRAPHASIA,

author = {Zehao Yu and Torsten Sattler and Andreas Geiger},

title = {Gaussian Opacity Fields: Efficient Adaptive Surface Reconstruction in Unbounded Scenes},

booktitle = {Conference and Exhibition on Computer Graphics and Interactive Techniques in Asia (SIGGRAPH ASIA)},

year = {2024}

}

An Invitation to Deep Reinforcement Learning

B. Jaeger and A. Geiger

Foundations and Trends in Optimization, 2024

Abstract: Training a deep neural network to maximize a target objective has become the standard recipe for successful machine learning over the last decade. These networks can be optimized with supervised learning if the target objective is differentiable. However, this is not the case for many interesting problems. Common objectives like intersection over union (IoU), bilingual evaluation understudy (BLEU) scores, or rewards cannot be optimized with supervised learning. A common workaround is to define differentiable surrogate losses, leading to suboptimal solutions with respect to the actual objective. Reinforcement learning (RL) has emerged as a promising alternative for optimizing deep neural networks to maximize non-differentiable objectives in recent years. Examples include aligning large language models via human feedback, code generation, object detection or control problems. This makes RL techniques relevant to the larger machine learning audience. The subject is, however, time-intensive to approach due to the large range of methods, as well as the often highly theoretical presentation. This monograph takes an approach different from classic RL textbooks. Rather than focusing on tabular problems, we introduce RL as a generalization of supervised learning, which we first apply to non-differentiable objectives and later to temporal problems. Assuming only basic knowledge of supervised learning, the reader will be able to understand state-of-the-art deep RL algorithms like proximal policy optimization (PPO) after reading this tutorial.

Latex Bibtex Citation:

@book{Jaeger2024,

author = {Bernhard Jaeger and Andreas Geiger},

title = {An Invitation to Deep Reinforcement Learning},

publisher = {Foundations and Trends in Optimization},

year = {2024}

}

HDT: Hierarchical Document Transformer

H. He, M. Flicke, J. Buchmann, I. Gurevych and A. Geiger

Conference on Language Modeling (COLM), 2024

Abstract: In this paper, we propose the Hierarchical Document Transformer (HDT), a novel sparse Transformer architecture tailored for structured hierarchical documents. Such documents are extremely important in numerous domains, including science, law or medicine. However, most existing solutions are inefficient and fail to make use of the structure inherent to documents. HDT exploits document structure by introducing auxiliary anchor tokens and redesigning the attention mechanism into a sparse multi-level hierarchy. This approach facilitates information exchange between tokens at different levels while maintaining sparsity, thereby enhancing computational and memory efficiency while exploiting the document structure as an inductive bias. We address the technical challenge of implementing HDT's sample-dependent hierarchical attention pattern by developing a novel sparse attention kernel that considers the hierarchical structure of documents. As demonstrated by our experiments, utilizing structural information present in documents leads to faster convergence, higher sample efficiency and better performance on downstream tasks.

Latex Bibtex Citation:

@inproceedings{He2024COLM,

author = {Haoyu He and Markus Flicke and Jan Buchmann and Iryna Gurevych and Andreas Geiger},

title = {HDT: Hierarchical Document Transformer},

booktitle = {Conference on Language Modeling (COLM)},

year = {2024}

}

LISO: Lidar-only Self-Supervised 3D Object Detection

S. Baur, F. Moosmann and A. Geiger

European Conference on Computer Vision (ECCV), 2024

Abstract: 3D object detection is one of the most important components in any self-driving stack, but current state-of-the-art (SOTA) lidar object detectors require costly and slow manual annotation of 3D bounding boxes to perform well. Recently, several methods have emerged to generate pseudo ground truth without human supervision. However, all of these methods have various drawbacks: some require sensor rigs with full camera coverage and accurate calibration, partly supplemented by an auxiliary optical flow engine; others require expensive high-precision localization to find objects that disappeared over multiple drives. We introduce a novel self-supervised method, which we call trajectory-regularized self-training, to train SOTA lidar object detection networks using only unlabeled sequences of lidar point clouds. It utilizes a SOTA self-supervised lidar scene flow network under the hood to generate, track, and iteratively refine pseudo ground truth. We demonstrate the effectiveness of our approach for multiple SOTA object detection networks across multiple real-world datasets. Code will be released.

Latex Bibtex Citation:

@inproceedings{Baur2024ECCV,

author = {Stefan Baur and Frank Moosmann and Andreas Geiger},

title = {LISO: Lidar-only Self-Supervised 3D Object Detection},

booktitle = {European Conference on Computer Vision (ECCV)},

year = {2024}

}

LaRa: Efficient Large-Baseline Radiance Fields

A. Chen, H. Xu, S. Esposito, S. Tang and A. Geiger

European Conference on Computer Vision (ECCV), 2024

Abstract: Radiance field methods have achieved photorealistic novel view synthesis and geometry reconstruction. However, they are mostly applied in per-scene optimization or small-baseline settings. While several recent works investigate feed-forward reconstruction with large baselines by utilizing transformers, they all operate with a standard global attention mechanism and hence ignore the local nature of 3D reconstruction. We propose a method that unifies local and global reasoning in transformer layers, resulting in improved quality and faster convergence. Our model represents scenes as Gaussian Volumes and combines this with an image encoder and Group Attention Layers for efficient feed-forward reconstruction. Experimental results show that our model, trained for two days on four GPUs, reconstructs 360° radiance fields with high fidelity and is robust to zero-shot and out-of-domain testing.

Latex Bibtex Citation:

@inproceedings{Chen2024ECCV,

author = {Anpei Chen and Haofei Xu and Stefano Esposito and Siyu Tang and Andreas Geiger},

title = {LaRa: Efficient Large-Baseline Radiance Fields},

booktitle = {European Conference on Computer Vision (ECCV)},

year = {2024}

}

SLEDGE: Synthesizing Driving Environments with Generative Models and Rule-Based Traffic

K. Chitta, D. Dauner and A. Geiger

European Conference on Computer Vision (ECCV), 2024

Abstract: SLEDGE is the first generative simulator for vehicle motion planning trained on real-world driving logs. Its core component is a learned model that is able to generate agent bounding boxes and lane graphs. The model's outputs serve as an initial state for rule-based traffic simulation. The unique properties of the entities to be generated for SLEDGE, such as their connectivity and variable count per scene, render the naive application of most modern generative models to this task non-trivial. Therefore, together with a systematic study of existing lane graph representations, we introduce a novel raster-to-vector autoencoder. It encodes agents and the lane graph into distinct channels in a rasterized latent map. This facilitates both lane-conditioned agent generation and combined generation of lanes and agents with a Diffusion Transformer. Using generated entities in SLEDGE enables greater control over the simulation, e.g. upsampling turns or increasing traffic density. Further, SLEDGE can support 500m long routes, a capability not found in existing data-driven simulators like nuPlan. It presents new challenges for planning algorithms, evidenced by failure rates of over 40% for PDM, the winner of the 2023 nuPlan challenge, when tested on hard routes and dense traffic generated by our model. Compared to nuPlan, SLEDGE requires 500x less storage to set up (<4 GB), making it a more accessible option and helping with democratizing future research in this field.

Latex Bibtex Citation:

@inproceedings{Chitta2024ECCV,

author = {Kashyap Chitta and Daniel Dauner and Andreas Geiger},

title = {SLEDGE: Synthesizing Driving Environments with Generative Models and Rule-Based Traffic},

booktitle = {European Conference on Computer Vision (ECCV)},

year = {2024}

}

Efficient Depth-Guided Urban View Synthesis

S. Miao, J. Huang, D. Bai, W. Qiu, B. Liu, A. Geiger and Y. Liao

European Conference on Computer Vision (ECCV), 2024

Abstract: Recent advances in implicit scene representation enable high-fidelity street view novel view synthesis. However, existing methods optimize a neural radiance field for each scene, relying heavily on dense training images and extensive computation resources. To mitigate this shortcoming, we introduce a new method called Efficient Depth-Guided Urban View Synthesis (EDUS) for fast feed-forward inference and efficient per-scene fine-tuning. Different from prior generalizable methods that infer geometry based on feature matching, EDUS leverages noisy predicted geometric priors as guidance to enable generalizable urban view synthesis from sparse input images. The geometric priors allow us to apply our generalizable model directly in the 3D space, gaining robustness across various sparsity levels. Through comprehensive experiments on the KITTI-360 and Waymo datasets, we demonstrate promising generalization abilities on novel street scenes. Moreover, our results indicate that EDUS achieves state-of-the-art performance in sparse view settings when combined with fast test-time optimization.

Latex Bibtex Citation:

@inproceedings{Miao2024ECCV,

author = {Sheng Miao and Jiaxin Huang and Dongfeng Bai and Weichao Qiu and Bingbing Liu and Andreas Geiger and Yiyi Liao},

title = {Efficient Depth-Guided Urban View Synthesis},

booktitle = {European Conference on Computer Vision (ECCV)},

year = {2024}

}

MVSplat: Efficient 3D Gaussian Splatting from Sparse Multi-View Images (oral)

Y. Chen, H. Xu, C. Zheng, B. Zhuang, M. Pollefeys, A. Geiger, T. Cham and J. Cai

European Conference on Computer Vision (ECCV), 2024

Abstract: We propose MVSplat, an efficient feed-forward 3D Gaussian Splatting model learned from sparse multi-view images. To accurately localize the Gaussian centers, we propose to build a cost volume representation via plane sweeping in the 3D space, where the cross-view feature similarities stored in the cost volume can provide valuable geometry cues to the estimation of depth. We learn the Gaussian primitives' opacities, covariances, and spherical harmonics coefficients jointly with the Gaussian centers while only relying on photometric supervision. We demonstrate the importance of the cost volume representation in learning feed-forward Gaussian Splatting models via extensive experimental evaluations. On the large-scale RealEstate10K and ACID benchmarks, our model achieves state-of-the-art performance with the fastest feed-forward inference speed (22 fps). Compared to the latest state-of-the-art method pixelSplat, our model uses 10x fewer parameters and infers more than 2x faster while providing higher appearance and geometry quality as well as better cross-dataset generalization.

Latex Bibtex Citation:

@inproceedings{Chen2024ECCVb,

author = {Yuedong Chen and Haofei Xu and Chuanxia Zheng and Bohan Zhuang and Marc Pollefeys and Andreas Geiger and Tat-Jen Cham and Jianfei Cai},