Summaries of all papers discussed in the survey paper on autonomous vision.

It is very likely that we have missed several highly important works. Therefore, we appreciate every feedback from the community on what we should add. If you have comments, please send an E-Mail to . We will take every comment into consideration for the next version of the survey paper.

Taxonomy

Toogle the topics (colorized) to show only literature from the selected categories and click on papers (black) to get details.

Zoom and move around with normal map controls. For a simple list of topics click on the list tab.

Created using D3.js

Summaries

Sort literature according to: Title | Author | Conference | Year

| Mapping, Localization & Ego-Motion Estimation State of the Art on KITTI | |||

|

IJRR 2016

Carlevaris-Bianco2016IJRR | |||

| Object Detection State of the Art on KITTI | |||

|

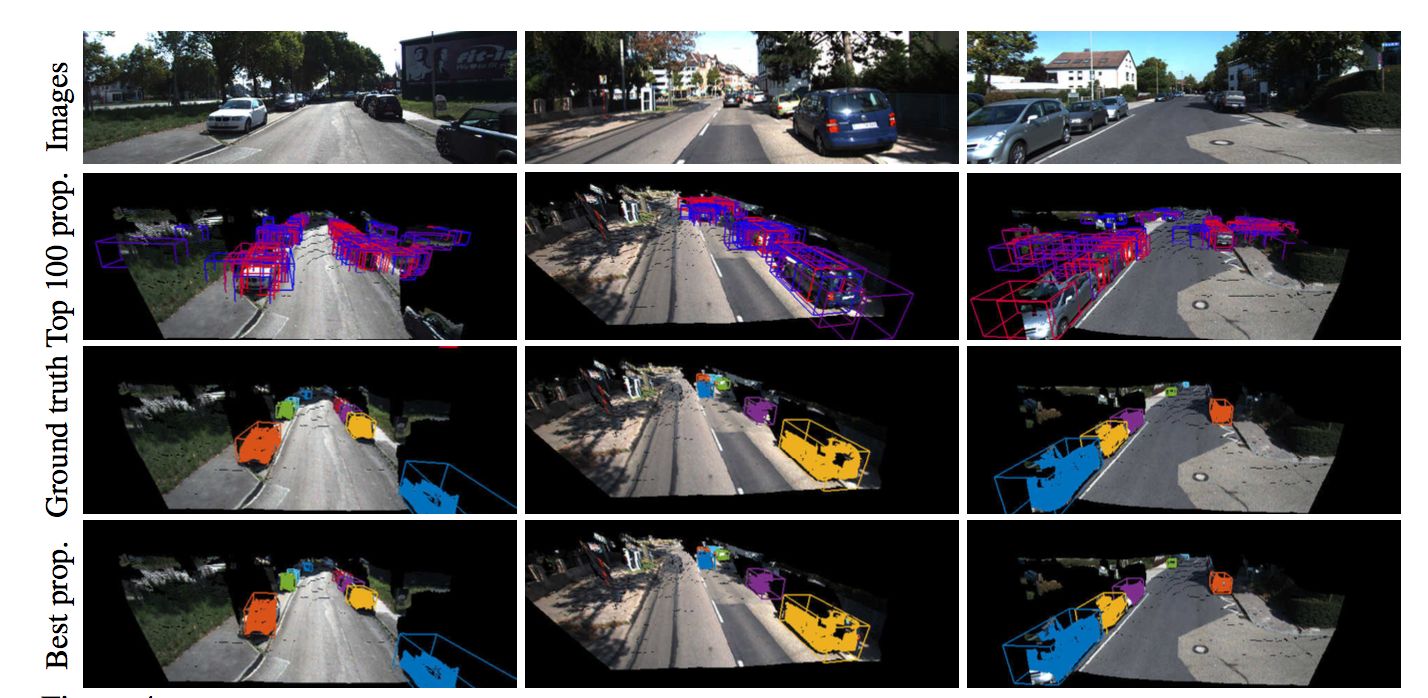

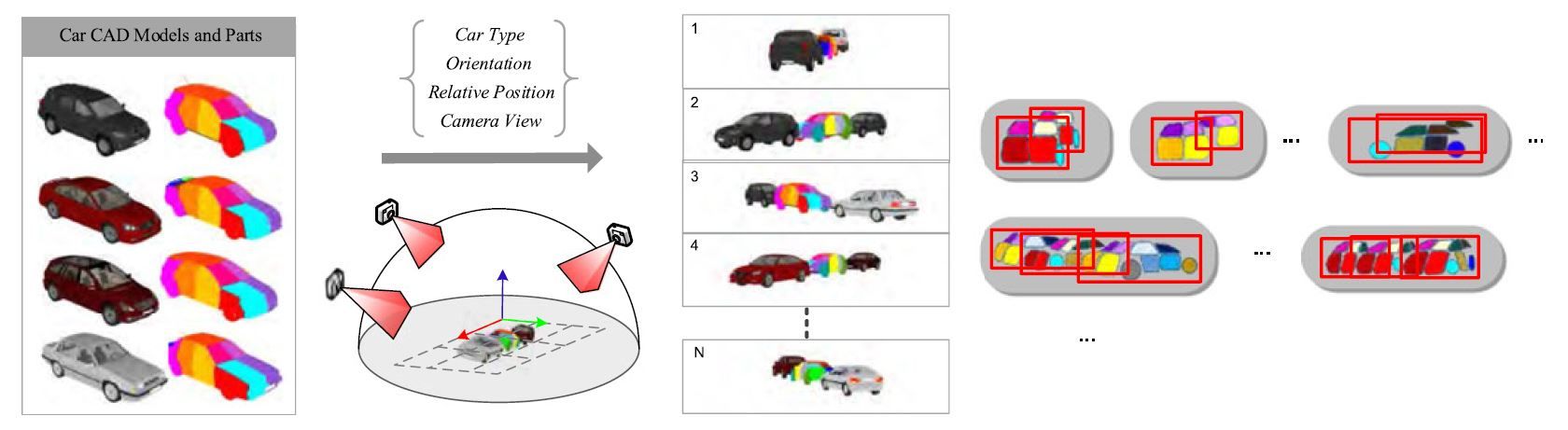

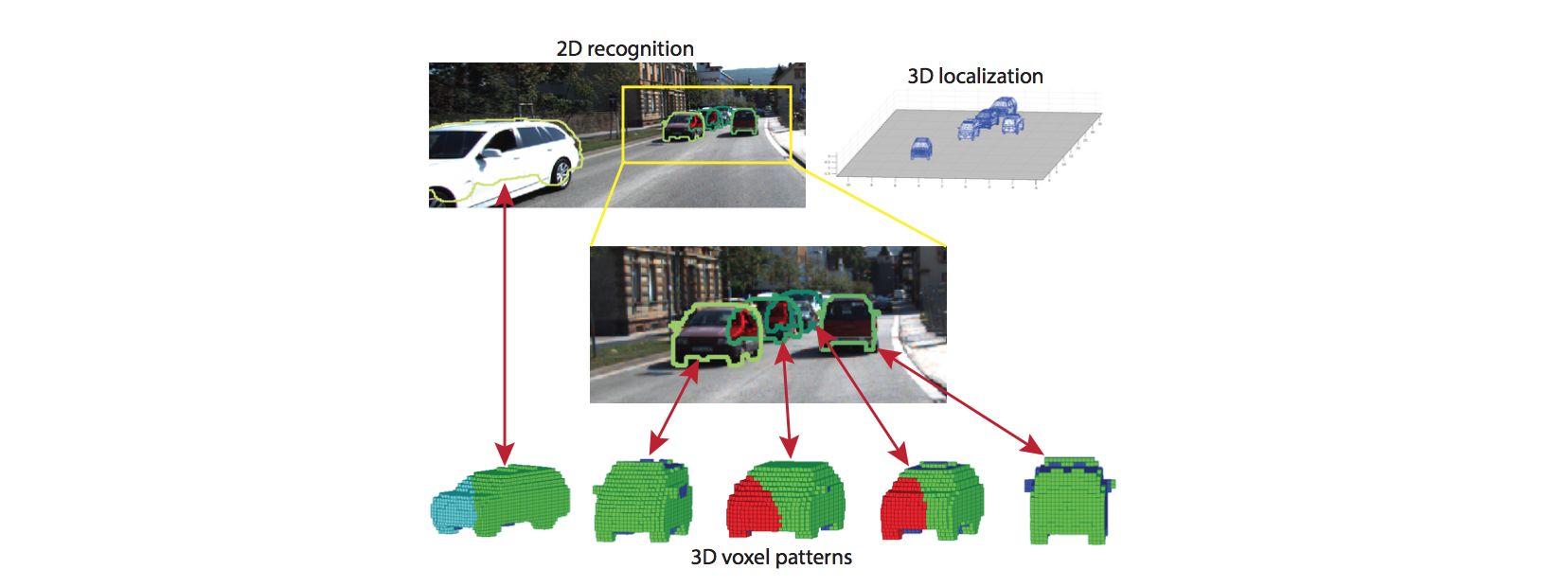

Deep MANTA: A Coarse-to-fine Many-Task Network for joint 2D and 3D vehicle analysis from monocular image[scholar]

|

CVPR 2017

Chabot2017CVPR | ||

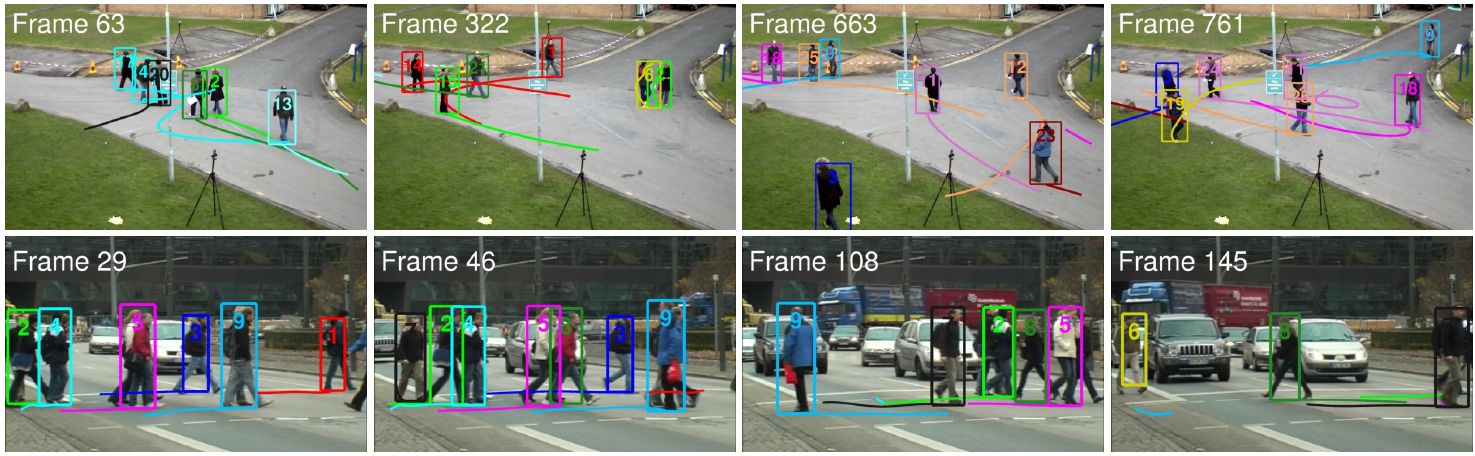

| Object Tracking State of the Art on MOT & KITTI | |||

|

Online Multi-object Tracking Using CNN-Based Single Object Tracker with Spatial-Temporal Attention Mechanism[scholar]

|

ICCV 2017

Chu2017ICCV | ||

| Object Tracking State of the Art on MOT & KITTI | |||

|

FAMNet: Joint Learning of Feature, Affinity and Multi-dimensional Assignment for Online Multiple Object Tracking[scholar]

|

ARXIV 2019

Chu2019ARXIV | ||

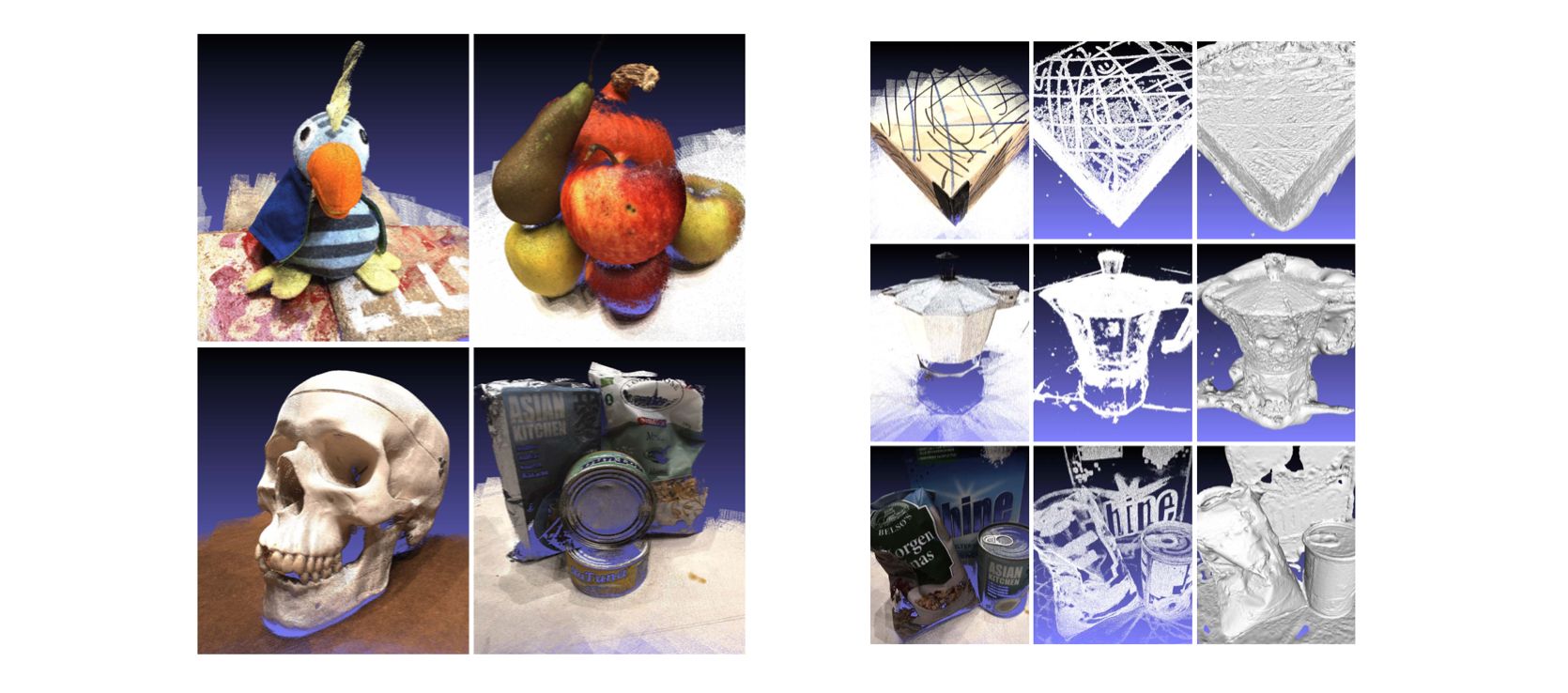

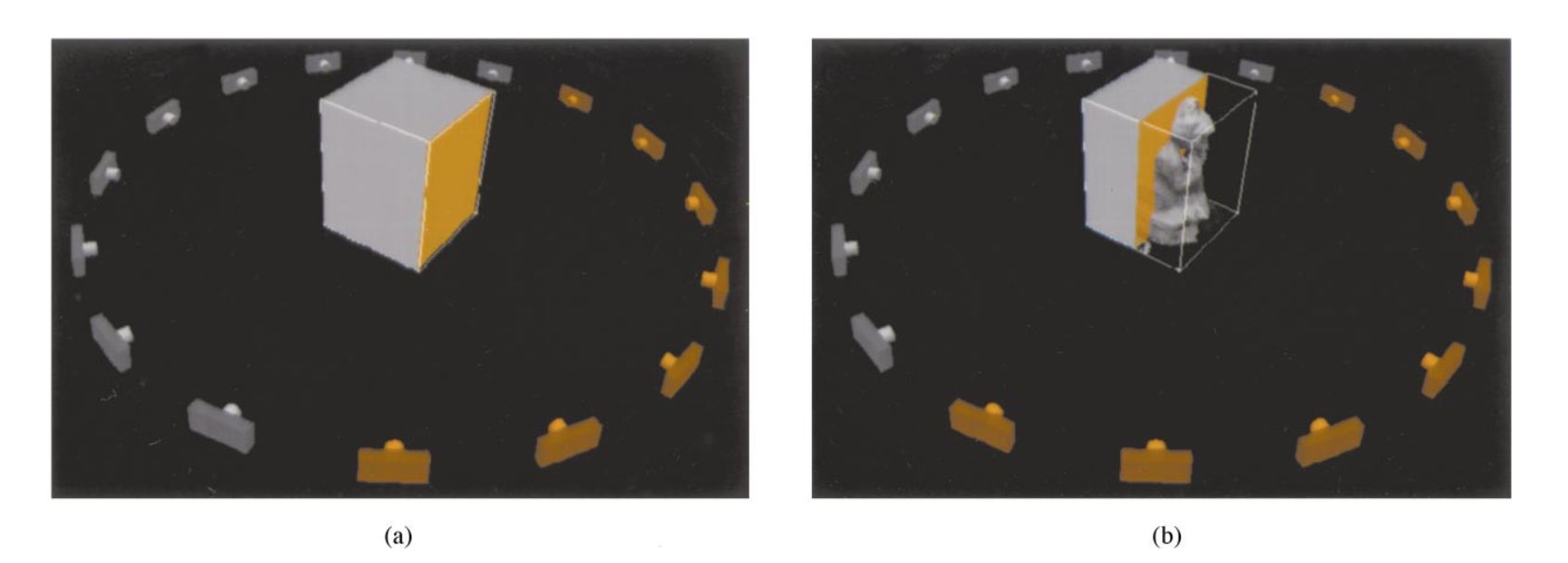

| Multi-view 3D Reconstruction Multi-view Stereo | |||

|

2018 IEEE Conference on Computer Vision and Pattern Recognition 2018

Huang2018CVPRa | |||

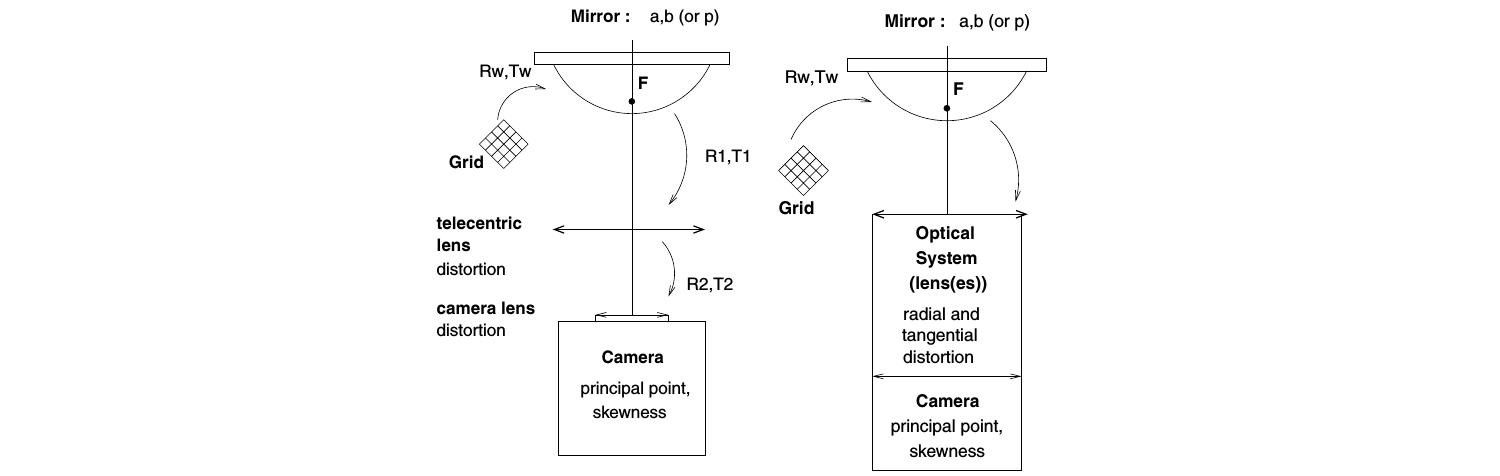

| Sensors Camera Models | |||

|

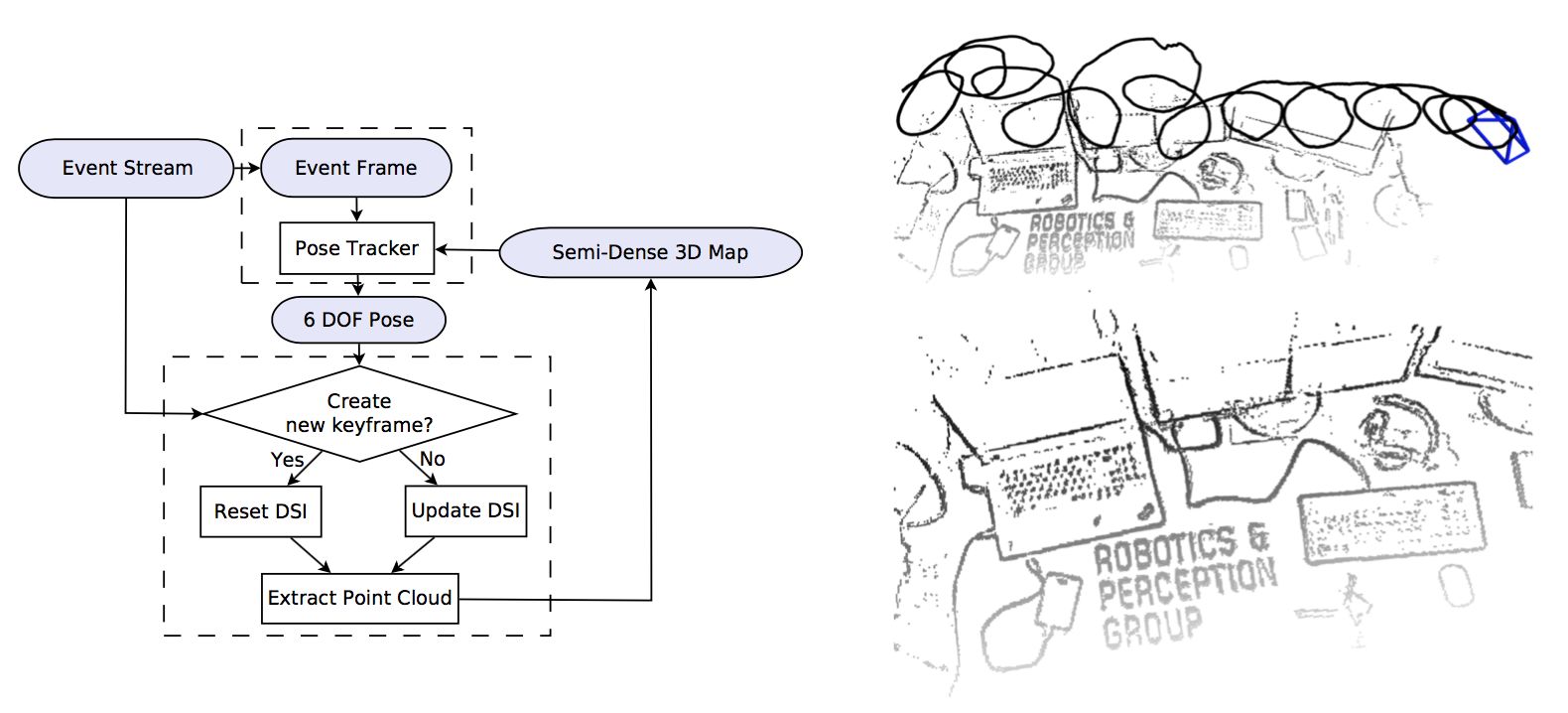

The event-camera dataset and simulator: Event-based data for pose estimation, visual odometry, and SLAM[scholar]

|

IJRR 2017

Mueggler2017IJRR | ||

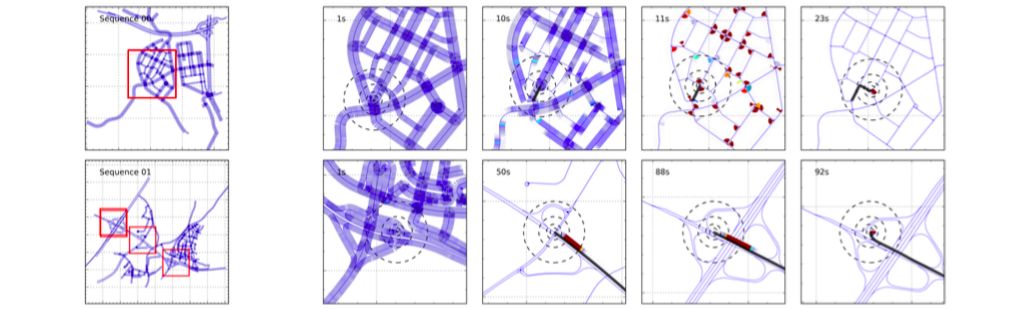

| Mapping, Localization & Ego-Motion Estimation State of the Art on KITTI | |||

|

Workshop of Computer Vision (WVC) 2017

Pereira2017WVC | |||

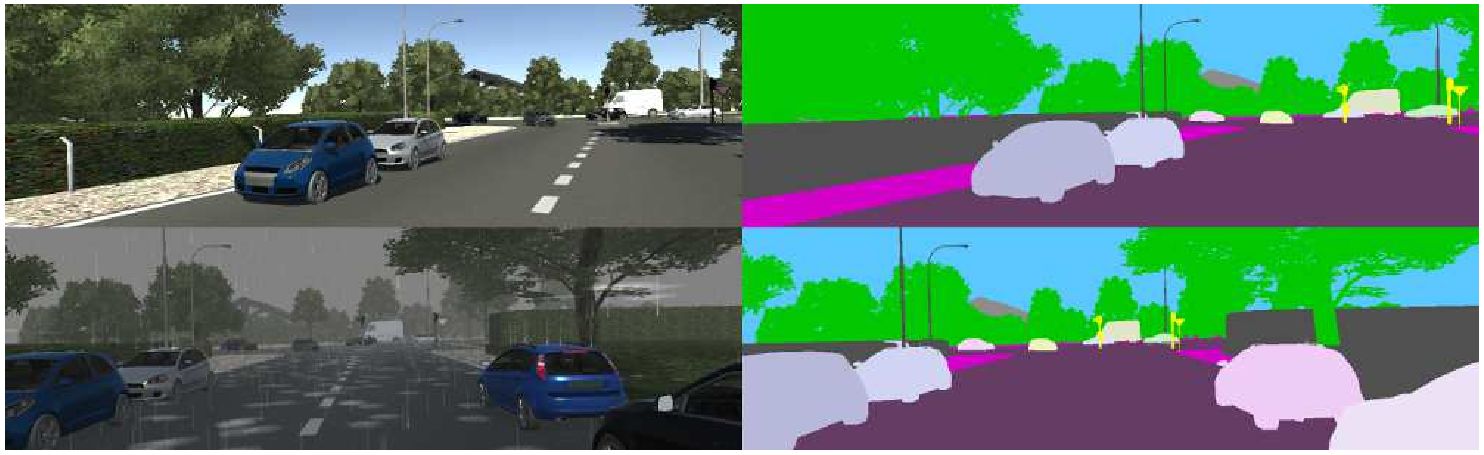

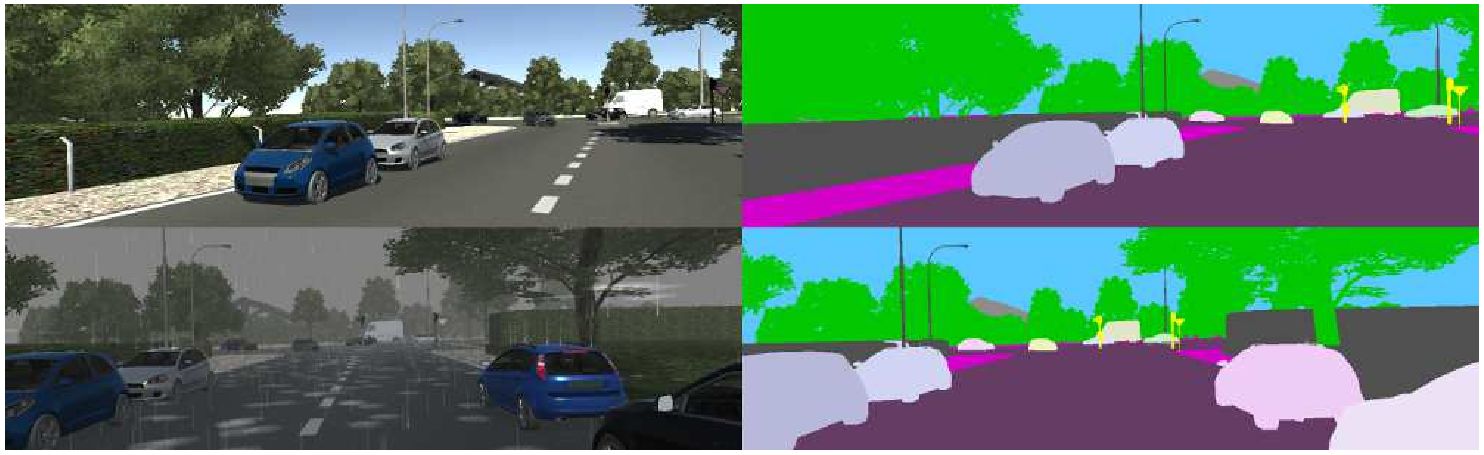

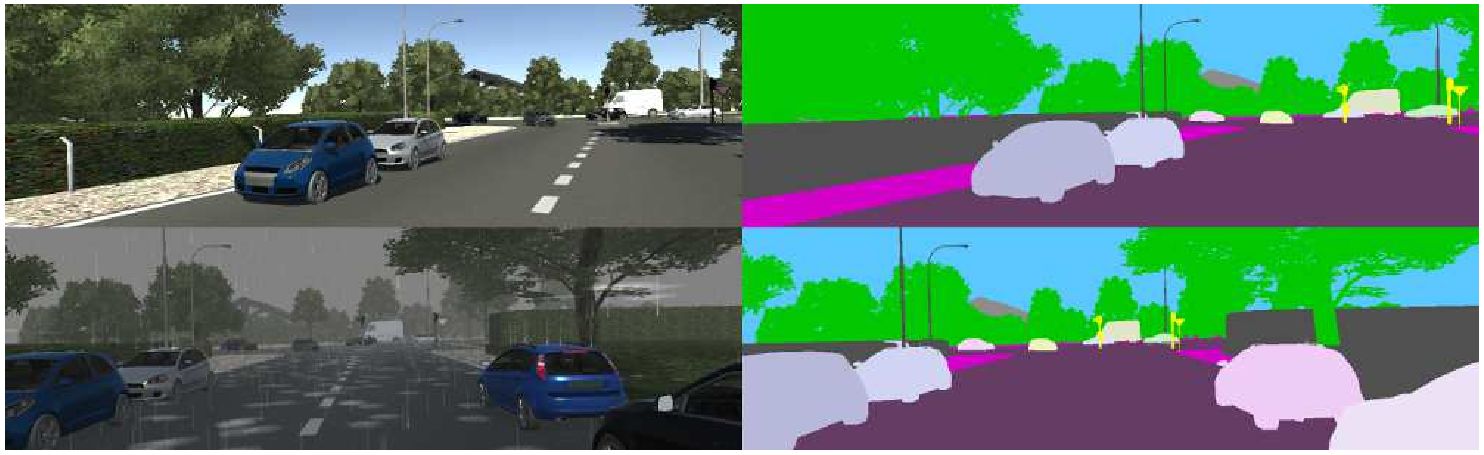

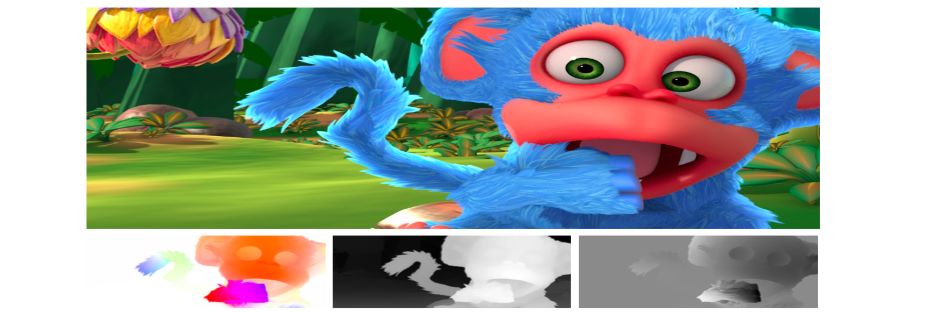

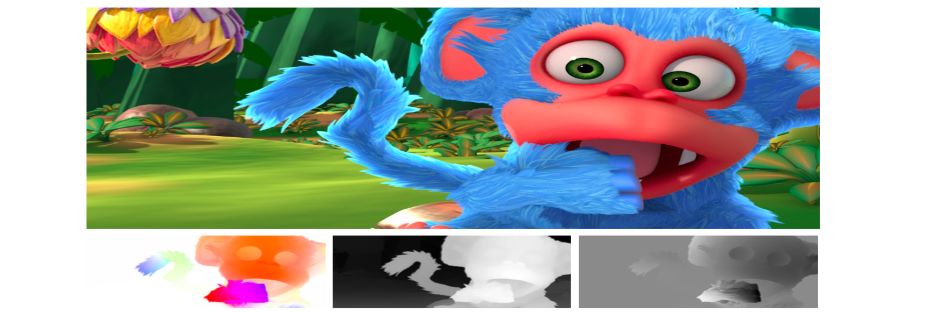

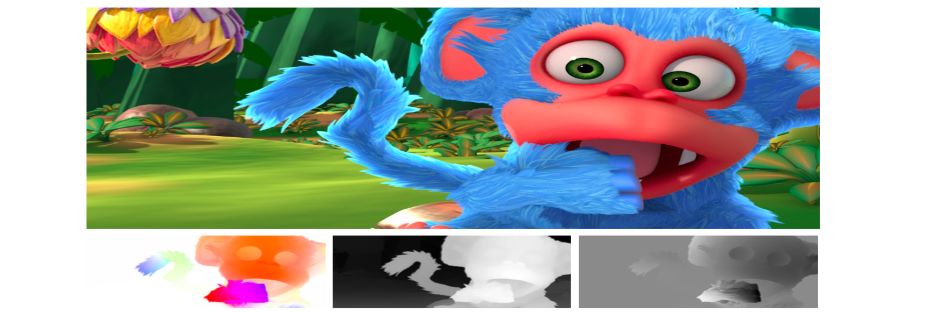

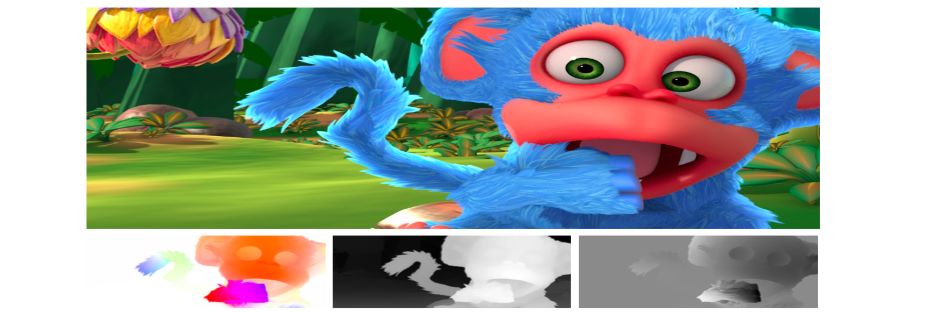

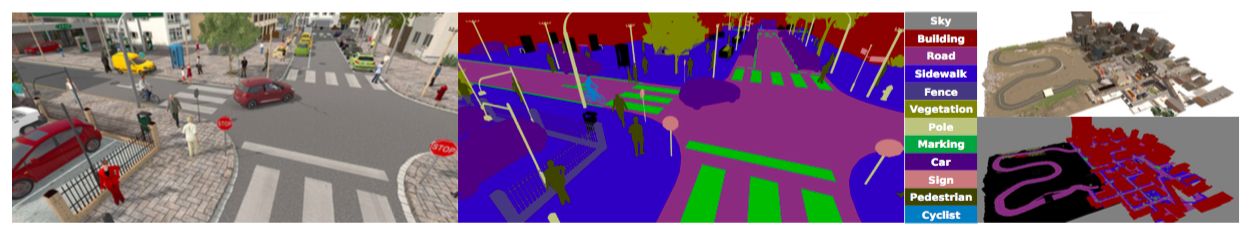

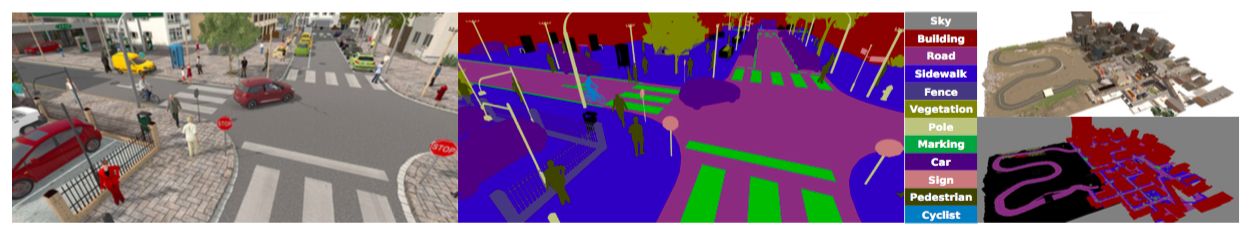

| Datasets & Benchmarks Synthetic Data Generation using Game Engines | |||

|

ACM Multimedia Open Source Software Competition 2017

Qiu2017ACM | |||

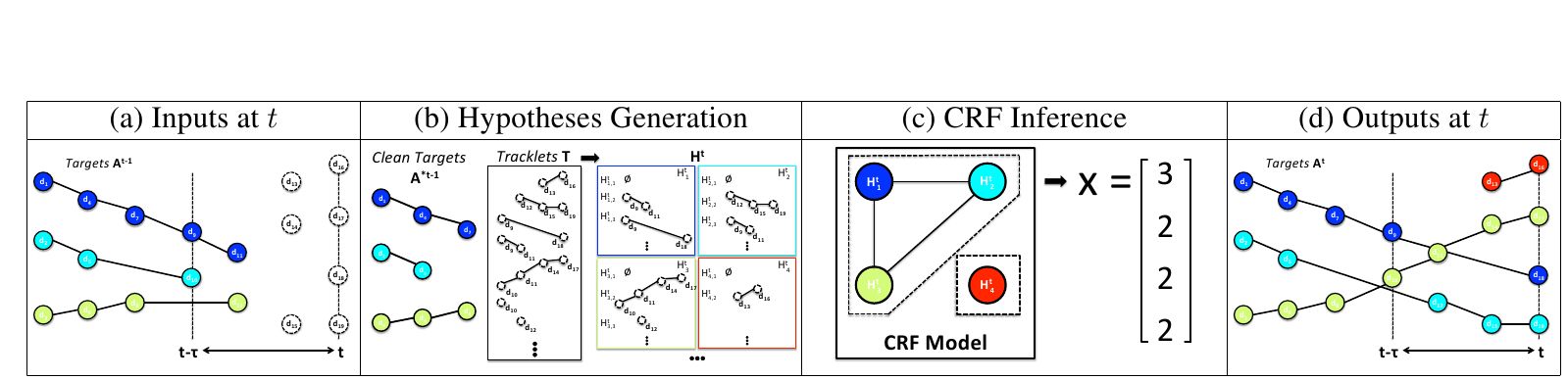

| Object Tracking State of the Art on MOT & KITTI | |||

|

Tracklet Association Tracker: An End-to-End Learning-based Association Approach for Multi-Object Tracking[scholar]

|

ARXIV 2018

Shen2018ARXIV | ||

| Multi-view 3D Reconstruction Problem Definition | |||

|

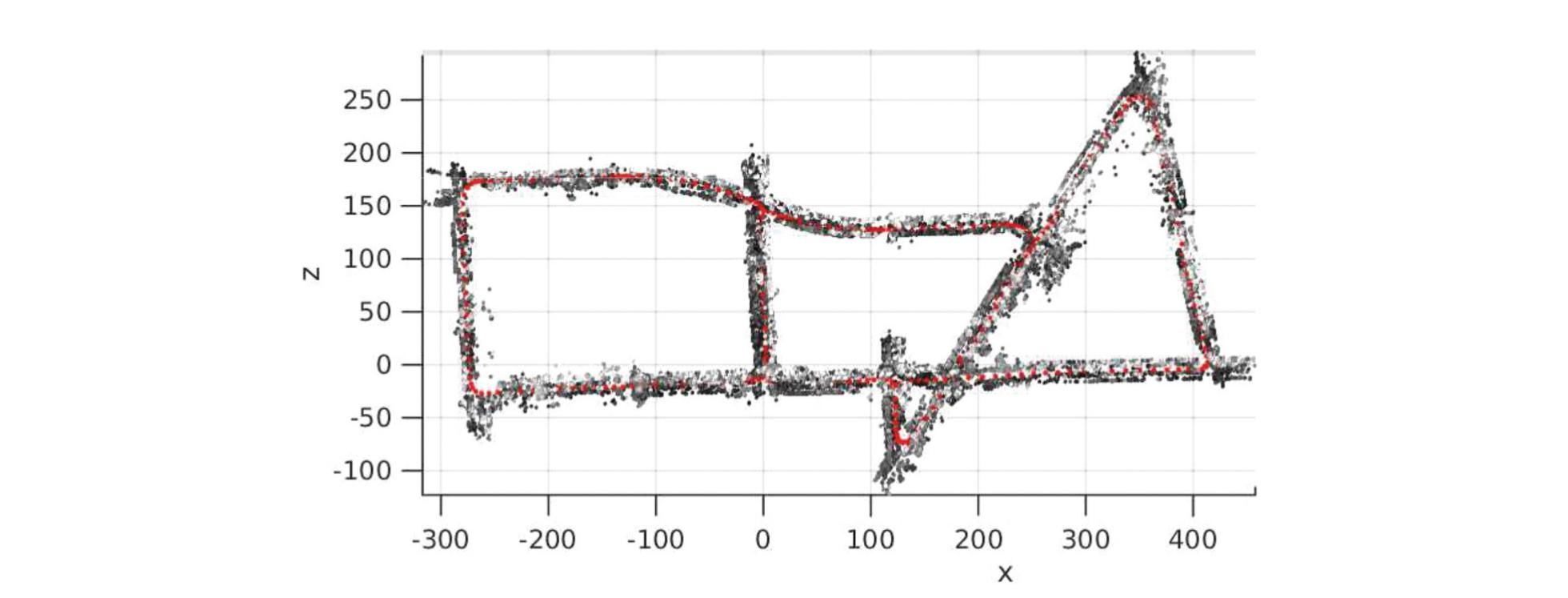

Proc. of the ISPRS Workshop Land Surface Mapping and Characterization Using Laser Altimetry 2001

Vosselman2001ISPRS | |||

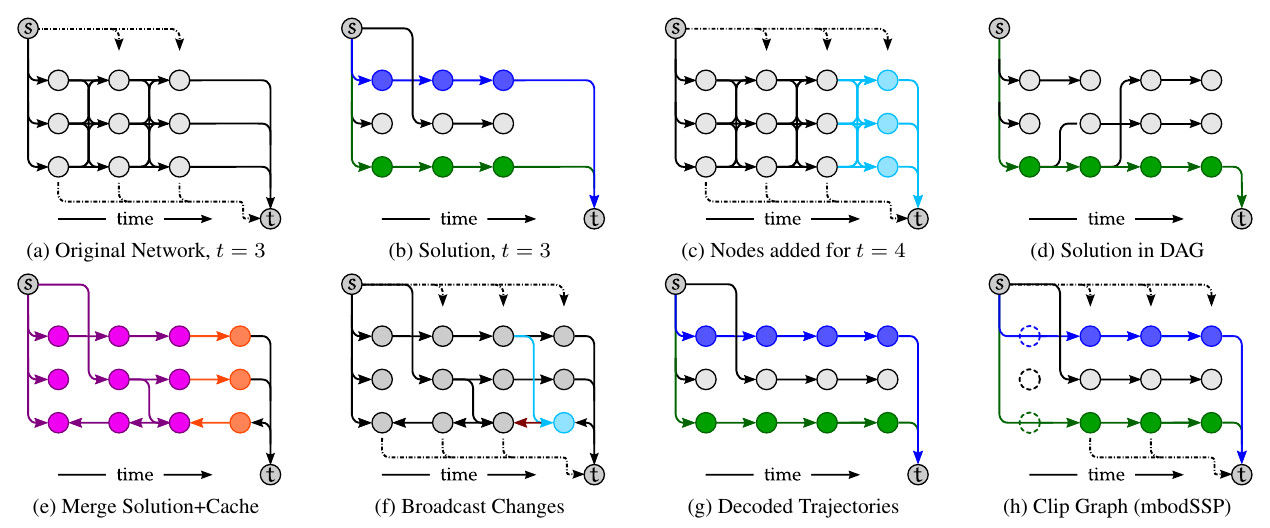

| Object Tracking Methods | |||

|

Efficient track linking methods for track graphs using network-flow and set-cover techniques[scholar]

|

CVPR 2011

Wu2011CVPRb | ||

| Semantic Segmentation State of the Art on Cityscapes | |||

|

Dense Relation Network: Learning Consistent and Context-Aware Representation for Semantic Image Segmentation[scholar]

|

ICIP 2018

Zhuang2018ICIP | ||

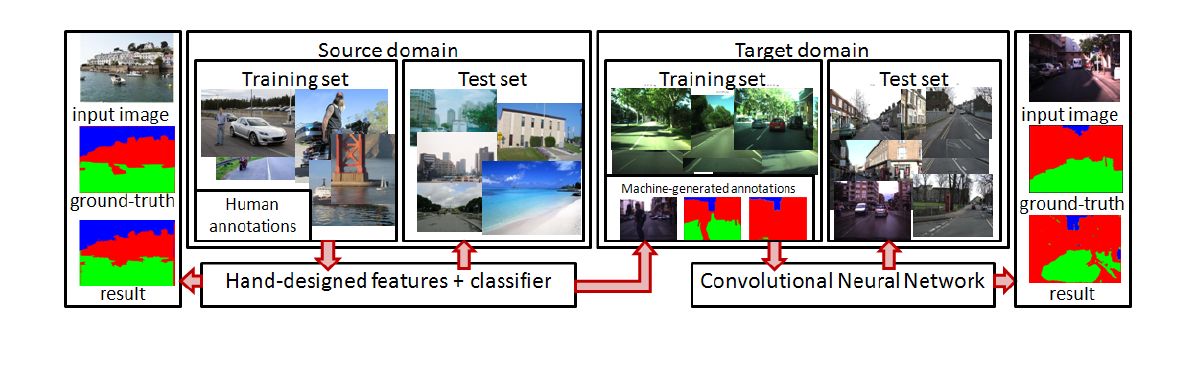

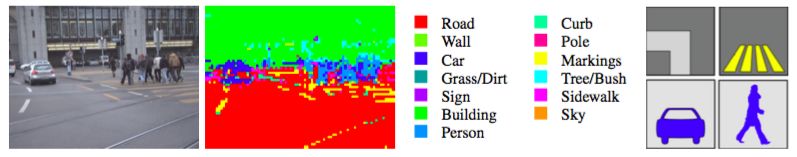

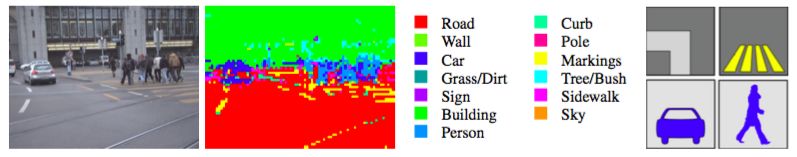

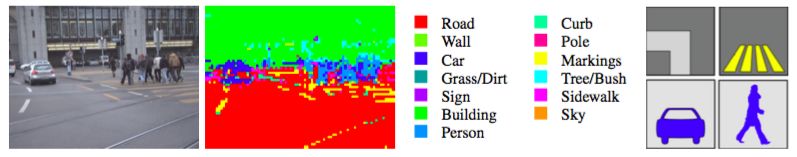

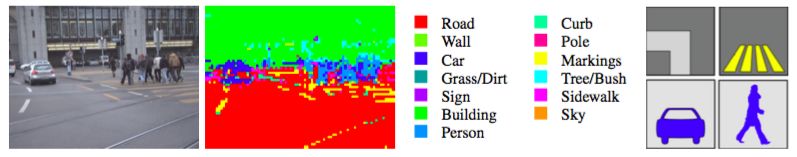

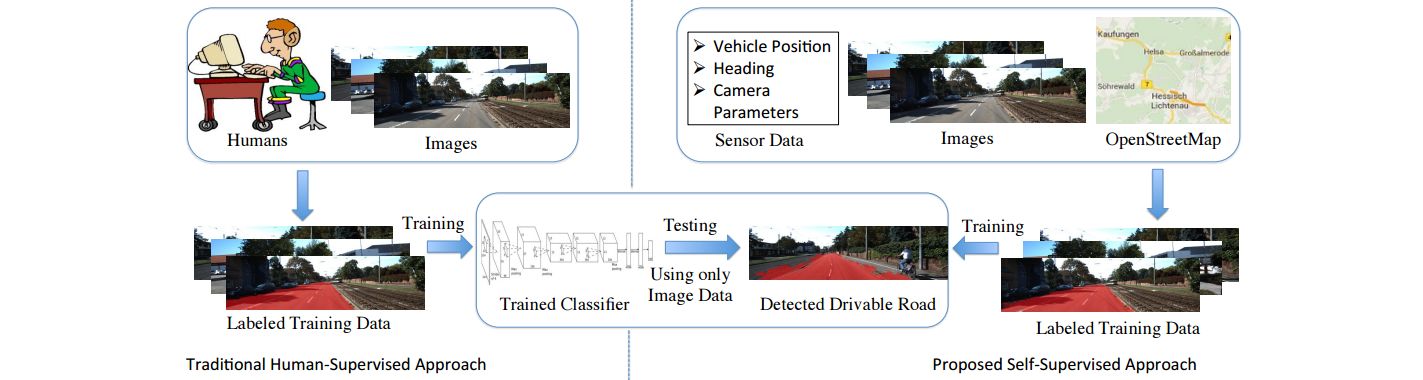

| Semantic Segmentation Methods | |||

|

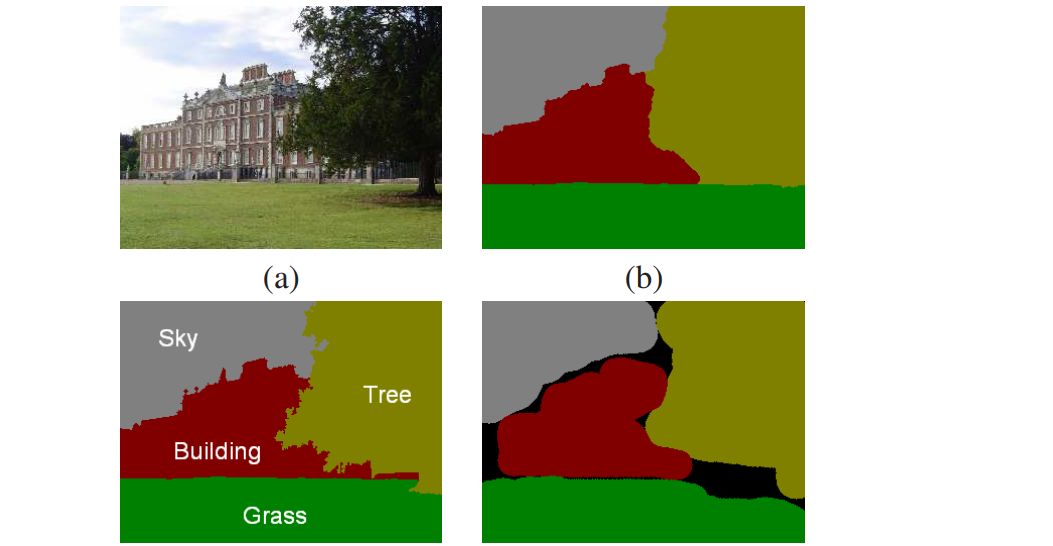

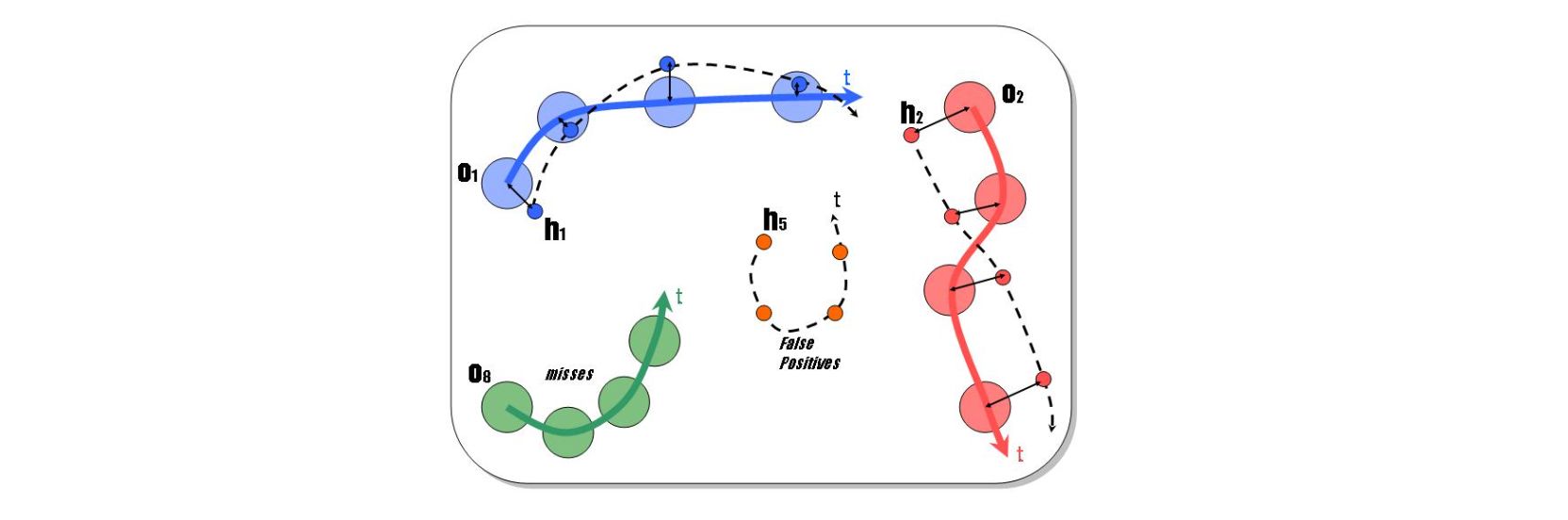

ECCV 2012

Alvarez2012ECCV | |||

- Recovering the 3D structure of the road scenes

- Convolutional neural network to learn features from noisy labels to recover the 3D scene layout

- Generating training labels by applying an algorithm trained on a general image dataset

- Train network using the generated labels to classify on-board images (offline)

- Online learning of patterns in stochastic random textures (i.e. road texture)

- Texture descriptor based on a learned color plane fusion to obtain maximal uniformity in road areas

- Offline and online information are combined to detect road areas in single images

- Evaluation on a self-recorded dataset and CamVid

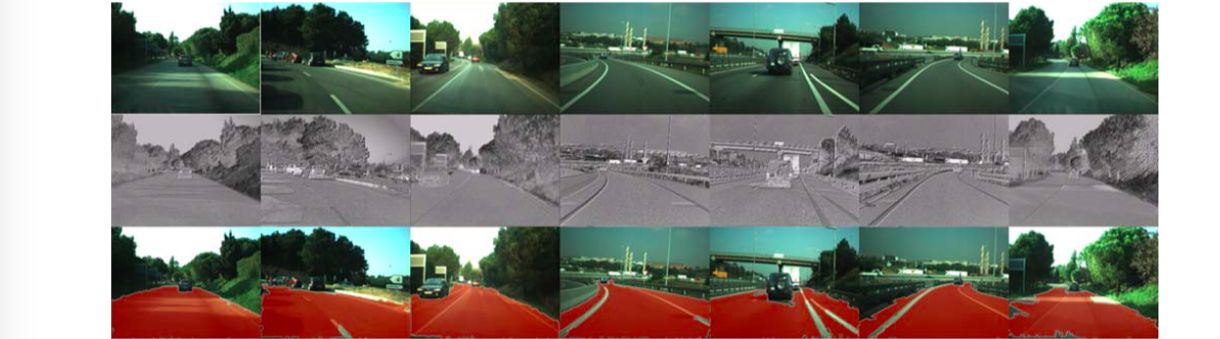

| Semantic Segmentation Methods | |||

|

TITS 2011

Alvarez2011TITS | |||

- Identifying road pixels is a major challenge due to the intraclass variability caused by lighting conditions. A particularly difficult scenario appears when the road surface has both shadowed and nonshadowed areas

- Proposes a novel approach to vision-based road detection that is robust to shadows

- Contributions:

- Uses a shadow-invariant feature space combined with a model-based classifier

- Proposes to use the illuminant-invariant image as the feature space

- This invariant image is derived from the physics behind color formation in the presence of a Planckian light source, Lambertian surfaces, and narrowband imaging sensors.

- Sunlight is approximately Planckian, road surfaces are mainly Lambertian, and regular color cameras are near narrowband

- Evaluates on self-recorded data

| Stereo Methods | |||

|

JMLR 2016

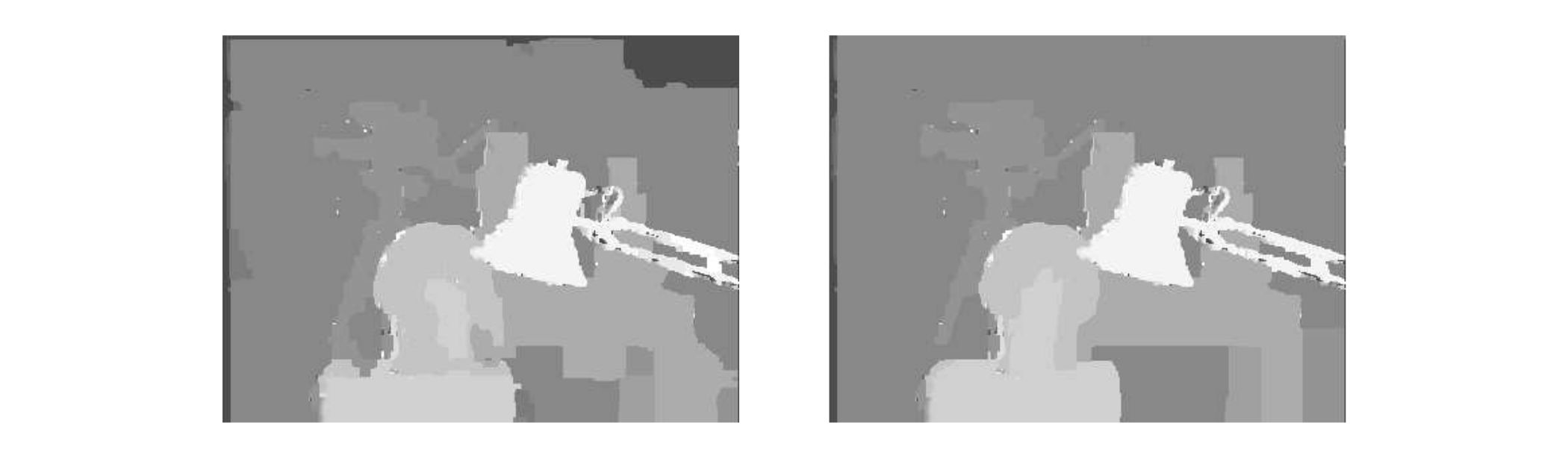

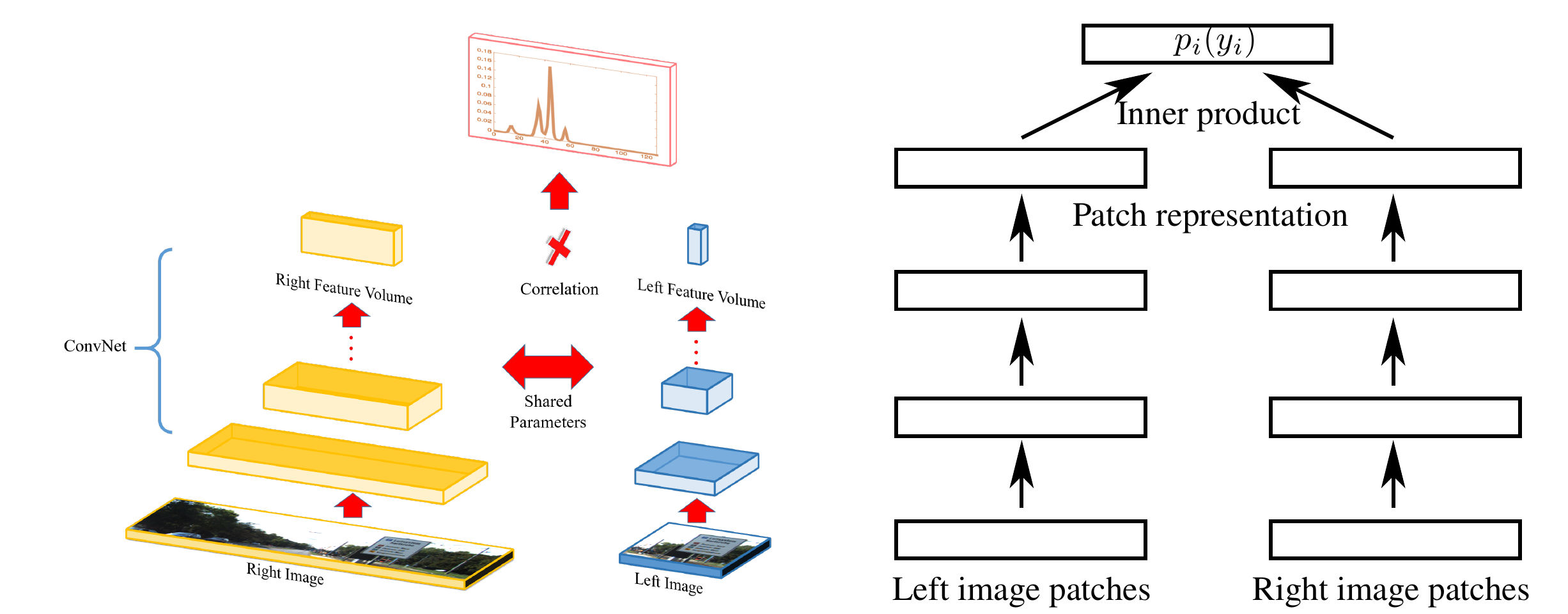

Zbontar2016JMLR | |||

- Matching cost computation by learning a similarity measure on patches using a CNN

- Siamese network with normalization and cosine similarity in the end

- Fast architecture and accurate architecture (+fully connected layers)

- Binary classification of similar and dissimilar pairs

- Sampling negatives in the neighbourhood of the positive

- Margin loss

- A series of post-processing steps:

- cross-based cost aggregation, semiglobal matching, a left-right consistency check, subpixel enhancement, a median filter, and a bilateral filter

- The best performing on KITTI 2012, 2015 datasets

| Optical Flow Methods | |||

|

JMLR 2016

Zbontar2016JMLR | |||

- Matching cost computation by learning a similarity measure on patches using a CNN

- Siamese network with normalization and cosine similarity in the end

- Fast architecture and accurate architecture (+fully connected layers)

- Binary classification of similar and dissimilar pairs

- Sampling negatives in the neighbourhood of the positive

- Margin loss

- A series of post-processing steps:

- cross-based cost aggregation, semiglobal matching, a left-right consistency check, subpixel enhancement, a median filter, and a bilateral filter

- The best performing on KITTI 2012, 2015 datasets

| Semantic Segmentation Methods | |||

|

CVPR 2010

Alvarez2010CVPR | |||

- Visionbased road detection

- Current methods:

- Based on low-level features only

- Assuming structured roads, road homogeneity, and uniform lighting conditions

- Information at scene, image and pixel level by exploiting sequential nature of the data

- Low-level, contextual and temporal cues combined in a Bayesian framework

- Contextual cues as horizon lines, vanishing points, 3D scene layout and 3D road stages

- Robust to varying imaging conditions, road types, and scenarios (tunnels, urban and high-way)

- Combined cues outperforms all other individual cues.

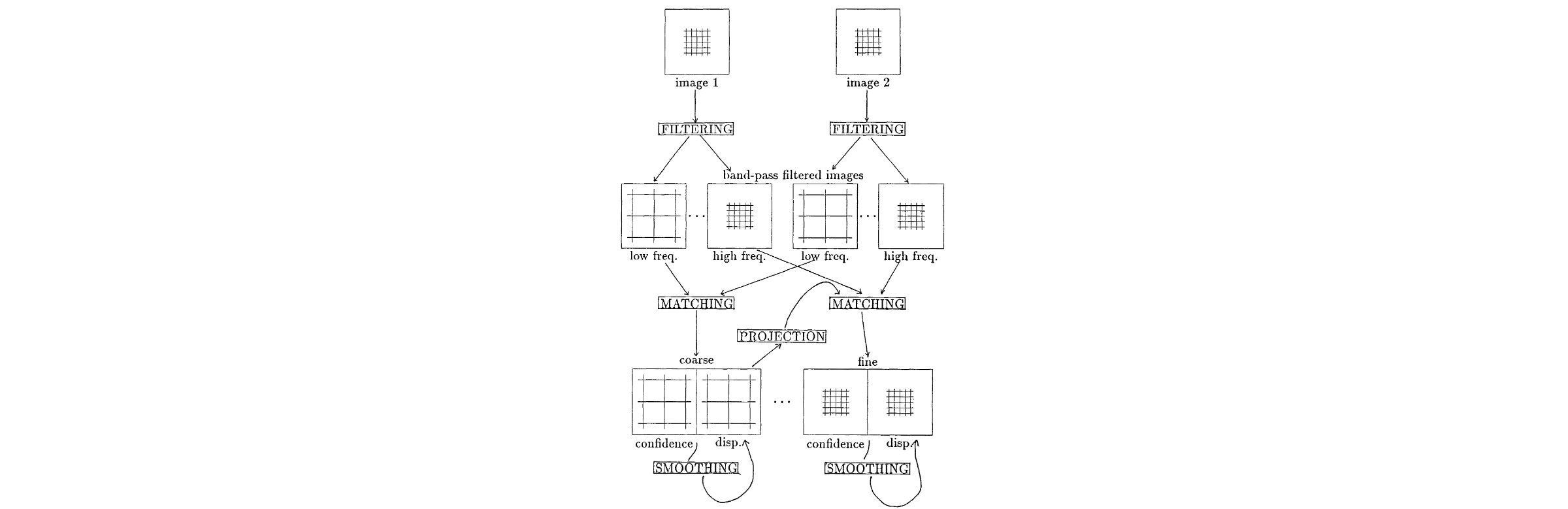

| Optical Flow Methods | |||

|

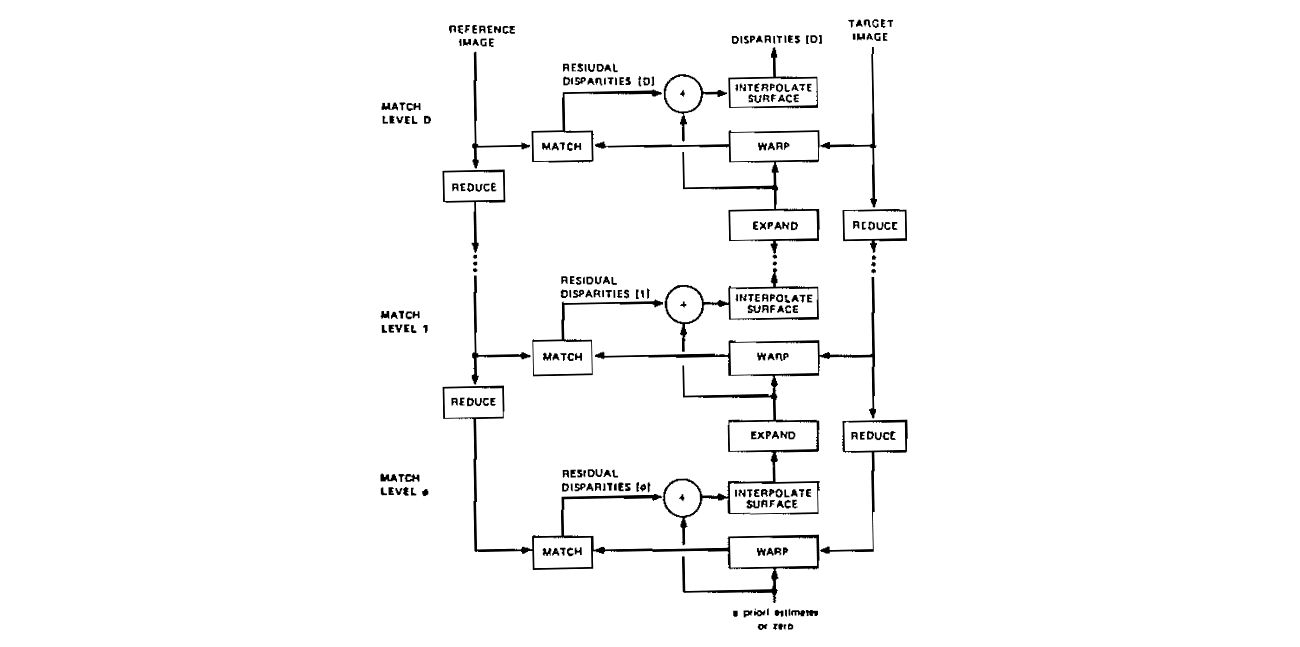

IJCV 1989

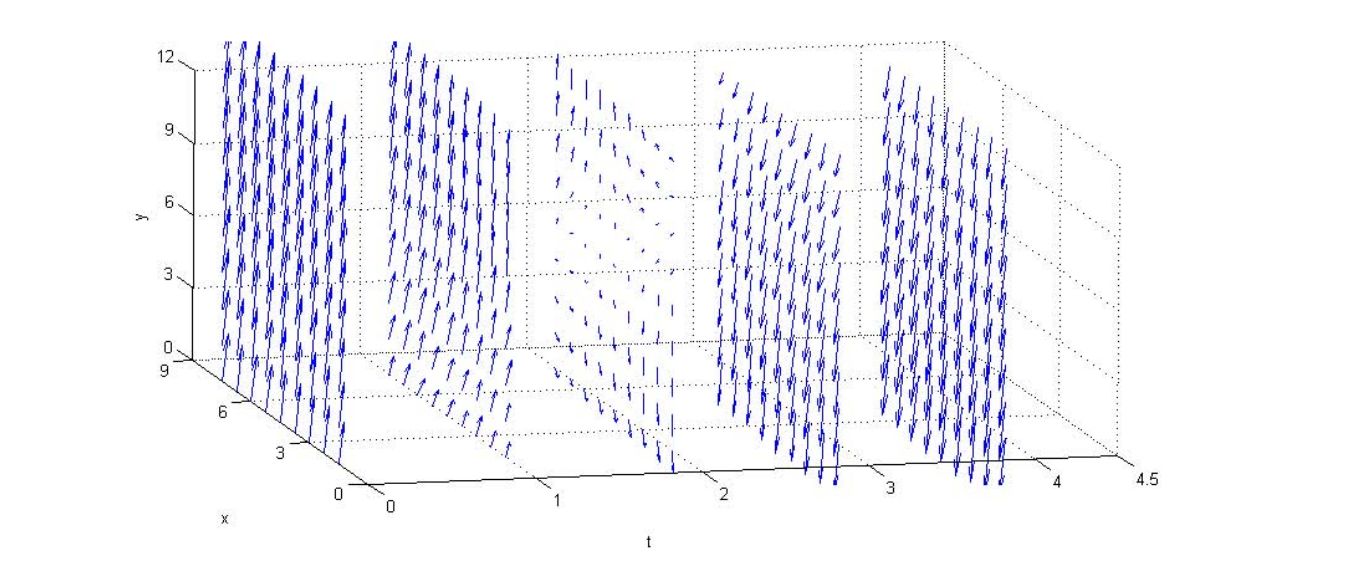

Anandan1989IJCV | |||

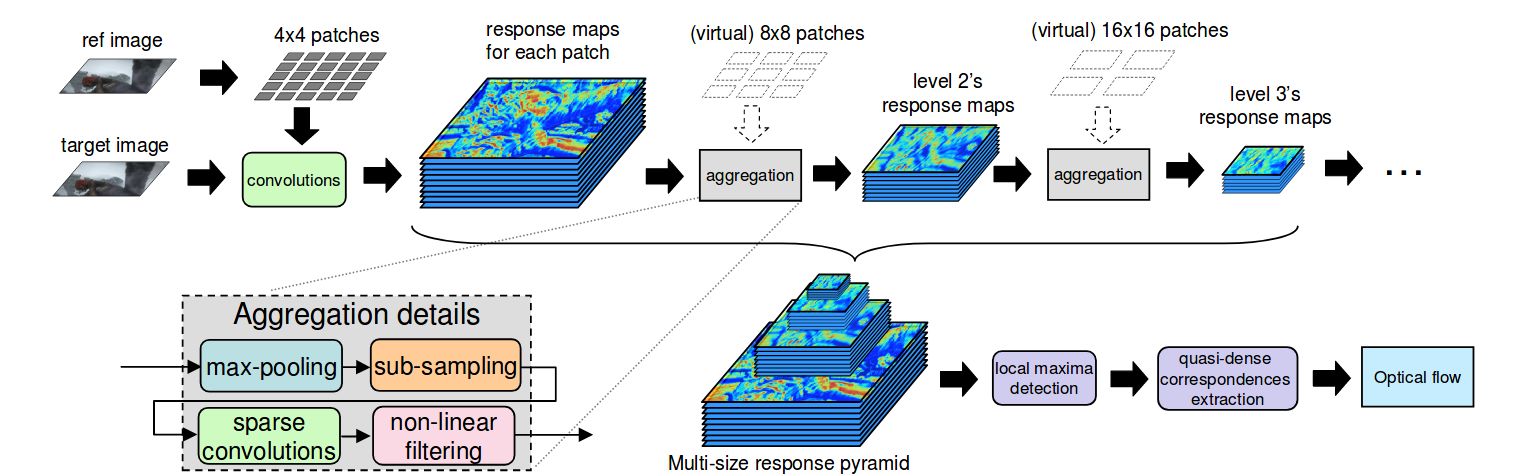

- Hierarchical computational framework for dense displacements fields from image pairs

- Based no a scale-based separation of image intensity information

- Rough estimates are firstly obtained from large-scale intensity information

- Refinement using intensity information at smaller scales

- Additionally a direction-dependent confidence measure is proposed

- Smoothness constraint propagates information with high confidence to neighbors with low confidence

- Computations are pixel-parallel, uniform across the image and based on information in a small neighborhood

- Demonstration on real images and two more hierarchical gradient-based algorithms are shown to be consistent with the framework besides the proposed one

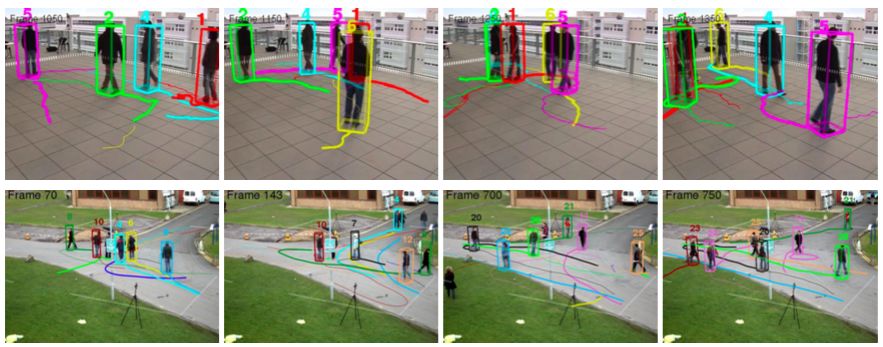

| Object Tracking Methods | |||

|

CVPR 2010

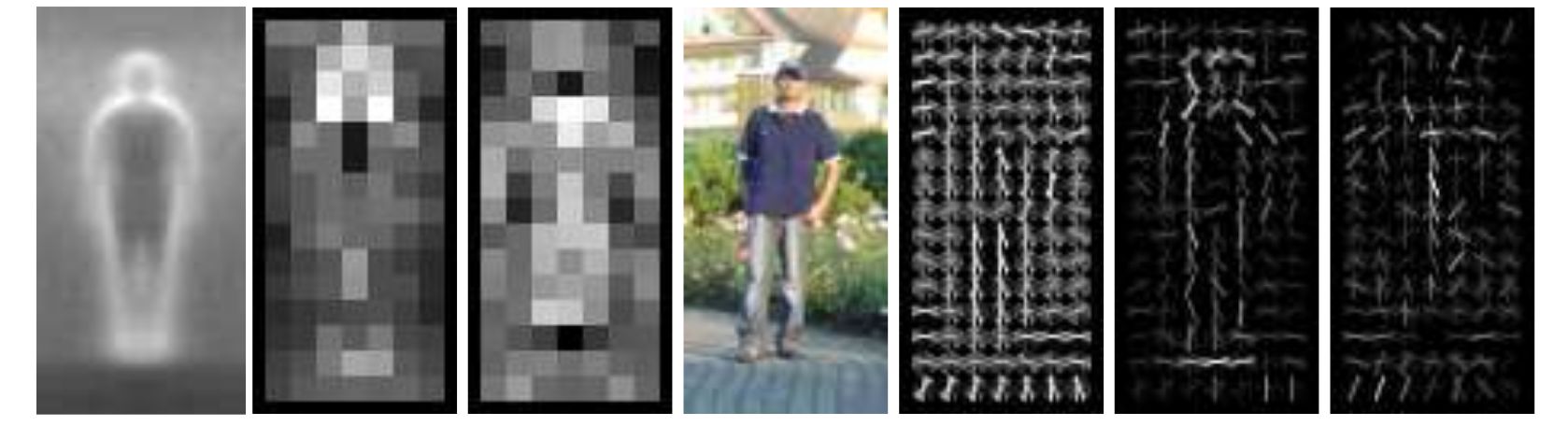

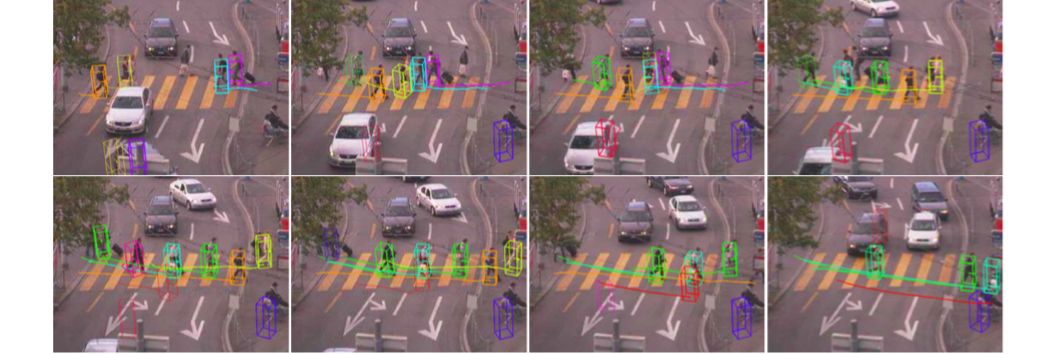

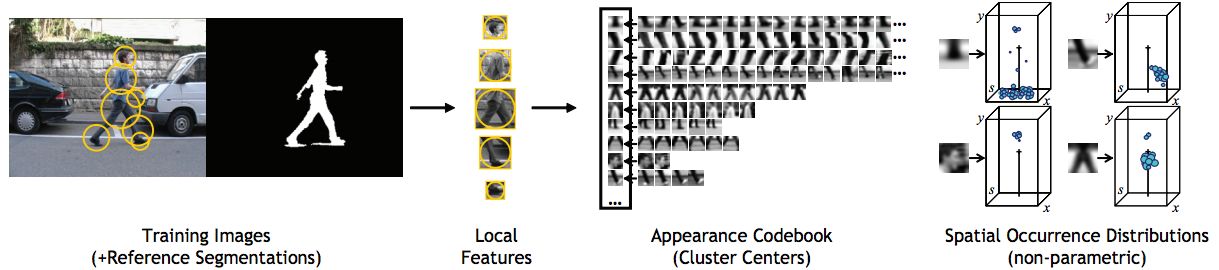

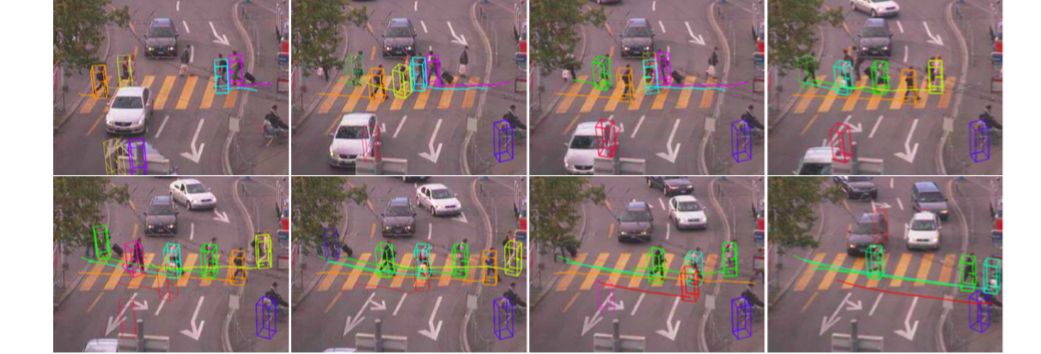

Andriluka2010CVPR | |||

- 3D pose estimation from image sequences using tracking by detection

- Methods so far worked well in controlled environments but struggle with real world scenarios

- Three staged approach

- Initial estimate of 2D articulation and viewpoint of the person using an extended 2D person detector

- Data association and accumulation into robust estimates of 2D limbs positions using a HMM based tracking approach

- Estimates used as robust image observation to reliably recover 3D pose in a Bayesian framework using hGPLVM as temporal prior

- Evaluation on HumanEva II and a novel real world dataset TUD Stadtmitte for qualitative results

| Object Tracking Methods | |||

|

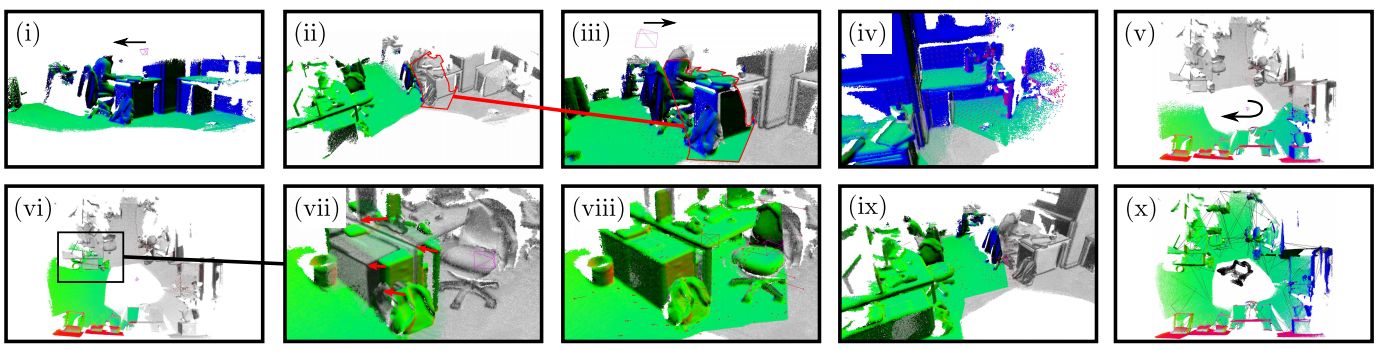

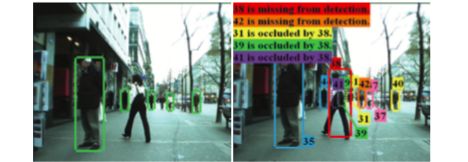

CVPR 2008

Andriluka2008CVPR | |||

- Combining detection and tracking in a single framework

- Motivation:

- People detection in complex street scenes, but with frequent false positives

- Tracking for a particular individual, but challenged by crowded street scenes

- Extension of a state-of-the-art people detector with a limb-based structure model

- Hierarchical Gaussian process latent variable model (hGPLVM) to model dynamics of the individual limbs

- Prior knowledge on possible articulations

- Temporal coherency within a walking cycle

- HMM to extend the people-tracklets to possibly longer sequences

- Improved hypotheses for position and articulation of each person in several frames

- Detection and tracking of multiple people in cluttered scenes with reoccurring occlusions

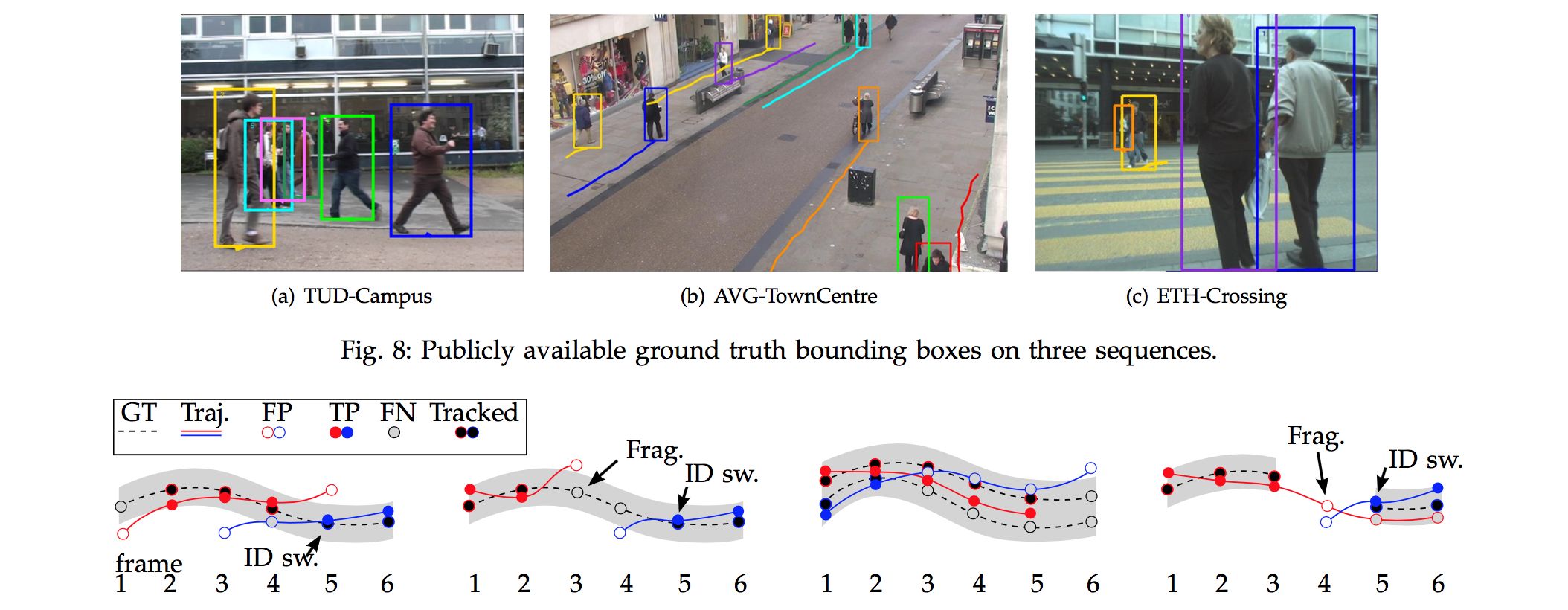

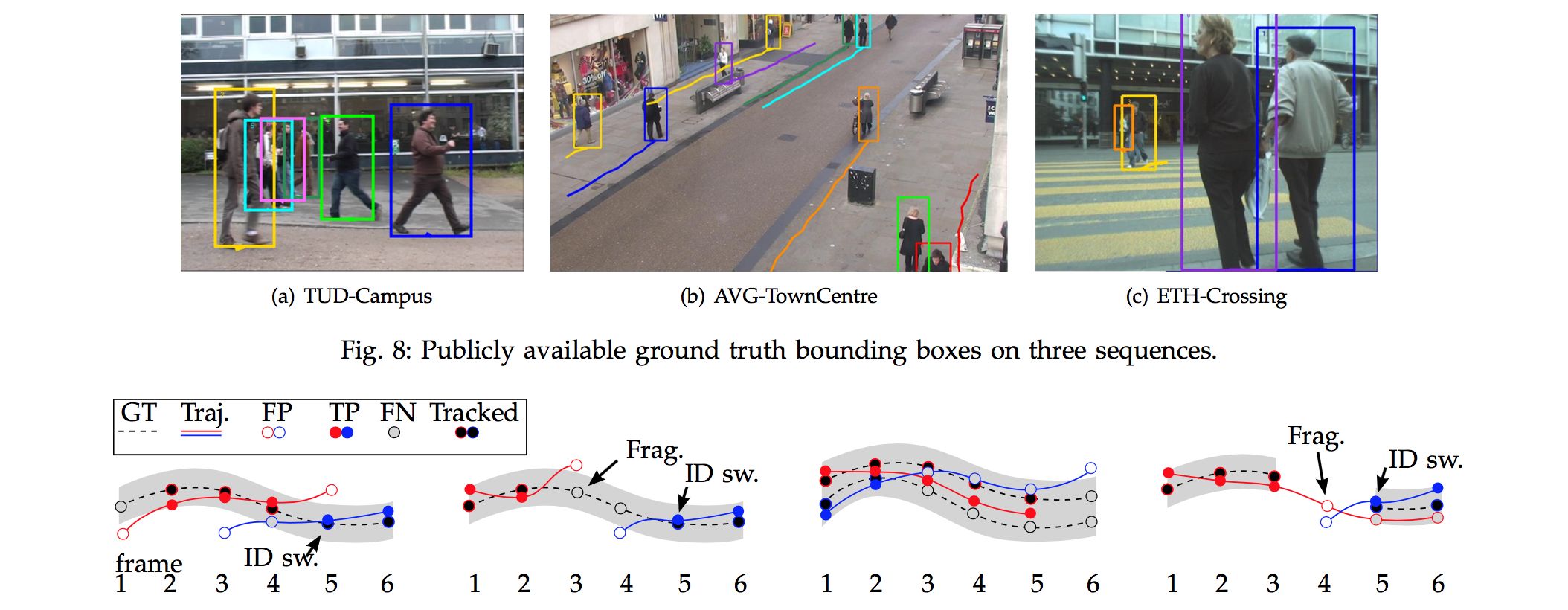

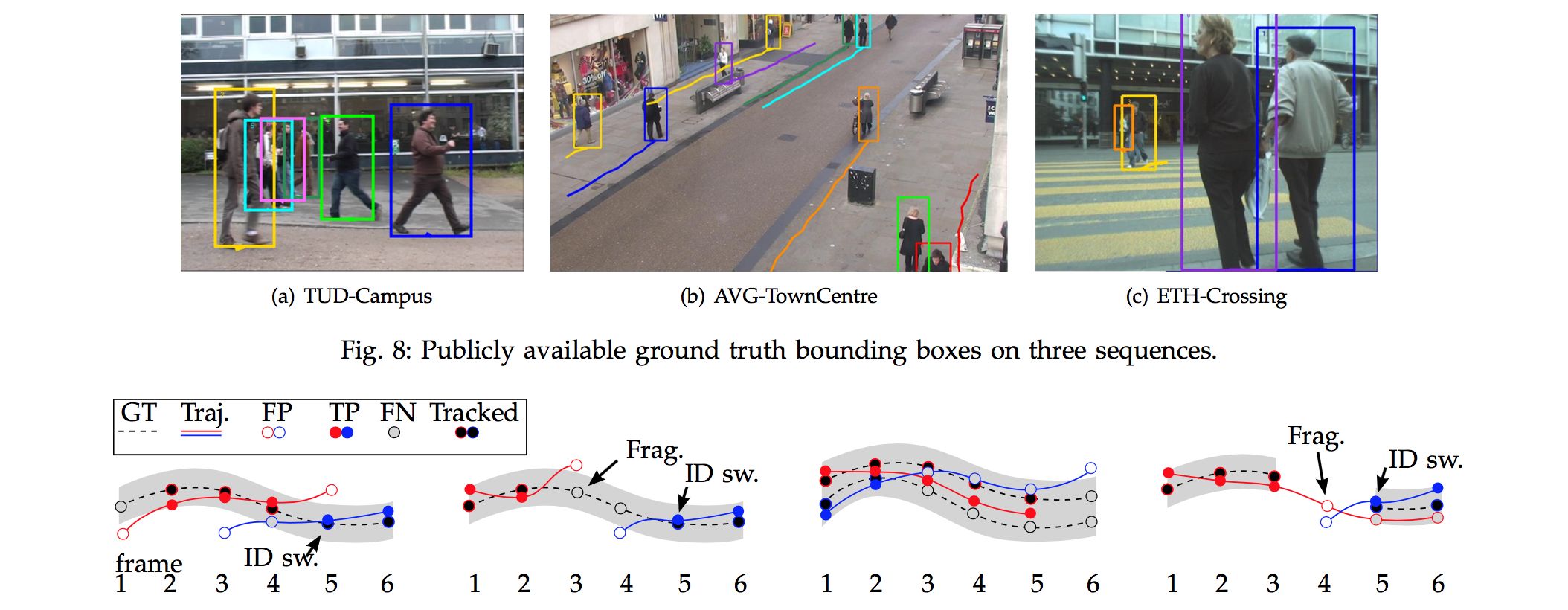

- Evaluated on TUD-Campus dataset

| Object Tracking Datasets | |||

|

CVPR 2008

Andriluka2008CVPR | |||

- Combining detection and tracking in a single framework

- Motivation:

- People detection in complex street scenes, but with frequent false positives

- Tracking for a particular individual, but challenged by crowded street scenes

- Extension of a state-of-the-art people detector with a limb-based structure model

- Hierarchical Gaussian process latent variable model (hGPLVM) to model dynamics of the individual limbs

- Prior knowledge on possible articulations

- Temporal coherency within a walking cycle

- HMM to extend the people-tracklets to possibly longer sequences

- Improved hypotheses for position and articulation of each person in several frames

- Detection and tracking of multiple people in cluttered scenes with reoccurring occlusions

- Evaluated on TUD-Campus dataset

| Object Tracking Methods | |||

|

CVPR 2011

Andriyenko2011CVPR | |||

- Existing methods limit the state space, either by per-frame non-maxima suppression or by discretizing locations to a coarse grid

- Contributions:

- Target locations are not bound to discrete object detections or grid positions, therefore defined in case of detector failure, and that there is no grid aliasing

- Proposes that convexity is not the primary requirement for a good cost function in the case of tracking.

- New minimization procedure is capable of exploring a much larger portion of the search space than standard gradient methods

- Evaluates on sequences from terrace1,terrace2, VS-PETS2009, TUD-Stadtmitte datasets

| Object Tracking Methods | |||

|

CVPR 2012

Andriyenko2012CVPR | |||

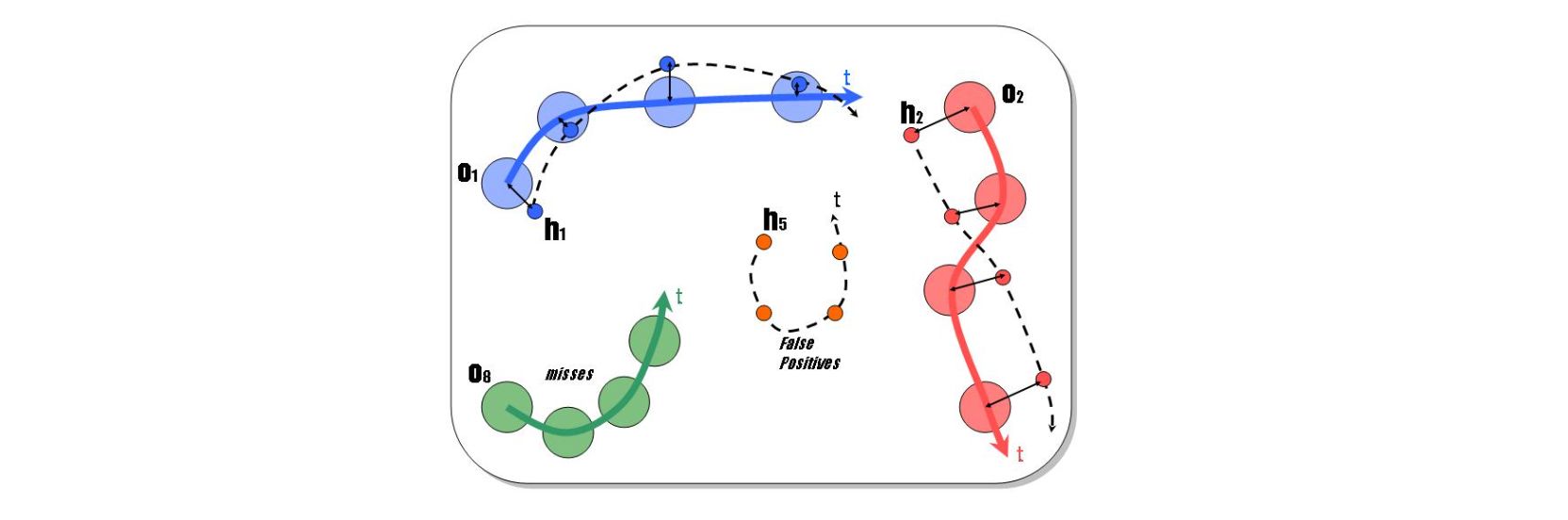

- Multi-target tracking consists of the discrete problem of data association and the continuous problem of trajectory estimation

- Both problems were tackled separately using precomputed trajectories for data association

- Discrete-continuous optimization that jointly addresses data association and trajectory estimation

- Continuous trajectory model using cubic B-splines

- Discrete association using a MRF that assigns each observation to a trajectory or identifies it as outlier

- Combined formulation with label costs to avoid too many trajectories

- Evaluation on the TUD datasets

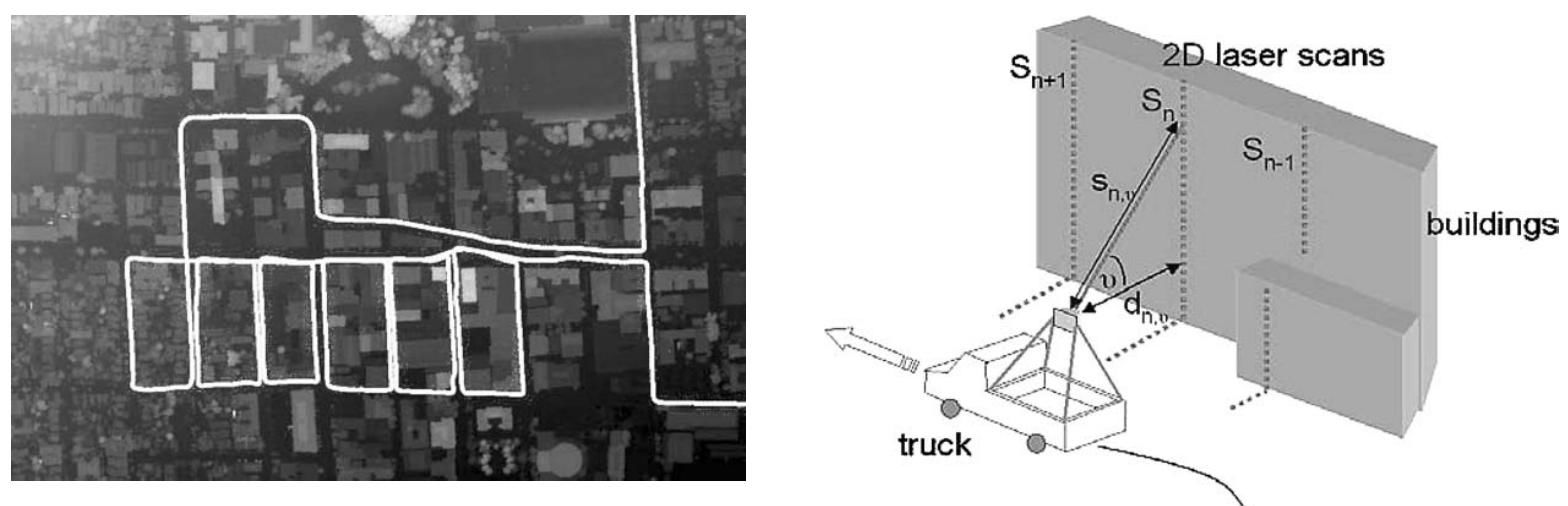

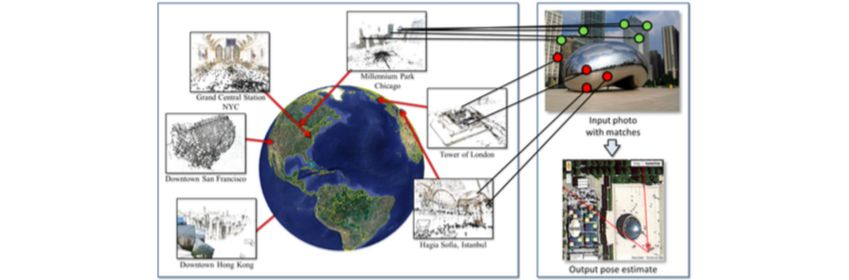

| Mapping, Localization & Ego-Motion Estimation Problem Definition | |||

|

COMPUTER 2010

Anguelov2010COMPUTER | |||

- Google Street View captures panoramic imagery of streets in hundreds of cities in 20 countries

- Technical challenges in capturing, processing, and serving street-level imagery

- Developed sophisticated hardware, software and operational processes

- Pose estimation using GPS, wheel encoder, and inertial with an online Kalman-filter-based algorithm

- Camera system consisting of 15 small cameras using 5 MP CMOS

- Laser range data is aggregated and simplified by fitting a coarse mesh

- Supports 3D navigation

| Mapping, Localization & Ego-Motion Estimation Mapping | |||

|

COMPUTER 2010

Anguelov2010COMPUTER | |||

- Google Street View captures panoramic imagery of streets in hundreds of cities in 20 countries

- Technical challenges in capturing, processing, and serving street-level imagery

- Developed sophisticated hardware, software and operational processes

- Pose estimation using GPS, wheel encoder, and inertial with an online Kalman-filter-based algorithm

- Camera system consisting of 15 small cameras using 5 MP CMOS

- Laser range data is aggregated and simplified by fitting a coarse mesh

- Supports 3D navigation

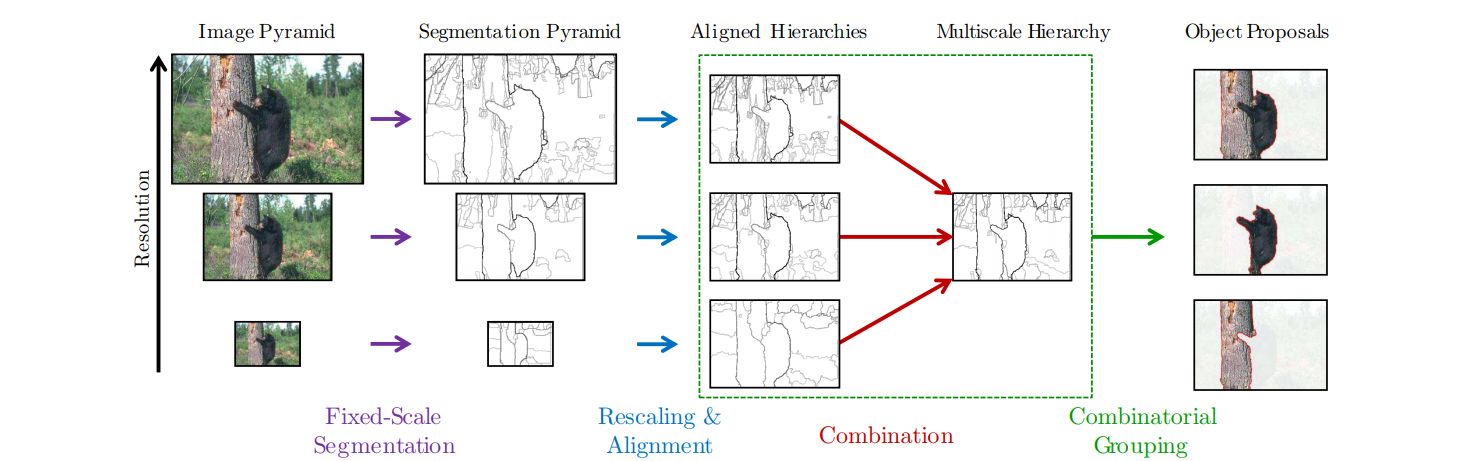

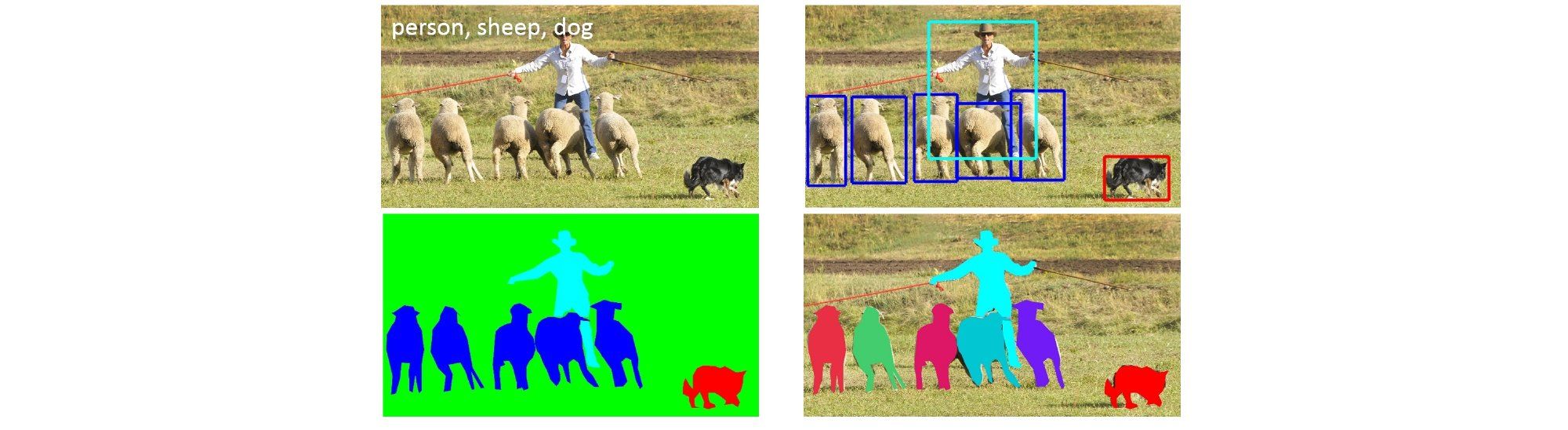

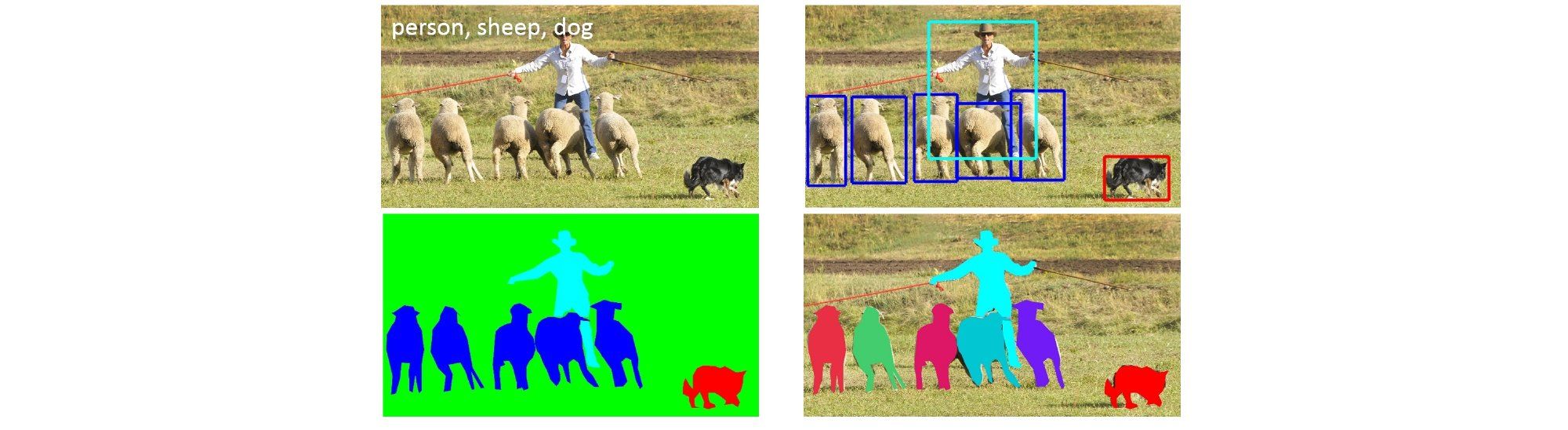

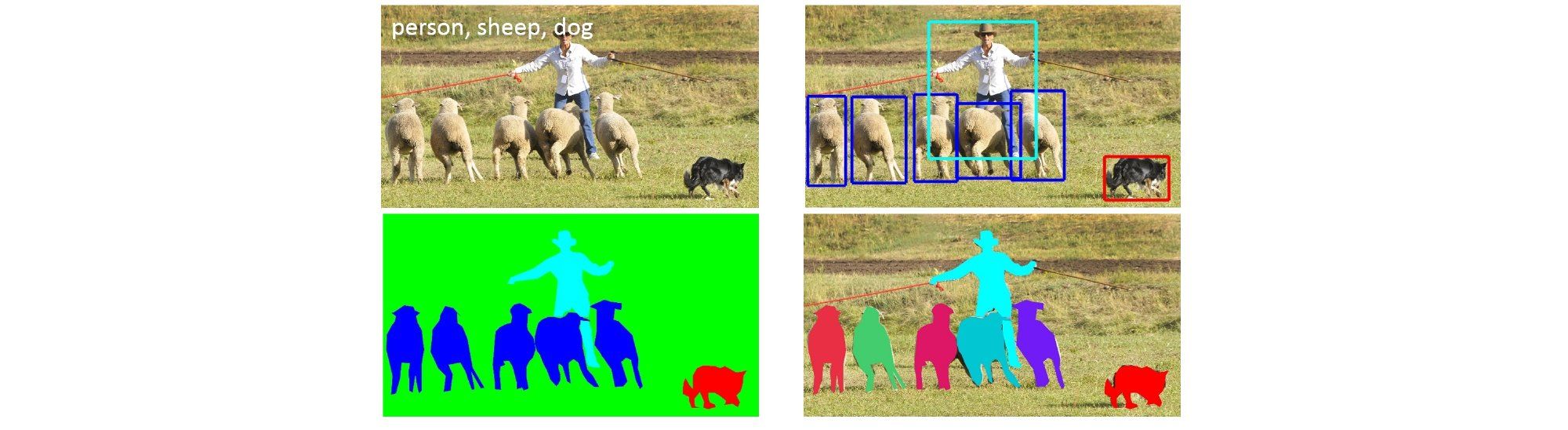

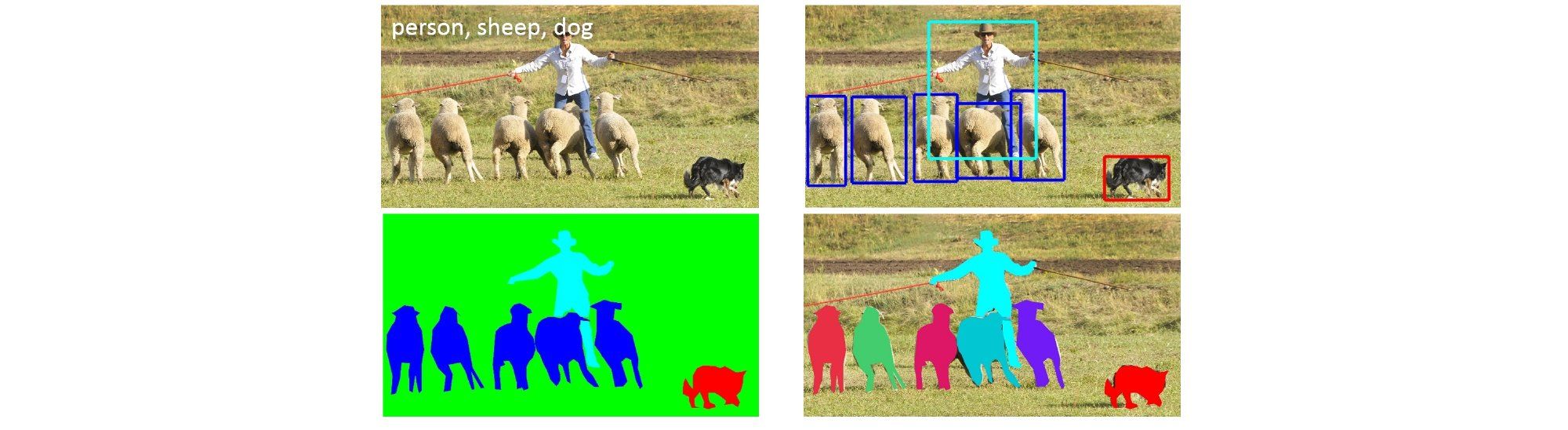

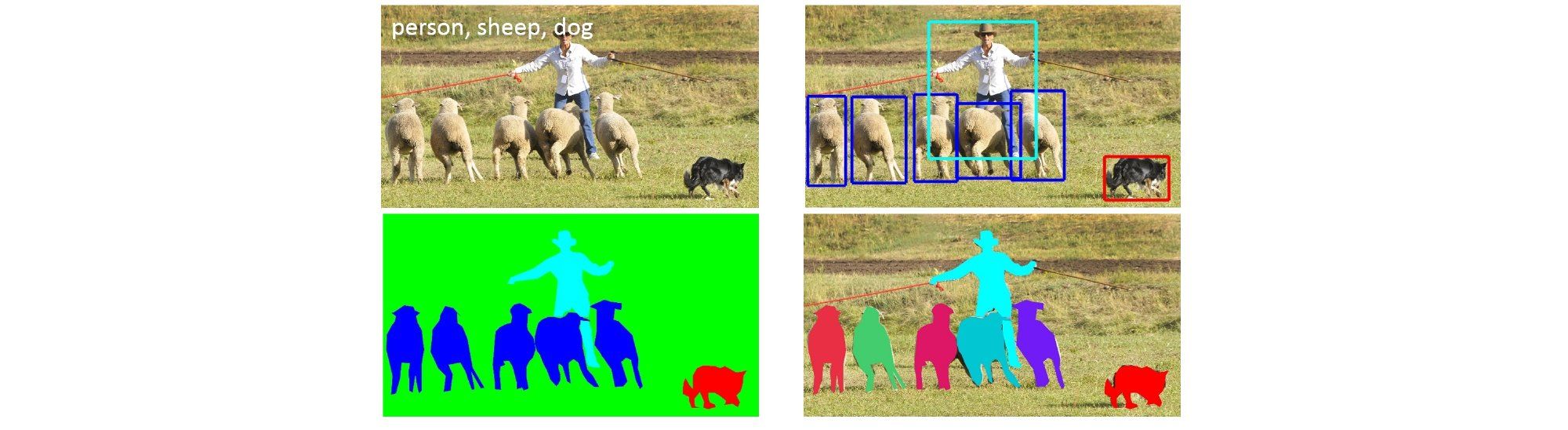

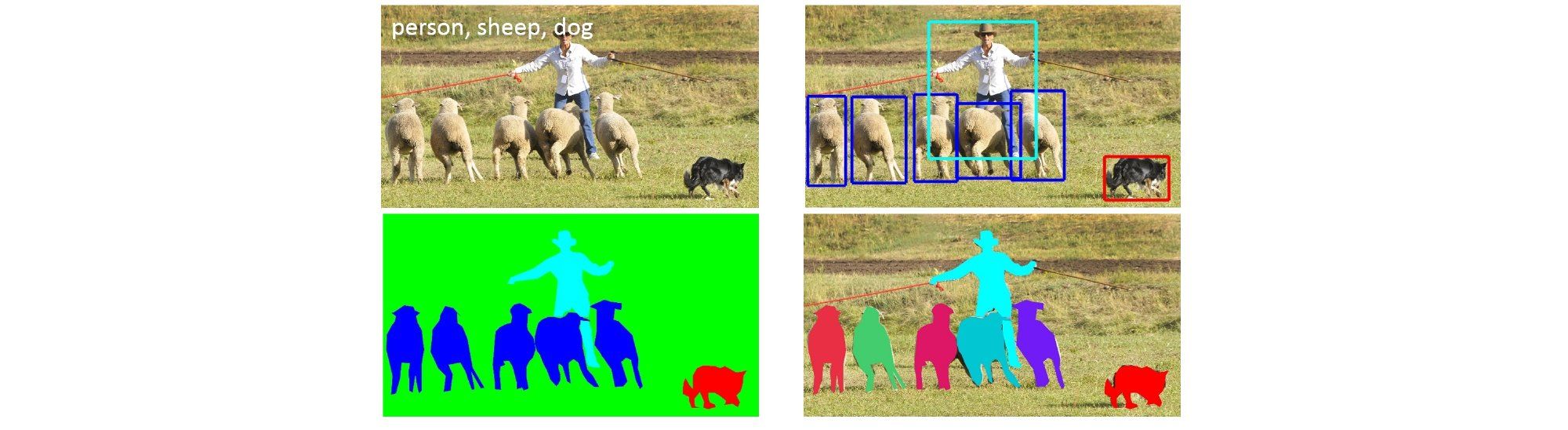

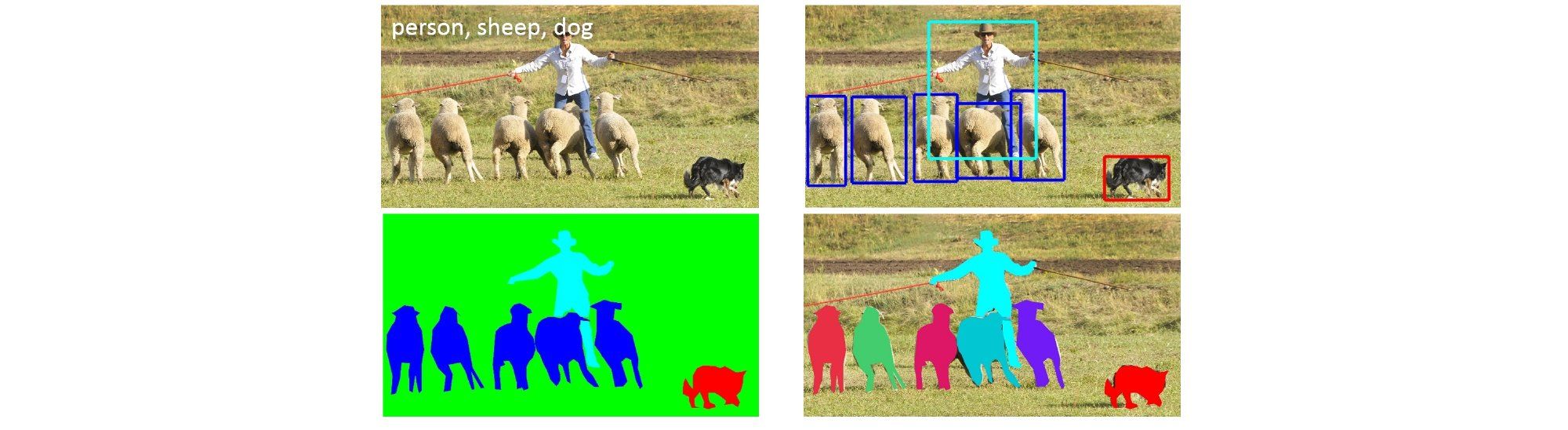

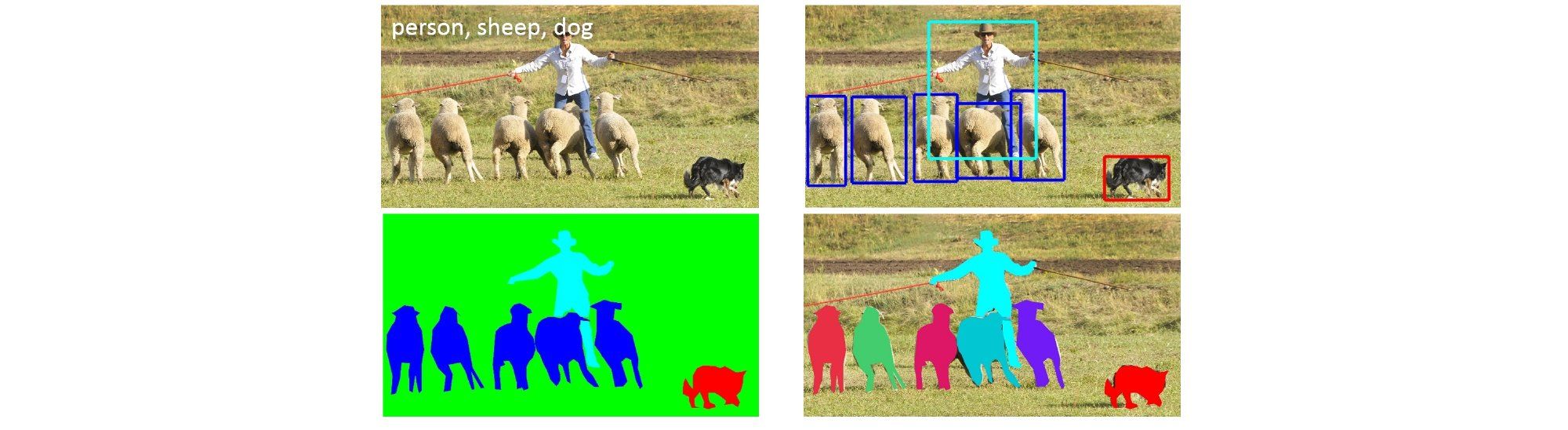

| Semantic Instance Segmentation Methods | |||

|

CVPR 2014

Arbelaez2014CVPR | |||

- Previous Proposal-based instance segmentation methods extract class-agnostic proposals which are classified as an instance of a certain semantic class in order to obtain pixel-level instance masks.

- This paper proposes a high-performance hierarchical segmenter that makes effective use of multiscale information.

- Propose a grouping strategy that combines multiscale regions into highly-accurate object candidates by exploring efficiently their combinatorial space

- The Region proposal method proposed in this paper can be directly used as instance segments.

- Demonstrate performance on BSDS500, VOC12 datasets.

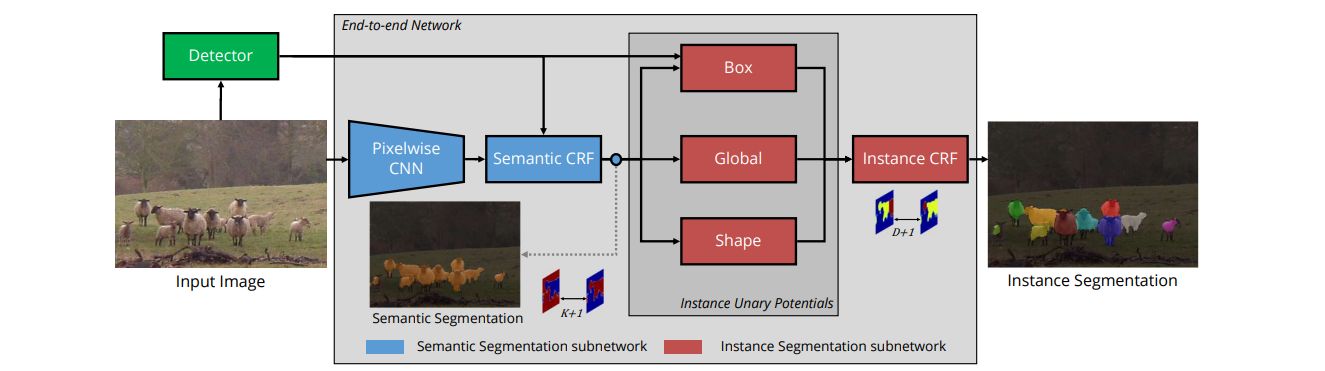

| Semantic Instance Segmentation Methods | |||

|

CVPR 2017

Arnab2017CVPR | |||

- Propose an Instance Segmentation system that produces a segmentation map where each pixel is assigned an object class and instance identity label.

- Most previous approaches adapt object detectors to produce segments instead of boxes.

- In contrast, their method is based on an initial semantic segmentation module, which feeds into an instance subnetwork.

- This subnetwork uses the initial category-level segmentation, along with cues from the output of an object detector, within an end-to-end CRF to predict instances.

- The end-to-end approach requires no post-processing and considers the image holistically, instead of processing independent proposals.

- Therefore, unlike some previous work, a pixel cannot belong to multiple instances.

- Demonstrate performance on cityscapes, PASCAL VOC and Semantic Boundaries Dataset (SBD) datasets.

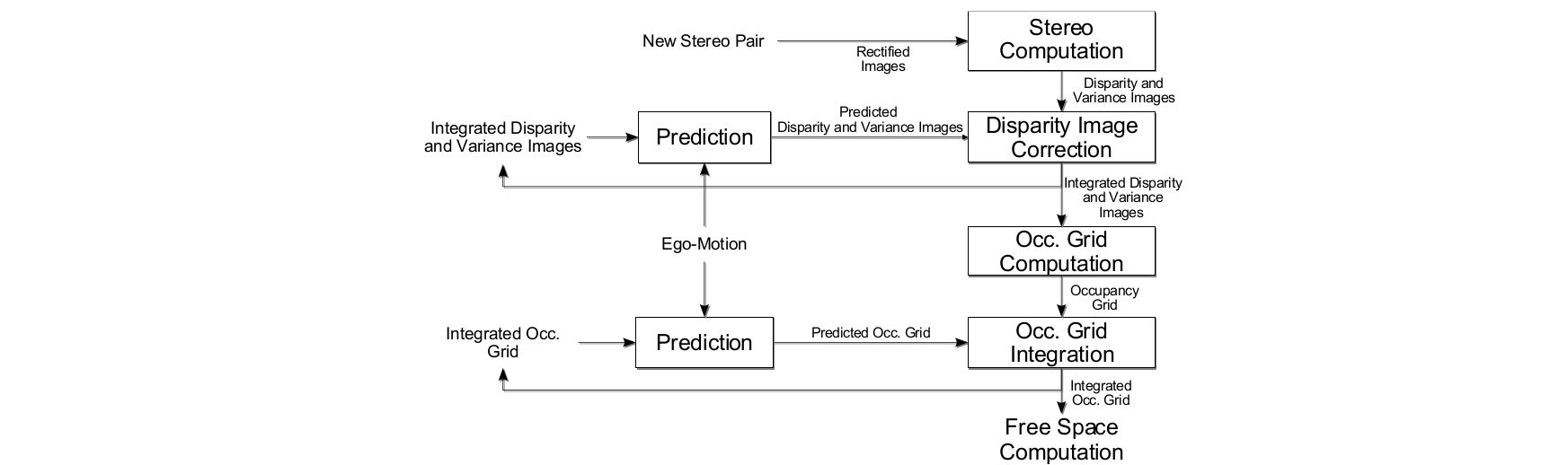

| Semantic Segmentation Methods | |||

|

ICCVWORK 2007

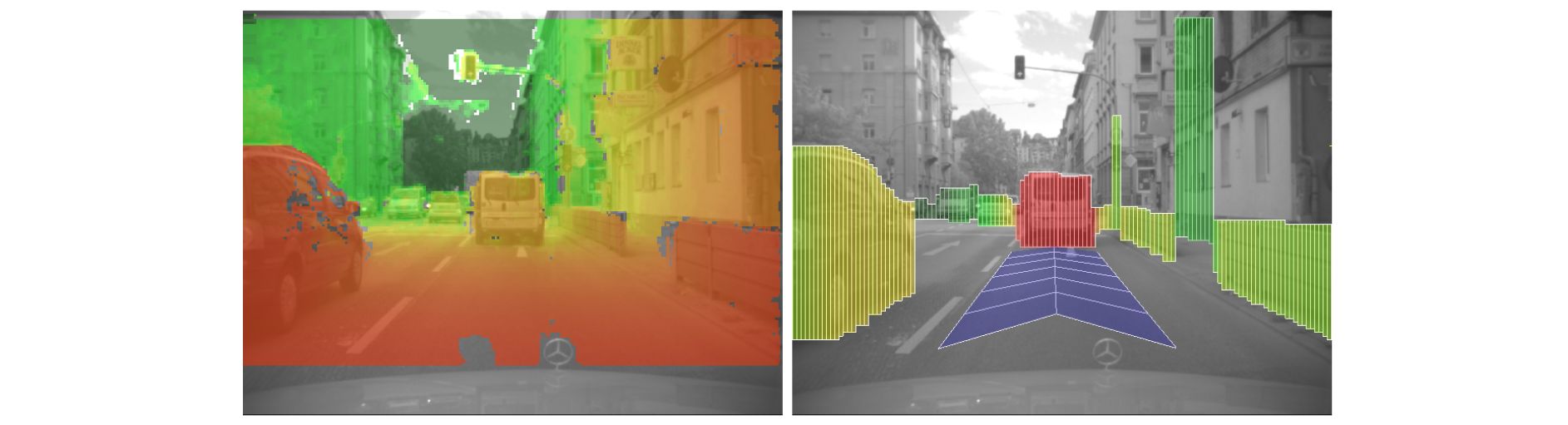

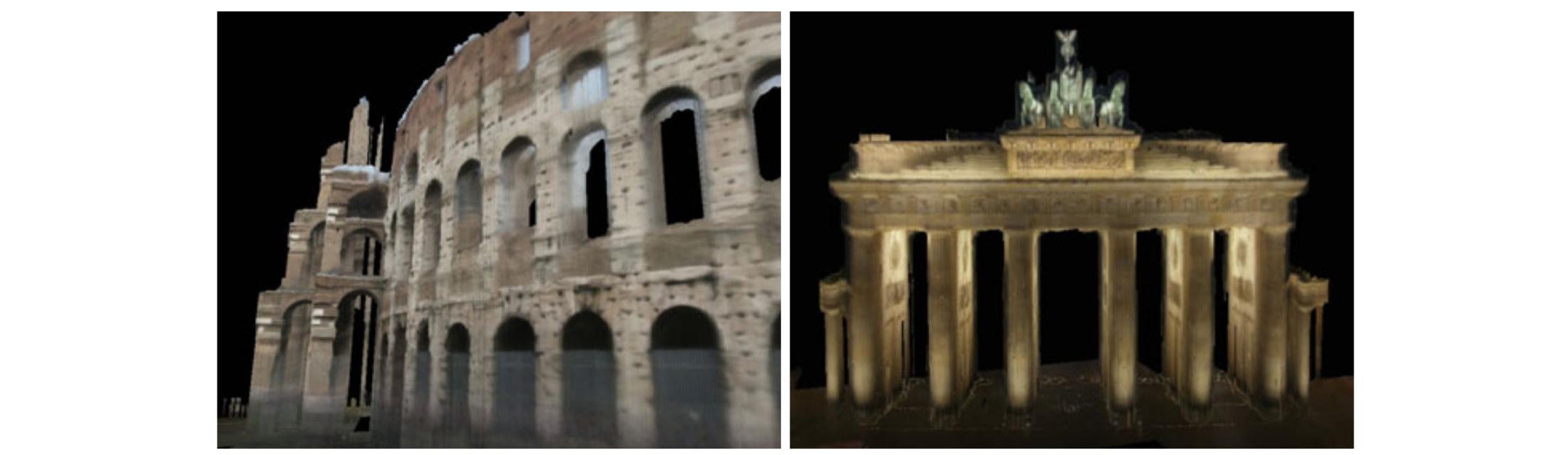

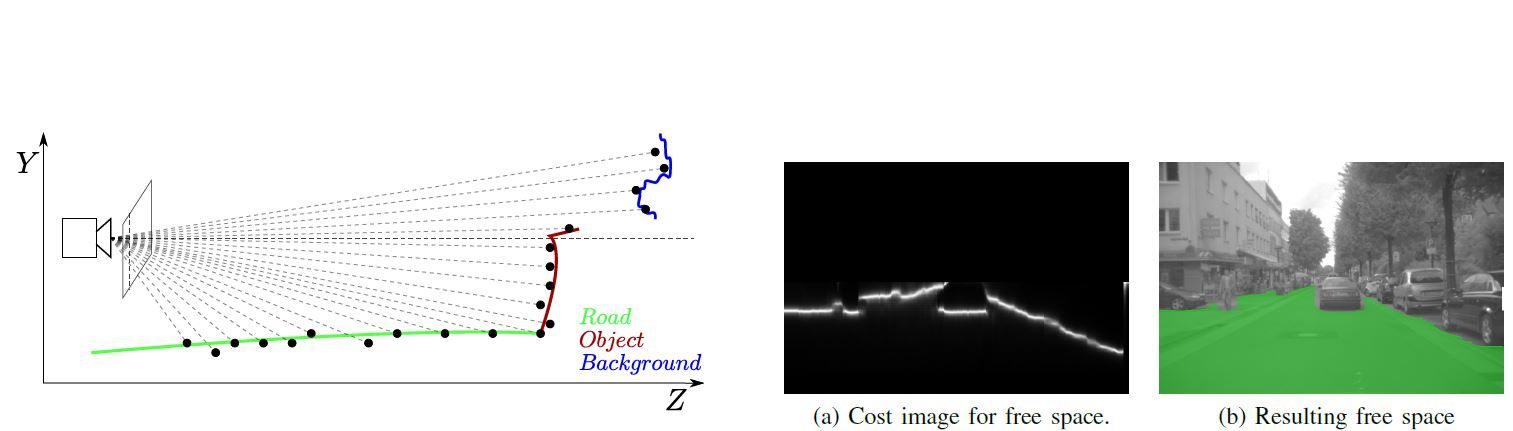

Badino2007ICCVWORK | |||

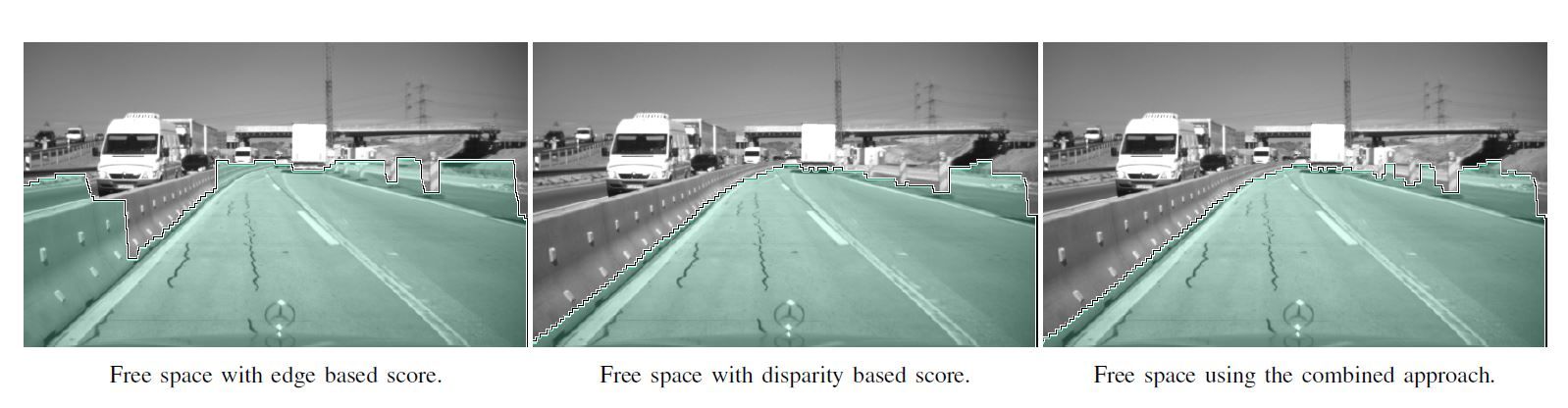

- The free space is the world regions where navigation without collision is guaranteed

- Contributions:

- Presents a method for the computation of free space with stochastic occupancy grids

- Stereo measurements are integrated over time reducing disparity uncertainty.

- These integrated measurements are entered into an occupancy grid, taking into account the noise properties of the measurements

- Defines three types of grids and discusses their benefits and drawbacks

- Applies dynamic programming to a polar occupancy grid, to find the optimal segmentation between free and occupied regions

- Evaluates on stereo sequences introduced in the paper

| Semantic Segmentation Methods | |||

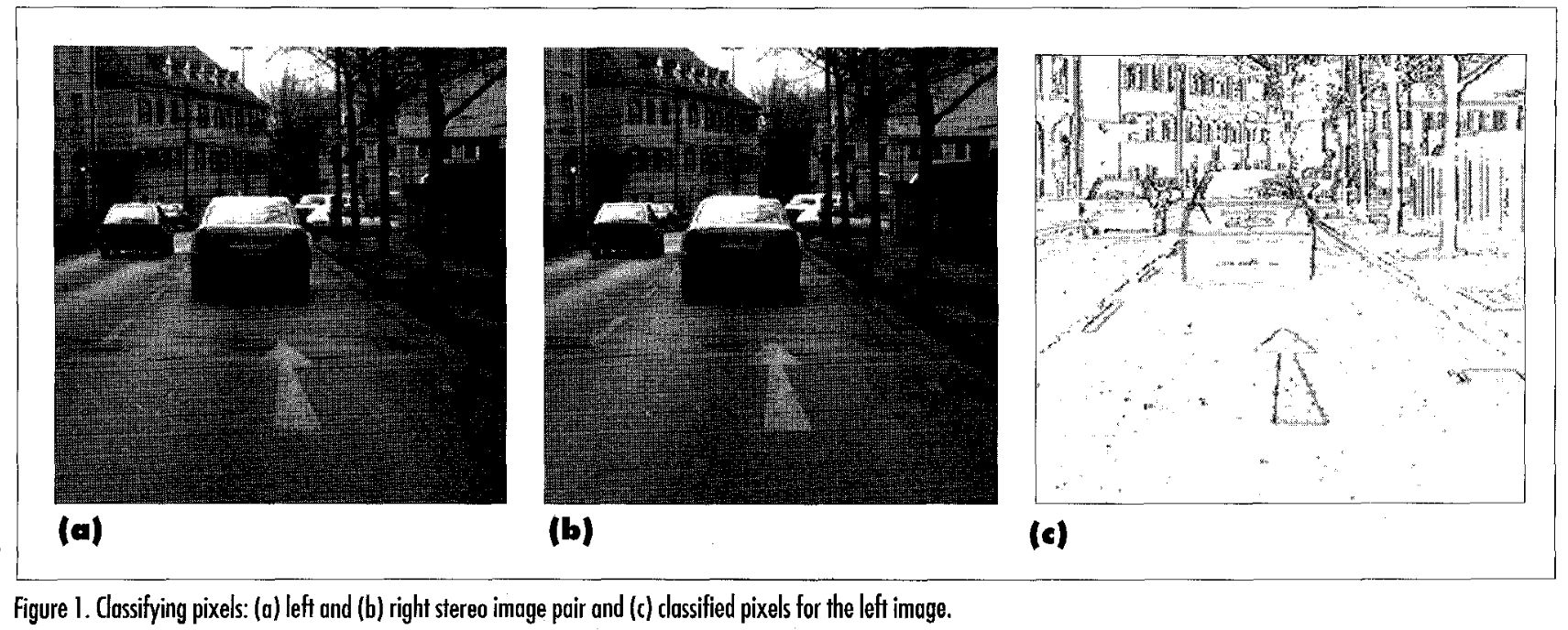

|

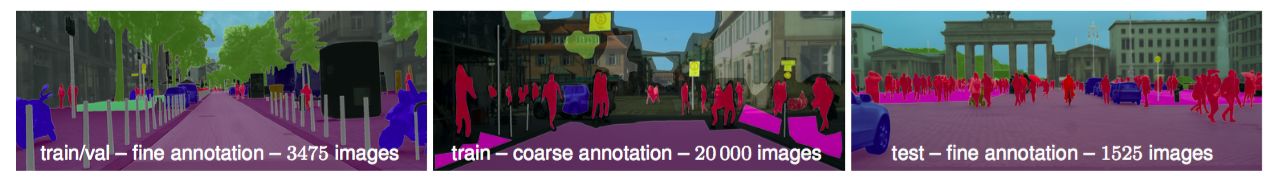

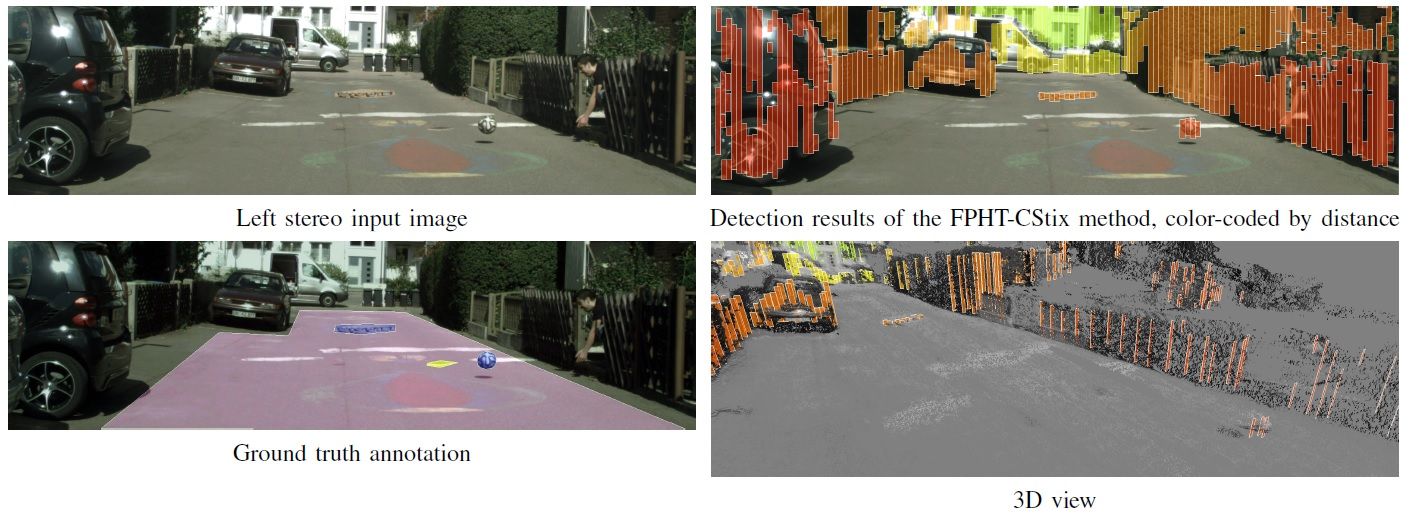

DAGM 2009

Badino2009DAGM | |||

- Motivation: Develop a compact, flexible representation of the 3D traffic situation that can be used for the scene understanding tasks of driver assistance and autonomous systems

- Contributions:

- Introduces a new primitive, a set of rectangular sticks called stixel for modeling 3D scenes

- Each stixel is defined by its 3D position relative to the camera and stands vertically on the ground, having a certain height

- Each stixel limits the free space and approximates the object boundaries

- Stochastic occupancy grids are computed from dense stereo information

- Free space is computed from a polar representation of the occupancy grid

- The height of the stixels is obtained by segmenting the disparity image in foreground and background disparities

| Mapping, Localization & Ego-Motion Estimation Localization | |||

|

ICRA 2012

Badino2012ICRA | |||

- Autonomous vehicles must be capable of localizing in GPS denied situations

- Topometric localization which combines topological with metric localization

- Build compact database of simple visual and 3D features with GPS equipped vehicle

- Whole image SURF descriptor, a vector containing gradient information of entire image

- Range mean and standard deviation descriptor

- Localization using a Bayesian filter to match visual and range measurements to the database

- Algorithm is reliable across wide environmental change, including lighting difference, seasonal variations

- Evaluation using a vehicle with mounted video cameras and LIDAR

- Achieving an average localization accuracy of 1 m on an 8 km route

| Mapping, Localization & Ego-Motion Estimation Datasets | |||

|

ICRA 2012

Badino2012ICRA | |||

- Autonomous vehicles must be capable of localizing in GPS denied situations

- Topometric localization which combines topological with metric localization

- Build compact database of simple visual and 3D features with GPS equipped vehicle

- Whole image SURF descriptor, a vector containing gradient information of entire image

- Range mean and standard deviation descriptor

- Localization using a Bayesian filter to match visual and range measurements to the database

- Algorithm is reliable across wide environmental change, including lighting difference, seasonal variations

- Evaluation using a vehicle with mounted video cameras and LIDAR

- Achieving an average localization accuracy of 1 m on an 8 km route

| Semantic Segmentation Methods | |||

|

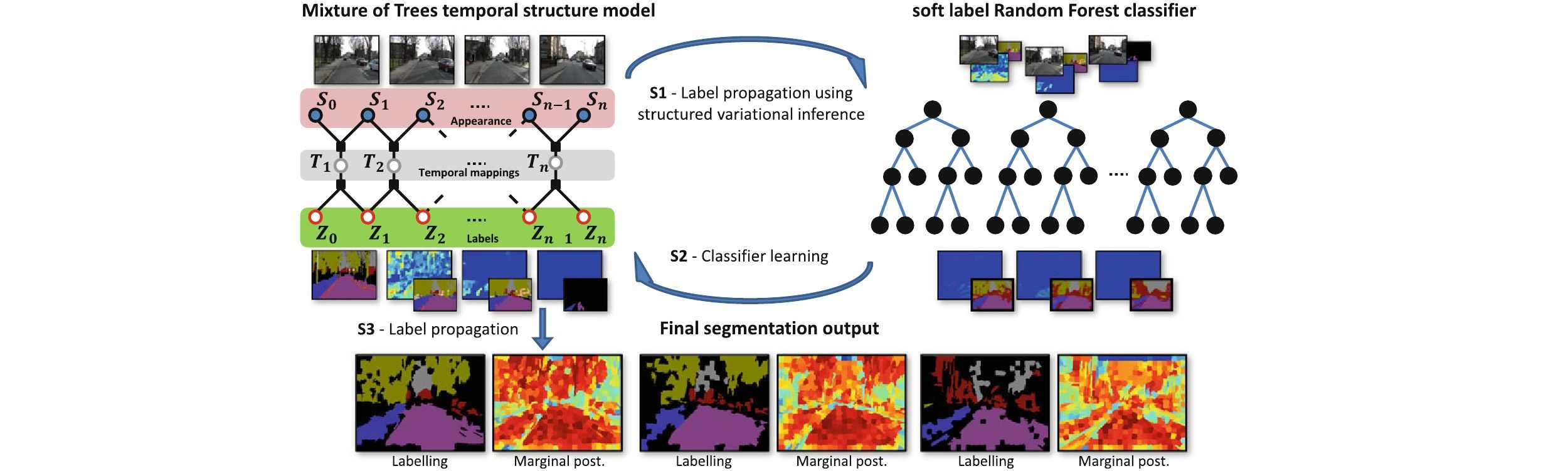

IJCV 2014

Badrinarayanan2014IJCV | |||

- Mixture of trees probabilistic graphical model for semi-supervised video segmentation

- Each component represents a tree structured temporal linkage between super-pixels from first to last frame

- Variational inference scheme for this model to estimate super-pixel labels and the confidence

- Structured variational inference without unaries to estimate super-pixel marginal posteriors

- Training a soft label Random Forest classifier with pixel marginal posteriors

- Predictions are injected back as unaries in the second iteration of label inference

- Inference over full video volume which helps to avoid erroneous label propagation

- Very efficient in term of computational speed and memory usage and can be used in real time

- Evaluation using the challenging SegTrack dataset (binary segmentation), CamVid driving video dataset(multi-class segmentation)

| Semantic Segmentation Methods | |||

|

CVPR 2010

Badrinarayanan2010CVPR | |||

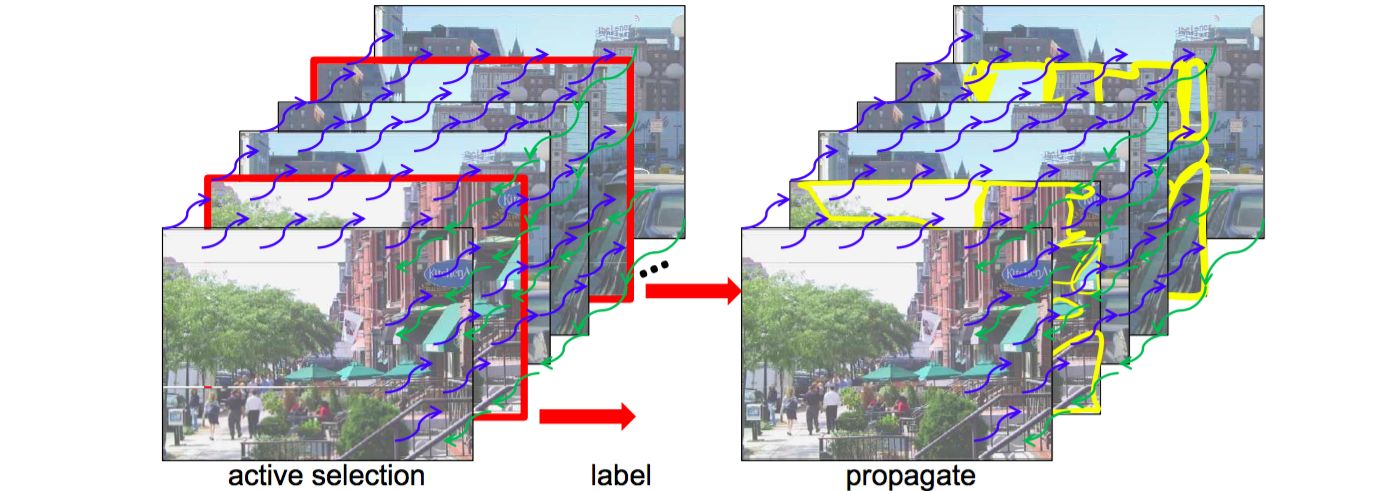

- Labelling of video sequences is expensive

- Hidden Markov Model for label propagation in video sequences

- Using a limited amount of hand labelled pixels

- Optic Flow based, image patches based, semantic regions based label propagation

- Short sequences naive optic flow based propagation is sufficient otherwise more sophisticated models necessary

- Evaluation by training Random forest classifier for video segmentation with ground truth and data from label propagation

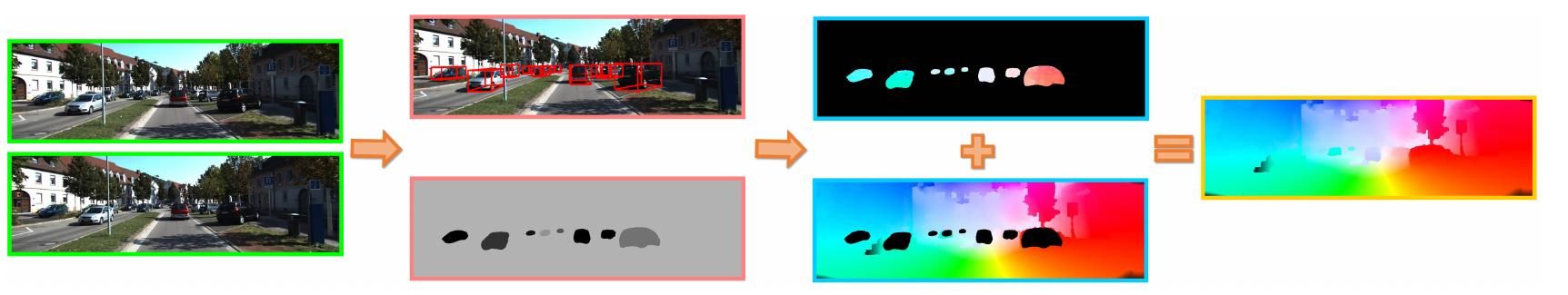

| Optical Flow Methods | |||

|

ECCV 2016

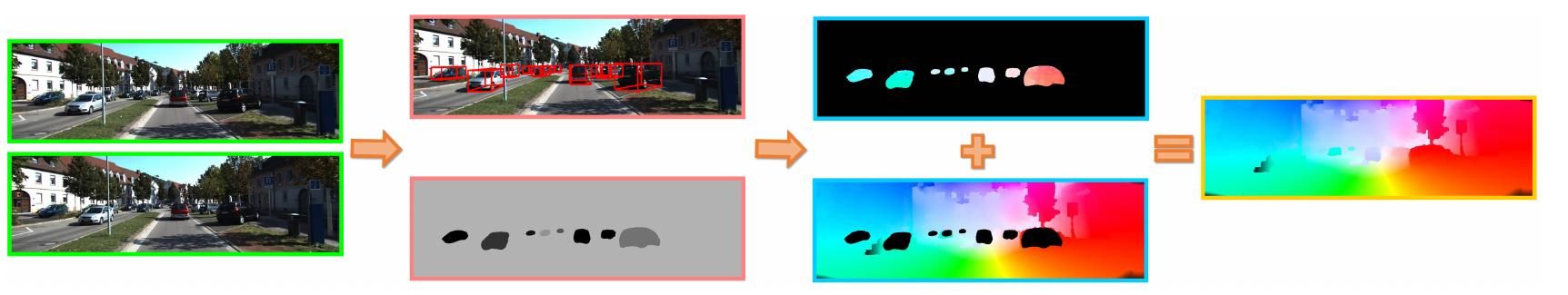

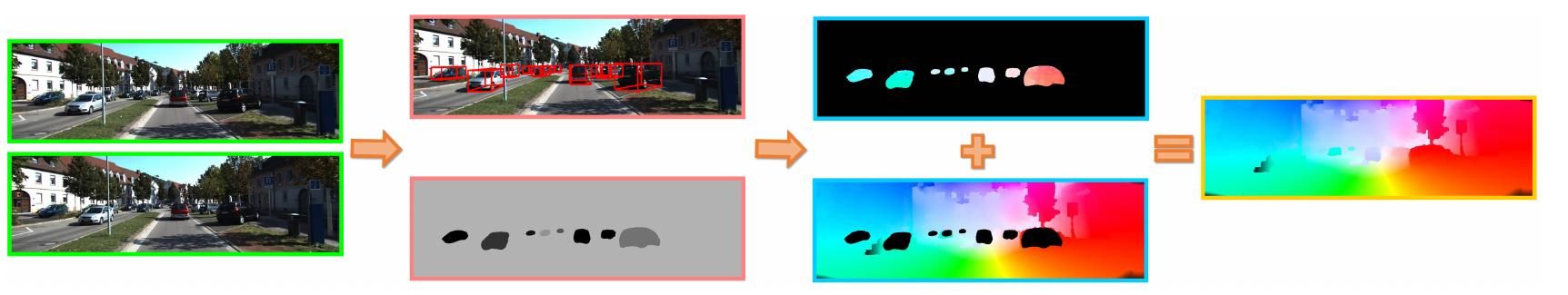

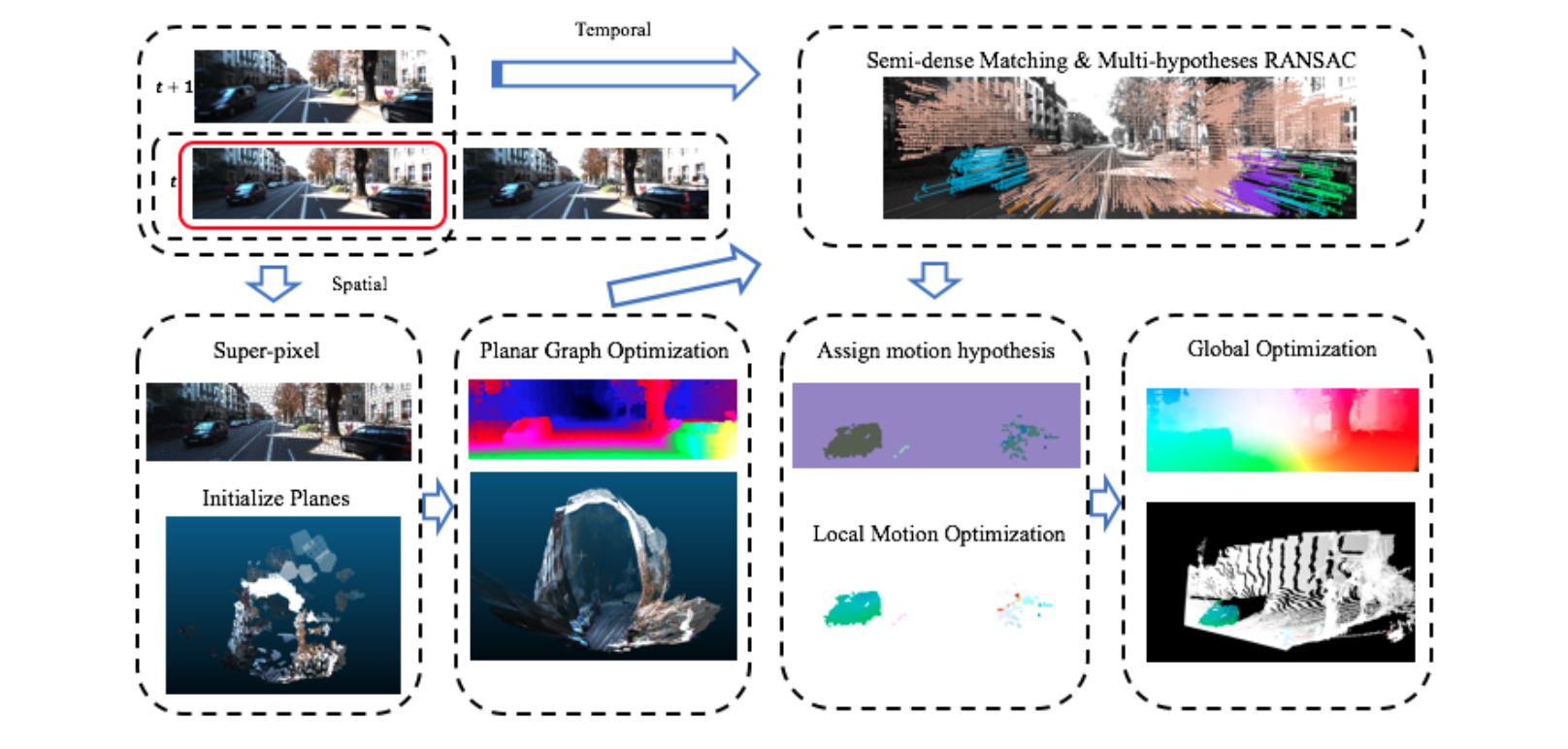

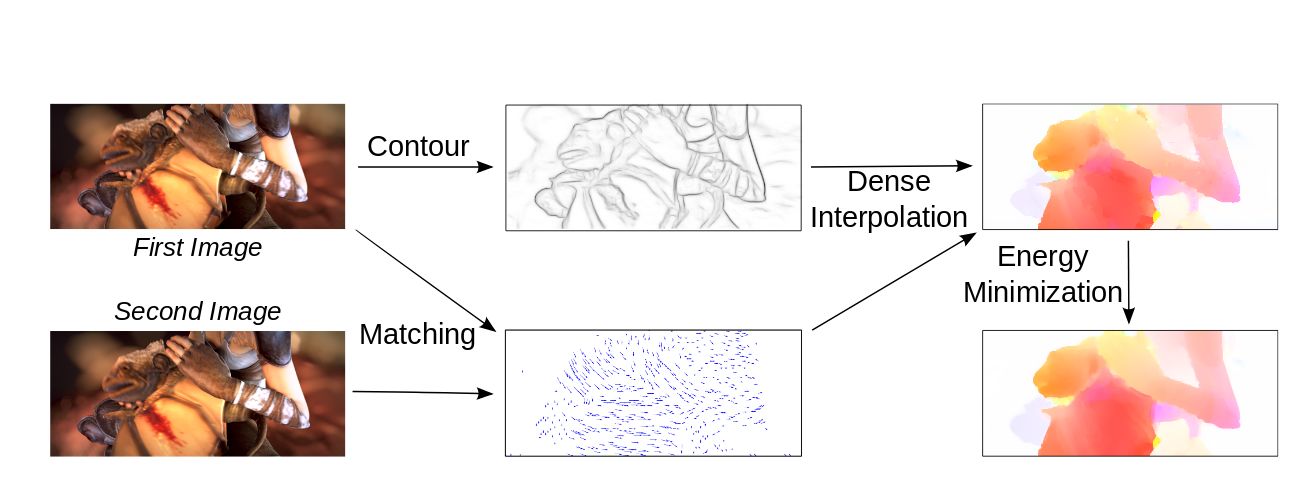

Bai2016ECCV | |||

- Optical flow for autonomous driving

- Assumptions

- Static background

- Small number of rigidly moving objects

- Foreground/background segmentation using semantic segmentation network in combination with 3D object detection

- Propose a siamese network with product layer that learns flow matching with uncertainty

- Restrict the flow matches to lie on its epipolar line

- Slanted plane model for background flow estimation

- Evaluation on KITTI 2015

| Optical Flow State of the Art on KITTI | |||

|

ECCV 2016

Bai2016ECCV | |||

- Optical flow for autonomous driving

- Assumptions

- Static background

- Small number of rigidly moving objects

- Foreground/background segmentation using semantic segmentation network in combination with 3D object detection

- Propose a siamese network with product layer that learns flow matching with uncertainty

- Restrict the flow matches to lie on its epipolar line

- Slanted plane model for background flow estimation

- Evaluation on KITTI 2015

| Optical Flow Discussion | |||

|

ECCV 2016

Bai2016ECCV | |||

- Optical flow for autonomous driving

- Assumptions

- Static background

- Small number of rigidly moving objects

- Foreground/background segmentation using semantic segmentation network in combination with 3D object detection

- Propose a siamese network with product layer that learns flow matching with uncertainty

- Restrict the flow matches to lie on its epipolar line

- Slanted plane model for background flow estimation

- Evaluation on KITTI 2015

| Datasets & Benchmarks | |||

|

IJCV 2011

Baker2011IJCV | |||

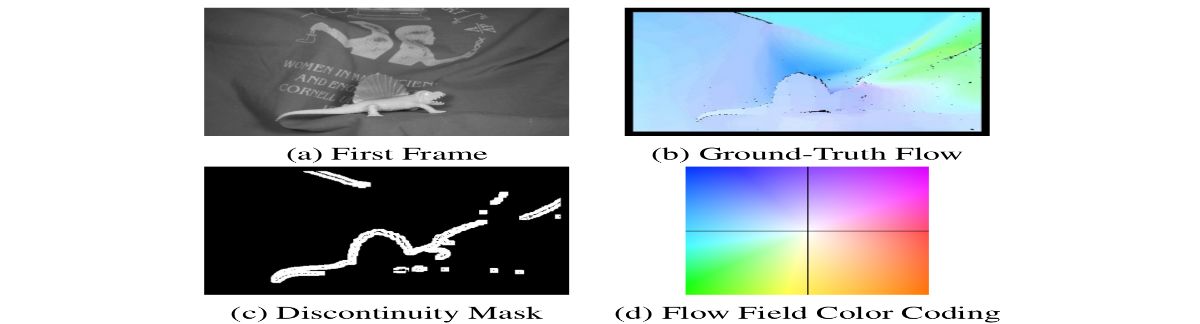

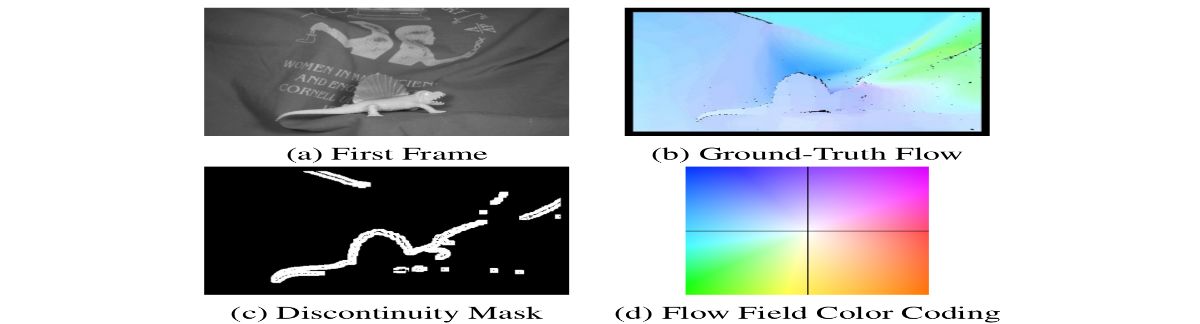

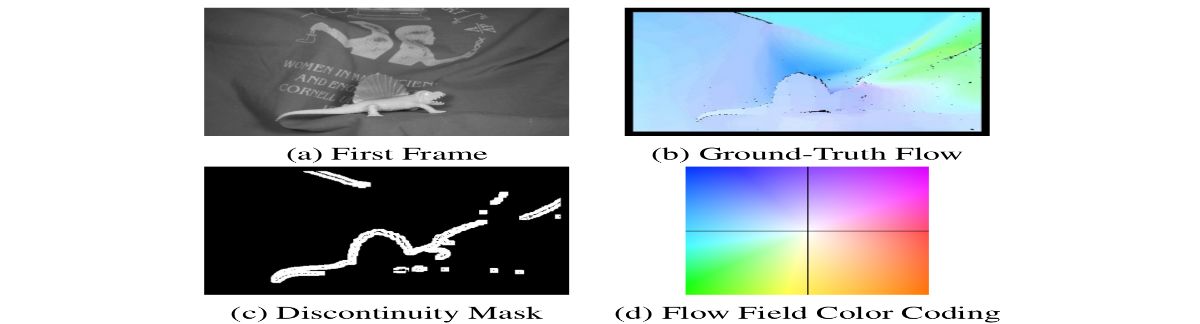

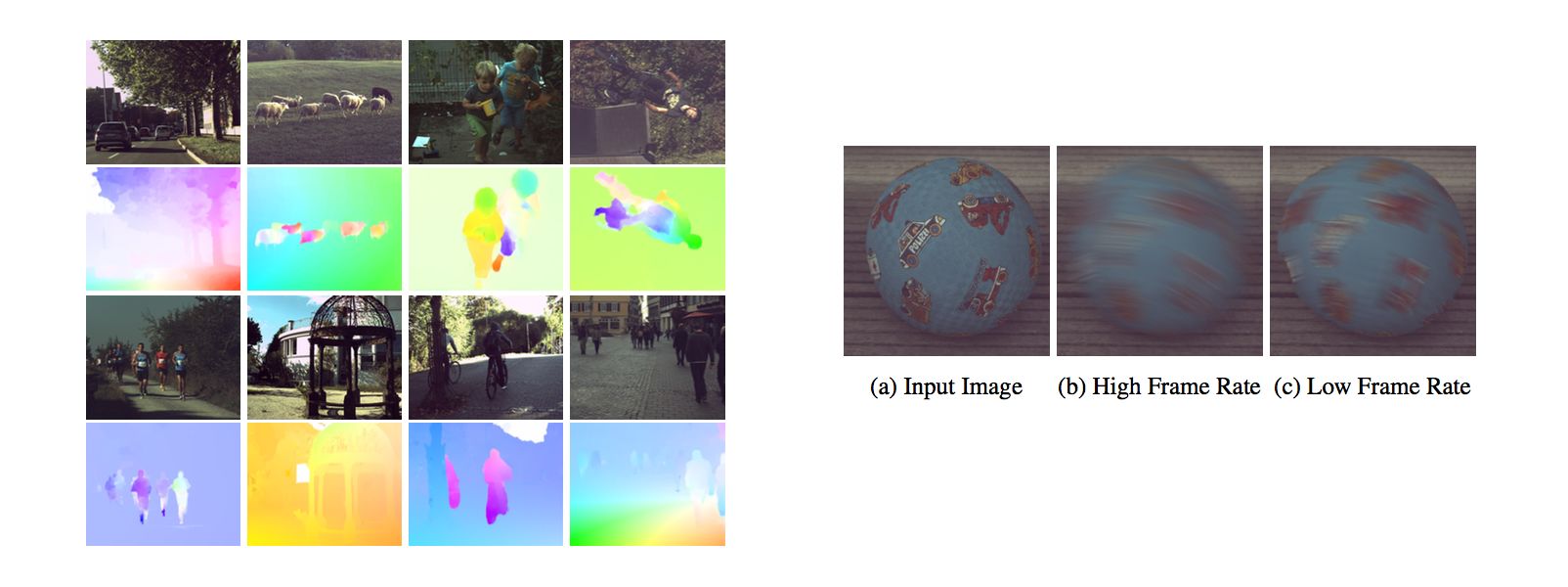

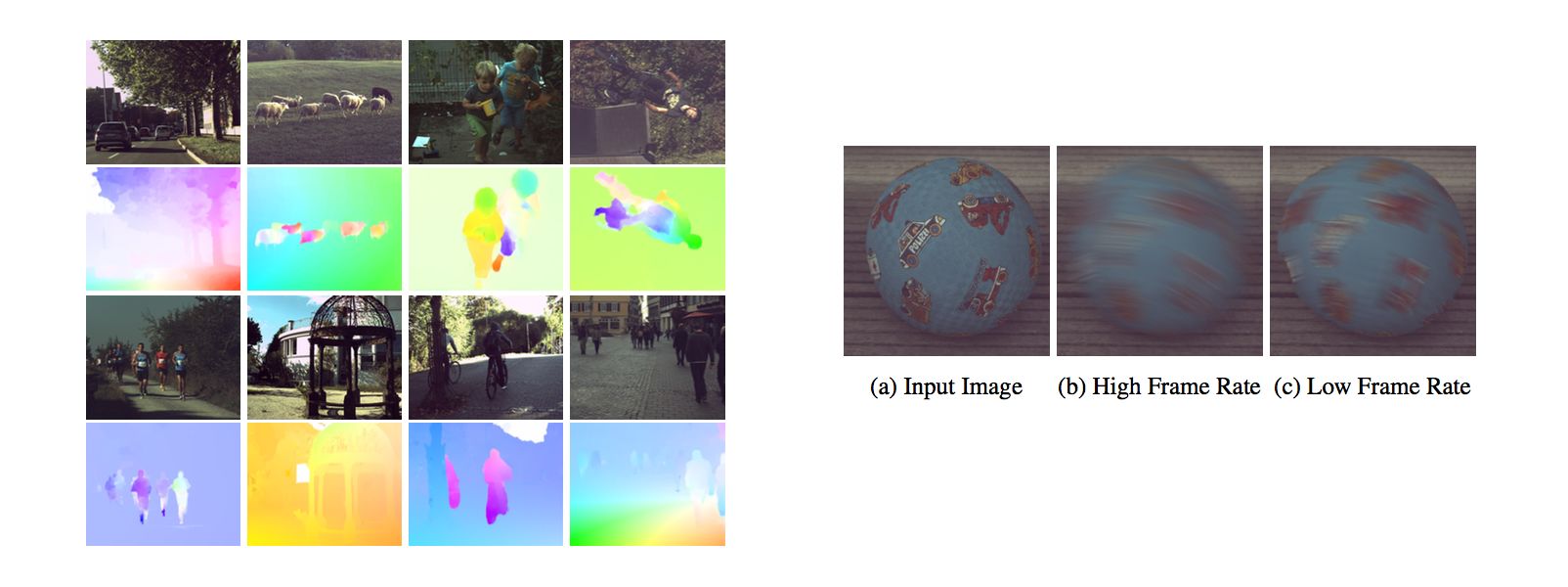

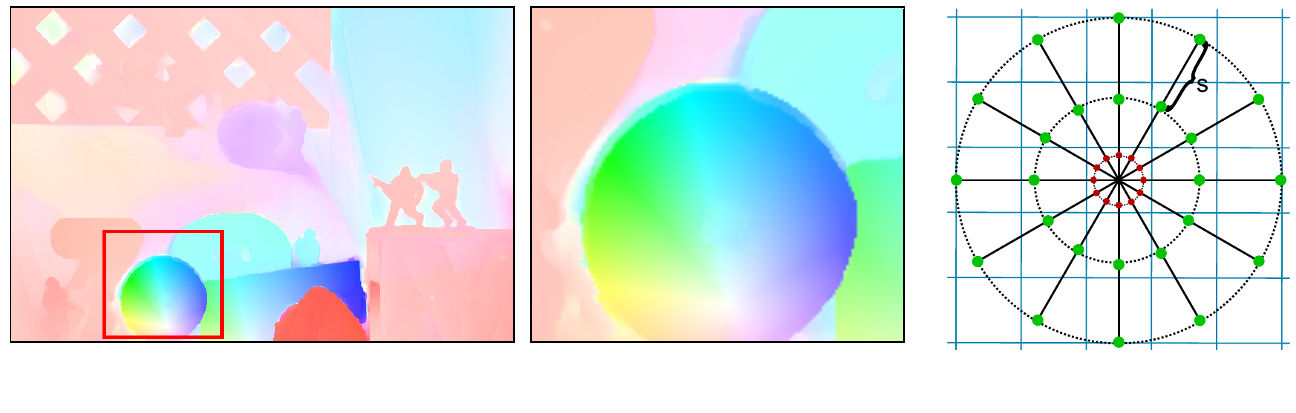

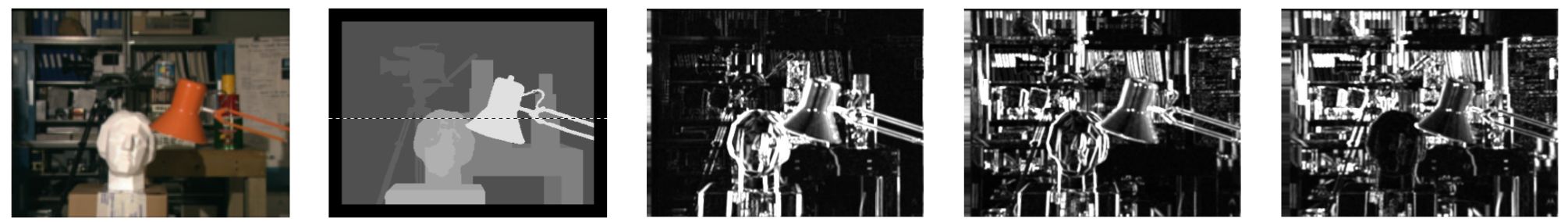

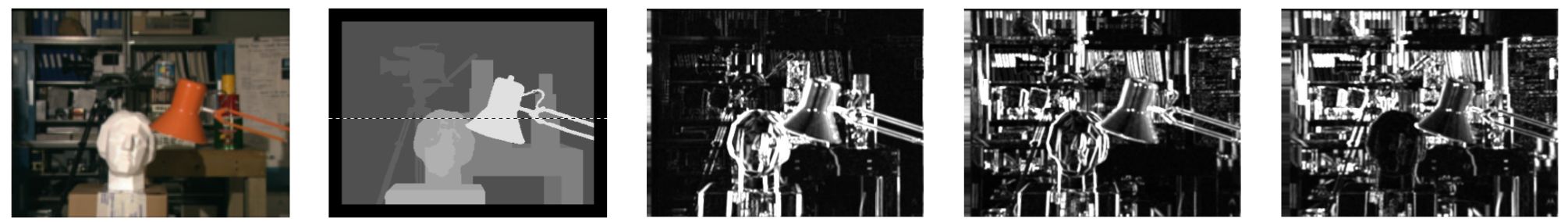

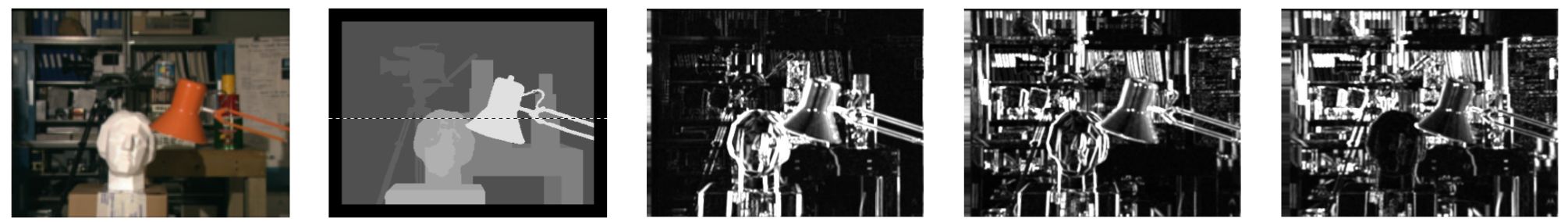

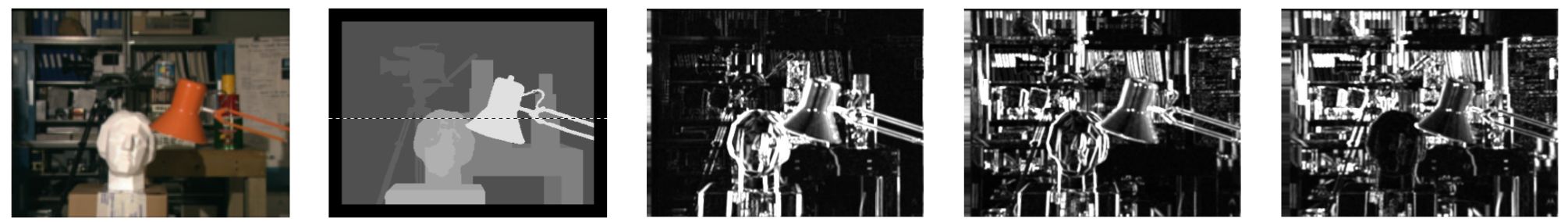

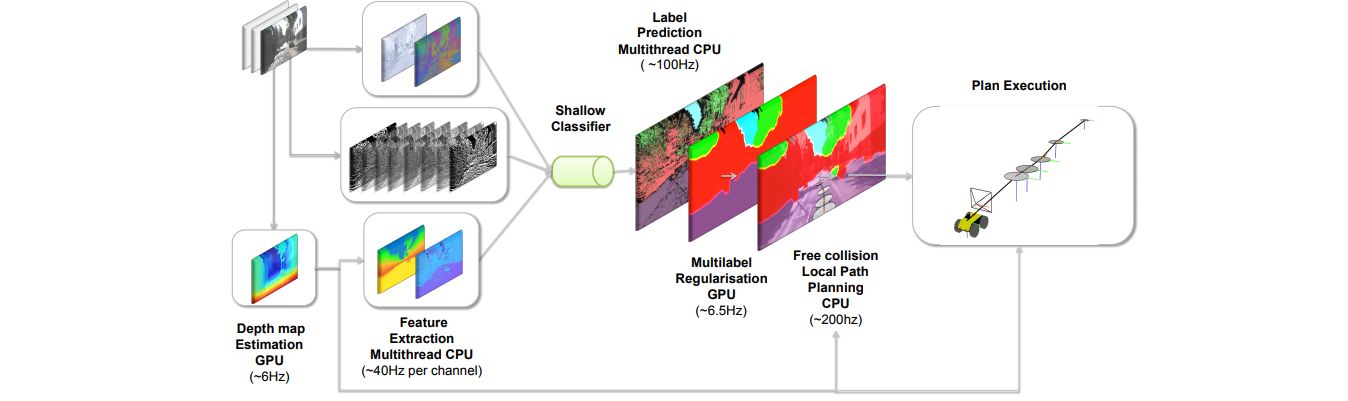

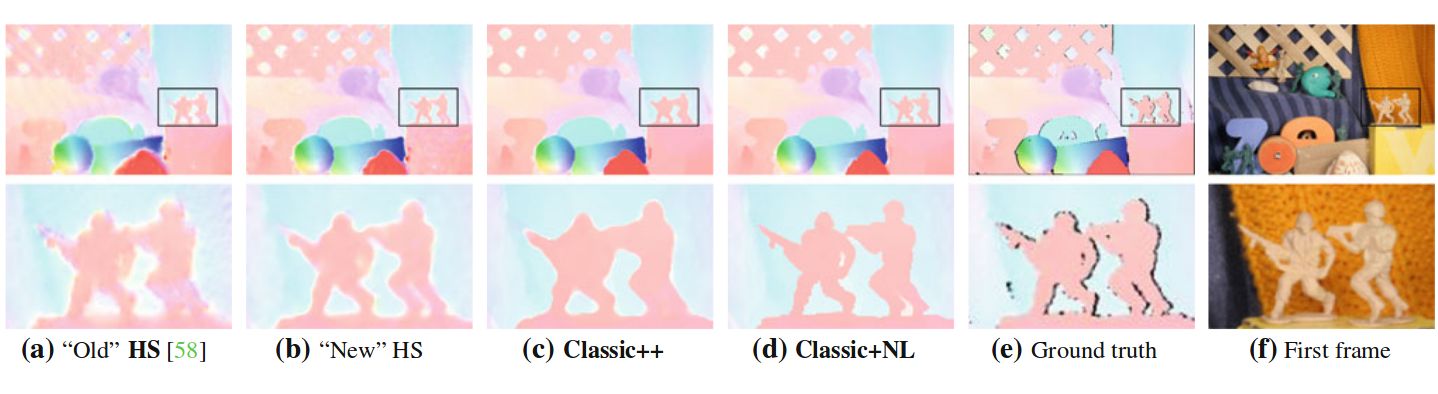

- Presents a collection of datasets for the evaluation of optical flow algorithms

- Contributes four types of data to test different aspects of optical flow algorithms:

- Sequences with nonrigid motion where the ground-truth flow is determined by tracking hidden fluorescent texture

- Realistic synthetic sequences - addresses the limitations of previous dataset sequences by rendering more complex scenes with significant motion discontinuities and textureless regions

- High frame-rate video used to study interpolation error

- Modified stereo sequences of static scenes for optical flow

- Evaluates a number of well-known flow algorithms to characterize the current state of the art

- Extendes the set of evaluation measures and improved the evaluation methodology

| Datasets & Benchmarks Computer Vision Datasets | |||

|

IJCV 2011

Baker2011IJCV | |||

- Presents a collection of datasets for the evaluation of optical flow algorithms

- Contributes four types of data to test different aspects of optical flow algorithms:

- Sequences with nonrigid motion where the ground-truth flow is determined by tracking hidden fluorescent texture

- Realistic synthetic sequences - addresses the limitations of previous dataset sequences by rendering more complex scenes with significant motion discontinuities and textureless regions

- High frame-rate video used to study interpolation error

- Modified stereo sequences of static scenes for optical flow

- Evaluates a number of well-known flow algorithms to characterize the current state of the art

- Extendes the set of evaluation measures and improved the evaluation methodology

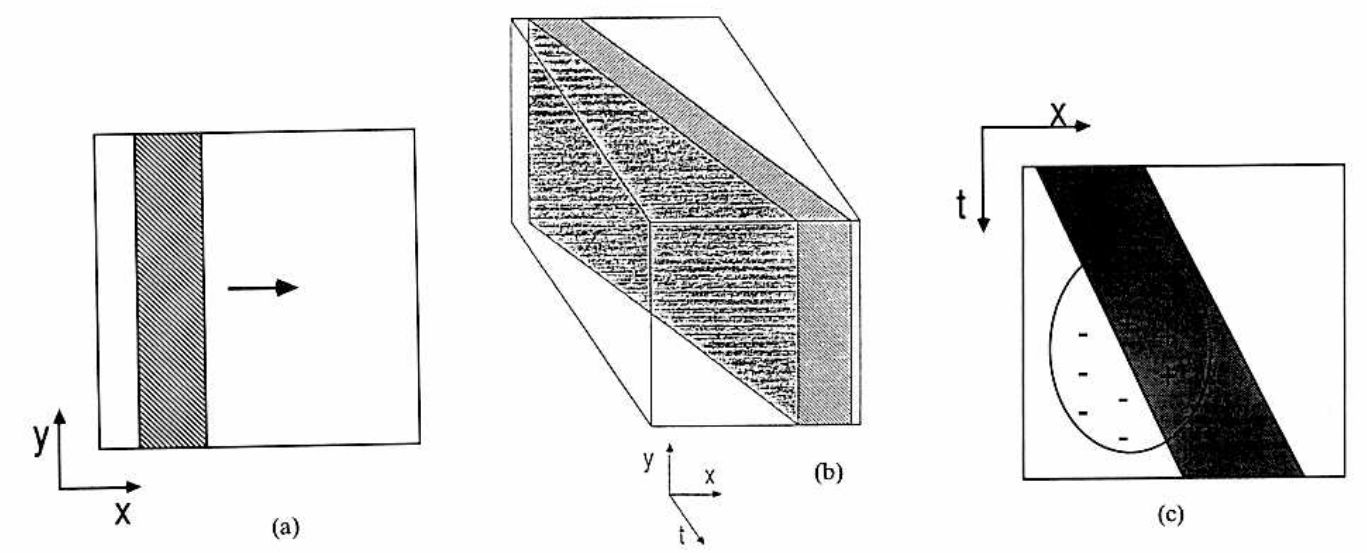

| Optical Flow Problem Definition | |||

|

IJCV 2011

Baker2011IJCV | |||

- Presents a collection of datasets for the evaluation of optical flow algorithms

- Contributes four types of data to test different aspects of optical flow algorithms:

- Sequences with nonrigid motion where the ground-truth flow is determined by tracking hidden fluorescent texture

- Realistic synthetic sequences - addresses the limitations of previous dataset sequences by rendering more complex scenes with significant motion discontinuities and textureless regions

- High frame-rate video used to study interpolation error

- Modified stereo sequences of static scenes for optical flow

- Evaluates a number of well-known flow algorithms to characterize the current state of the art

- Extendes the set of evaluation measures and improved the evaluation methodology

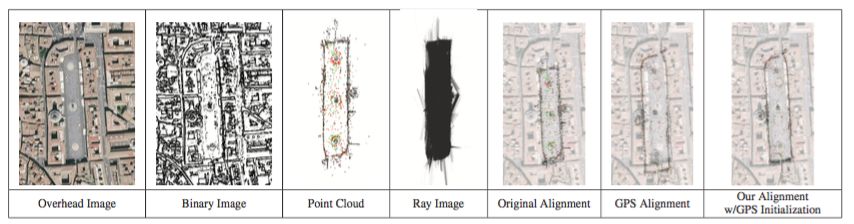

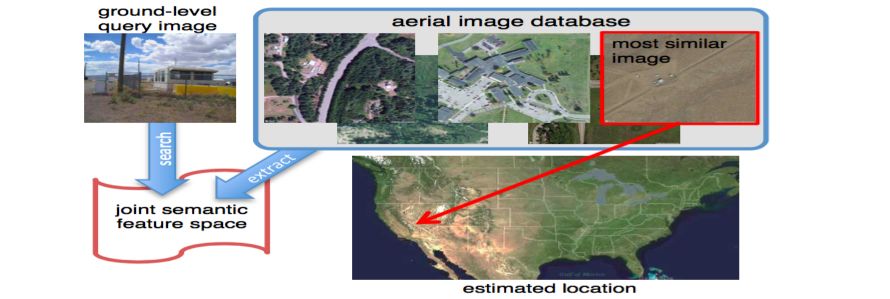

| Mapping, Localization & Ego-Motion Estimation Localization | |||

|

ICM 2011

Bansal2011ICM | |||

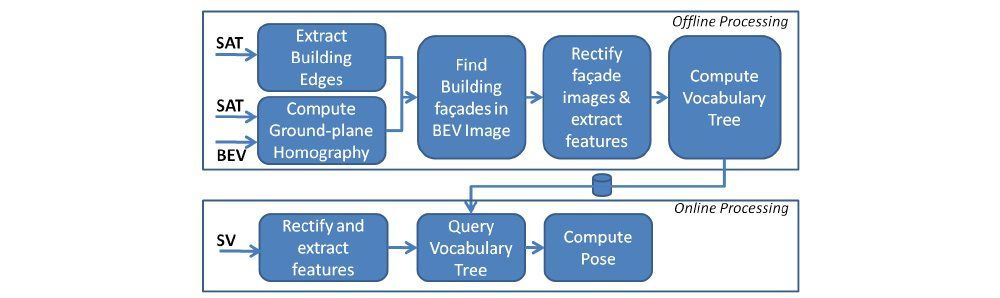

- Aerial image databases are widely available while image from the ground of urban areas is limited

- Localization of ground level images in urban areas using a database of satellite and oblique aerial images

- Method for estimating building facades by extracting line segments from satellite and aerial images

- Correspondence of building facades between aerial and ground images using statistical self-similarity with respect to other patches on a facade

- Position and orientation estimation of ground images

- Qualitative results on a region around Ridieu St. in Ottawa, Canada with BEV, Panoramio imagery and Google Street-view screen-shots

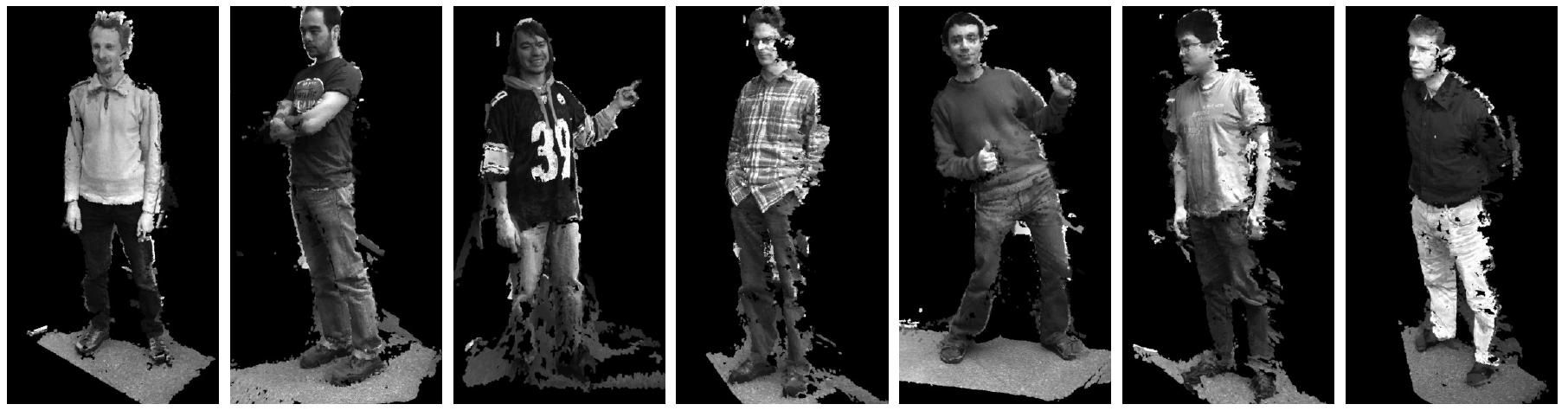

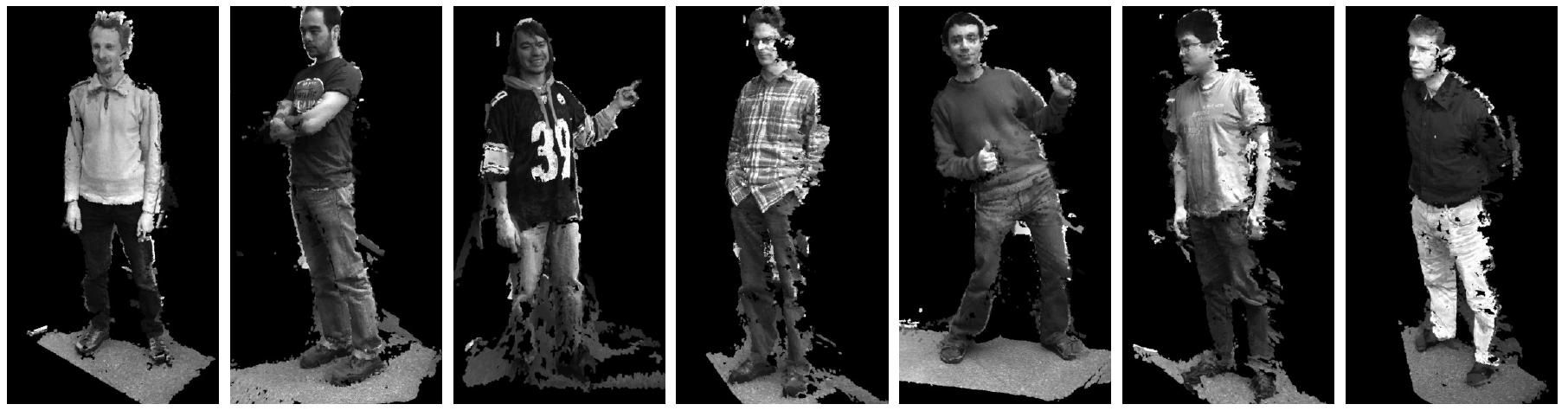

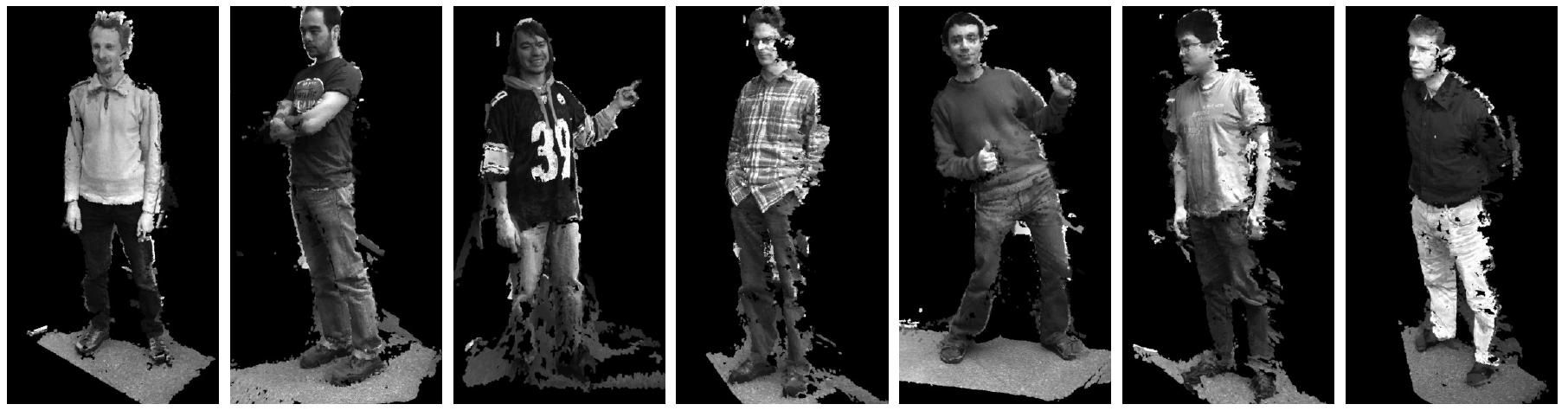

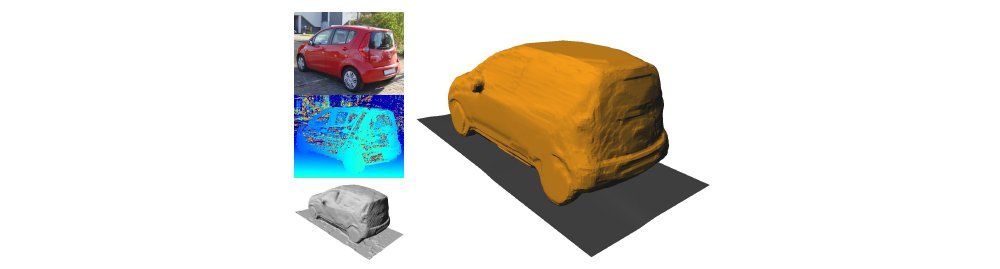

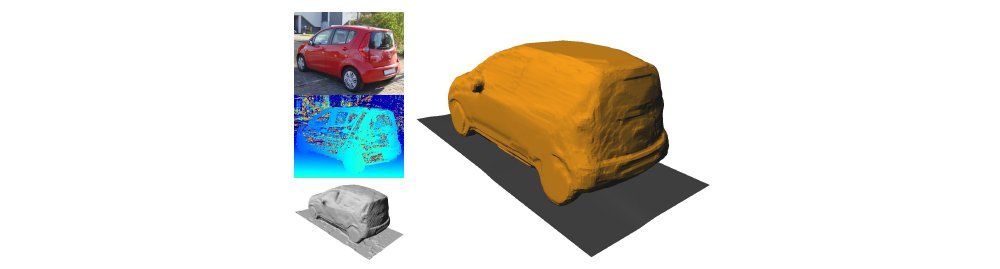

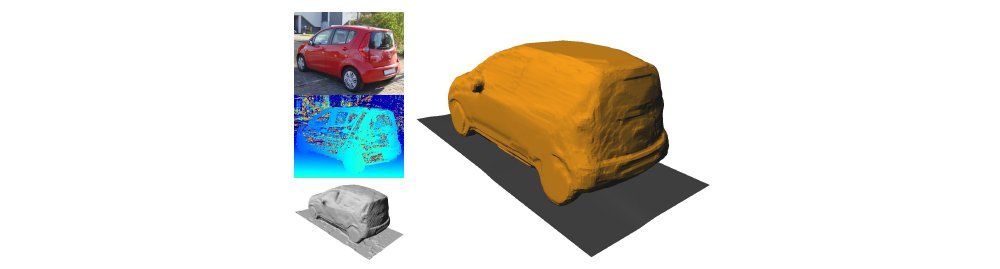

| Multi-view 3D Reconstruction Multi-view Stereo | |||

|

CVPR 2013

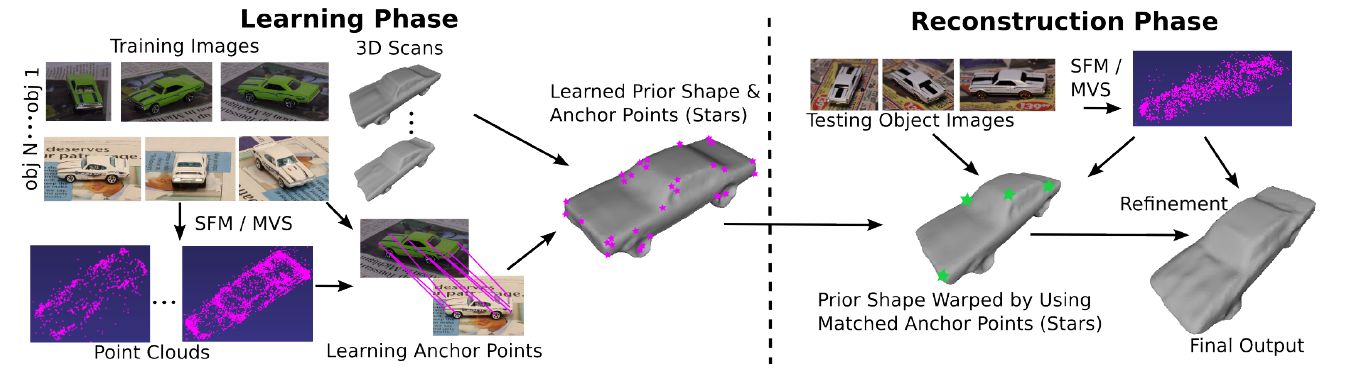

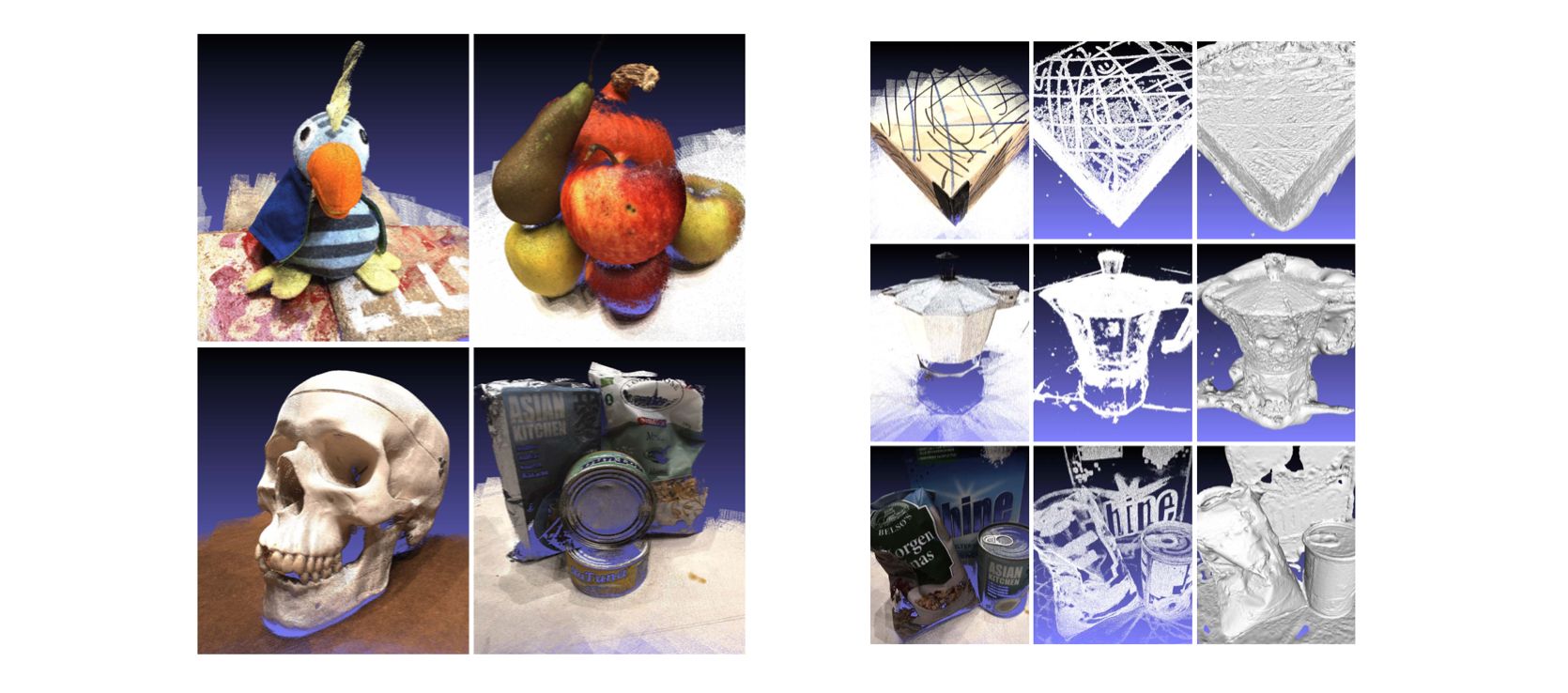

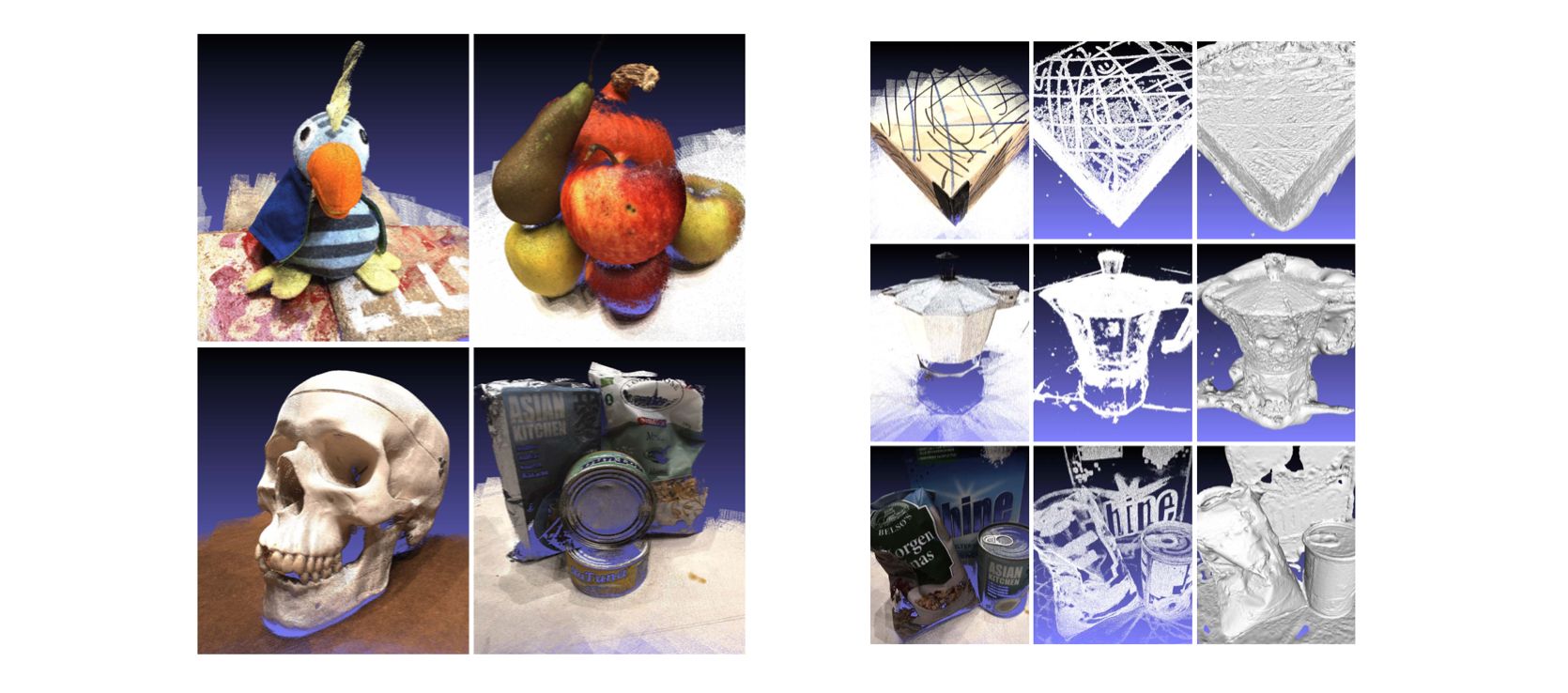

Bao2013CVPR | |||

- Dense reconstruction incorporating semantic information to overcome drawbacks of traditional multiview stereo

- Learning a prior comprised of a mean shape and a set of weighted anchor points

- Training from of 3D scans and images of objects from various viewpoints

- Robust algorithm to match anchor points across instances enables learning a mean shape for the category

- Shape of an object modelled as warped version of the category mean with instance-specific details

- Qualitative and quantitative results on a small dataset of model cars using leave-one-out

| Object Detection Methods | |||

|

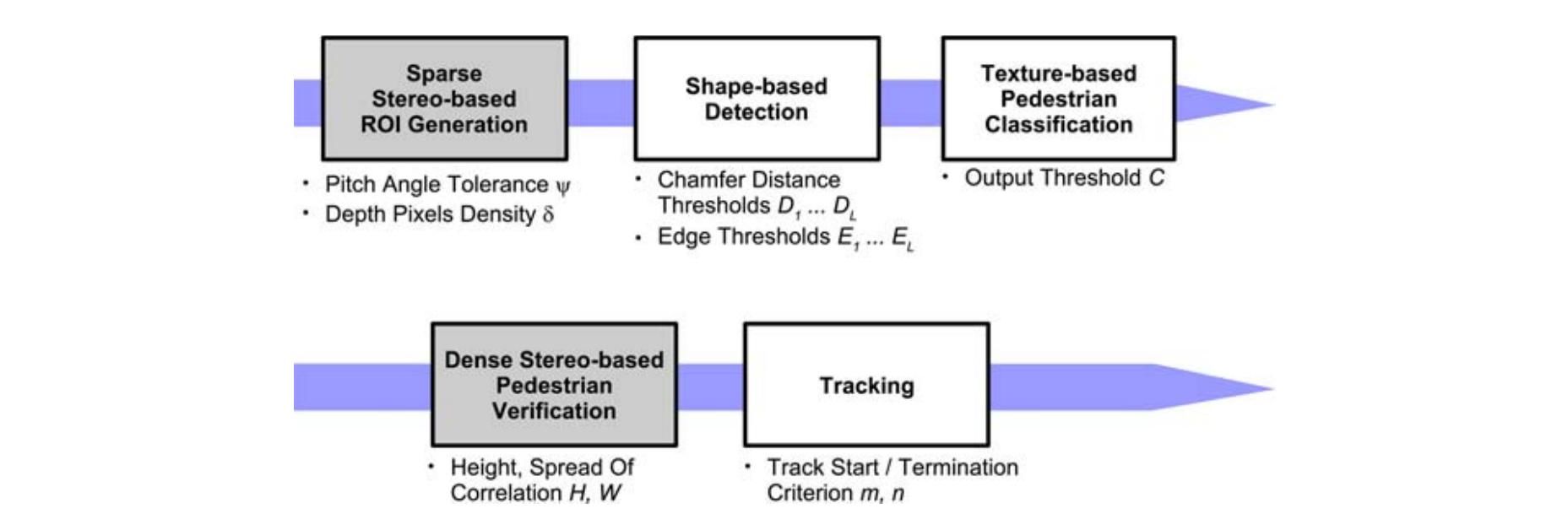

CVPR 2012

Benenson2012CVPR | |||

- Fast and high quality pedestrian detection

- Two new algorithmic speed-ups:

- Exploiting geometric context extracted from stereo images

- Efficiently handling different scales

- Object detection without image resizing using stixels

- Similar to Viola and Jones: scale the features not the images, applied to HOG-like features

- Detections at 50 fps (135 fps on CPU+GPU)

- Evaluated on INRIA Persons and Bahnhof sequence

| Object Detection Methods | |||

|

ECCV 2014

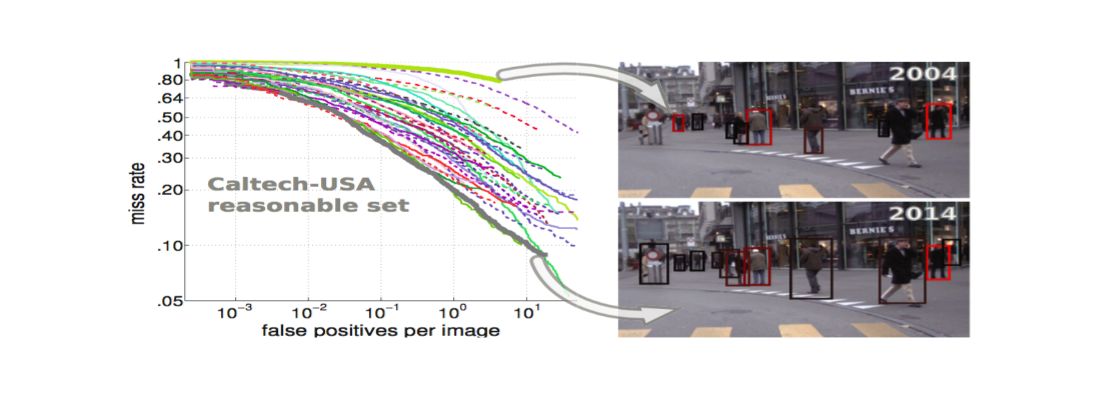

Benenson2014ECCV | |||

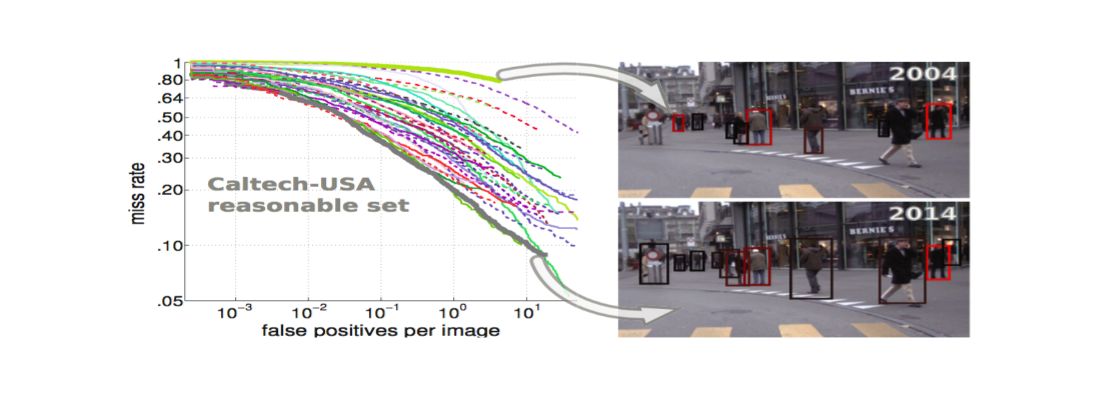

- Aim is to review progress over the last decade of pedestrian detection, & try to quantify which ideas had the most impact on final detection quality

- Evaluates on Caltech-USA, INRIA and KITTI datasets for comparing methods

- Conclusions:

- There is no conclusive empirical evidence indicating that whether non-linear kernels provide meaningful gains over linear kernel

- The 3 families of pedestrian detectors (DPMs, decision forests, deep networks) are based on different learning techniques, their results are surprisingly close

- Multi-scale models provide a simple and generic extension to existing detectors. Despite consistent improvements, their contribution to the final quality is minor

- Most of the progress can be attributed to the improvement in features alone

- Combining the detector ingredients found to work well (better features, optical flow, and context) shows that these ingredients are mostly complementary

| Object Detection Datasets | |||

|

ECCV 2014

Benenson2014ECCV | |||

- Aim is to review progress over the last decade of pedestrian detection, & try to quantify which ideas had the most impact on final detection quality

- Evaluates on Caltech-USA, INRIA and KITTI datasets for comparing methods

- Conclusions:

- There is no conclusive empirical evidence indicating that whether non-linear kernels provide meaningful gains over linear kernel

- The 3 families of pedestrian detectors (DPMs, decision forests, deep networks) are based on different learning techniques, their results are surprisingly close

- Multi-scale models provide a simple and generic extension to existing detectors. Despite consistent improvements, their contribution to the final quality is minor

- Most of the progress can be attributed to the improvement in features alone

- Combining the detector ingredients found to work well (better features, optical flow, and context) shows that these ingredients are mostly complementary

| History of Autonomous Driving | |||

|

IV 2011

Bertozzi2011IV | |||

- Presents the details and preliminary results of VIAC, the VisLab Intercontinental Autonomous Challenge, a test of autonomous driving along an unknown route from Italy to China

- The onboard perception systems can detect obstacles, lane markings, ditches, berms and indentify the presence and position of a preceding vehicle

- The information on the environment produced by the sensing suite is used to perform different tasks, such as leader-following, stop & go, and waypoint following

- All data have been logged, including all data generated by the sensors, vehicle data, and GPS info

- This data is available for a deep analysis of the various systems performance, with the aim of virtually running the whole trip multiple times with improved versions of the software

- This paper discusses some preliminary results and figures obtained by the analysis of the data collected during the test

| History of Autonomous Driving | |||

|

RAS 2000

Bertozzi2000RAS | |||

- Survey on the most common approaches to the challenging task of Autonomous Road Following

- Computing power not a problem any more

- Data acquisition still problematic with difficulties like light reflections, wet road, direct sunshine, tunnels, shadows.

- Enhancement of sensor's capabilities and performance need to be addressed

- Full automation of traffic is technically feasible

- Legal aspects related to the responsibility and the impact of automatic driving on human passengers need to be carefully considered

- Automation will be restricted to special infrastructure for now and will be gradually extended to other key transportations areas as shipping

| Multi-view 3D Reconstruction Multi-view Stereo | |||

|

ECCV 2002

Bhotika2002ECCV | |||

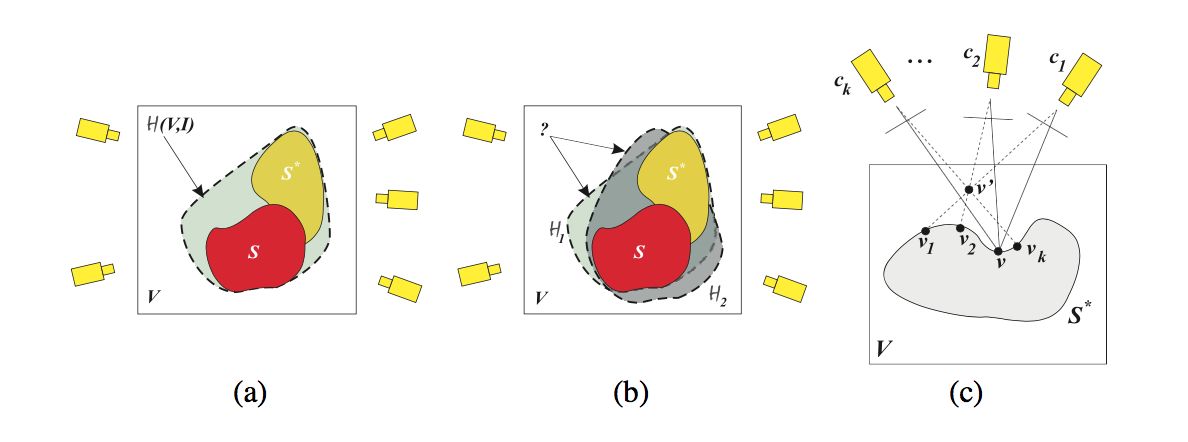

- Probabilistic 3D shape reconstruction based on mathematical definitions of visibility, occupancy, emptiness, and photo-consistency

- Understanding stereo ambiguities

- Probabilistic treatment of visibility

- Algorithm-independent analysis of occupancy

- Handling sensor and model errors

- Explicit distinction between shape ambiguity (multiple reconstruction solutions given noiseless images) and uncertainty (due to noise and modeling errors)

- {it Photo Hull Distribution}: all photo-consistent shapes with a probability

- A stochastic algorithm to draw samples from the Photo Hull Distribution with convergence properties

| Optical Flow Methods | |||

|

ICCV 1993

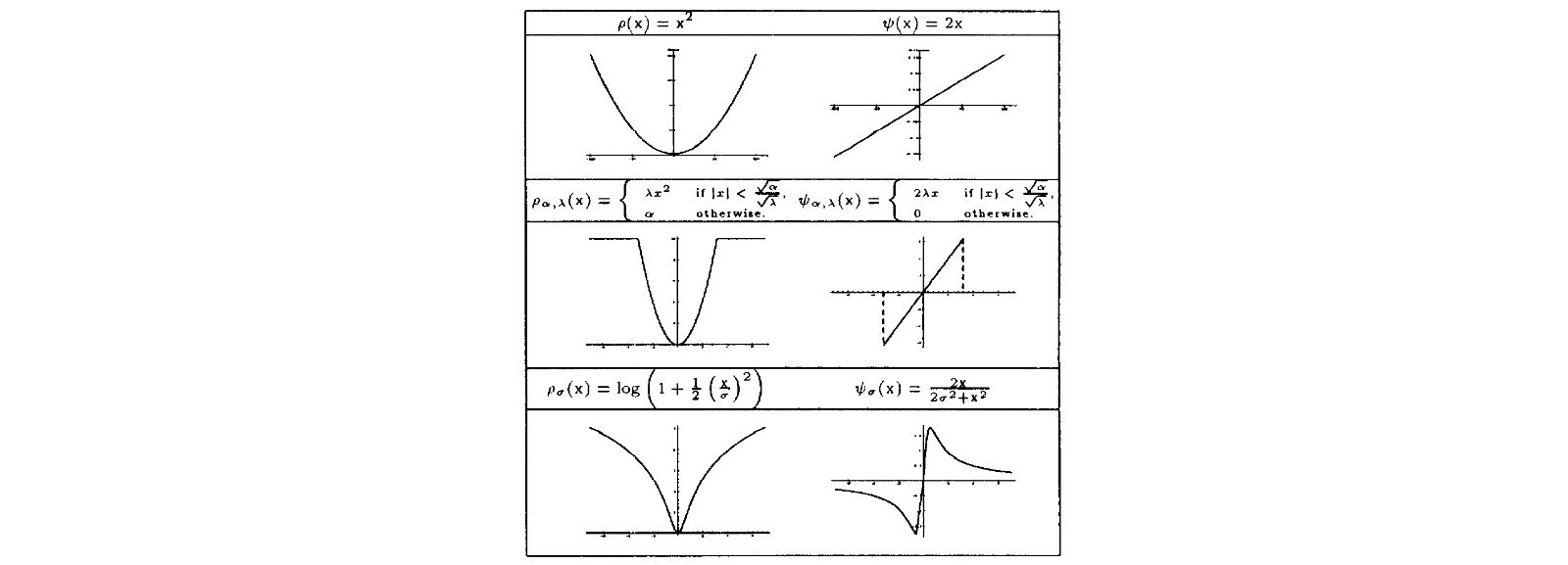

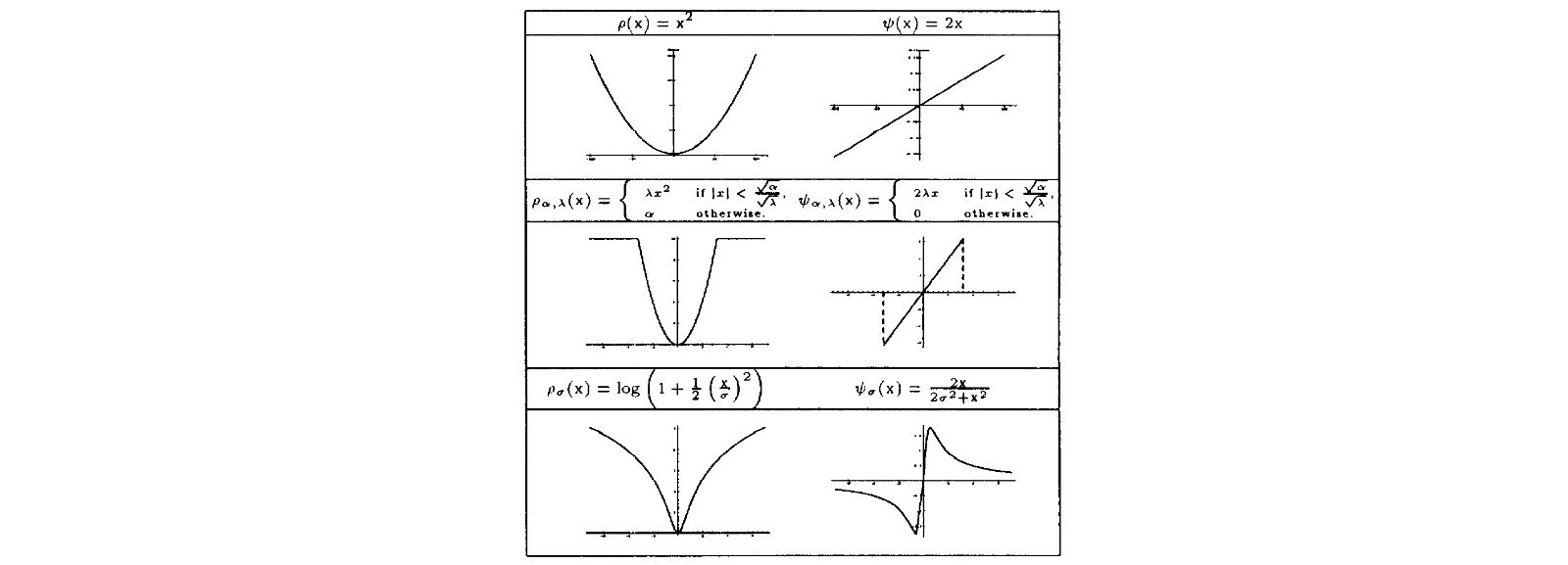

Black1993ICCV | |||

- Pioneering work in optical flow computation

- Addresses violations of the brightness constancy in Optical Flow formulation

- Proposes a new framework based on robust estimation

- Show relationship between robust estimation and line process approaches to deal with spatial discontinuities

- Generalize the notion of a line process to that of an outlier process

- Develop Graduated Non-Convexity algorithm for recovering optical flow and motion discontinuances

- Demonstrate the robust formulation on synthetic data and natural images

| Optical Flow Discussion | |||

|

ICCV 1993

Black1993ICCV | |||

- Pioneering work in optical flow computation

- Addresses violations of the brightness constancy in Optical Flow formulation

- Proposes a new framework based on robust estimation

- Show relationship between robust estimation and line process approaches to deal with spatial discontinuities

- Generalize the notion of a line process to that of an outlier process

- Develop Graduated Non-Convexity algorithm for recovering optical flow and motion discontinuances

- Demonstrate the robust formulation on synthetic data and natural images

| Multi-view 3D Reconstruction Multi-view Stereo | |||

|

CVPR 2016

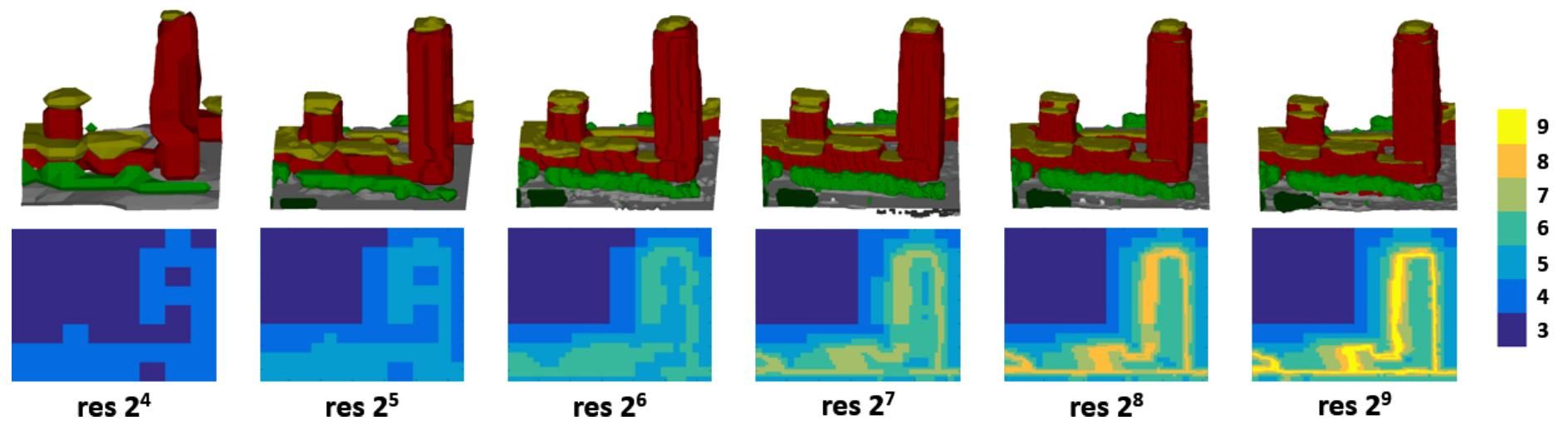

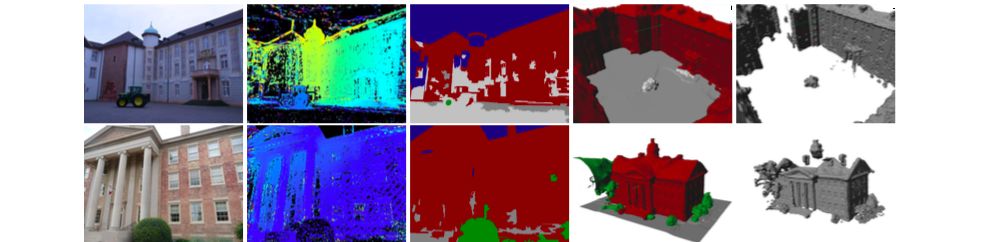

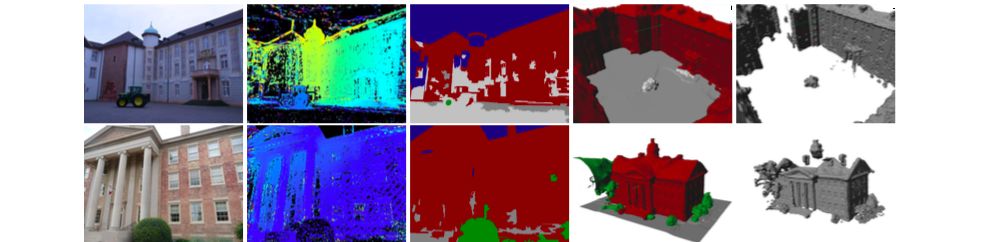

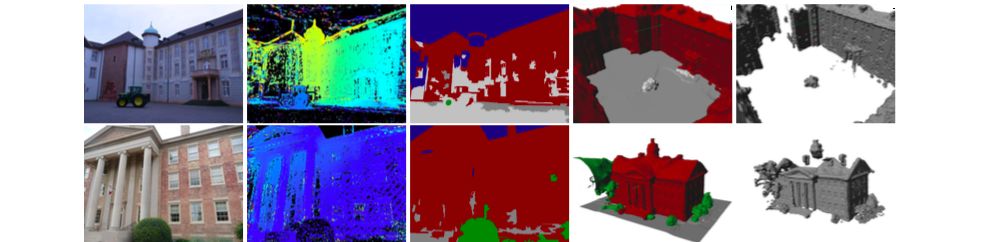

Blaha2016CVPR | |||

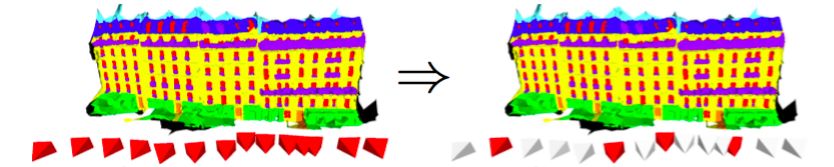

- Joint formulation of semantic segmentation and 3D reconstruction enables to use class-specific shape priors

- State-of-the-art could not scale to large scenes because of run time and memory

- Extension of an expensive volumetric approach

- Hierarchical scheme using an Octree structure

- Refines only in regions containing surfaces

- Coarse-to-fine converges faster because of improved initial guesses

- Saves 95 computation time and 98 memory usage

- Evaluation on real world data set from the city of Enschede

| Object Detection Methods | |||

|

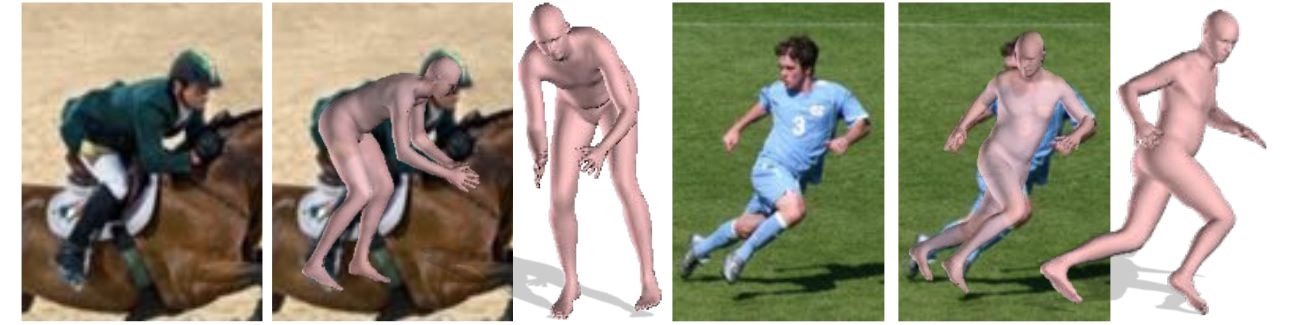

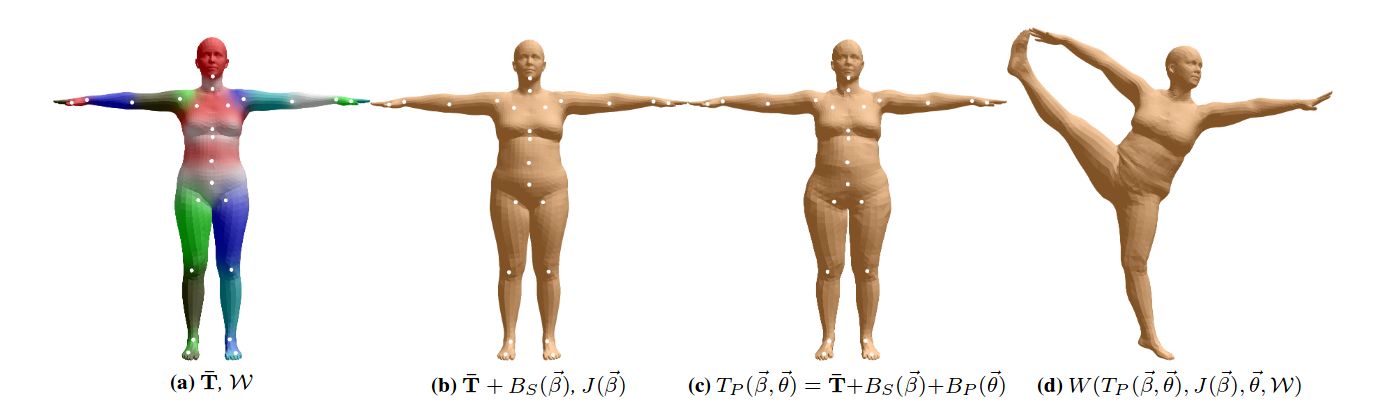

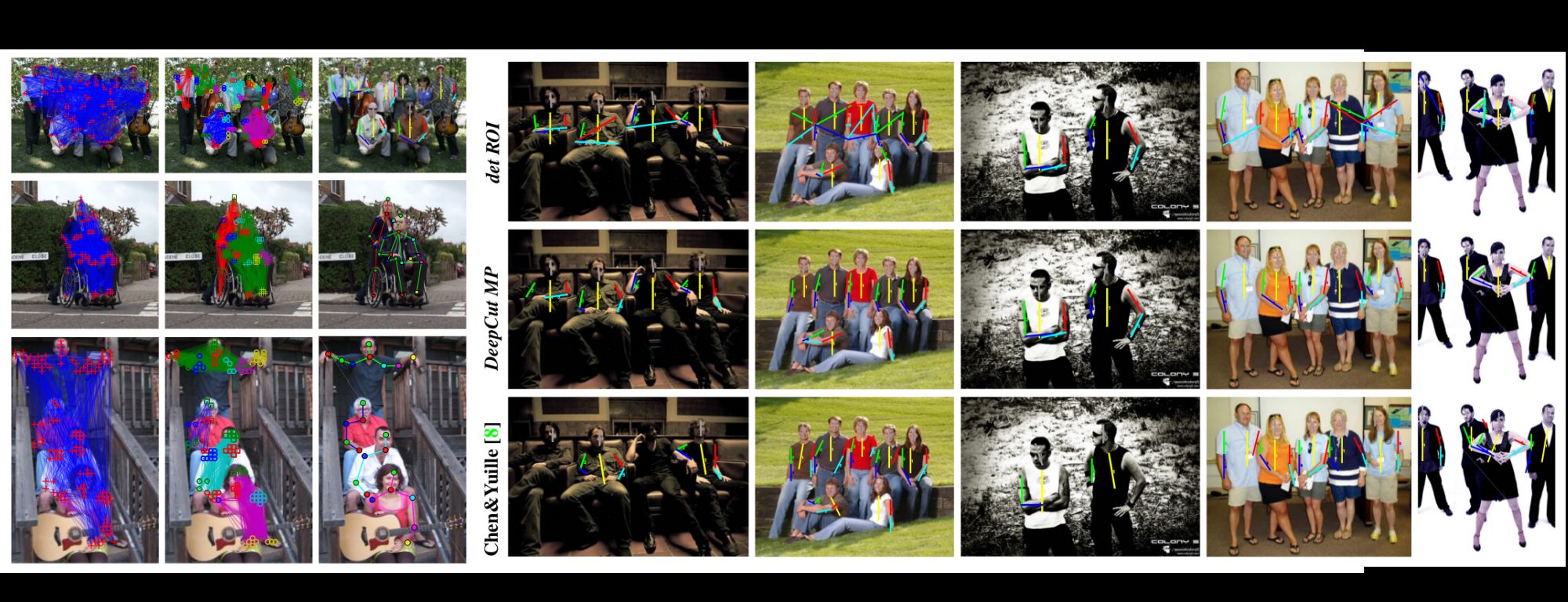

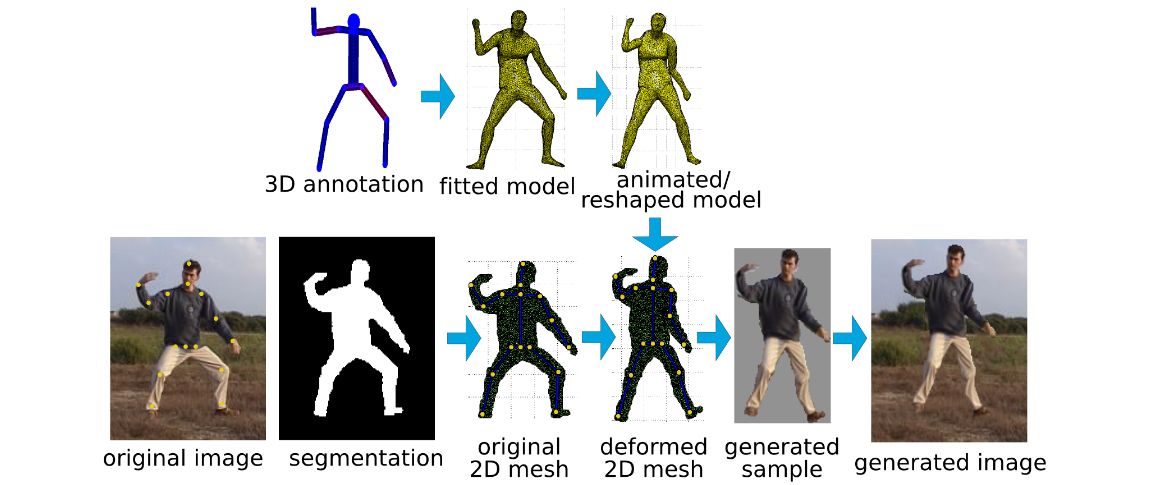

ECCV 2016

Bogo2016ECCV | |||

- Describes the first method to automatically estimate the 3D pose of the human body as well as its 3D shape from a single unconstrained image

- Estimates a full 3D mesh and shows that 2D joints alone carry a surprising amount of information about body shape

- First uses a CNN-based method, DeepCut, to predict the 2D body joint locations

- Then fits a body shape model, called SMPL, to the 2D joints by minimizing an objective function that penalizes the error between the projected 3D model joints and detected 2D joints

- Because SMPL captures correlations in human shape across the population, robust fitting is possible with very little data

- Evaluates on Leeds Sports, HumanEva, and Human3.6M datasets

| History of Autonomous Driving | |||

|

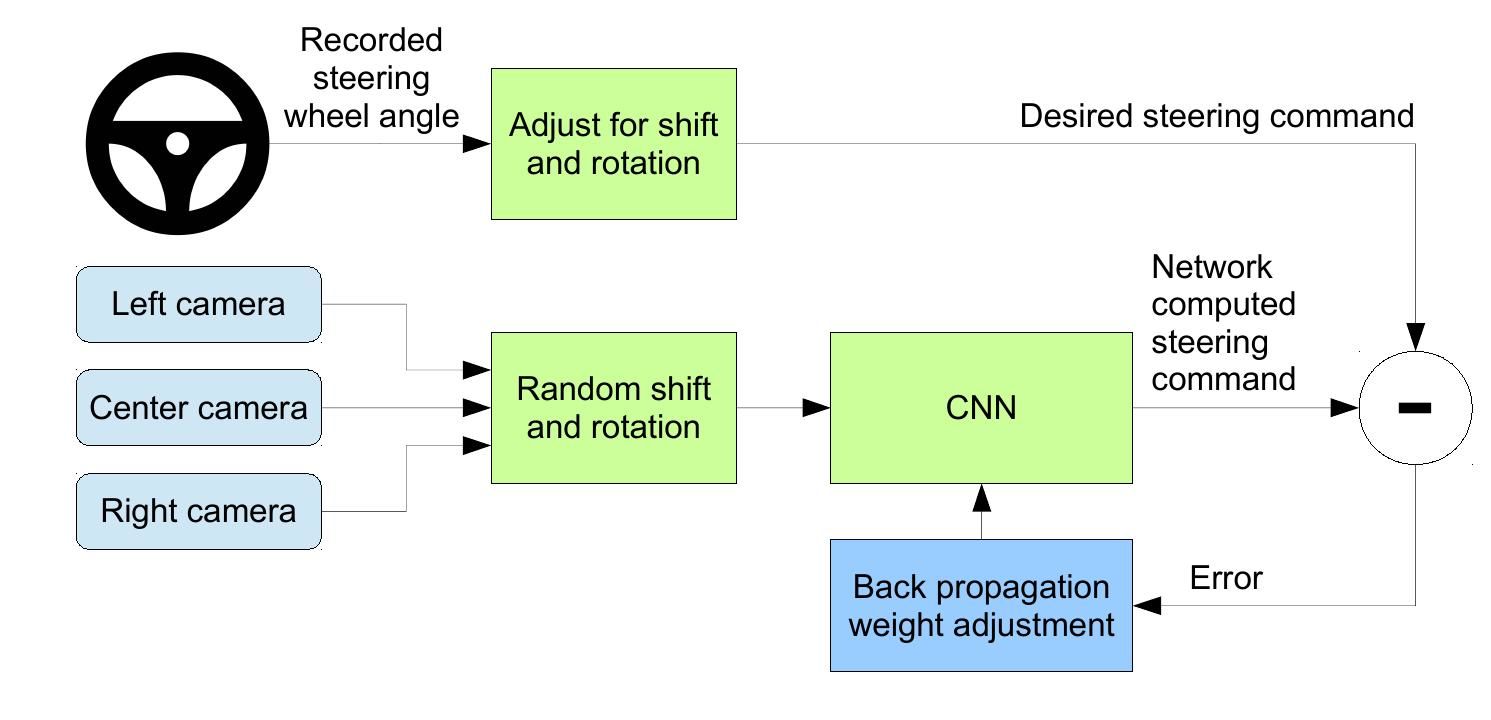

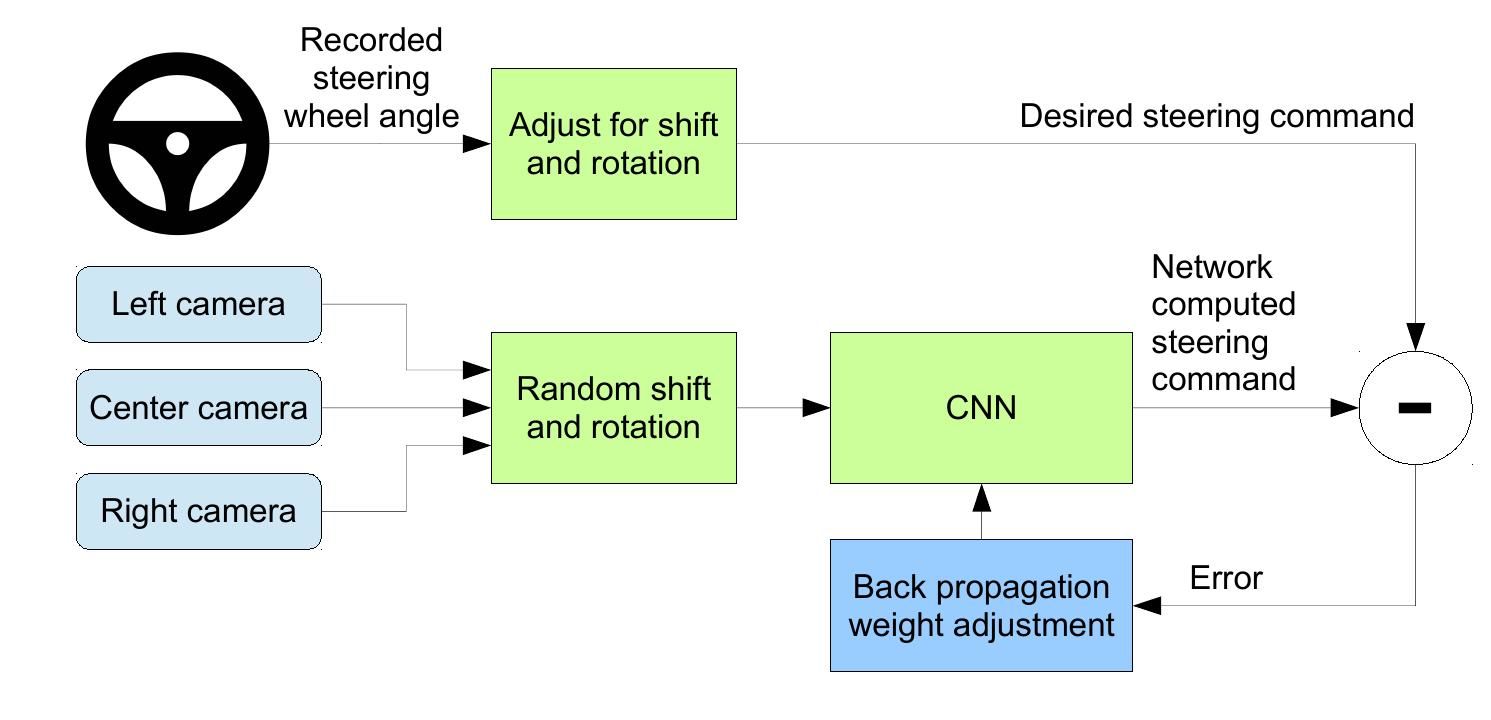

ARXIV 2016

Bojarski2016ARXIV | |||

- Convolutional Neural Network that learns vehicle control using images

- Left and right images are used for data augmentation to simulate specific off-center shifts while adapting the steering command

- Approximated viewpoint transformations assuming points below horizon lie on a plane and above are infinitely far away

- The final network outputs steering commands for the center camera only

- Tested with simulations and with the NVIDIA DRIVE PX self-driving car

| End-to-End Learning for Autonomous Driving Methods | |||

|

ARXIV 2016

Bojarski2016ARXIV | |||

- Convolutional Neural Network that learns vehicle control using images

- Left and right images are used for data augmentation to simulate specific off-center shifts while adapting the steering command

- Approximated viewpoint transformations assuming points below horizon lie on a plane and above are infinitely far away

- The final network outputs steering commands for the center camera only

- Tested with simulations and with the NVIDIA DRIVE PX self-driving car

| History of Autonomous Driving | |||

|

JFR 2006

Braid2006JFR | |||

- TerraMax is an autonomous vehicle based on Koshkosh Truck's Medium Tactical Vehicle Replacement Truck platform

- One of the five vehicles able to successfully pass the 132 miles DARPA Grand Challenge desert race

- Detailed description of the Intelligent Vehicle Management System which includes vehicle sensor management, navigation, and vehicle control system

- Informations on path planer, obstacle detection and behavior management

- Vehicle's vision system was provided by University of Parma

- Oshkosh Truck Corp. provided project management, system integration, low level controls hardware, modeling and simulation support and the vehicle

| Stereo Methods | |||

|

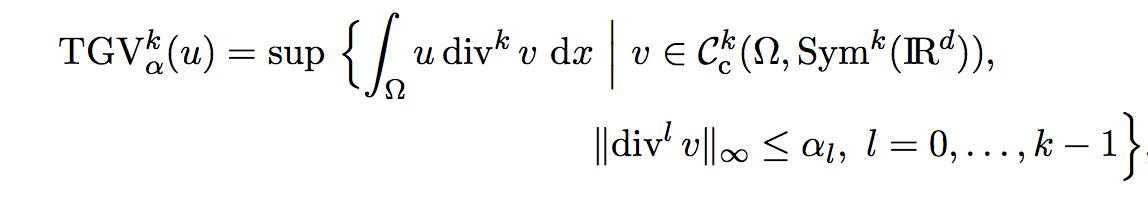

JIS 2010

Bredies2010JIS | |||

- The concept of Total Generalized Variation (TGV) as a regularization term

- Motivation: problems with the

- norm-of-squares terms due to outliers

- bounded variation semi-norm due to piece-wise constant modeling (stair-casing effect)

- Essential properties of TGV:

- generalized higher-order derivatives of the function

- shared properties with TV, for example rotational invariance but different for functions which are not piece-wise constant

- convexity and weak lower semi-continuity

- Experiments on denoising problem

- Regularization on different regularity levels without stair-casing effect

| Optical Flow Methods | |||

|

JIS 2010

Bredies2010JIS | |||

- The concept of Total Generalized Variation (TGV) as a regularization term

- Motivation: problems with the

- norm-of-squares terms due to outliers

- bounded variation semi-norm due to piece-wise constant modeling (stair-casing effect)

- Essential properties of TGV:

- generalized higher-order derivatives of the function

- shared properties with TV, for example rotational invariance but different for functions which are not piece-wise constant

- convexity and weak lower semi-continuity

- Experiments on denoising problem

- Regularization on different regularity levels without stair-casing effect

| Object Detection Methods | |||

|

IV 2000

Broggi2000IV | |||

- Detecting pedestrians on an experimental autonomous vehicle (the ARGO project)

- Exploiting morphological characteristics (size, ratio, and shape) and vertical symmetry of human shape

- A first coarse detection from a monocular image

- Distance refinement using a stereo vision technique

- Temporal correlation using the results from the previous frame to correct and validate the current ones

- Integrated in the ARGO vehicle and tested in urban environments

- Successful detections of whole pedestrians present in the image at a distance ranging from 10 to 40 meters

| History of Autonomous Driving | |||

|

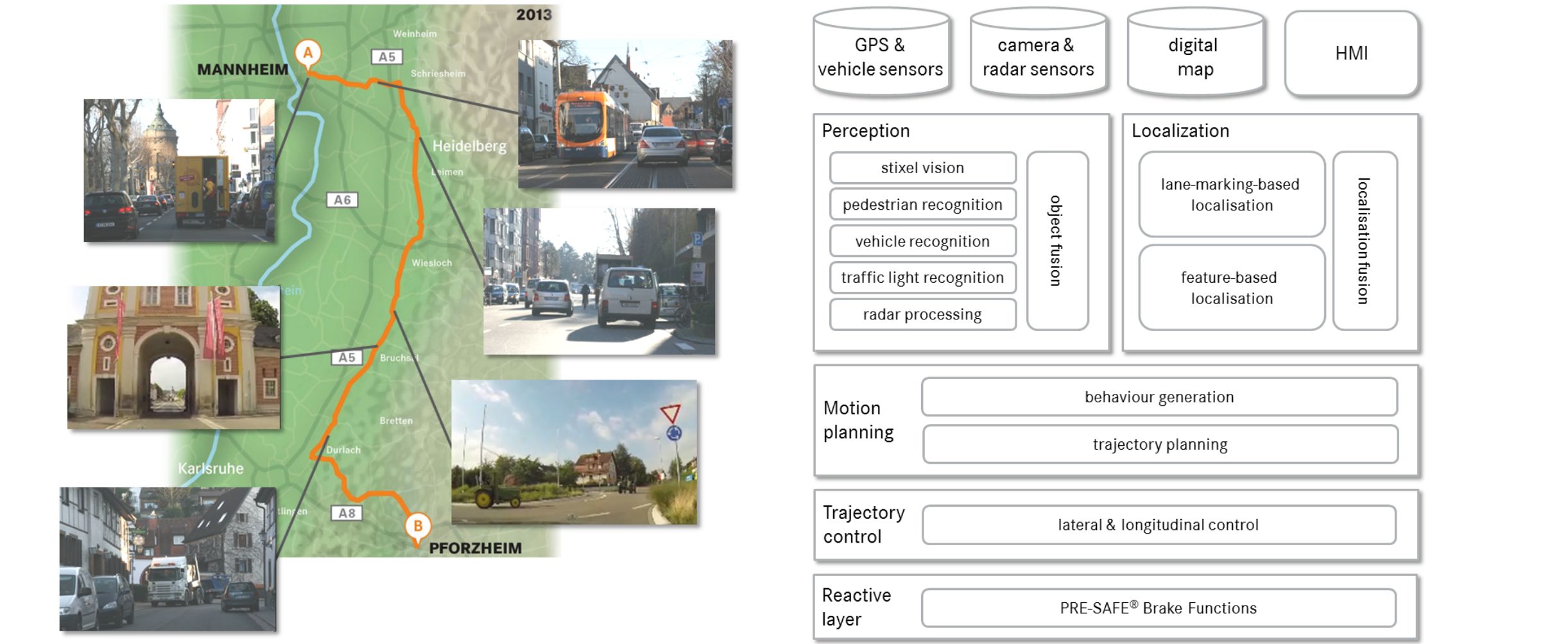

TITS 2015

Broggi2015TITS | |||

- An autonomous driving test on urban roads and freeways open to regular traffic

- Moving in a mapped and familiar scenario with the addition of the position of pedestrian crossings, traffic lights, and guard rails

- Real-time perception of the world for static and dynamic obstacles

- No need for precise 3D maps or world reconstruction

- Details about the vehicle, and main layers: perception, planning, and control

- Complex driving scenarios including roundabouts, junctions, pedestrian crossings, freeway junctions, and traffic lights

| Optical Flow Methods | |||

|

PAMI 2011

Brox2011PAMI | |||

- Coarse-to-fine warping for optical flow estimation

- can handle large displacements

- small objects moving fast are problematic

- Integration of rich descriptors into a variational formulation

- Simple nearest neighbor search in coarse grid

- Feature matches used as soft constraint in continuous approach

- Continuation method: coarse-to-fine while reducing the importance of descriptor matches

- Quantitative results only on Middlebury but real world qualitative results

| Mapping, Localization & Ego-Motion Estimation Localization | |||

|

PAMI 2016

Brubaker2016PAMI | |||

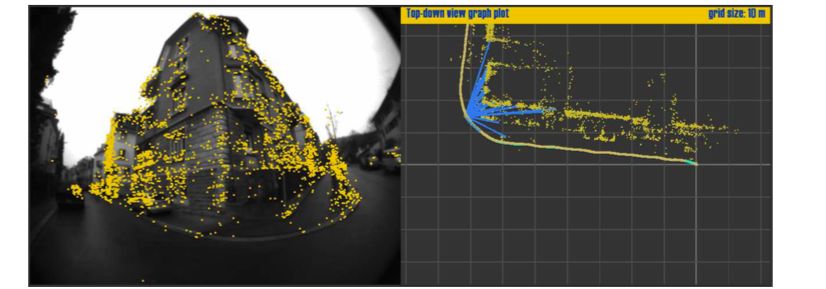

- Describes an affordable solution to vehicle self-localization which uses odometry computed from two video cameras & road maps as the sole inputs

- Contributions:

- Proposes a probabilistic model for which an efficient approximate inference algorithm is derived

- The inference algorithm is able to utilize distributed computation in order to meet the real-time requirements of autonomous systems

- Exploits freely available maps & visual odometry measurements, and is able to localize a vehicle to 4m on average after 52 seconds of driving

- Evaluates on KITTI visual odometry dataset

| Optical Flow Methods | |||

|

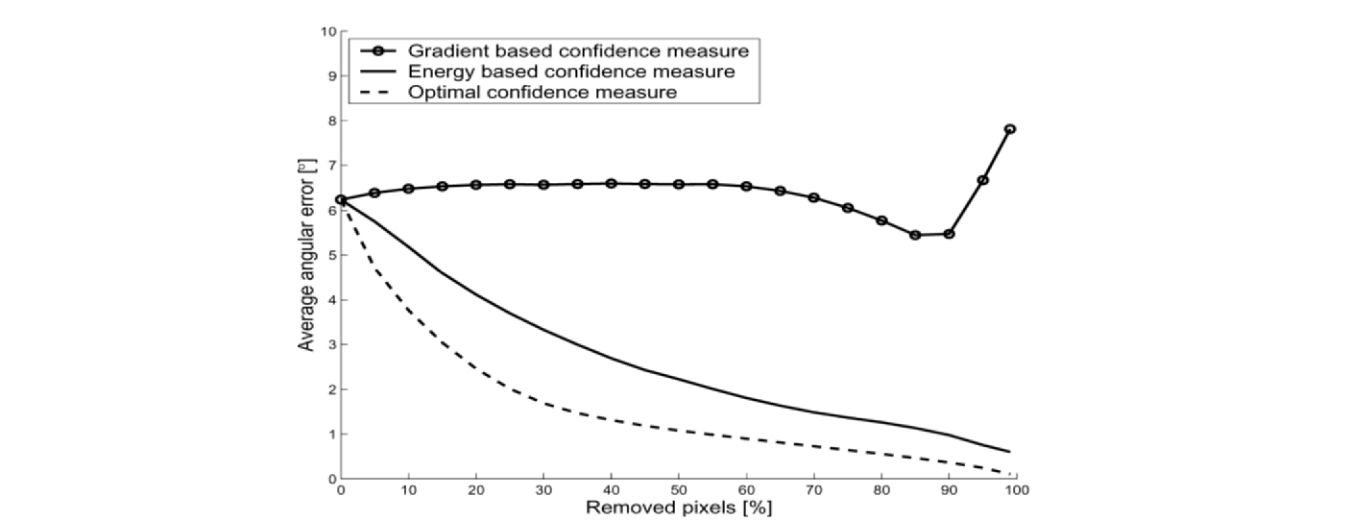

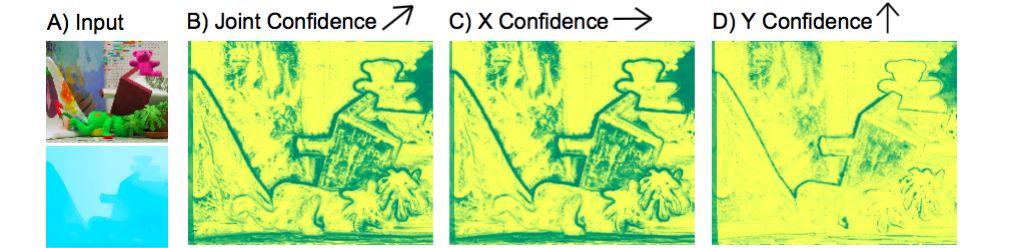

GPID 2006

Bruhn2006GPID | |||

- Investigation of confidence measures for variational optic flow computation

- Discussion of frequently used sparsification strategy based on the image gradient

- Propose a novel energy-based confidence measure that is parameter-free

- Applicable to the entire class of energy minimizing optical flow approaches

- Energy-based confidence measure leads to better results than the gradient-based approach

- Validation on Yosemite, Marble and Office

| Mapping, Localization & Ego-Motion Estimation State of the Art on KITTI | |||

|

ITSC 2016

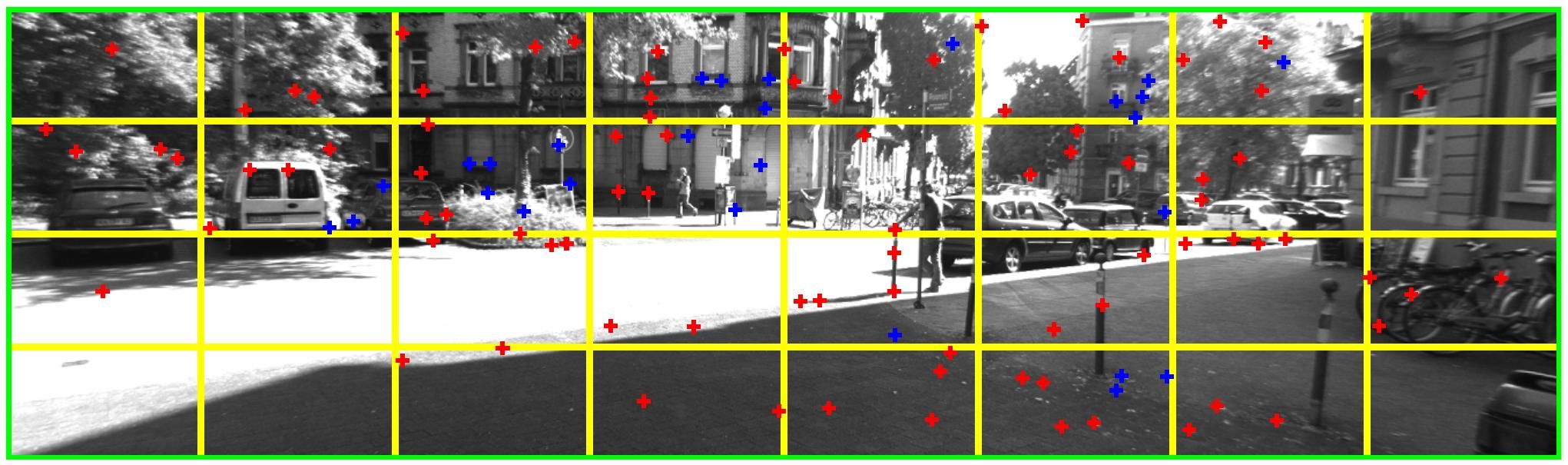

Buczko2016ITSC | |||

- Frame-to-frame feature-based ego-motion estimation using stereo cameras

- Current approach: Rotation and translation of the ego-motion in two separate processes

- An analysis of the characteristics of the optical flows and reprojection errors that are independently induced by each of the decoupled six degrees of freedom motion

- A reprojection error that depends on the coordinates of the features

- Decoupling the translation flow from the overall flow

- Using an initial rotation estimate

- Transforming the correspondences into a pure translation scenario

- Evaluated on KITTI, the best translation error of all camera-based methods

| Semantic Segmentation Methods | |||

|

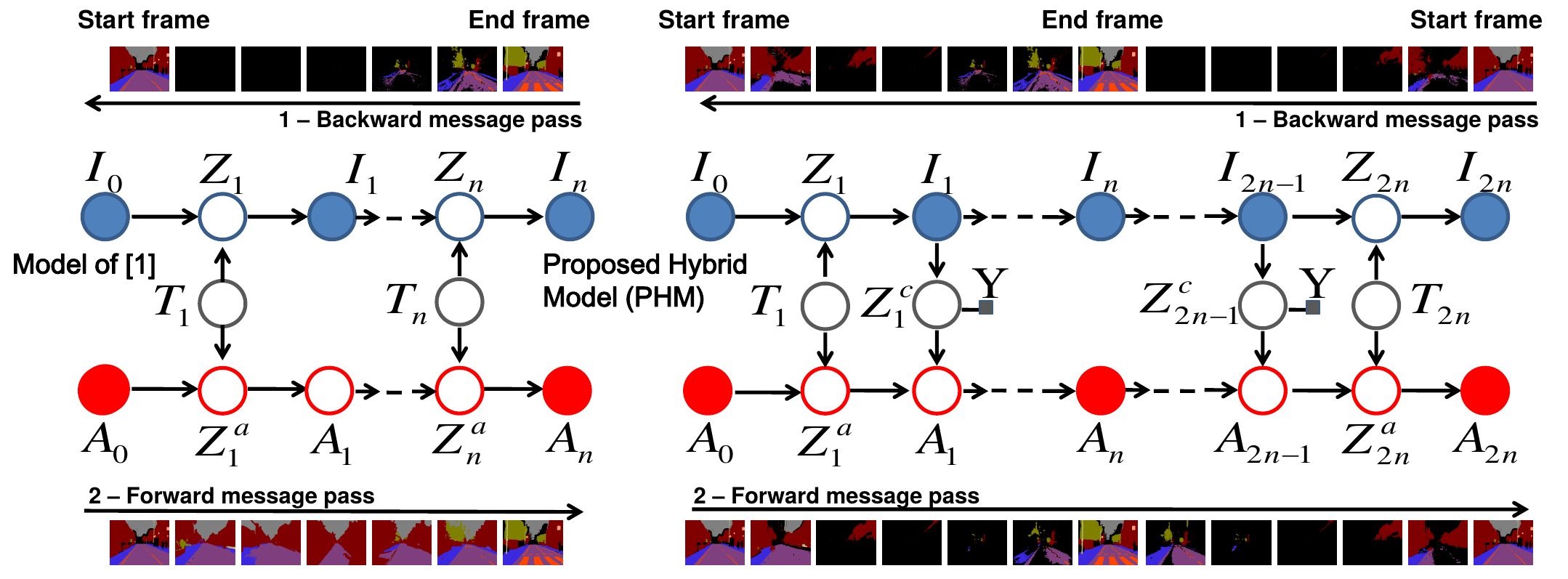

BMVC 2010

Budvytis2010BMVC | |||

- Directed graphical model for label propagation in long and complex video sequences

- Given hand-labelled (semantic labels) start and end frames of a video sequence

- Hybrid of generative label propagation and discriminative classification

- EM based inference used for initial propagation and training of a multi-class classifier

- Labels estimated by classifier are injected back into Bayesian network for another iteration

- Iterative scheme has the ability to handle occlusions

- Time-symmetric label propagation by appending the time-reversed sequence

- Show advantage of learning from propagated labels

- Quantitative and qualitative results on CamVid

| Datasets & Benchmarks | |||

|

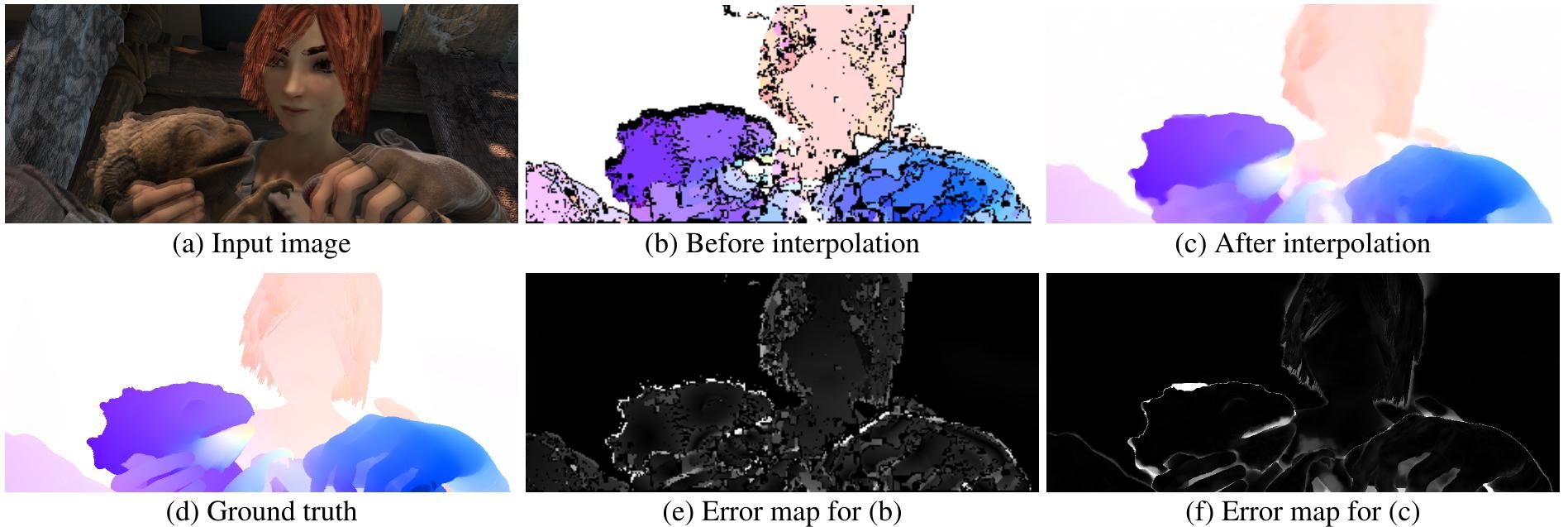

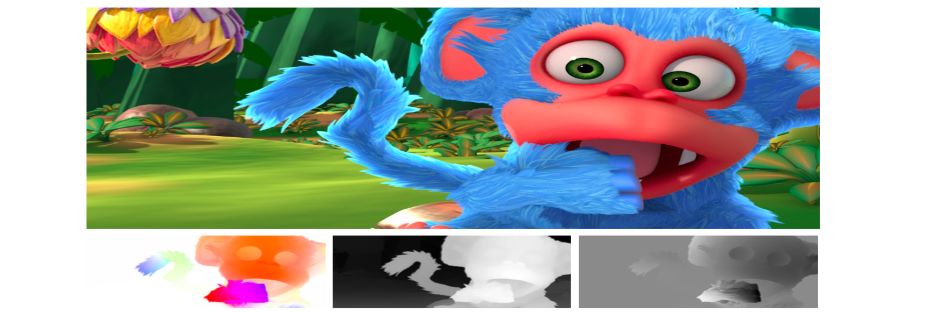

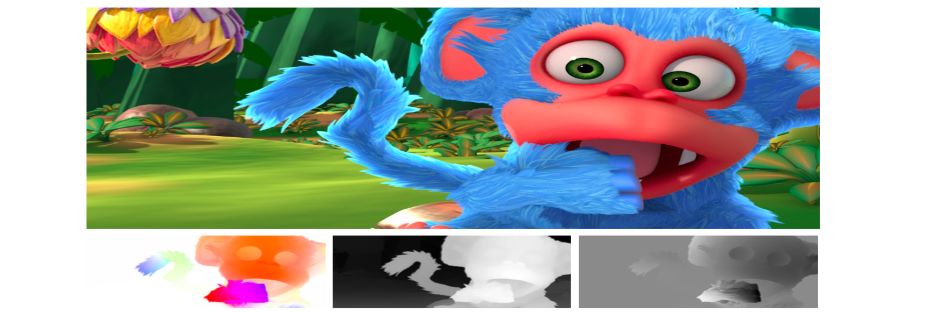

ECCV 2012

Butler2012ECCV | |||

- Introduction of MPI-Sintel, a new data set based on an open source animated film

- Contributions:

- This data set has important features not present in the Middlebury flow evaluation: long sequences, large motions, specular reflections, motion blur, defocus blur, atmospheric effects.

- Analysis of the statistical properties of the data suggesting it is sufficiently representative of natural movies to be useful

- Introduction of new evaluation measures

- Comparison of public-domain flow algorithms

- Evaluation website that maintains the current ranking and analysis of methods

| Datasets & Benchmarks Computer Vision Datasets | |||

|

ECCV 2012

Butler2012ECCV | |||

- Introduction of MPI-Sintel, a new data set based on an open source animated film

- Contributions:

- This data set has important features not present in the Middlebury flow evaluation: long sequences, large motions, specular reflections, motion blur, defocus blur, atmospheric effects.

- Analysis of the statistical properties of the data suggesting it is sufficiently representative of natural movies to be useful

- Introduction of new evaluation measures

- Comparison of public-domain flow algorithms

- Evaluation website that maintains the current ranking and analysis of methods

| Datasets & Benchmarks Synthetic Data Generation using Game Engines | |||

|

ECCV 2012

Butler2012ECCV | |||

- Introduction of MPI-Sintel, a new data set based on an open source animated film

- Contributions:

- This data set has important features not present in the Middlebury flow evaluation: long sequences, large motions, specular reflections, motion blur, defocus blur, atmospheric effects.

- Analysis of the statistical properties of the data suggesting it is sufficiently representative of natural movies to be useful

- Introduction of new evaluation measures

- Comparison of public-domain flow algorithms

- Evaluation website that maintains the current ranking and analysis of methods

| Stereo Datasets | |||

|

ECCV 2012

Butler2012ECCV | |||

- Introduction of MPI-Sintel, a new data set based on an open source animated film

- Contributions:

- This data set has important features not present in the Middlebury flow evaluation: long sequences, large motions, specular reflections, motion blur, defocus blur, atmospheric effects.

- Analysis of the statistical properties of the data suggesting it is sufficiently representative of natural movies to be useful

- Introduction of new evaluation measures

- Comparison of public-domain flow algorithms

- Evaluation website that maintains the current ranking and analysis of methods

| Optical Flow Methods | |||

|

ECCV 2012

Butler2012ECCV | |||

- Introduction of MPI-Sintel, a new data set based on an open source animated film

- Contributions:

- This data set has important features not present in the Middlebury flow evaluation: long sequences, large motions, specular reflections, motion blur, defocus blur, atmospheric effects.

- Analysis of the statistical properties of the data suggesting it is sufficiently representative of natural movies to be useful

- Introduction of new evaluation measures

- Comparison of public-domain flow algorithms

- Evaluation website that maintains the current ranking and analysis of methods

| Optical Flow Datasets | |||

|

ECCV 2012

Butler2012ECCV | |||

- Introduction of MPI-Sintel, a new data set based on an open source animated film

- Contributions:

- This data set has important features not present in the Middlebury flow evaluation: long sequences, large motions, specular reflections, motion blur, defocus blur, atmospheric effects.

- Analysis of the statistical properties of the data suggesting it is sufficiently representative of natural movies to be useful

- Introduction of new evaluation measures

- Comparison of public-domain flow algorithms

- Evaluation website that maintains the current ranking and analysis of methods

| 3D Scene Flow Datasets | |||

|

ECCV 2012

Butler2012ECCV | |||

- Introduction of MPI-Sintel, a new data set based on an open source animated film

- Contributions:

- This data set has important features not present in the Middlebury flow evaluation: long sequences, large motions, specular reflections, motion blur, defocus blur, atmospheric effects.

- Analysis of the statistical properties of the data suggesting it is sufficiently representative of natural movies to be useful

- Introduction of new evaluation measures

- Comparison of public-domain flow algorithms

- Evaluation website that maintains the current ranking and analysis of methods

| Object Detection Methods | |||

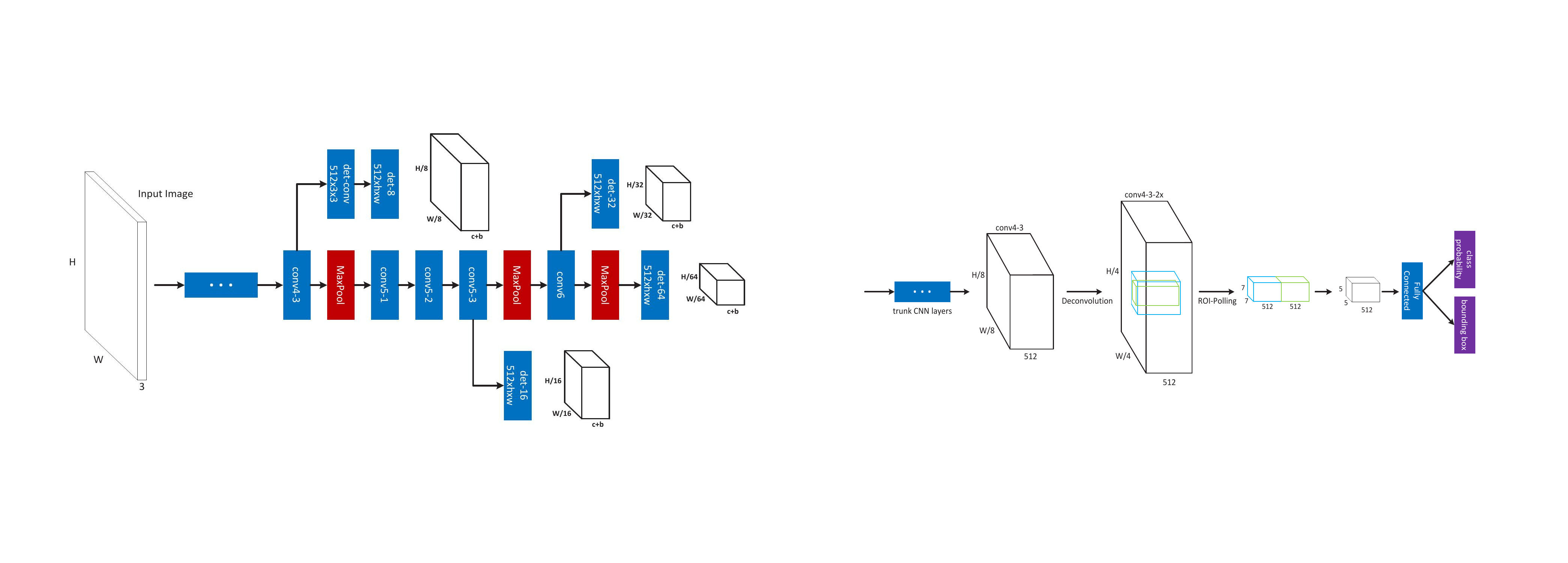

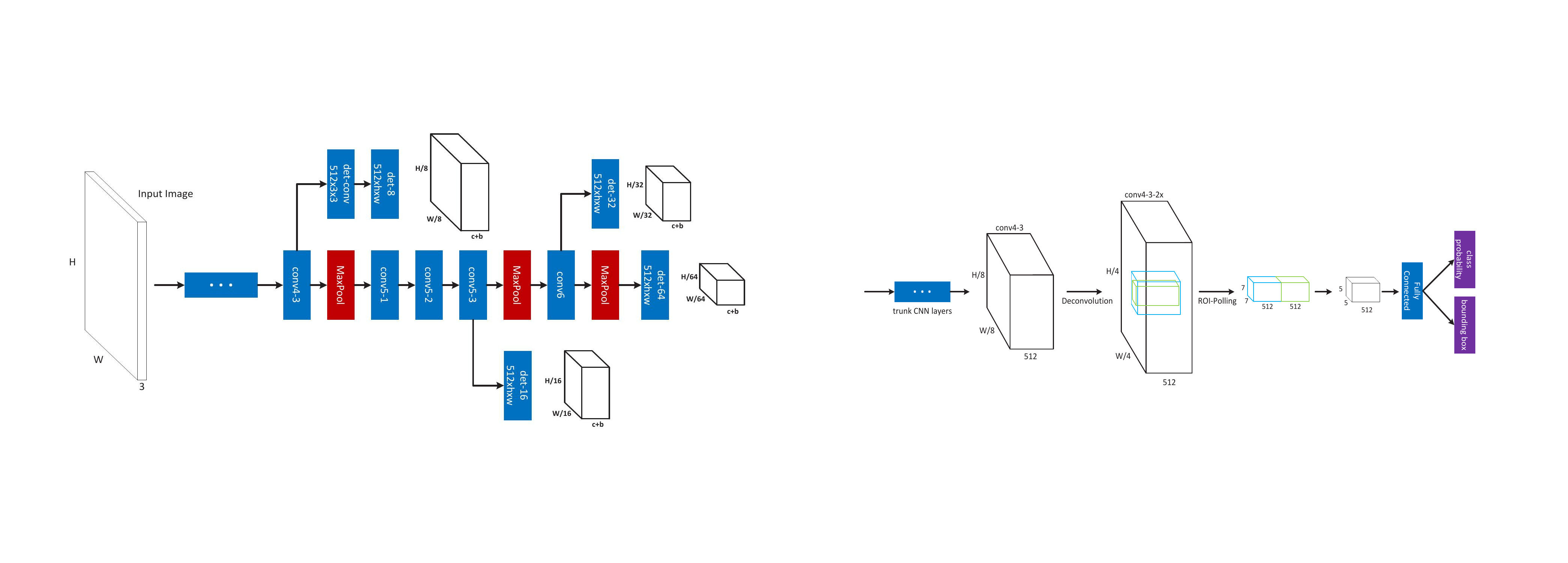

|

ECCV 2016

Cai2016ECCV | |||

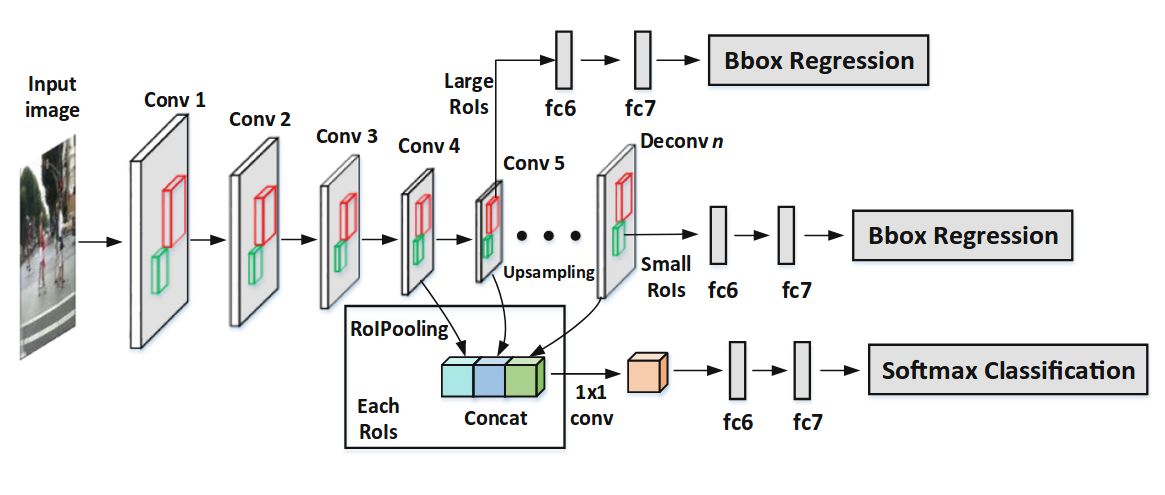

- Multi-scale CNN for fast multi-scale object detection

- Proposal sub-network performs detection at multiple output layers to match objects at different scales

- Complementary scale-specific detectors are combined to produce a strong multi-scale object detector

- Unified network is learned end-to-end by optimizing a multi-task loss

- Feature upsampling by deconvolution reduces the memory and computation costs in contrast to input upsampling

- Evaluation on KITTI and Caltech

| Object Detection State of the Art on KITTI | |||

|

ECCV 2016

Cai2016ECCV | |||

- Multi-scale CNN for fast multi-scale object detection

- Proposal sub-network performs detection at multiple output layers to match objects at different scales

- Complementary scale-specific detectors are combined to produce a strong multi-scale object detector

- Unified network is learned end-to-end by optimizing a multi-task loss

- Feature upsampling by deconvolution reduces the memory and computation costs in contrast to input upsampling

- Evaluation on KITTI and Caltech

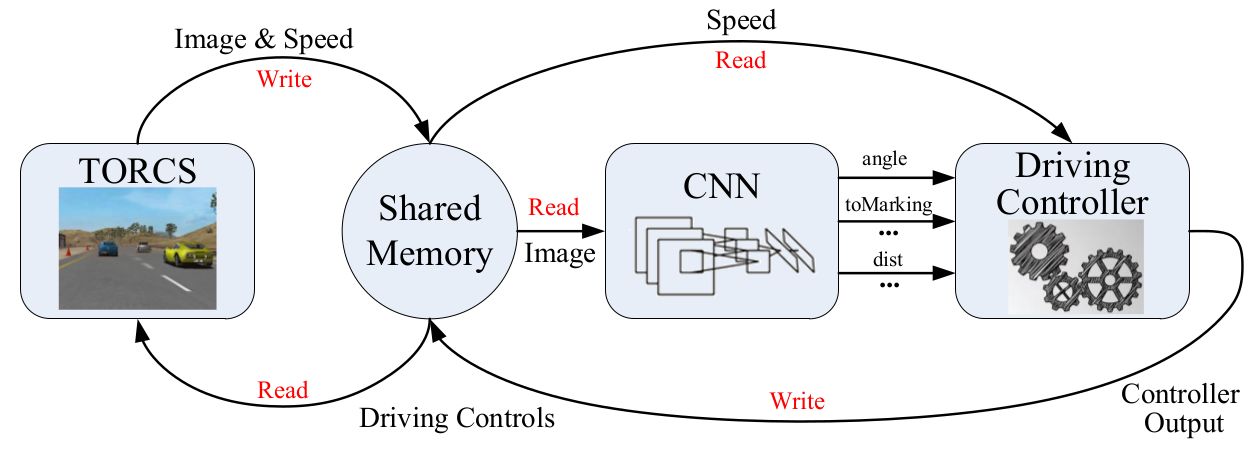

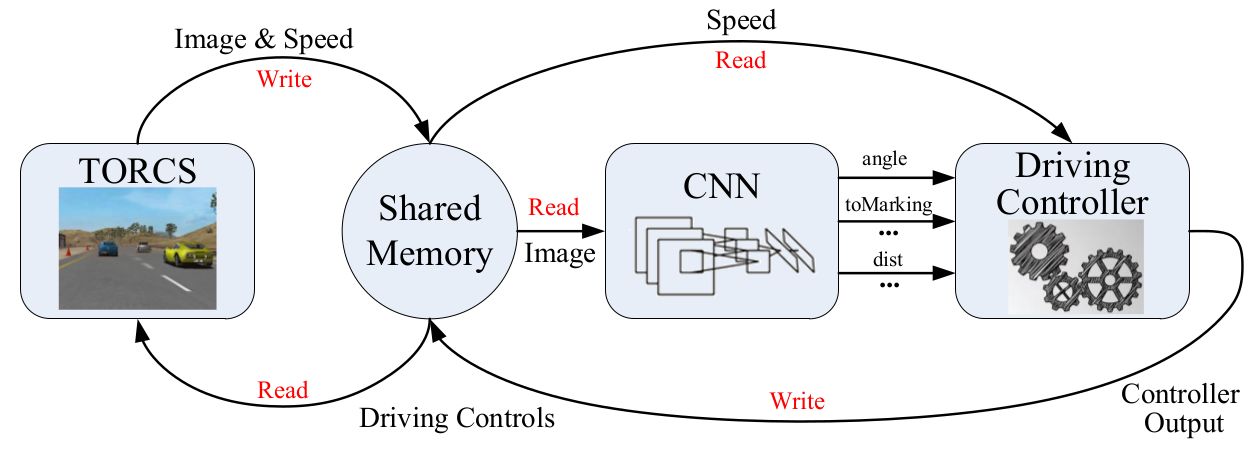

| End-to-End Learning for Autonomous Driving Methods | |||

|

ICCV 2015

Chen2015ICCVa | |||

- Existing methods can be categorized into two major paradigms:

- Mediated perception approaches that parse an entire scene to make a driving decision

- Behavior reflex approaches that directly map an input image to a driving action by a regressor

- Contributions:

- Proposes to map input image to a small number of perception indicators

- These indicators directly relate to the affordance of a road/traffic state for driving

- This representation provides a set of compact descriptions of the scene to enable a controller to drive autonomously

| End-to-End Learning for Autonomous Driving Datasets | |||

|

ICCV 2015

Chen2015ICCVa | |||

- Existing methods can be categorized into two major paradigms:

- Mediated perception approaches that parse an entire scene to make a driving decision

- Behavior reflex approaches that directly map an input image to a driving action by a regressor

- Contributions:

- Proposes to map input image to a small number of perception indicators

- These indicators directly relate to the affordance of a road/traffic state for driving

- This representation provides a set of compact descriptions of the scene to enable a controller to drive autonomously

| Semantic Segmentation Methods | |||

|

CVPR 2014

Chen2014CVPRb | |||

- Automatically segmentation of objects given annotated 3D bounding boxes

- Inference in a binary MRF using appearance models, stereo and/or noisy point clouds, 3D CAD models, and topological constraints

- 10 to 20 labeled objects to train the system

- Evaluated using 3D boxes available on KITTI

- 86 IOU score on segmenting cars (performance of MTurkers)

- It can be used to de-noise MTurk annotations.

- Segmenting big cars is easier than smaller ones.

- Each potential increases performance (CAD model most).

- Same performance with stereo or LIDAR (highest using both)

- Fast: 2 min for training and 44 seconds for full test set

- Robust to low-resolution, saturation, noise, sparse point clouds, depth estimation errors and occlusions

| Semantic Segmentation Methods | |||

|

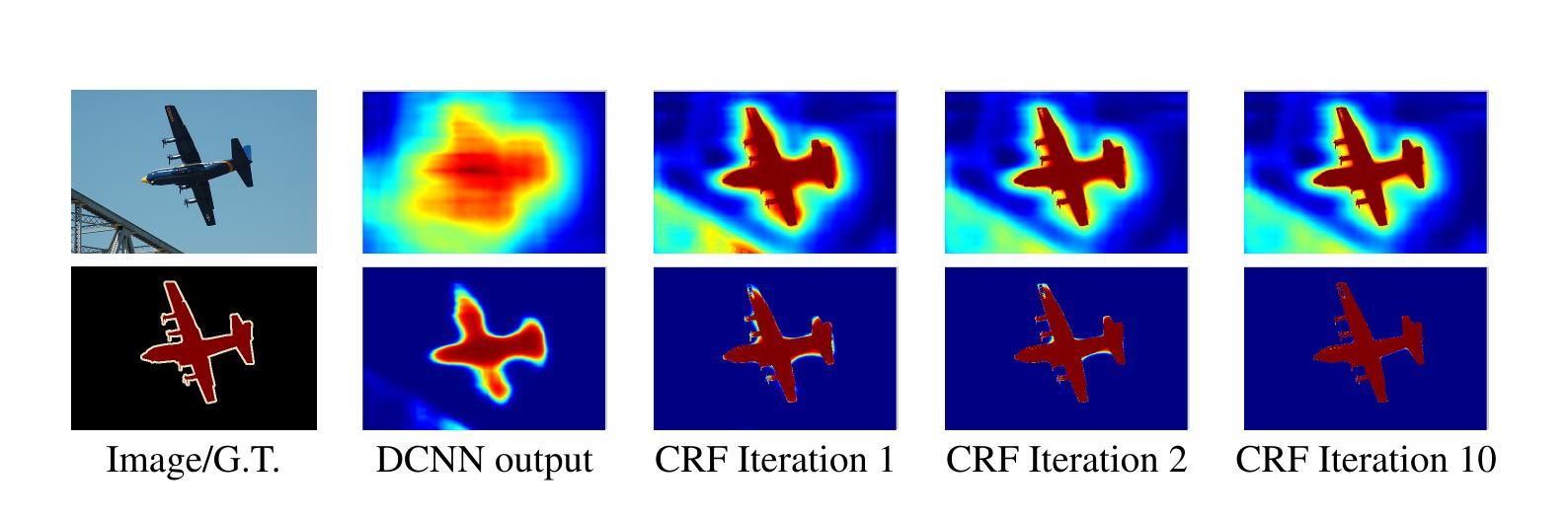

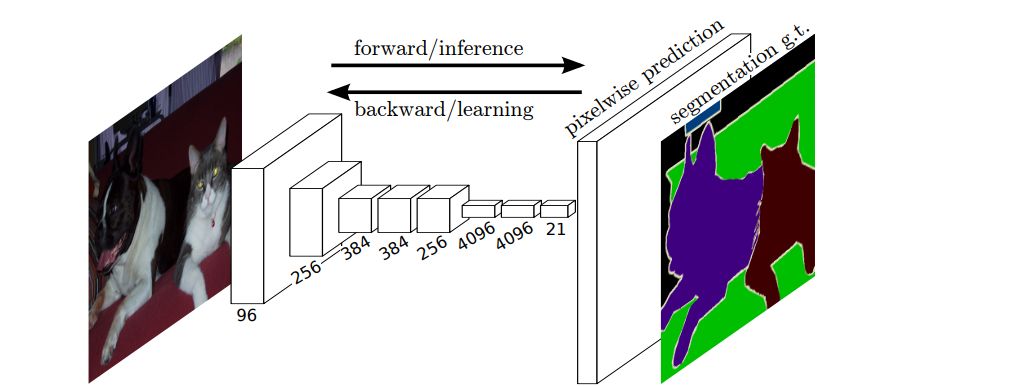

ICLR 2015

Chen2015ICLR | |||

- Final layer of CNNs not sufficiently localized for accurate pixel-level object segmentation

- Overcome poor localization by combining final CNN layer with fully connected Conditional Random Field 1

- Using a fully convolutional VGG-16 network

- Modified convolutional filters by applying the 'atrous' algorithm from wavelet community instead of subsampling

- Significantly advanced the state-of-the-art in PASCAL VOC 2012 in semantic segmentation

1. Krahenbuhl, P. and Koltun, V. Efficient inference in fully connected crfs with gaussian edge potentials. In NIPS, 2011.

| Optical Flow Methods | |||

|

CVPR 2016

Chen2016CVPR | |||

- Discrete optimization over the full space of mappings for optical flow

- Using a classical formulation with a normalized cross-correlation data term

- Effective optimization over large label space with TRW-S

- Min-convolution reduces the complexity of message passing from squared to linear

- Reducing the space of mappings using a smaller resolution and max displacements

- Epic Flow interpolation to fill inconsistent pixel and post processing for subpixel precision

- State-of-the-art results on Sintel and KITTI 2015

| Object Detection Methods | |||

|

NIPS 2015

Chen2015NIPS | |||

- Generating 3D object proposals by placing 3D bounding boxes on the image

- Exploiting stereo and contextual models specific to autonomous driving

- Minimizing an energy function encoding

- object size priors

- ground plane

- depth-related cues free space, point cloud densities, distance to the ground

- Experiments on KITTI

| Multi-view 3D Reconstruction Multi-view Stereo | |||

|

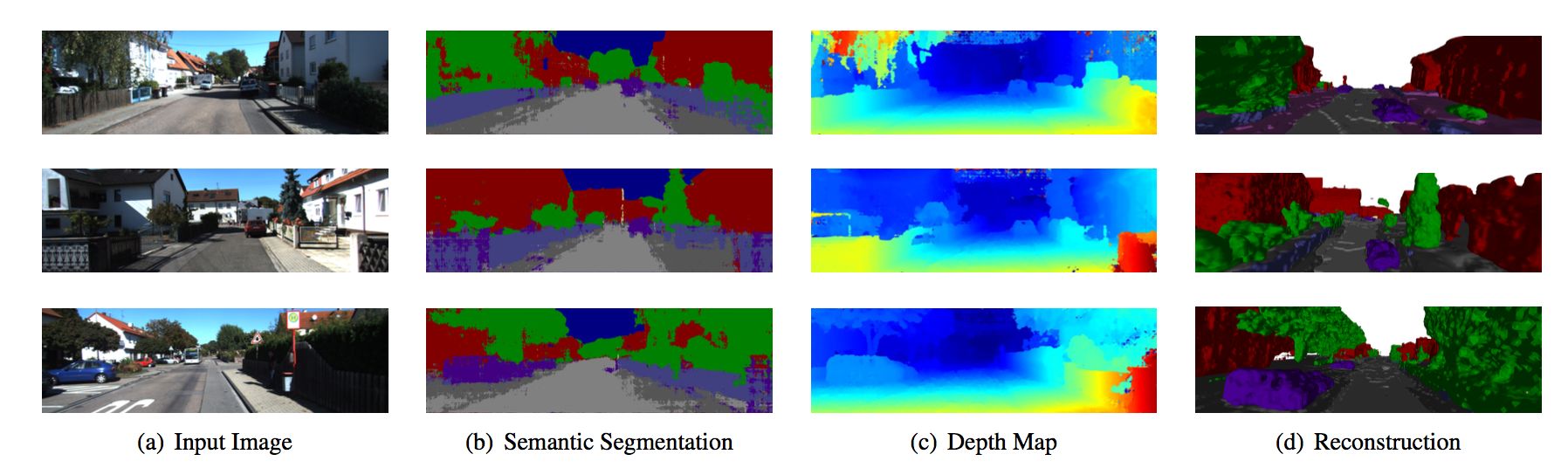

THREEDV 2016

Cherabier2016THREEDV | |||

- Efficient dense 3D reconstruction and semantic segmentation

- Motivation: Current approaches can only handle a low number of semantic labels due to high memory consumption

- Idea: Dividing the scene into blocks in which generally only a subset of labels is active

- Active blocks are determined early and updated during the iterative optimization

- Evaluations on KITTI

- Reduced memory usage with more number of labels, ie 9

| Object Tracking State of the Art on MOT & KITTI | |||

|

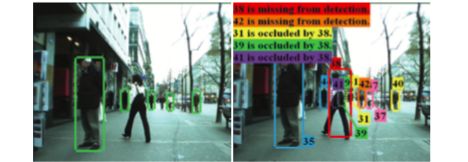

ICCV 2015

Choi2015ICCV | |||

- Near-Online Multi-target Tracking (NOMT) algorithm formulated as global data association between targets and detections in temporal window

- Designing an accurate affinity measure to associate detections and estimate the likelihood of matching

- Aggregated Local Flow Descriptor (ALFD) encodes the relative motion pattern using long term interest point trajectories

- Integration of multiple cues including ALFD metric, target dynamics, appearance similarity and long term trajectory regularization

- Solves the association problem with a parallelized junction tree algorithm

- Best accuracy with significant margins on KITTI and MOT dataset

| Object Tracking Methods | |||

|

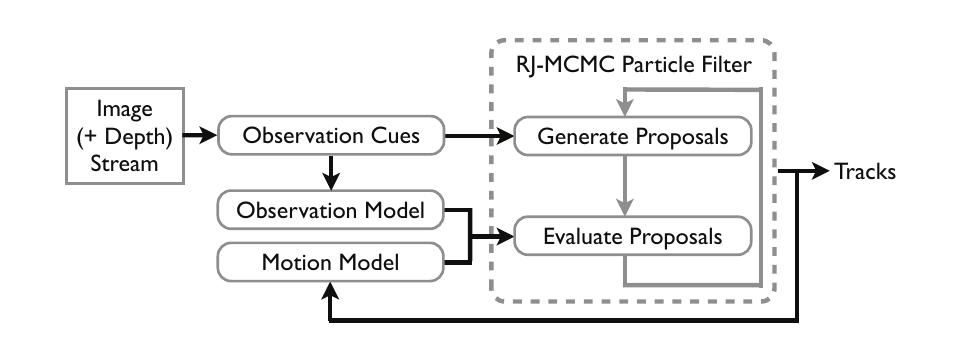

PAMI 2013

Choi2013PAMI | |||

- Tracking multiple, possibly interacting, people from a mobile vision platform

- Joint estimation of camera's ego-motion and the people's trajectory in 3D

- Tracking problem formulated as finding a MAP solution and solved using Reversible Jump Markov Chain Monte Carlo Particle Filtering

- Combination of multiple observation cues face, skin color, depth-based shape, motion, and target specific appearance-based detector

- Modelling interaction with two modes: repulsion and group movement

- Automatic detection of static features for camera estimation

- Evaluation on the challenging ETH dataset and a Kinect RGB-D dataset containing dynamic in- and outdoor scenes

| Multi-view 3D Reconstruction Multi-view Stereo | |||

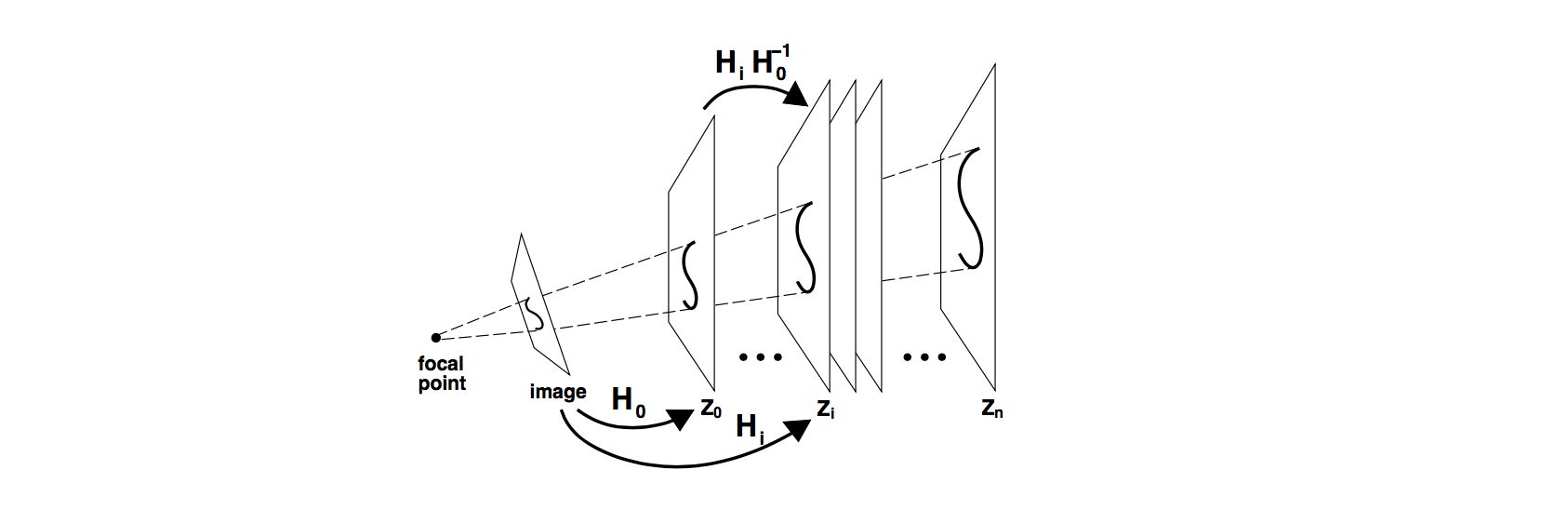

|

CVPR 1996

Collins1996CVPR | |||

- The space-sweep approach to {it true multi-image matching}

- generalizing to any number of images

- linear complexity in the number of images

- using all images in an equal manner

- Algorithm:

- A single plane partitioned into cells is swept through the volume of space along a line perpendicular to the plane (along the Z axis of the scene).

- At each position of the plane along the sweeping path, the number of viewing rays that intersect each cell are tallied by back-projecting point features from each image onto the sweeping plane.

- After accumulating counts from feature points in all of the images, cells containing counts that are large enough are hypothesized as the locations of 3D points.

- The plane then continues its sweep to the next Z location.

| Datasets & Benchmarks | |||

|

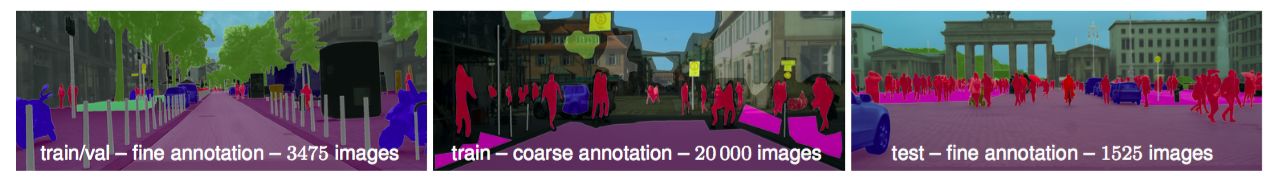

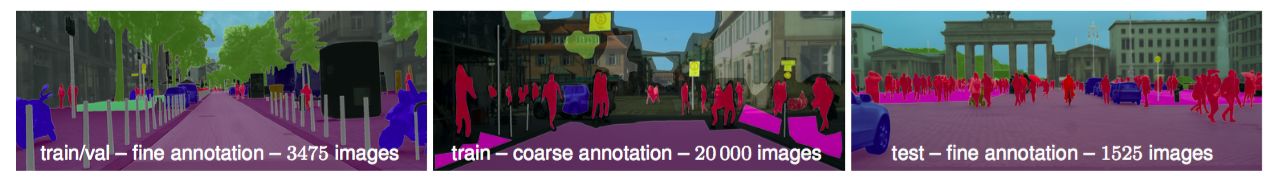

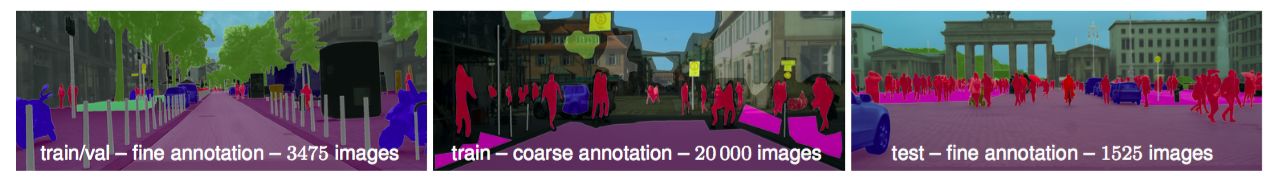

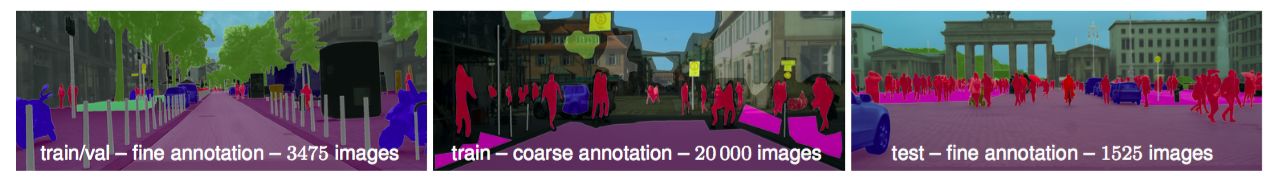

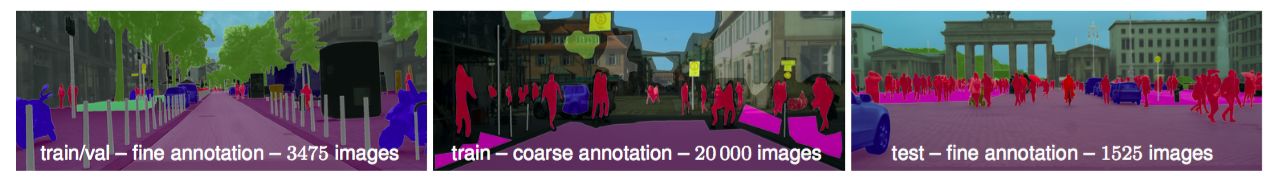

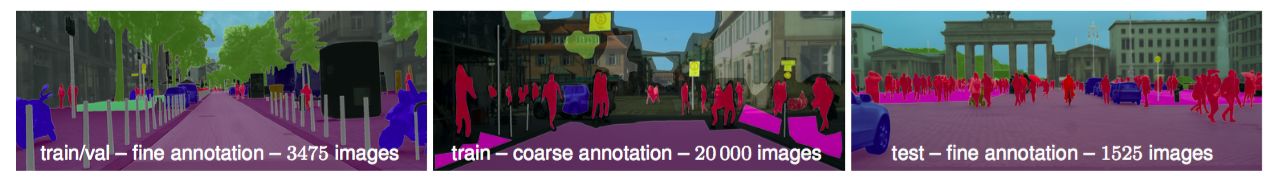

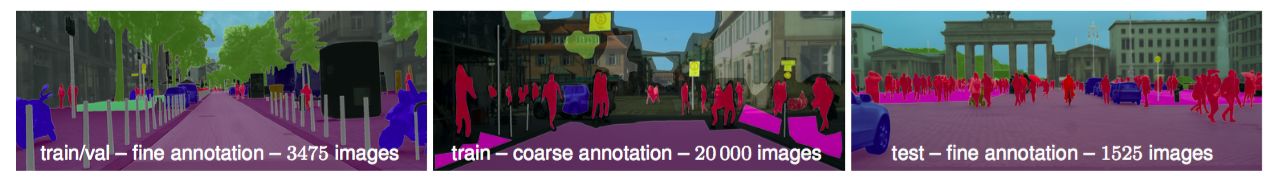

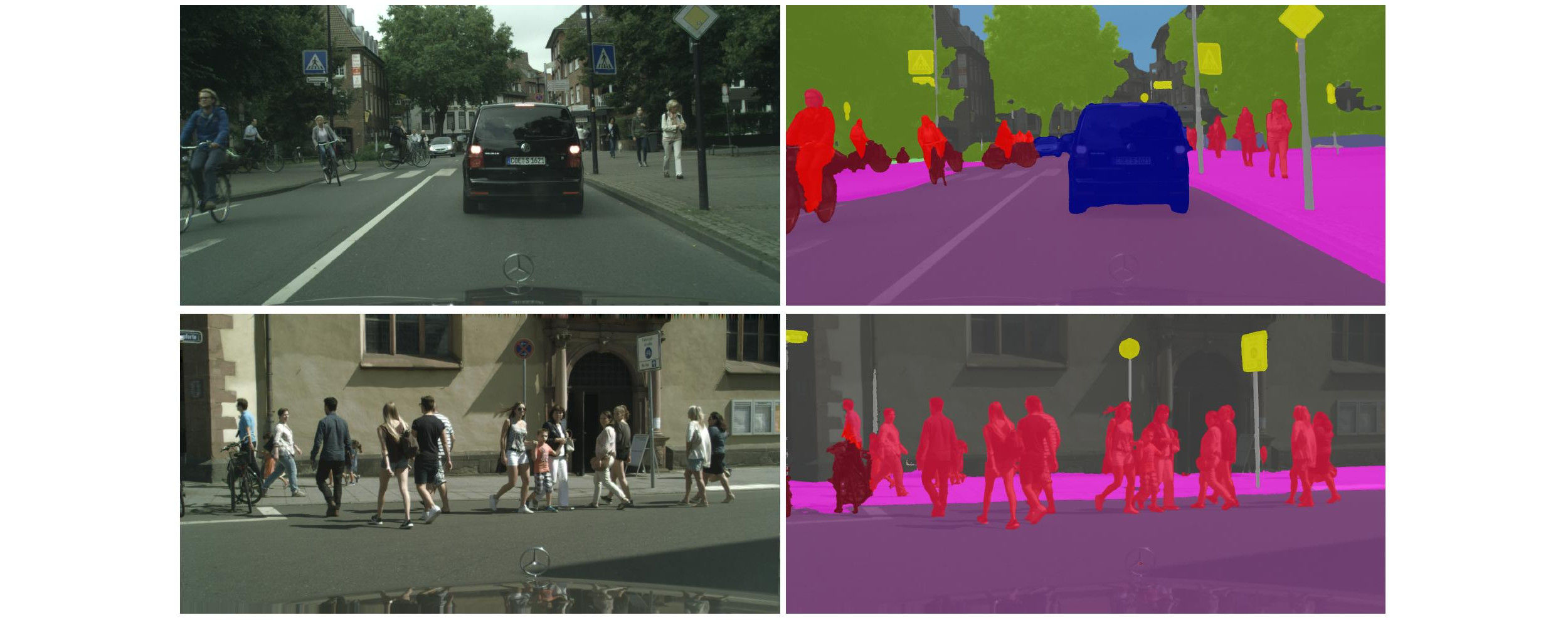

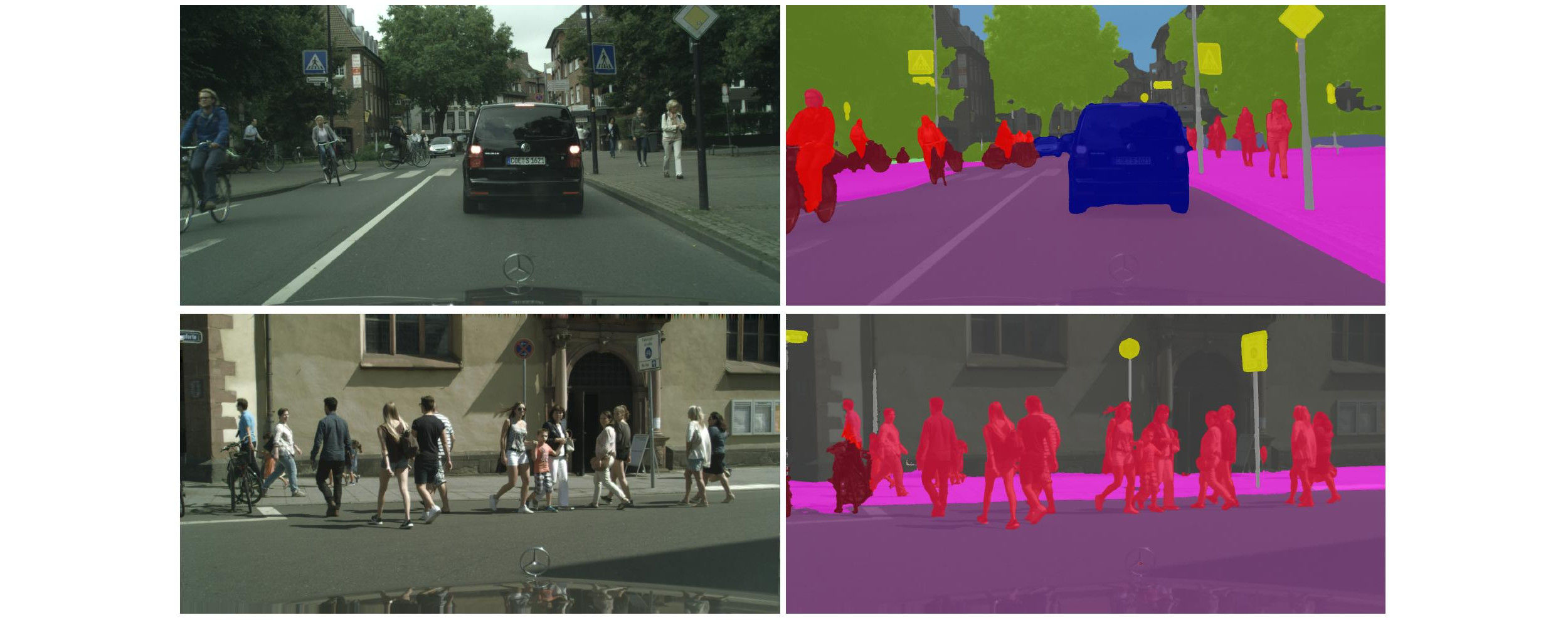

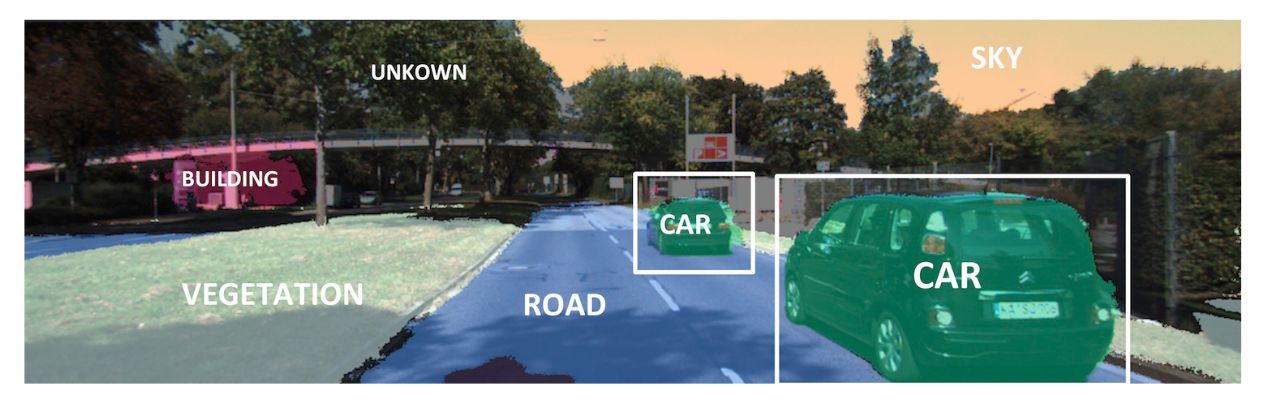

CVPR 2016

Cordts2016CVPR | |||

- A benchmark suite and large-scale dataset to train and test approaches for pixel-level and instance-level semantic labeling

- Specially tailored for autonomous driving in an urban environment

- Cityscapes is comprised of a large, diverse set of stereo video sequences recorded in streets from 50 different cities

- 5000 of these images have high quality pixel-level annotations

- 20000 additional images have coarse annotations to enable methods that leverage large volumes of weakly-labeled data

- Develops a sound evaluation methodology for semantic labeling by introducing a novel evaluation measure

- Evaluates several state-of-the-art approaches on the benchmark

| Datasets & Benchmarks Autonomous Driving Datasets | |||

|

CVPR 2016

Cordts2016CVPR | |||

- A benchmark suite and large-scale dataset to train and test approaches for pixel-level and instance-level semantic labeling

- Specially tailored for autonomous driving in an urban environment

- Cityscapes is comprised of a large, diverse set of stereo video sequences recorded in streets from 50 different cities

- 5000 of these images have high quality pixel-level annotations

- 20000 additional images have coarse annotations to enable methods that leverage large volumes of weakly-labeled data

- Develops a sound evaluation methodology for semantic labeling by introducing a novel evaluation measure

- Evaluates several state-of-the-art approaches on the benchmark

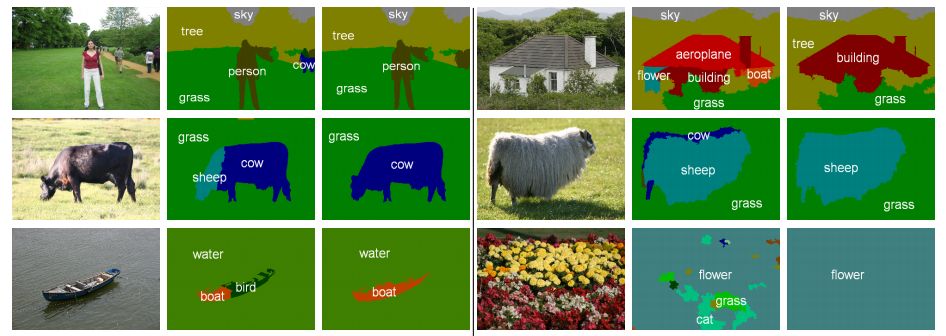

| Semantic Segmentation Problem Definition | |||

|

CVPR 2016

Cordts2016CVPR | |||

- A benchmark suite and large-scale dataset to train and test approaches for pixel-level and instance-level semantic labeling

- Specially tailored for autonomous driving in an urban environment

- Cityscapes is comprised of a large, diverse set of stereo video sequences recorded in streets from 50 different cities

- 5000 of these images have high quality pixel-level annotations

- 20000 additional images have coarse annotations to enable methods that leverage large volumes of weakly-labeled data

- Develops a sound evaluation methodology for semantic labeling by introducing a novel evaluation measure

- Evaluates several state-of-the-art approaches on the benchmark

| Semantic Segmentation Datasets | |||

|

CVPR 2016

Cordts2016CVPR | |||

- A benchmark suite and large-scale dataset to train and test approaches for pixel-level and instance-level semantic labeling

- Specially tailored for autonomous driving in an urban environment

- Cityscapes is comprised of a large, diverse set of stereo video sequences recorded in streets from 50 different cities

- 5000 of these images have high quality pixel-level annotations

- 20000 additional images have coarse annotations to enable methods that leverage large volumes of weakly-labeled data

- Develops a sound evaluation methodology for semantic labeling by introducing a novel evaluation measure

- Evaluates several state-of-the-art approaches on the benchmark

| Semantic Segmentation Metrics | |||

|

CVPR 2016

Cordts2016CVPR | |||

- A benchmark suite and large-scale dataset to train and test approaches for pixel-level and instance-level semantic labeling

- Specially tailored for autonomous driving in an urban environment

- Cityscapes is comprised of a large, diverse set of stereo video sequences recorded in streets from 50 different cities

- 5000 of these images have high quality pixel-level annotations

- 20000 additional images have coarse annotations to enable methods that leverage large volumes of weakly-labeled data

- Develops a sound evaluation methodology for semantic labeling by introducing a novel evaluation measure

- Evaluates several state-of-the-art approaches on the benchmark

| Semantic Instance Segmentation Methods | |||

|

CVPR 2016

Cordts2016CVPR | |||

- A benchmark suite and large-scale dataset to train and test approaches for pixel-level and instance-level semantic labeling

- Specially tailored for autonomous driving in an urban environment

- Cityscapes is comprised of a large, diverse set of stereo video sequences recorded in streets from 50 different cities

- 5000 of these images have high quality pixel-level annotations

- 20000 additional images have coarse annotations to enable methods that leverage large volumes of weakly-labeled data

- Develops a sound evaluation methodology for semantic labeling by introducing a novel evaluation measure

- Evaluates several state-of-the-art approaches on the benchmark

| Semantic Instance Segmentation Datasets | |||

|

CVPR 2016

Cordts2016CVPR | |||

- A benchmark suite and large-scale dataset to train and test approaches for pixel-level and instance-level semantic labeling

- Specially tailored for autonomous driving in an urban environment

- Cityscapes is comprised of a large, diverse set of stereo video sequences recorded in streets from 50 different cities

- 5000 of these images have high quality pixel-level annotations

- 20000 additional images have coarse annotations to enable methods that leverage large volumes of weakly-labeled data

- Develops a sound evaluation methodology for semantic labeling by introducing a novel evaluation measure

- Evaluates several state-of-the-art approaches on the benchmark

| Semantic Instance Segmentation Metrics | |||

|

CVPR 2016

Cordts2016CVPR | |||

- A benchmark suite and large-scale dataset to train and test approaches for pixel-level and instance-level semantic labeling

- Specially tailored for autonomous driving in an urban environment

- Cityscapes is comprised of a large, diverse set of stereo video sequences recorded in streets from 50 different cities

- 5000 of these images have high quality pixel-level annotations

- 20000 additional images have coarse annotations to enable methods that leverage large volumes of weakly-labeled data

- Develops a sound evaluation methodology for semantic labeling by introducing a novel evaluation measure

- Evaluates several state-of-the-art approaches on the benchmark

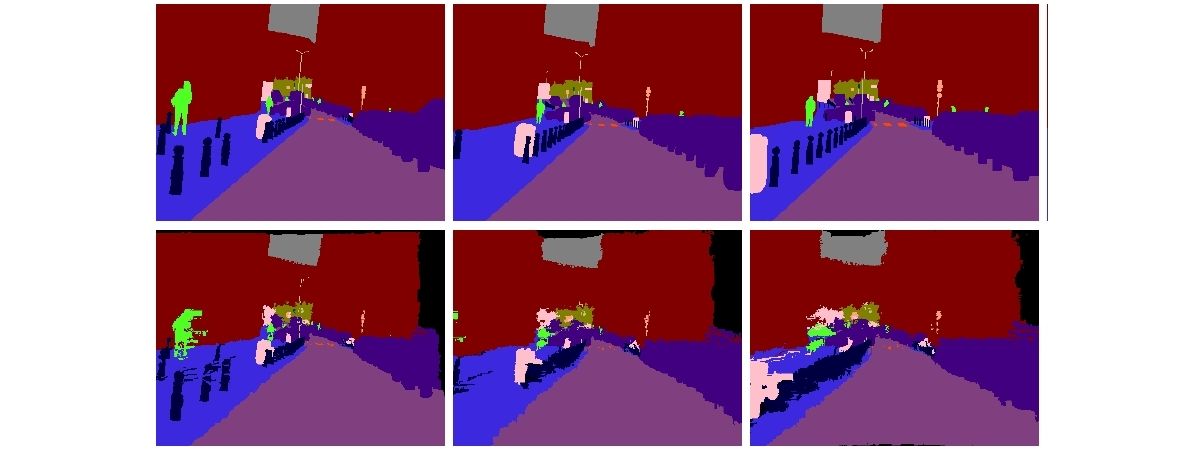

| Semantic Segmentation Methods | |||

|

GCPR 2014

Cordts2014GCPR | |||

- Existing stixels representations are solely based on dense stereo and a strongly simplifying world model with a nearly planar road surface and perpendicular obstacles

- Whenever depth measurements are noisy or the world model is violated, Stixels are prone to error

- Contributions:

- Shows a principled way to incorporate top-down prior knowledge from object detectors into the Stixel generation

- The additional information not only improves the representation of the detected object classes, but also of other parts in the scene, e.g. the freespace

- Evaluates on stereo sequence introduced in the paper

| Multi-view 3D Reconstruction Multi-view Stereo | |||

|

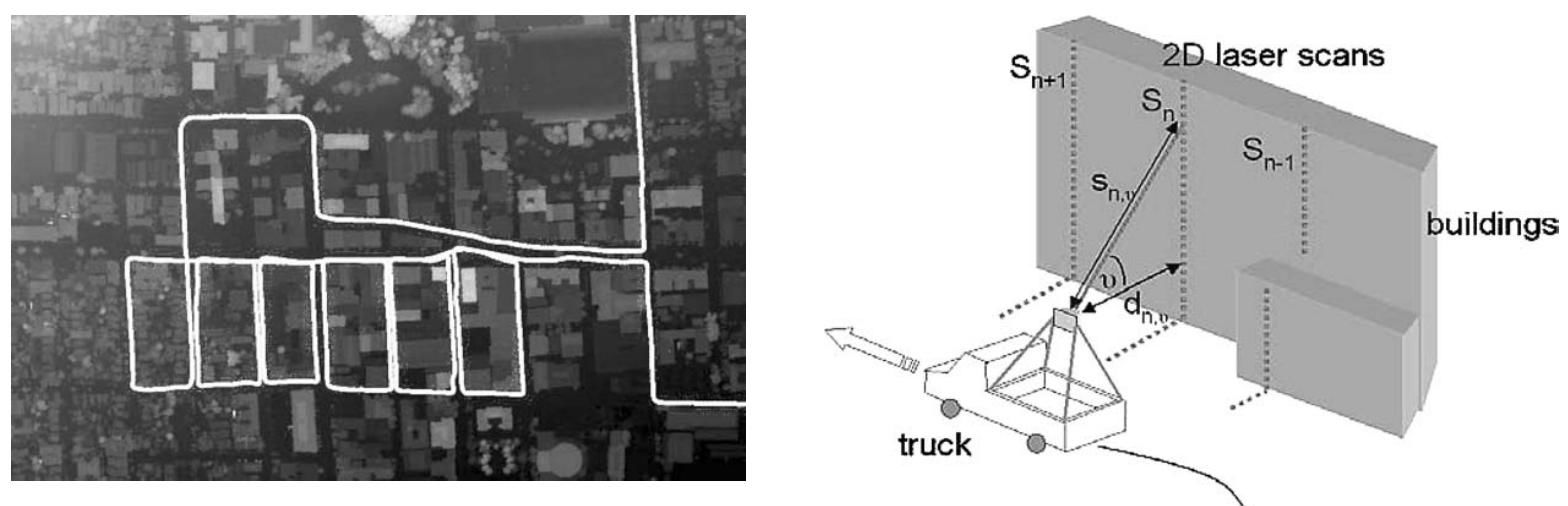

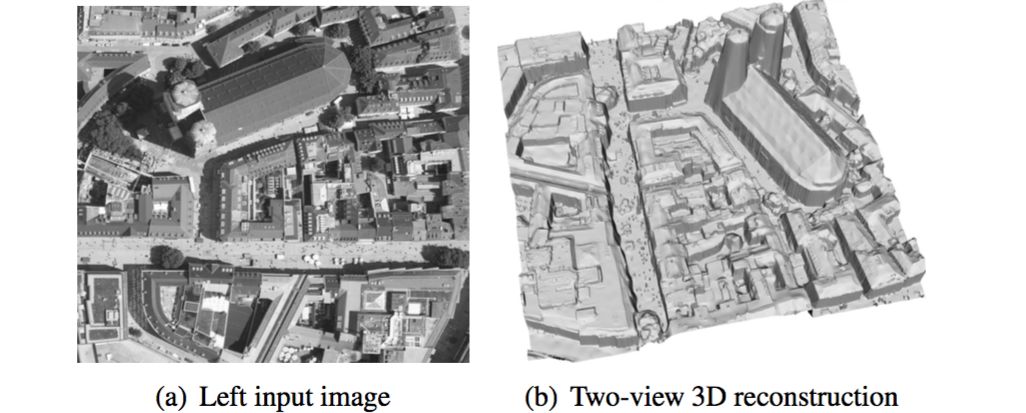

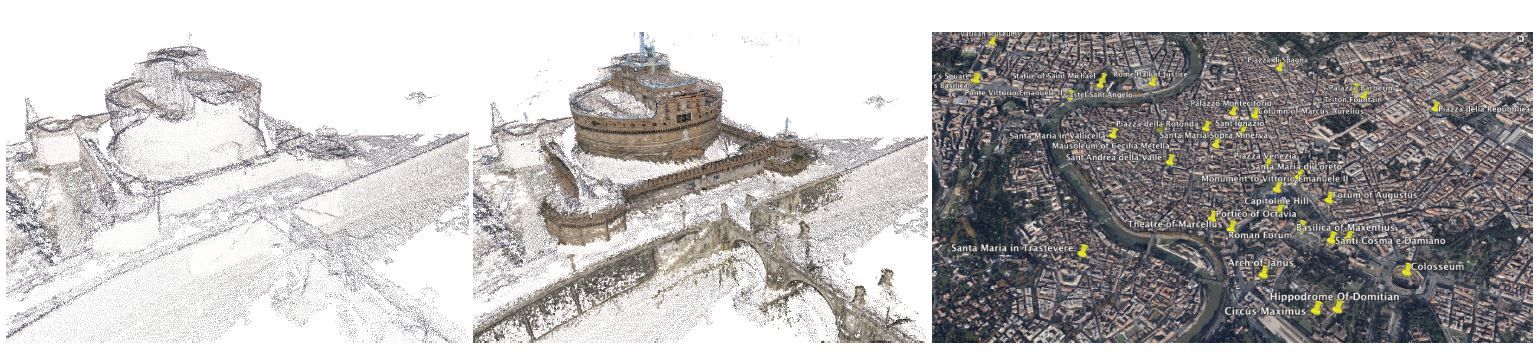

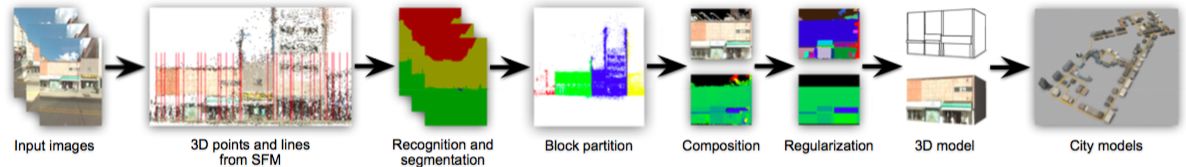

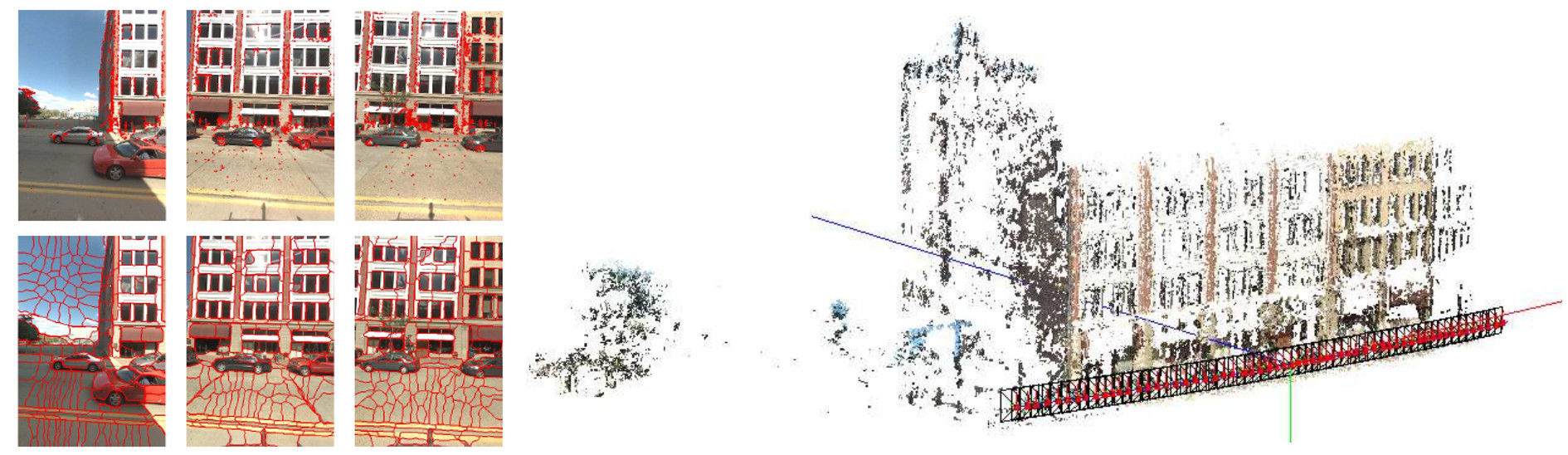

IJCV 2008

Cornelis2008IJCV | |||

- Fast and memory efficient 3D city modelling

- Application: a pre-visualization of a required traffic manoeuvre for navigation systems

- Simplified geometry assumptions while still having compact models

- Adapted dense stereo algorithm with ruled-surface approximation

- Integrating object recognition for detecting cars in video and then localizing them in 3D (not real-time yet)

- 3D reconstruction and localization benefit from each other.

- Tested on three stereo sequences annotated with GPS/INS measurements

| Mapping, Localization & Ego-Motion Estimation Ego-Motion Estimation | |||

|

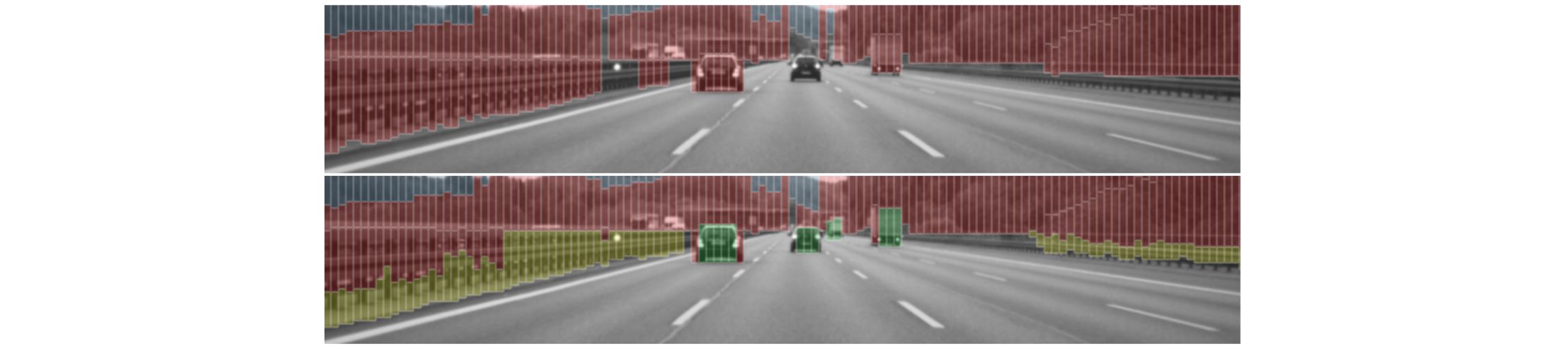

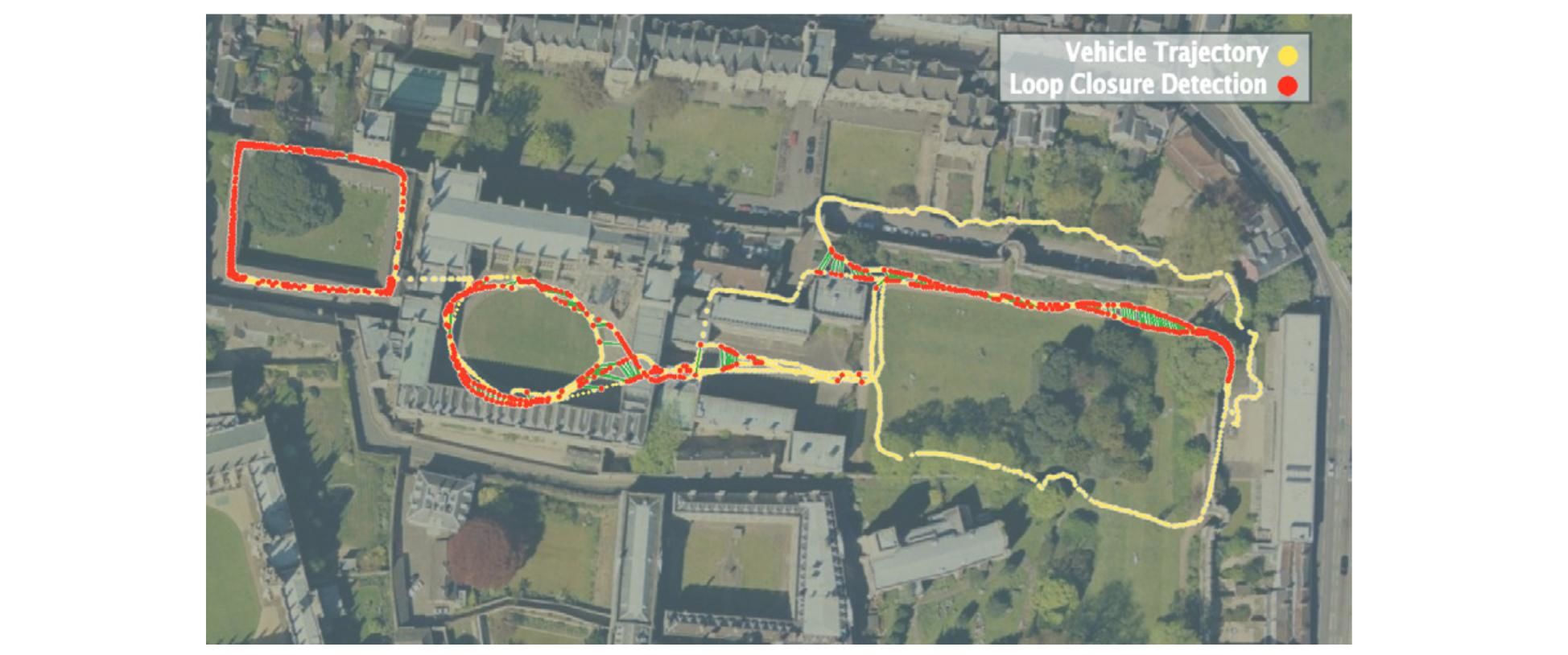

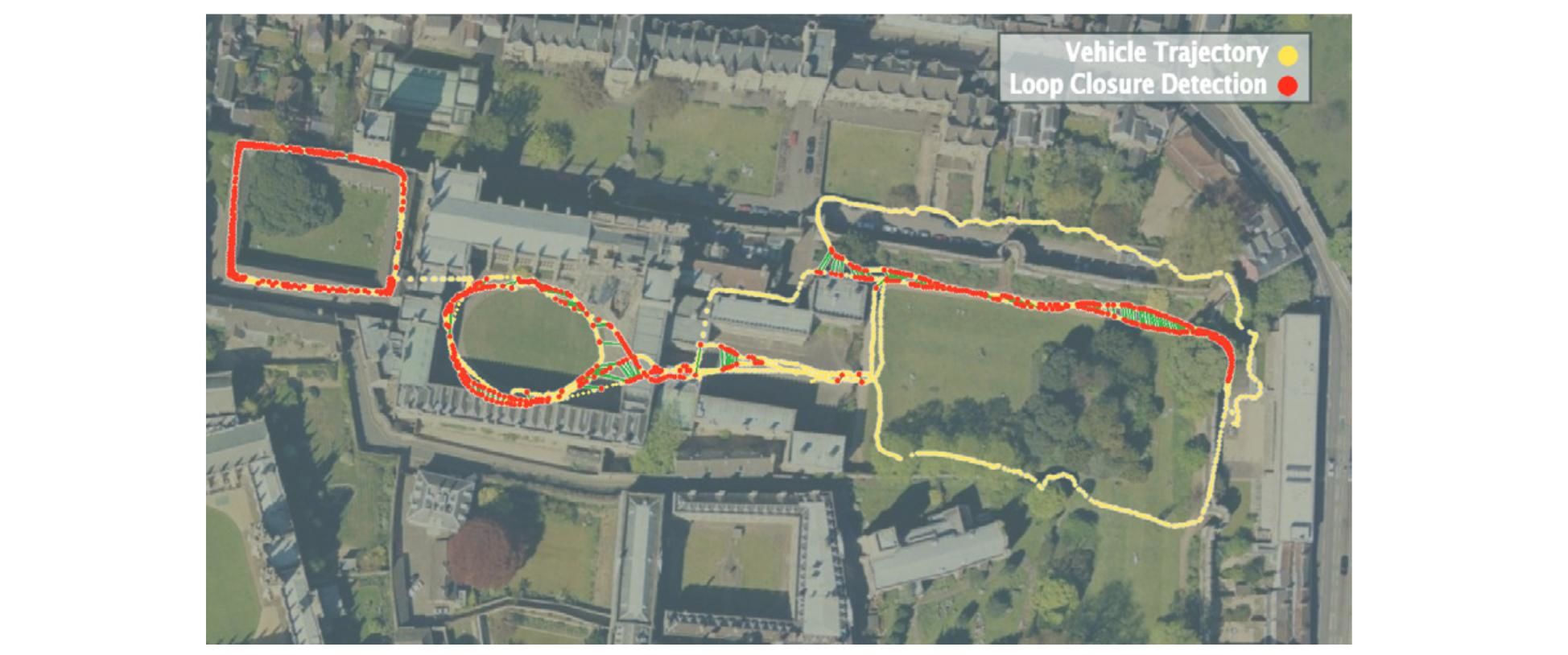

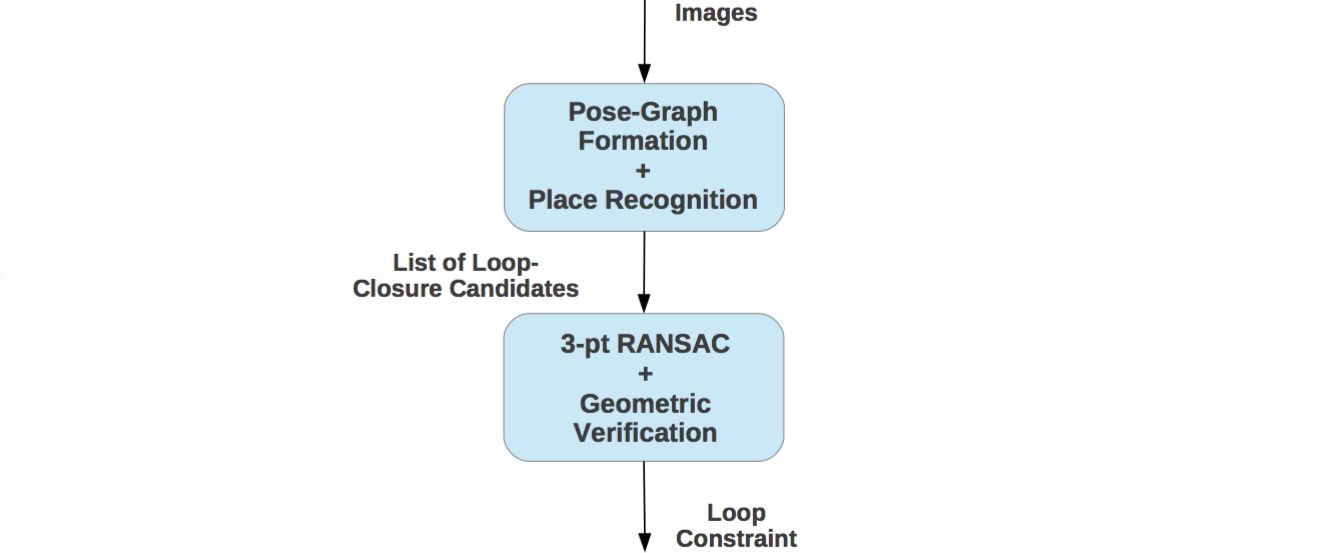

IJRR 2008

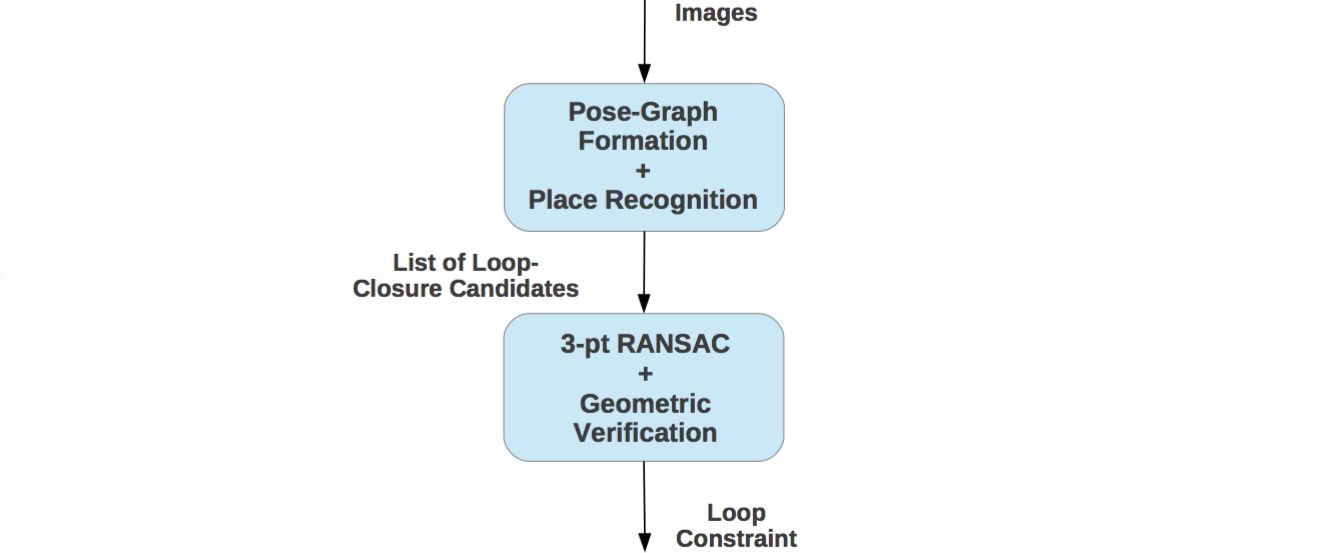

Cummins2008IJRR | |||

- Probabilistic approach to recognize places based on their appearance (loop closure detection)

- Topological SLAM by learning a generative model of place appearances using bag-of-words

- Combination of appearance words occur because they are generated from common objects

- Approximation of a discrete distribution using Chow Liu algorithm

- Robust in visually repetitive environments

- Complexity linear in number of places and the algorithm is suitable for online loop closure detection in mobile robotics

- Demonstration by detecting loop closures over 2km path in an initially unknown outdoor environment

| Mapping, Localization & Ego-Motion Estimation Metrics | |||

|

IJRR 2008

Cummins2008IJRR | |||

- Probabilistic approach to recognize places based on their appearance (loop closure detection)

- Topological SLAM by learning a generative model of place appearances using bag-of-words

- Combination of appearance words occur because they are generated from common objects

- Approximation of a discrete distribution using Chow Liu algorithm

- Robust in visually repetitive environments

- Complexity linear in number of places and the algorithm is suitable for online loop closure detection in mobile robotics

- Demonstration by detecting loop closures over 2km path in an initially unknown outdoor environment

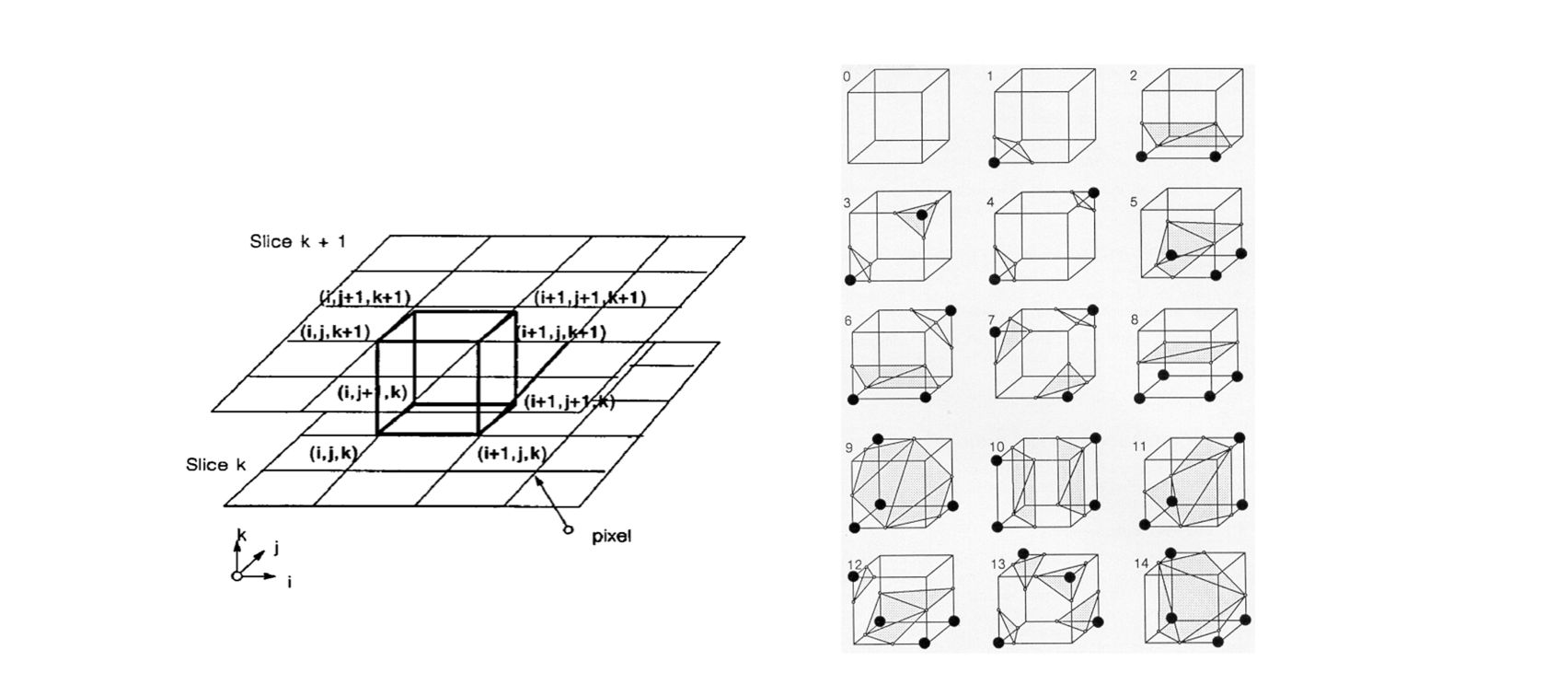

| Multi-view 3D Reconstruction Multi-view Stereo | |||

|

SIGGRAPH 1996

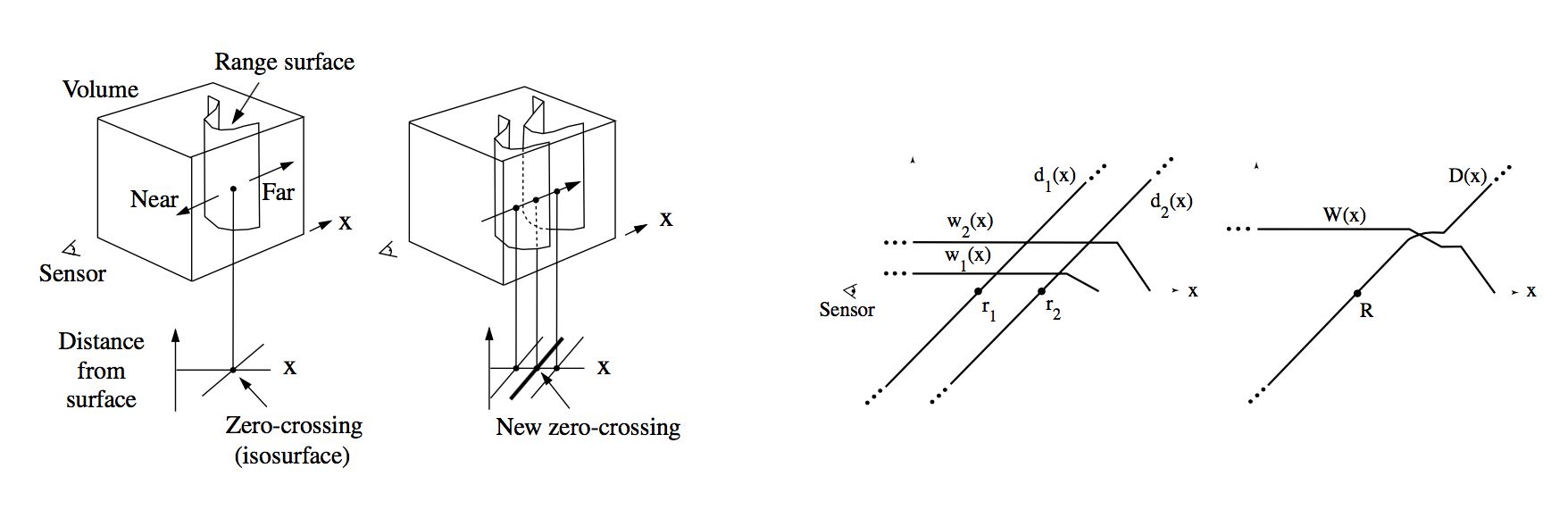

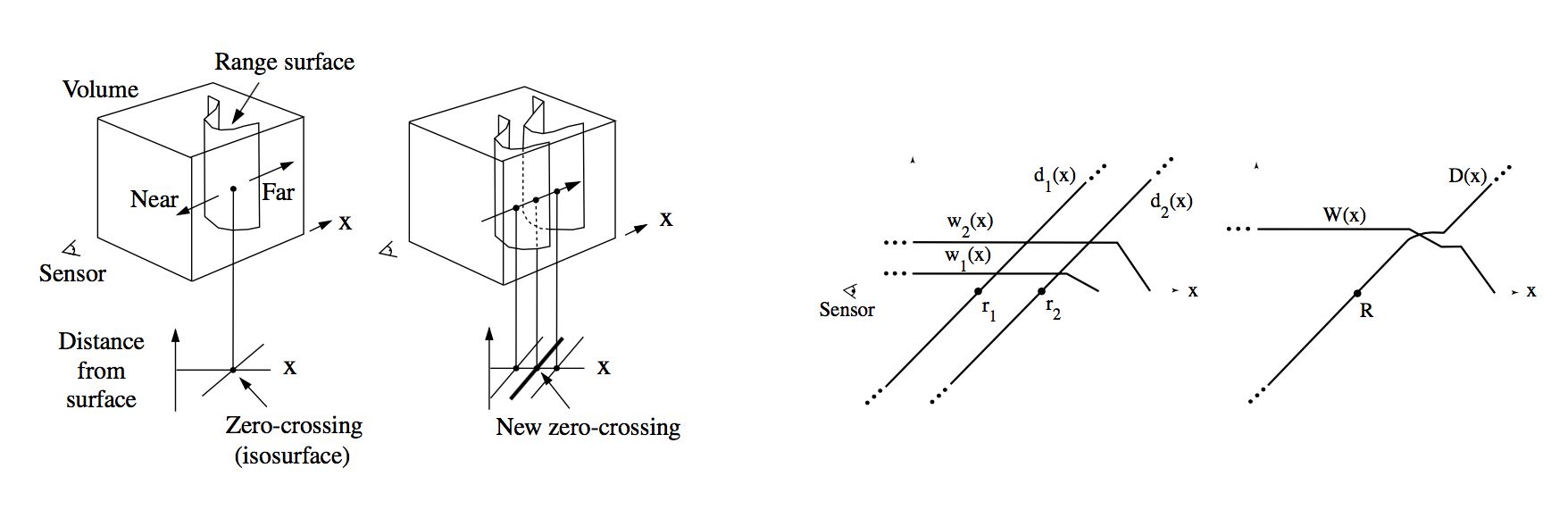

Curless1996SIGGRAPH | |||

- A volumetric representation for integrating a large number of range images

- Incremental and order independent updating based on a cumulative weighted signed distance function (TSDF)

- Representation of directional uncertainty with weights

- Utilization of all range data

- No restrictions on topological type, ie without simplifying assumptions

- Time and space efficiency

- The ability to fill gaps in the reconstruction using space carving

- Robustness in the presence of outliers

- Final manifold by extracting an isosurface from the volumetric grid

- Easy to parallelize in the implementation

| Mapping, Localization & Ego-Motion Estimation State of the Art on KITTI | |||

|

SIGGRAPH 1996

Curless1996SIGGRAPH | |||

- A volumetric representation for integrating a large number of range images

- Incremental and order independent updating based on a cumulative weighted signed distance function (TSDF)

- Representation of directional uncertainty with weights

- Utilization of all range data

- No restrictions on topological type, ie without simplifying assumptions

- Time and space efficiency

- The ability to fill gaps in the reconstruction using space carving

- Robustness in the presence of outliers

- Final manifold by extracting an isosurface from the volumetric grid

- Easy to parallelize in the implementation

| Mapping, Localization & Ego-Motion Estimation State of the Art on KITTI | |||

|

ECMR 2015

Cvisic2015ECMR | |||

- Stereo visual odometry based on feature selection and tracking (SOFT) for us: a good taxonomy is provided in intro

- Careful selection of a subset of stable features and their tracking through the frames

- Separate estimation of rotation (the five point) and translation (the three point)

- Evaluated on KITTI, outperforming all

- Pose error of 1.03 with processing speed above 10 Hz

- A modified IMU-aided version of the algorithm

- An IMU for outlier rejection and Kalman filter for rotation refinement

- Fast and suitable for embedded systems at 20 Hz on an ODROID U3 ARM-based embedded computer

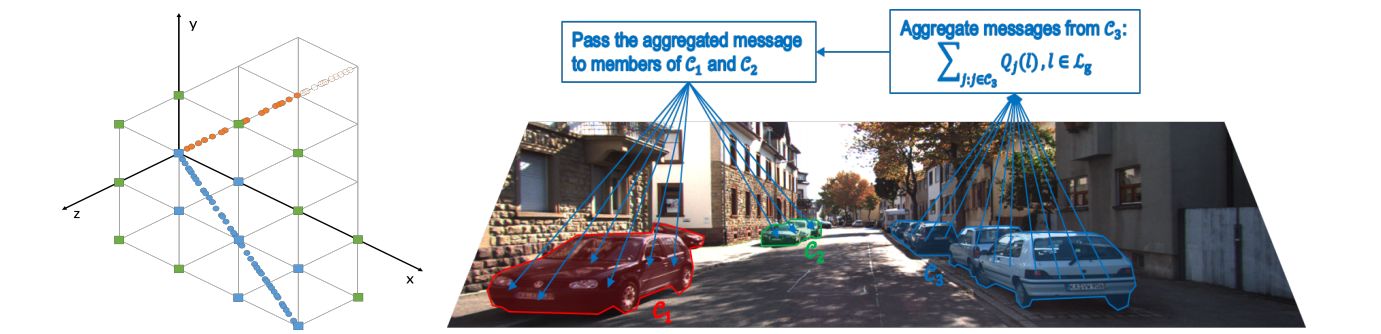

| Semantic Instance Segmentation Methods | |||

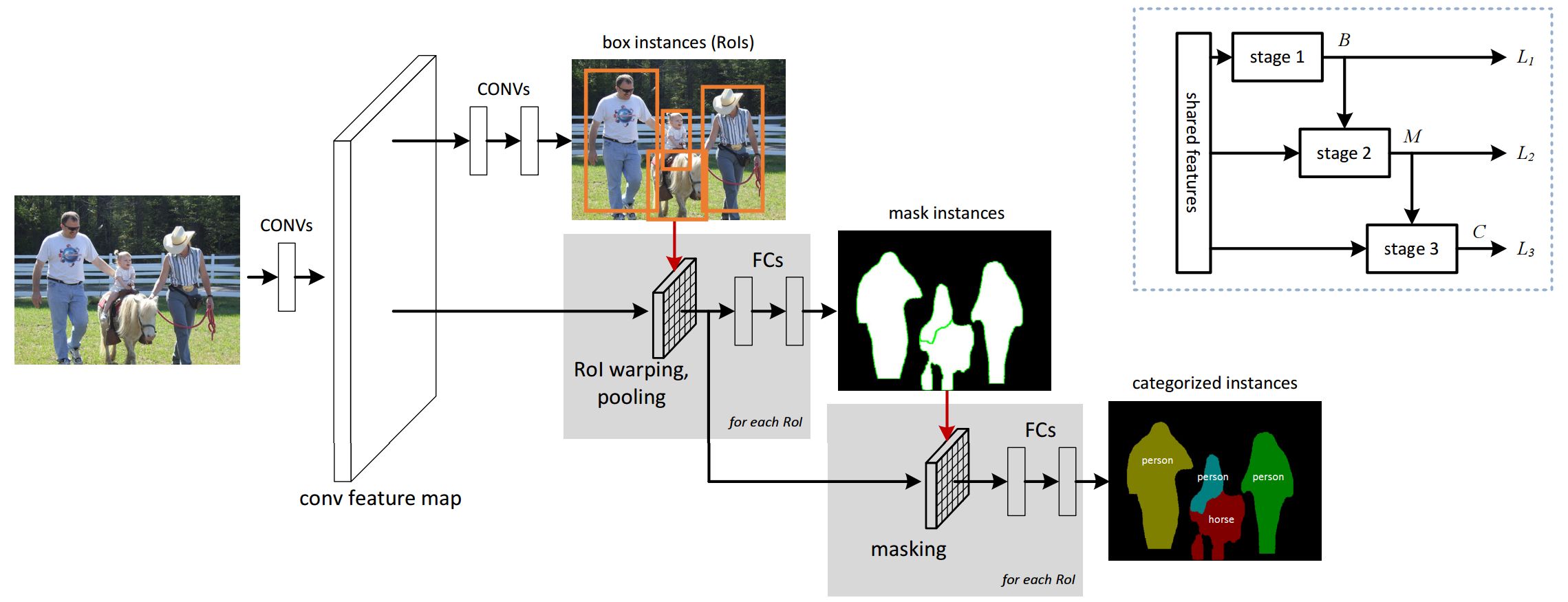

|

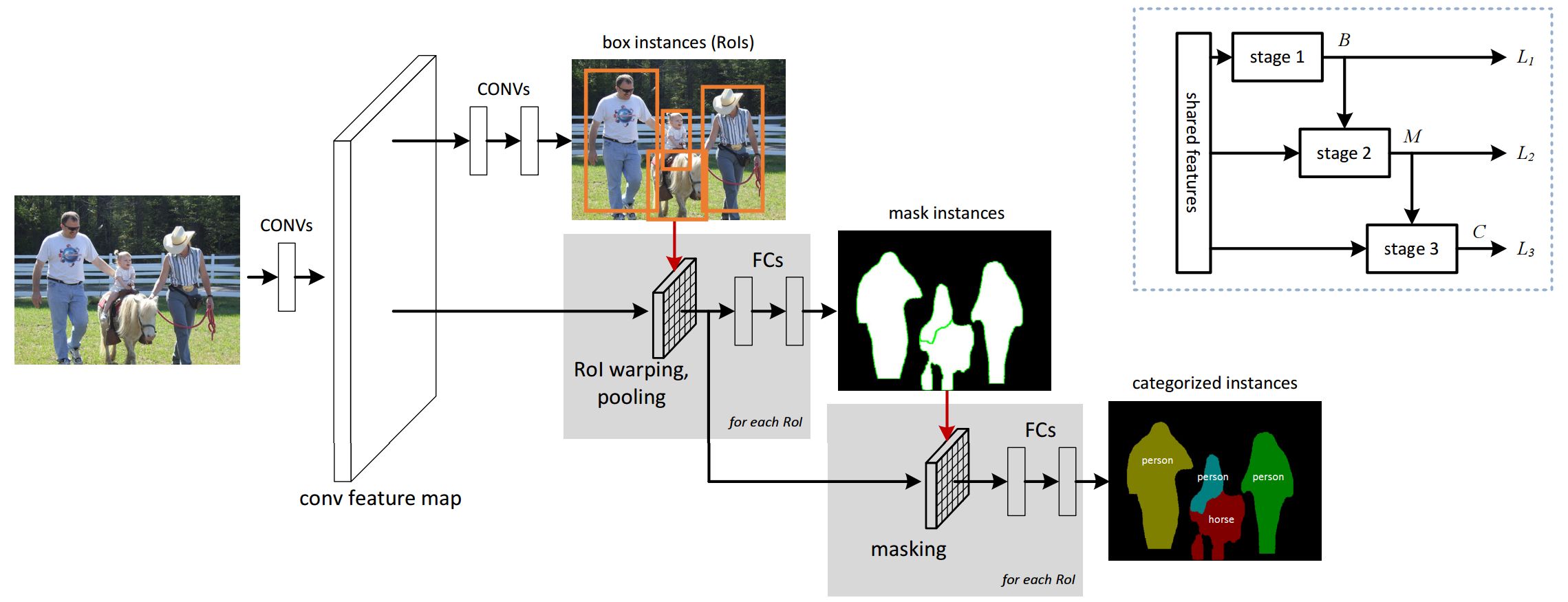

CVPR 2016

Dai2016CVPR | |||

- Limitations of existing methods for instance segmentation using CNNs

- Slow at inference time because they require mask propasal methods

- Don't take advantage of deep features and large amount of training data

- End-to-end training of Multi-task Network Cascades for 3 tasks of differentiating instances, estimating masks & categorizing objects

- Two orders of magnitude faster than previous systems

- State-of-the-art on PASCAL VOC & MS COCO 2015

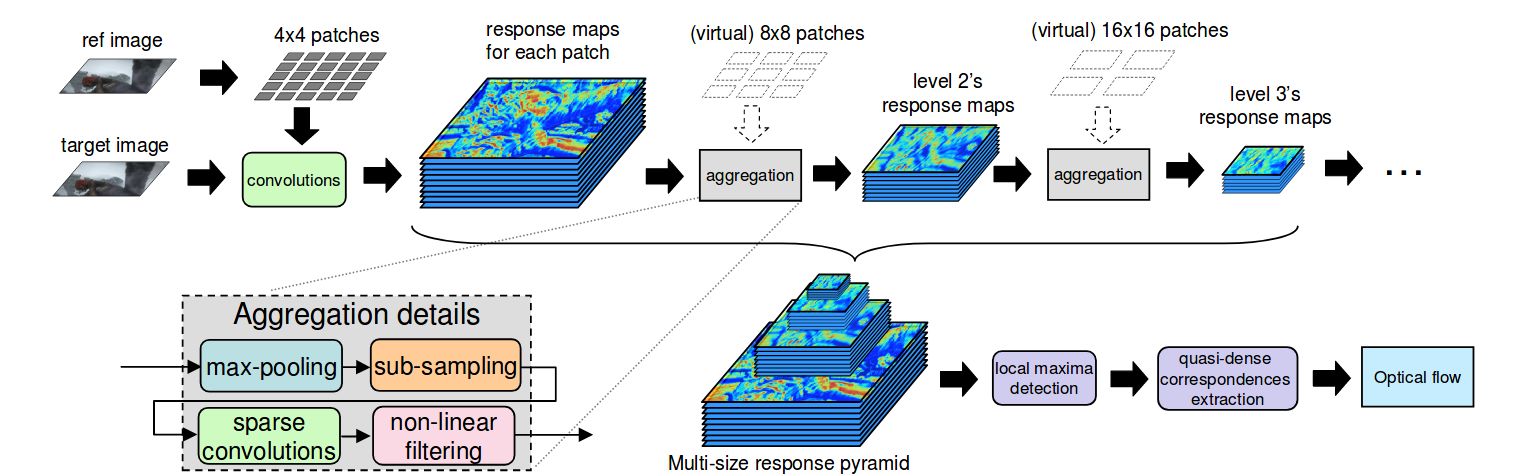

| 3D Scene Flow Methods | |||

|

CVPR 2016

Dai2016CVPR | |||

- Limitations of existing methods for instance segmentation using CNNs

- Slow at inference time because they require mask propasal methods

- Don't take advantage of deep features and large amount of training data

- End-to-end training of Multi-task Network Cascades for 3 tasks of differentiating instances, estimating masks & categorizing objects

- Two orders of magnitude faster than previous systems

- State-of-the-art on PASCAL VOC & MS COCO 2015

| Object Detection Methods | |||

|

CVPR 2005

Dalal2005CVPR | |||

- Show that Histograms of oriented Gradient (HOG) descriptors outperforms previous feature sets for human detection

- Analyze each stage of the computation on the performance of the approach

- Near-perfect separation on the original MIT pedestrian database

- Introduction of a more challenging dataset containing over 1800 annotated human images with large range of pose variations and backgrounds

| Multi-view 3D Reconstruction Multi-view Stereo | |||

|

CVPR 2013

Dame2013CVPR | |||

- Incorporation of object-specific knowledge into SLAM

- Current approaches

- Limited to the reconstruction of visible surfaces

- Photo-consistency error, sensitive to specularities

- Initial dense representation using photo-consistency

- Detection using a standard 2D sliding-window object-class detector

- A novel energy to find the 6D pose and shape of the object

- Shape-prior represented using GP-LVM

- Efficient fusion of the dense reconstruction with the reconstructed object shape

- Better reconstruction in terms of clarity, accuracy and completeness

- Faster and more reliable convergence of the segmentation with 3D data

- Evaluated using dense reconstruction from KinectFusion

| Mapping, Localization & Ego-Motion Estimation Ego-Motion Estimation | |||

|

GCPR 2016

Deigmoeller2016GCPR | |||

- Ego-motion estimation from stereo avoiding temporal filtering and relying exclusively on pure measurements

- Stereo camera set-up is the easiest and leads currently to the most accurate results

- Two parts

- Scene flow estimation with a combination of disparity and optical flow on Harris corners

- Pose estimation with a P6P method (perspective from 6 points) encapsulated in a RANSAC framework

- Careful selections of precise measurements by purely varying geometric constraints on optical flow measure

- Slim method within the top ranks of KITTI without filtering like bundle adjustment or Kalman filtering

| Mapping, Localization & Ego-Motion Estimation Localization | |||

|

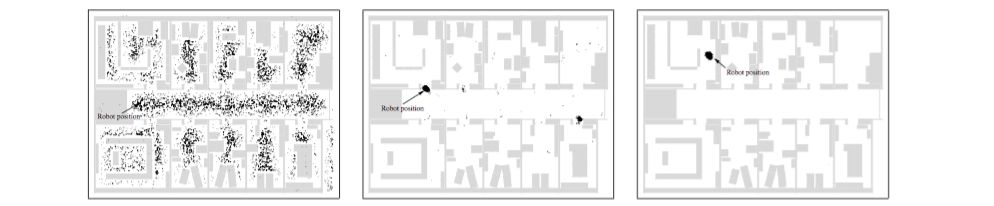

ICRA 1999

Dellaert1999ICRA | |||

- Presents the Monte Carlo method for localization for mobile robots

- Represents uncertainty by maintaining a set of samples that are randomly drawn from it instead of describing the probability density function itself

- Contributions:

- In contrast to Kalman filtering based techniques, it is able to represent multi-modal distributions and thus can globally localize a robot

- Reduces the amount of memory required compared to grid-based Markov localization

- More accurate than Markov localization with a fixed cell size, as the state represented in the samples is not discretized

- Evaluates on datasets introduced in the paper

| Mapping, Localization & Ego-Motion Estimation Ego-Motion Estimation | |||

|

Square Root SAM: Simultaneous Localization and Mapping via Square Root Information Smoothing[scholar]

|

IJRR 2006

Dellaert2006IJRR | ||

| Datasets & Benchmarks | |||

|

CVPR 2009

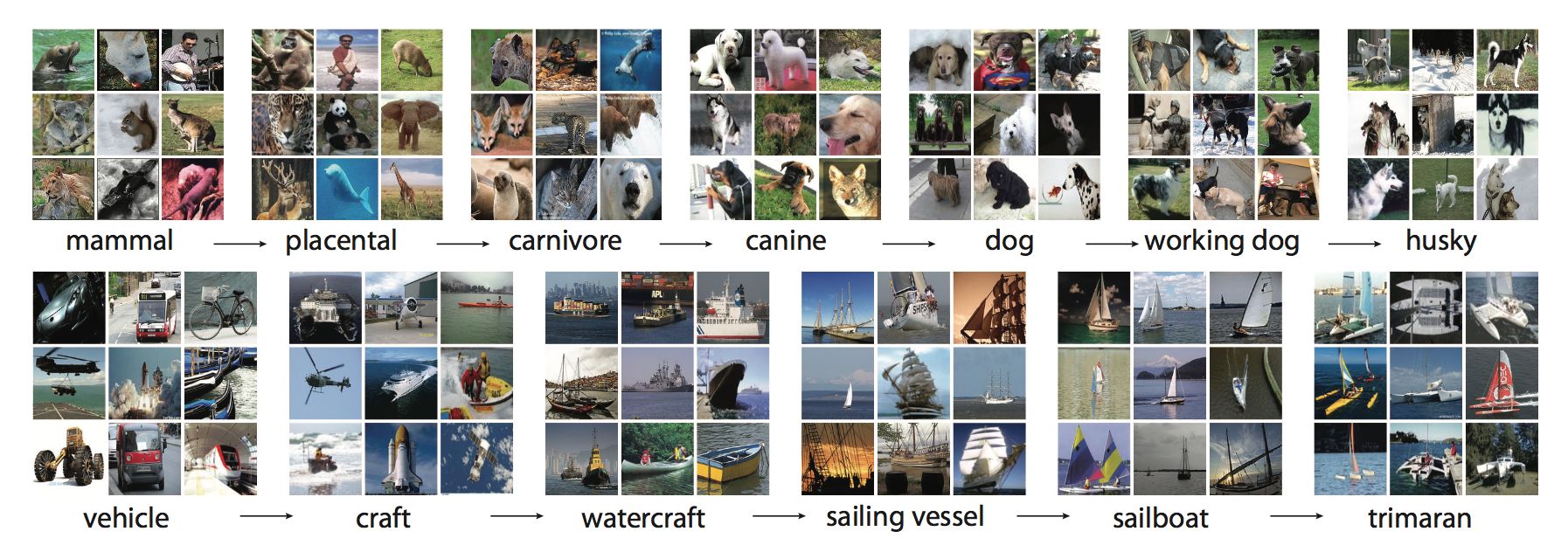

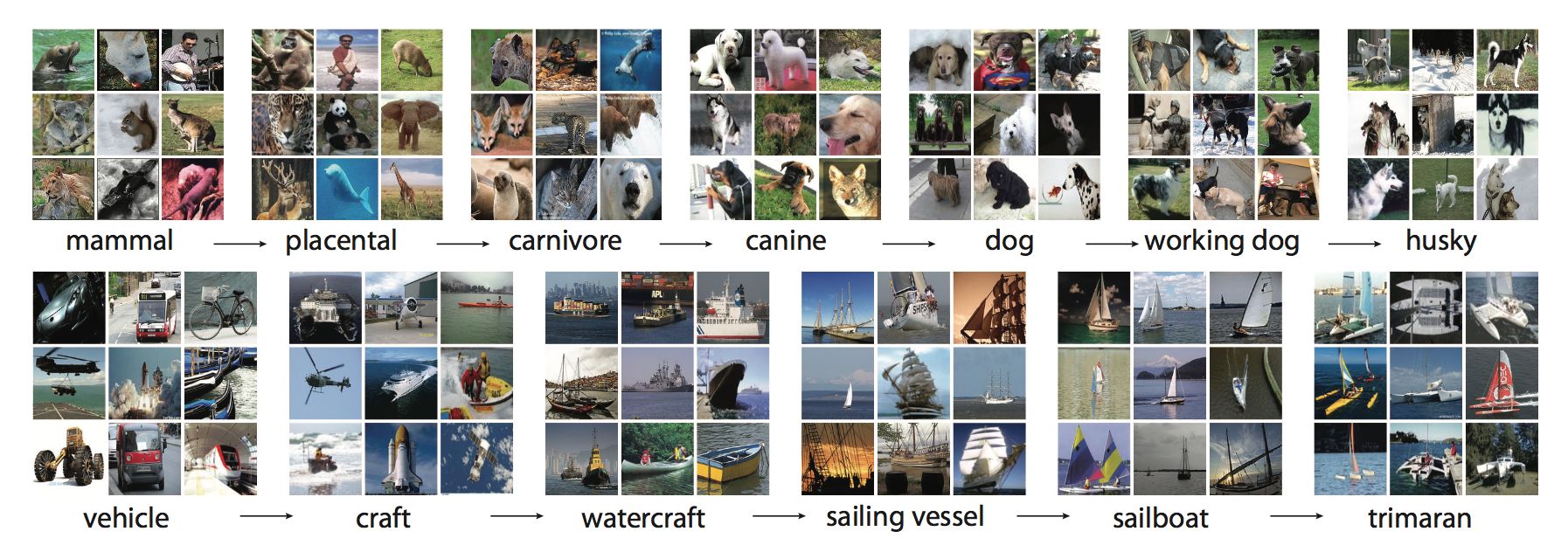

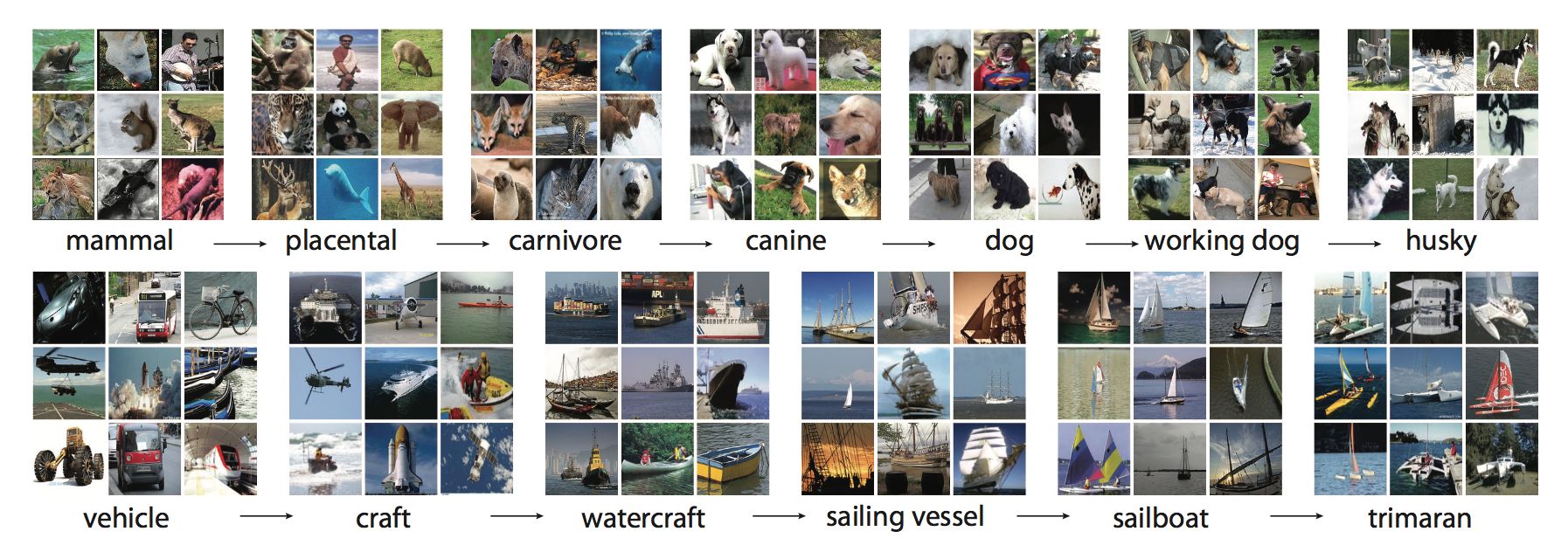

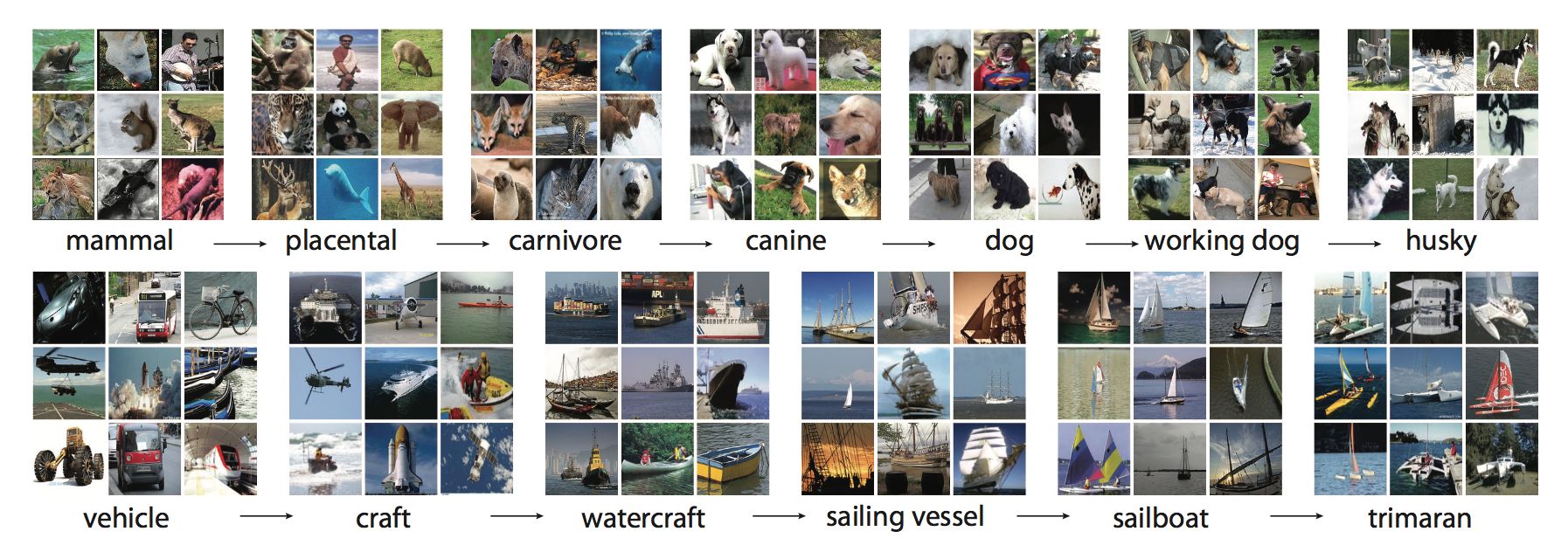

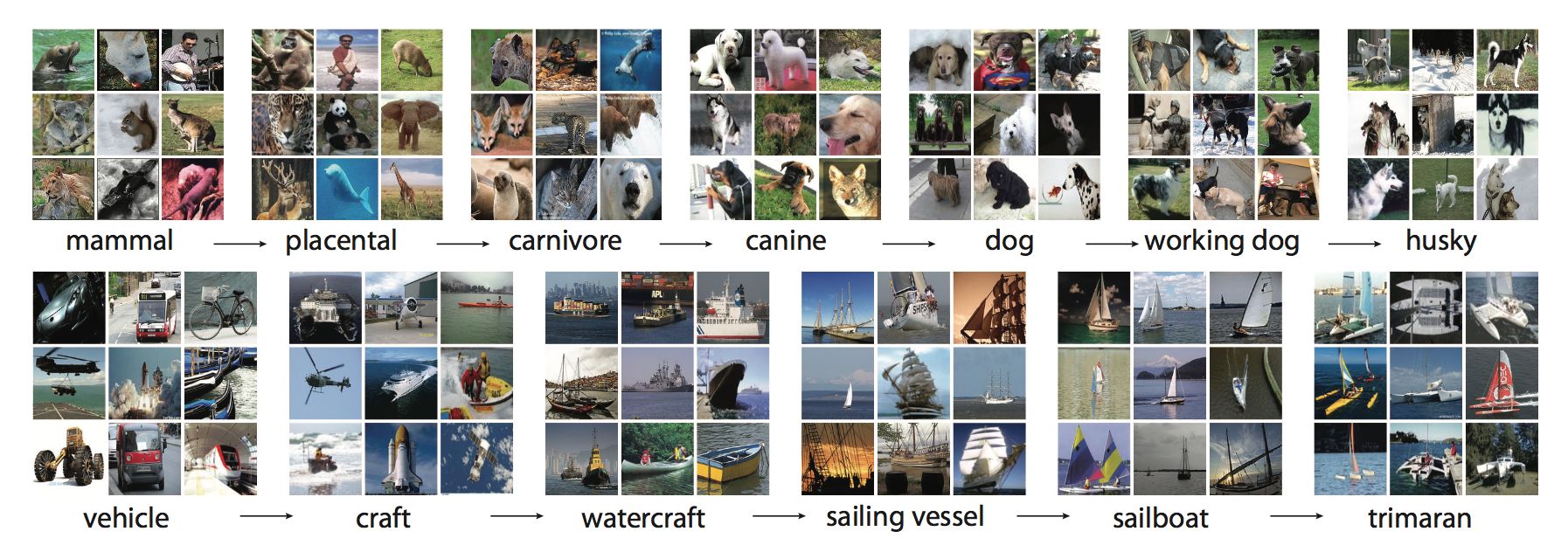

Deng2009CVPR | |||

- A large-scale annotated images organized by the semantic hierarchy of WordNet

- 12 subtrees with 5247 synsets and 3.2 million images in total

- Properties: scale, hierarchy, accuracy, diversity

- Much larger in scale and diversity and much more accurate than the current image datasets

- Data collection with Amazon Mechanical Turk

- Example applications shown: object recognition, image classification and automatic object clustering

| Datasets & Benchmarks Computer Vision Datasets | |||

|

CVPR 2009

Deng2009CVPR | |||

- A large-scale annotated images organized by the semantic hierarchy of WordNet

- 12 subtrees with 5247 synsets and 3.2 million images in total

- Properties: scale, hierarchy, accuracy, diversity

- Much larger in scale and diversity and much more accurate than the current image datasets

- Data collection with Amazon Mechanical Turk

- Example applications shown: object recognition, image classification and automatic object clustering

| Object Detection Datasets | |||

|

CVPR 2009

Deng2009CVPR | |||

- A large-scale annotated images organized by the semantic hierarchy of WordNet

- 12 subtrees with 5247 synsets and 3.2 million images in total

- Properties: scale, hierarchy, accuracy, diversity

- Much larger in scale and diversity and much more accurate than the current image datasets

- Data collection with Amazon Mechanical Turk

- Example applications shown: object recognition, image classification and automatic object clustering

| Object Detection Metrics | |||

|

CVPR 2009

Deng2009CVPR | |||

- A large-scale annotated images organized by the semantic hierarchy of WordNet

- 12 subtrees with 5247 synsets and 3.2 million images in total

- Properties: scale, hierarchy, accuracy, diversity

- Much larger in scale and diversity and much more accurate than the current image datasets

- Data collection with Amazon Mechanical Turk

- Example applications shown: object recognition, image classification and automatic object clustering

| Mapping, Localization & Ego-Motion Estimation Localization | |||

|

CVPR 2009

Deng2009CVPR | |||

- A large-scale annotated images organized by the semantic hierarchy of WordNet

- 12 subtrees with 5247 synsets and 3.2 million images in total

- Properties: scale, hierarchy, accuracy, diversity

- Much larger in scale and diversity and much more accurate than the current image datasets

- Data collection with Amazon Mechanical Turk

- Example applications shown: object recognition, image classification and automatic object clustering

| History of Autonomous Driving | |||

|

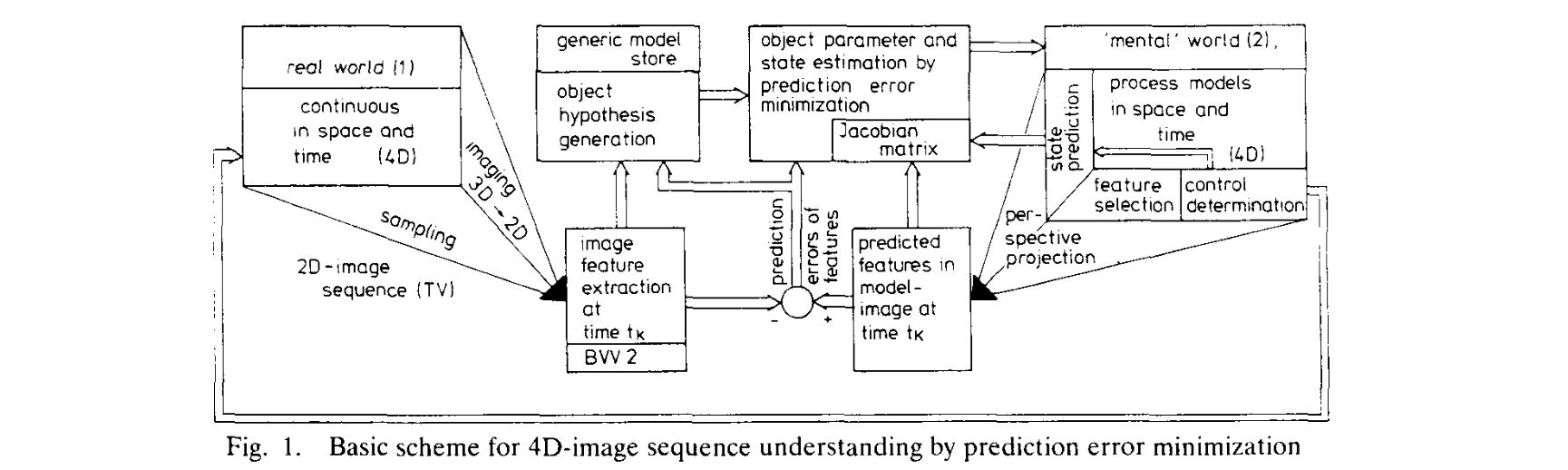

IV 1994

Dickmanns1994IV | |||

- Equipment of a passenger car Mercedes 500 SEL with sense of vision in the framework of the EUREKA-project 'Prometheus III'

- Road and object recognition performed in a look-ahead and look-back region newline allows internal servo-maintained representation of the situation around the vehicle

- Obstacle detection and tracking in forward and backward direction in a viewing range up to 100m

- Depending on computing power tracking of up to 4 or 5 objects in each direction possible

- Overall system comprises about 60 transputers T-222 (for image processing and communication) and T-800(for number crunching and knowledge processing)

- System has not been tested to its performance limit

| History of Autonomous Driving | |||

|

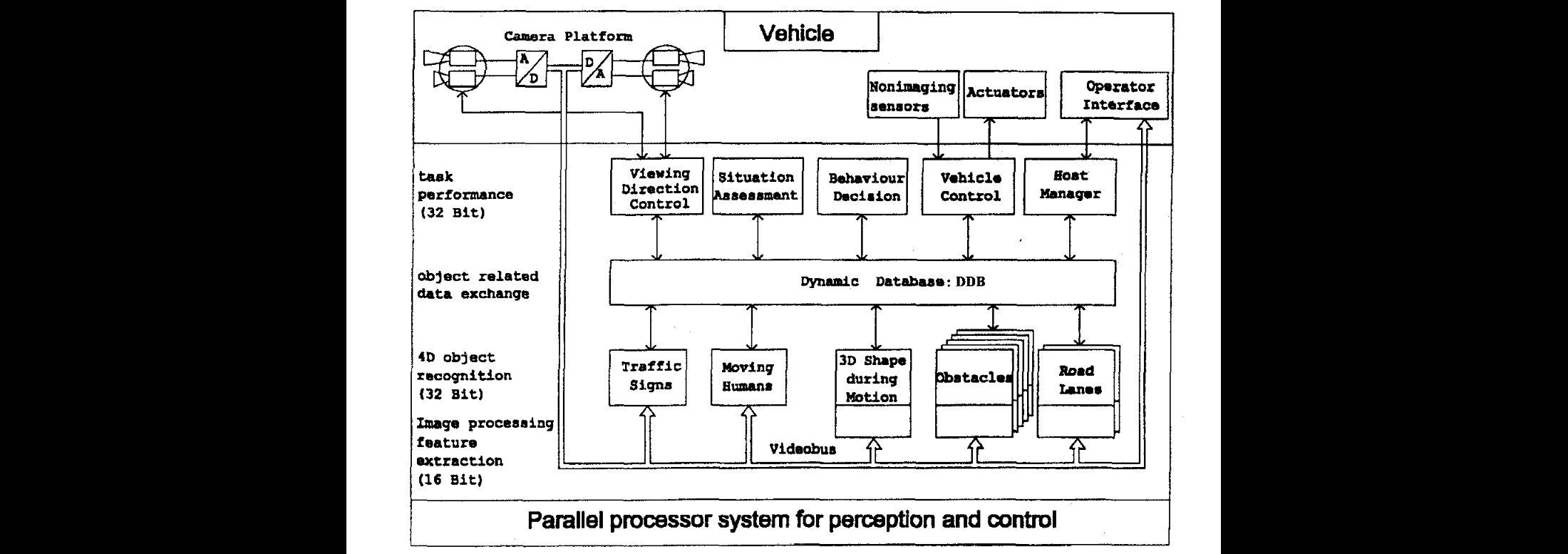

SMC 1990

Dickmanns1990SMC | |||

- Extension of the Kalman filter approach to image sequence processing

- Allows confine image processing to the last frame of the sequence

- Spatial interpretations are obtained in just one step, including spatial velocity components

- Results on road vehicle guidance at high speeds including obstacle detection and monocular relative spatial state estimation are presented

- Corresponding data processing architecture is discussed

- System has been implemented on a MIMD parallel processing system

- Demonstration of speeds up to 100 km/h

| Object Detection Datasets | |||

|

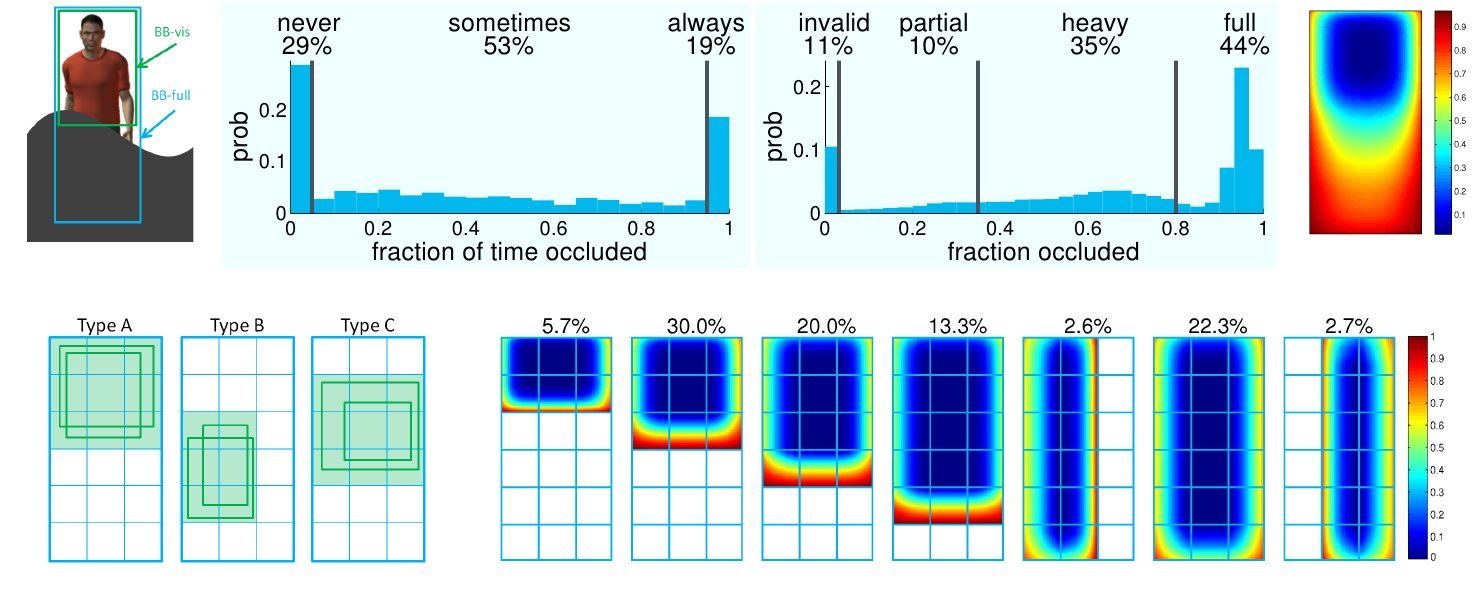

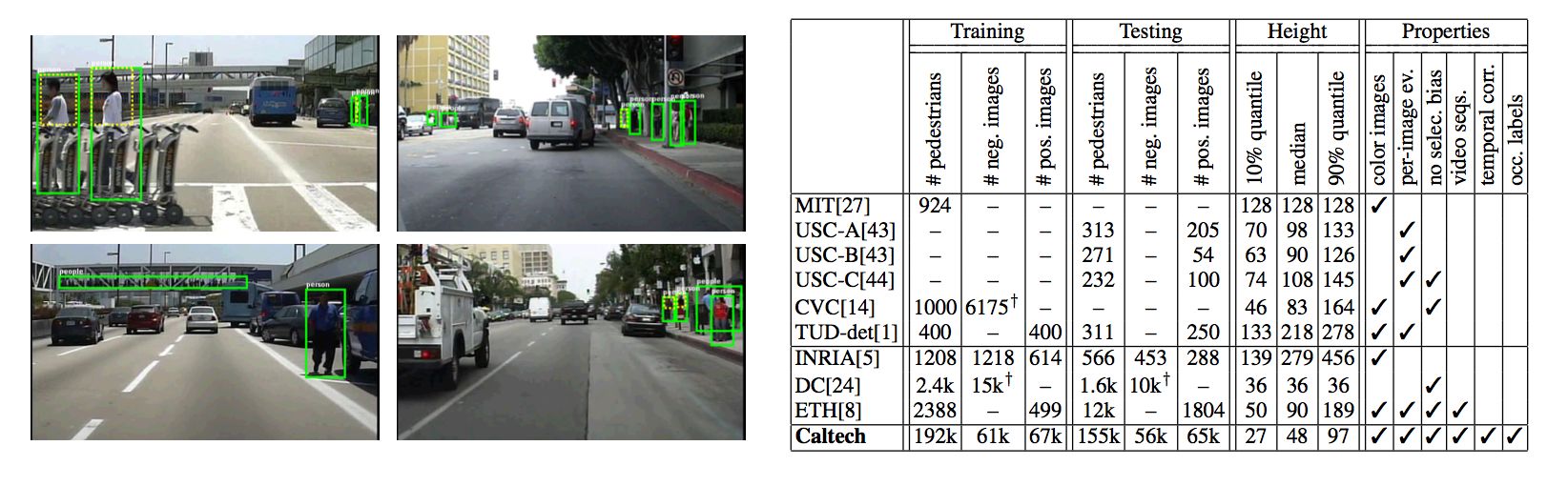

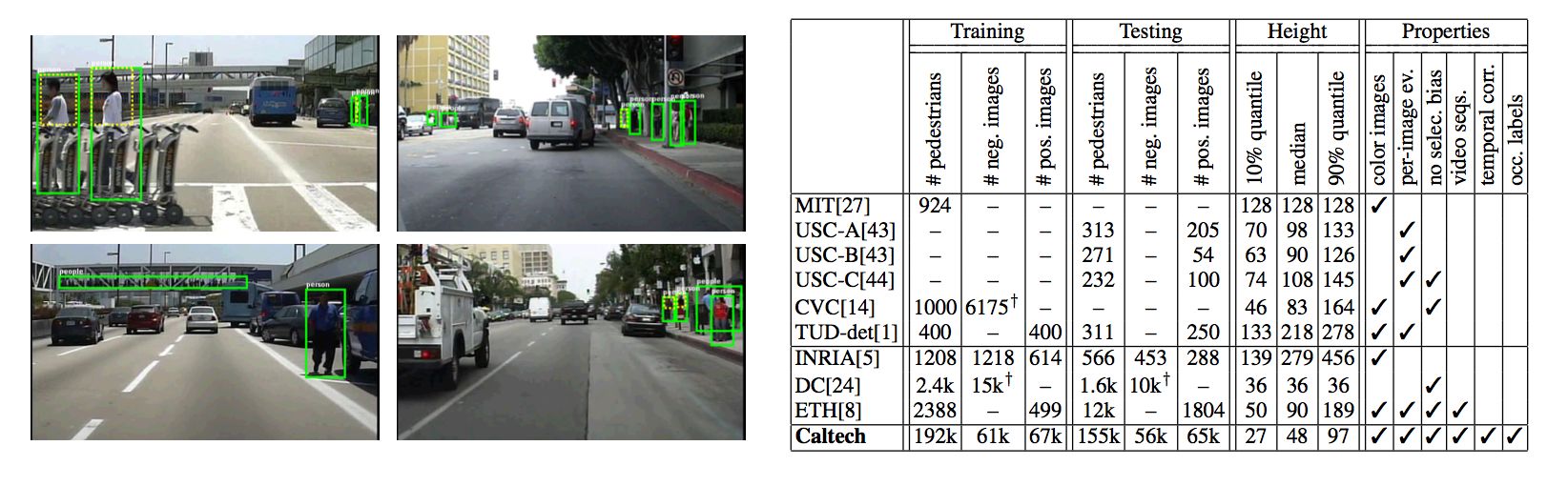

PAMI 2012

Dollar2012PAMI | |||

- Evaluation of pedestrian detection methods in a unified framework

- Monocular pedestrian detection data set with statistics of the size, position, and occlusion patterns of pedestrians in urban scenes (Caltech Pedestrian Data Set)

- Per-frame evaluation methodology considering performance in relation to scale and occlusion, also measuring localization accuracy and analyzing runtime

- Evaluating the performance of sixteen detectors across six data sets.

- Detection is disappointing at low resolutions and for partially occluded pedestrians.

| Object Detection Methods | |||

|

PAMI 2011

Dollar2011PAMI | |||

- Pedestrian detection methods are hard to compare because of multiple datasets and varying evaluation protocols

- Extensive evaluation of the state of the art in a unified framework

- Large, well-annotated and realistic monocular pedestrian detection dataset

- Refined per-frame evaluation methodology

- Evaluation of sixteen pre-trained state-of-the-art detectors across six datasets

- Performance of state-of-the-art is disappointing at low resolutions (far distant pedestrians) and in case of partial occlusions

| Datasets & Benchmarks | |||

|

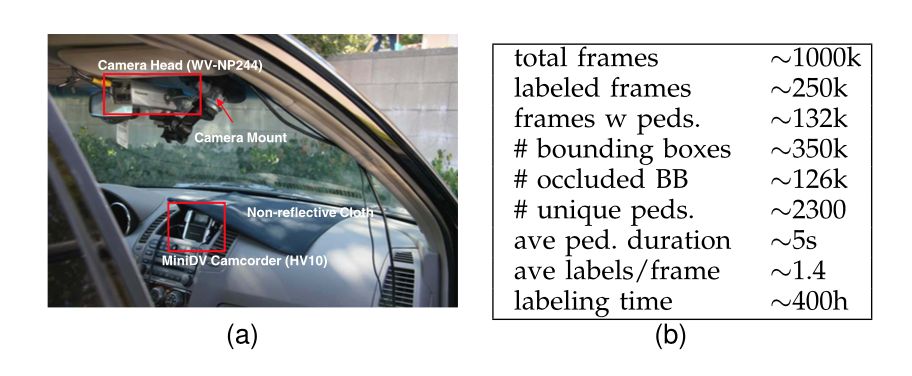

CVPR 2009

Dollar2009CVPR | |||

- Caltech Pedestrian Dataset:

- richly annotated video, recorded from a moving vehicle

- pedestrians varying widely in appearance, pose and scale

- challenging low resolution

- temporal correspondence between BBs

- detailed occlusion labels

- frequently occluded people (only 30 of pedestrians remain unoccluded for the entire time they are present)

- Improved evaluation metrics

- Benchmarking existing pedestrian detection systems

- Analyzing common failure cases, detection at smaller scales and of partially occluded pedestrians

| Datasets & Benchmarks Autonomous Driving Datasets | |||

|

CVPR 2009

Dollar2009CVPR | |||

- Caltech Pedestrian Dataset:

- richly annotated video, recorded from a moving vehicle

- pedestrians varying widely in appearance, pose and scale

- challenging low resolution

- temporal correspondence between BBs

- detailed occlusion labels

- frequently occluded people (only 30 of pedestrians remain unoccluded for the entire time they are present)

- Improved evaluation metrics

- Benchmarking existing pedestrian detection systems

- Analyzing common failure cases, detection at smaller scales and of partially occluded pedestrians

| Datasets & Benchmarks | |||

|

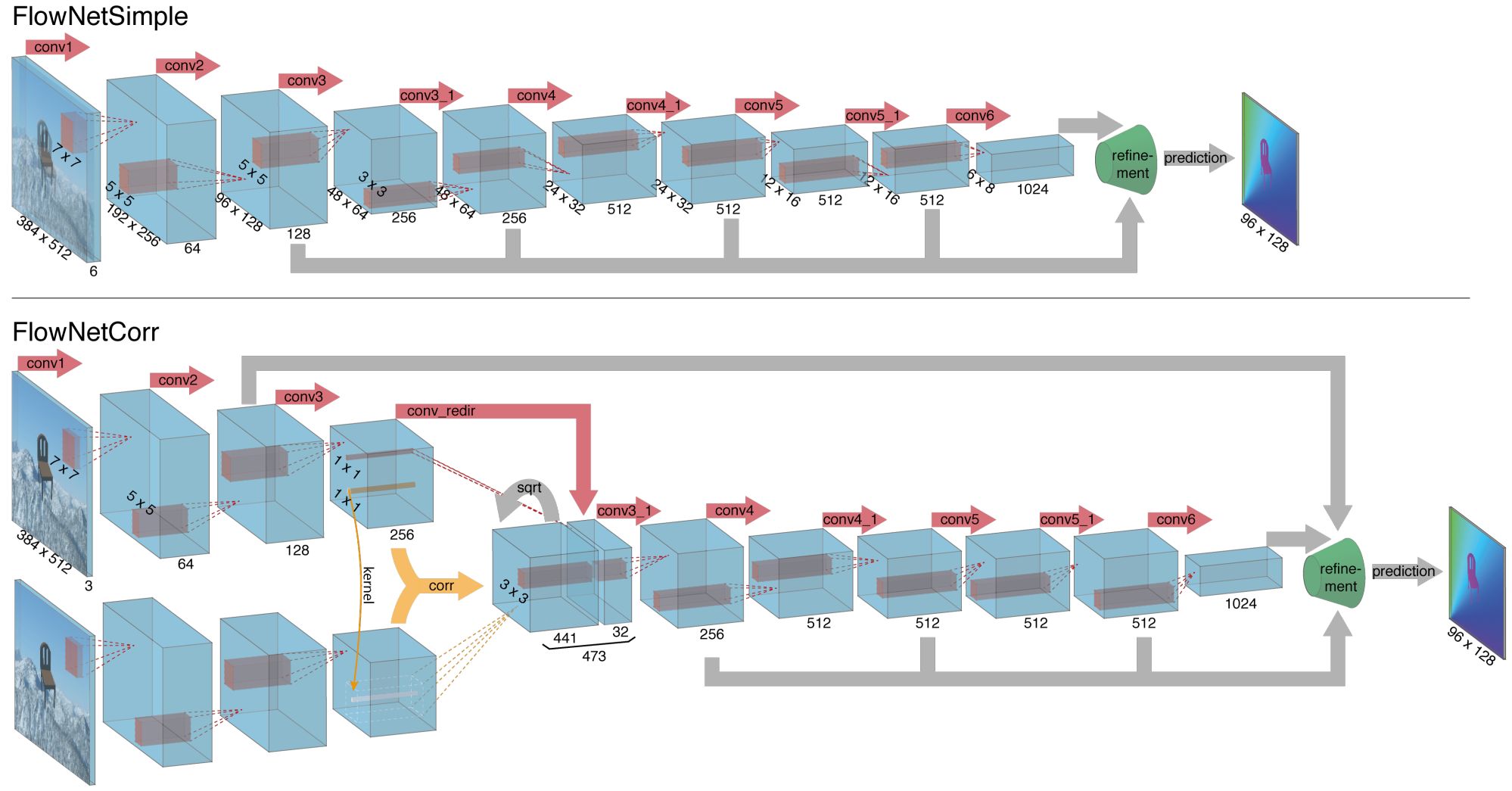

ICCV 2015

Dosovitskiy2015ICCV | |||