[st]ZoomNet: Part-aware adaptive zooming neural network for 3D object detection [ZoomNet]

https://github.com/detectRecog/ZoomNet

Submitted on 9 Nov. 2019 17:50 by

Zhenbo Xu (University of Science and Technology of China)

| Running time: | 0.3 s |
| Environment: | 1 core @ 2.5 GHz (C/C++) |

| Method Description: | 3D object detection is an essential task in autonomous driv-

ing. Though recently great progress has been made, stereo

imagery-based 3D detection methods still lag far behind

lidar- based methods. Current stereo imagery-based

methods lack fine-grained instance analysis due to the lack

of annotations in KITTI and ignore the problem that distant

cars which oc- cupy fewer pixels in width face huge depth

estimation errors. To fill the vacancy of fine-grained

annotations, we present KITTI Fine-Grained car (KFG)

dataset by extending KITTI with an instance-wise 3D CAD

model and pixel-wise fine- grained annotations. Further, to

reduce the error of depth es- timation, we propose an

effective strategy named adaptive zooming by which distant

cars are analyzed on larger scales for more accurate depth

estimation. Moreover, unlike most methods which exploit

key-points constraints to infer 3D po- sition from 2D

bounding box, we introduce part locations as a generalized

version of key-points to better localize cars and to enhance

the resistance to occlusion. In addition, to better estimate

the 3D detection quality, the 3D fitting score is pro- posed

as a supplementary to the 2D classification score. The

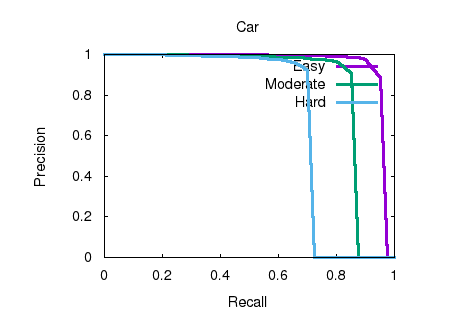

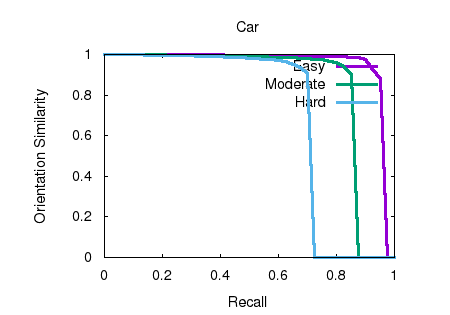

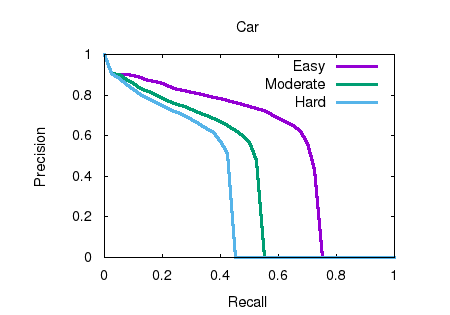

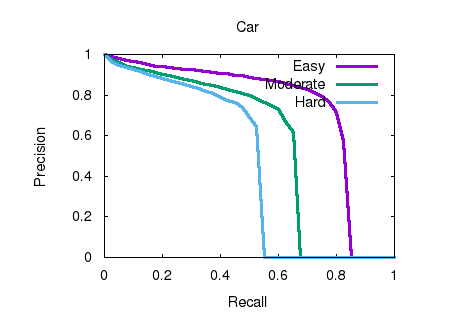

resulting architecture, named ZoomNet, surpasses all

existing state-of-the-art by large margins on the popular

KITTI 3D detection benchmark. More importantly,

ZoomNet first reaches a comparable performance to

current lidar-based methods at a relatively lower threshold.

Though at a lower threshold, closing this gap indicates

that stereo camera, like we human’s eyes, is a suitable

alternative for expensive devices like depth camera or lidar.

Both the KFG dataset and our code will be publicly available. |
| Parameters: | It is worth noting that ZoomNet surpasses the state of the art on bird's-eye view by large margins on the test set.

ZoomNet Car 72.94 % 54.91 % 44.14 %
Pseudo-LIDAR 67.30 % 45.00 % 38.40 %
Stereo-RCNN 61.92 % 41.31 % 33.42 % |
| Latex Bibtex: | @inproceedings{xu2020zoomnet,

title={ZoomNet: Part-Aware Adaptive Zooming Neural Network for 3D Object Detection},
author={Z. Xu and W. Zhang and X. Ye and X. Tan and W. Yang and S. Wen and E. Ding and A. Meng and L. Huang},
booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},
year={2020},
} |
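
The method description argues that distant cars suffer from large depth estimation errors and that analyzing them at larger scales helps. The sketch below is not the ZoomNet implementation. It only illustrates the standard stereo relation Z = f·B/d and its first-order error under several assumptions: the focal length and baseline are approximate KITTI-like values, the fixed crop width in `zoom_factor` is a hypothetical rule, and the disparity matching error in pixels is assumed to stay the same after zooming.

```python
import math

# Approximate KITTI-like stereo rig values, used here only for illustration.
F_PX = 721.5       # focal length in pixels (assumed)
BASELINE_M = 0.54  # stereo baseline in metres (assumed)


def depth_from_disparity(disparity_px, f_px=F_PX, baseline_m=BASELINE_M):
    """Pinhole stereo relation: Z = f * B / d."""
    return f_px * baseline_m / disparity_px


def depth_error(depth_m, disparity_err_px, zoom=1.0, f_px=F_PX, baseline_m=BASELINE_M):
    """First-order depth error |dZ| ~= Z^2 / (k * f * B) * |dd|.

    Zooming a crop by k scales its disparities (and effective focal length)
    by k. This sketch assumes the matching error in pixels is unchanged.
    """
    return depth_m ** 2 * disparity_err_px / (zoom * f_px * baseline_m)


def zoom_factor(box_width_px, target_width_px=256.0):
    """Hypothetical rule: resize every instance crop to a fixed width."""
    return target_width_px / box_width_px


if __name__ == "__main__":
    car_width_m = 1.8  # assumed physical car width
    for depth_m in (10.0, 30.0, 60.0):
        box_width_px = F_PX * car_width_m / depth_m  # projected width in pixels
        k = zoom_factor(box_width_px)
        plain = depth_error(depth_m, 0.5)
        zoomed = depth_error(depth_m, 0.5, zoom=k)
        print(f"Z={depth_m:5.1f} m  width={box_width_px:6.1f} px  "
              f"zoom={k:4.2f}  err={plain:5.2f} m -> {zoomed:5.2f} m")
```

Under these assumptions the error grows with the square of the depth, and the zoom factor grows linearly with depth for a fixed target width, so distant instances gain the most from zooming.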
