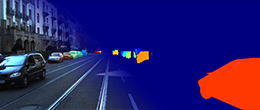

This is the KITTI pixel-level semantic segmentation benchmark, which consists of 200 training images and 200 test images.

This is the KITTI instance-level semantic segmentation benchmark, which consists of 200 training images and 200 test images.

This is our Segmenting and Tracking Every Pixel (STEP) benchmark; it consists of 21 training videos and 29 test videos. The benchmark requires assigning segmentation and tracking labels to all pixels.
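To make "segmentation and tracking labels for all pixels" concrete, here is a minimal sketch of a dense per-frame label map in which every pixel carries both a semantic class id and a track id. This page does not describe how STEP actually stores its labels, so the packing scheme (`class_id * OFFSET + track_id`, in the style of Cityscapes ids), the `OFFSET` value, the helper names, and the class ids in the usage example are all hypothetical.

```python
from typing import List, Tuple

# Hypothetical packing scheme (not the official STEP format):
# packed_label = class_id * OFFSET + track_id
OFFSET = 1000  # assumed upper bound on track ids per class


def pack(class_id: int, track_id: int) -> int:
    """Combine a semantic class id and a track id into one integer label."""
    if class_id < 0 or not 0 <= track_id < OFFSET:
        raise ValueError("class_id must be >= 0 and 0 <= track_id < OFFSET")
    return class_id * OFFSET + track_id


def unpack(label: int) -> Tuple[int, int]:
    """Recover (class_id, track_id) from a packed label."""
    return divmod(label, OFFSET)


def label_frame(classes: List[List[int]], tracks: List[List[int]]) -> List[List[int]]:
    """Build a dense label map in which every pixel gets a class and a track id.

    In this sketch, pixels of classes that are not tracked (e.g. road)
    simply carry track id 0.
    """
    if len(classes) != len(tracks) or any(
        len(crow) != len(trow) for crow, trow in zip(classes, tracks)
    ):
        raise ValueError("class map and track map must have the same shape")
    return [
        [pack(c, t) for c, t in zip(crow, trow)]
        for crow, trow in zip(classes, tracks)
    ]


# Usage: a 2x3 frame with a hypothetical untracked class 0 and a car
# (hypothetical class 13) carrying track id 5.
frame = label_frame([[0, 0, 13], [0, 13, 13]], [[0, 0, 5], [0, 5, 5]])
print(frame)
print(unpack(frame[1][2]))
```

Packing both ids into one integer keeps the label map a single 2D array, so an object that keeps the same track id across consecutive frames also keeps the same packed value.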

Semantic and Instance Segmentation Evaluation

Additional Semantic Datasets

Here we collect a number of resources in which people have annotated KITTI images with semantic labels. Please let us know if you have annotated part of the data or are aware of further labels that we should list on this page.

- Deepen.ai have annotated 100 frames of KITTI sequence 2011_09_26_drive_0093 with point-level semantic segmentation.

- Mennatullah Siam has created the KITTI MoSeg dataset with ground truth annotations for moving object detection.

- Hazem Rashed extended the KittiMoSeg dataset by a factor of 10, providing ground-truth annotations for moving object detection. The dataset consists of 12919 images and is available on the project's website.

- Alexander Hermans and Georgios Floros have labeled 203 images from the KITTI visual odometry dataset.

- Zeeshan Zia has labeled 1560 cars from the KITTI object detection set at the level of individual landmarks (pixels on the silhouettes), which can be used as precise polygonal outlines for segmentation and for fine-grained categorization.

- Jose Alvarez has annotated 323 images from the KITTI road detection benchmark with semantic layout ground truth.

- Philippe Xu has annotated 107 frames of the KITTI raw dataset.

- Lubor Ladicky has annotated 60 frames of the KITTI stereo training set.

- Fatma Güney has extended Lubor's semantic and instance annotations to all KITTI stereo training images for the category 'vehicle'.

- Sunando Sengupta has annotated 60 images from the visual odometry dataset.

- Ivan Kreso has supervised the annotation of 445 images from the visual odometry dataset, starting from images annotated by German Ros.

- German Ros has annotated 146 images from the visual odometry dataset.

- Abhijit Kundu has annotated parts of the visual odometry dataset.

- Hu He has annotated 51 images from the raw dataset.

- Richard Zhang has annotated 252 images and Velodyne scans on the tracking training dataset for 10 object categories.