https://github.com/xiaoliangabc/cyber_meld/tree/plard

Submitted on 20 Jun. 2020 05:34 by Xiaoliang Wang (SJTU)

Method

Evaluation in Bird's Eye View

Behavior Evaluation

Road/Lane Detection

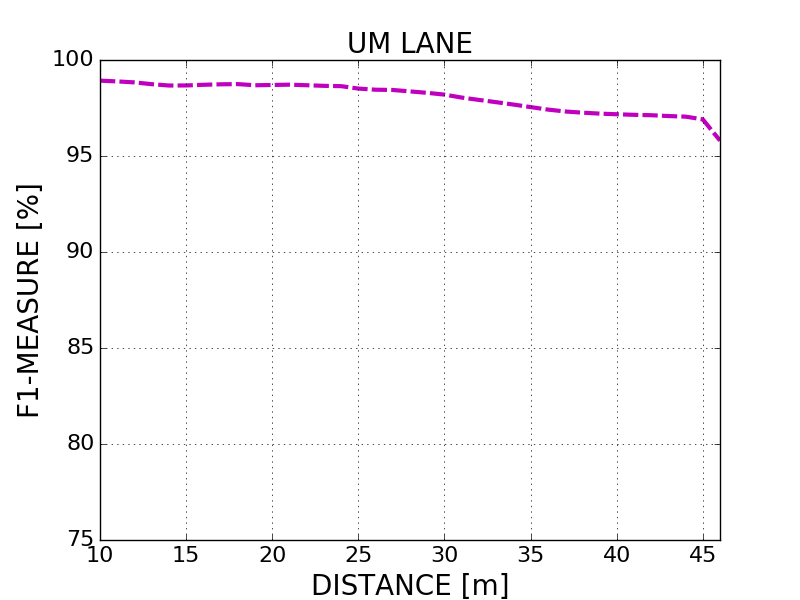

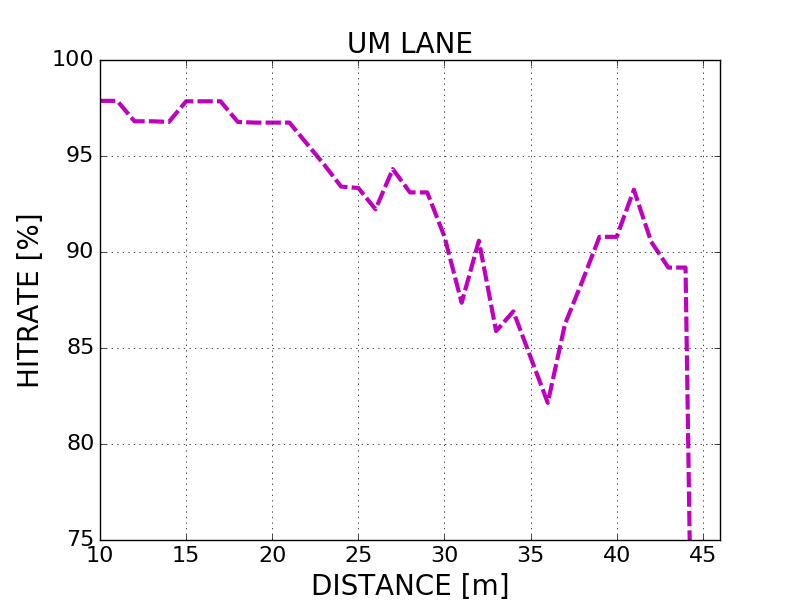

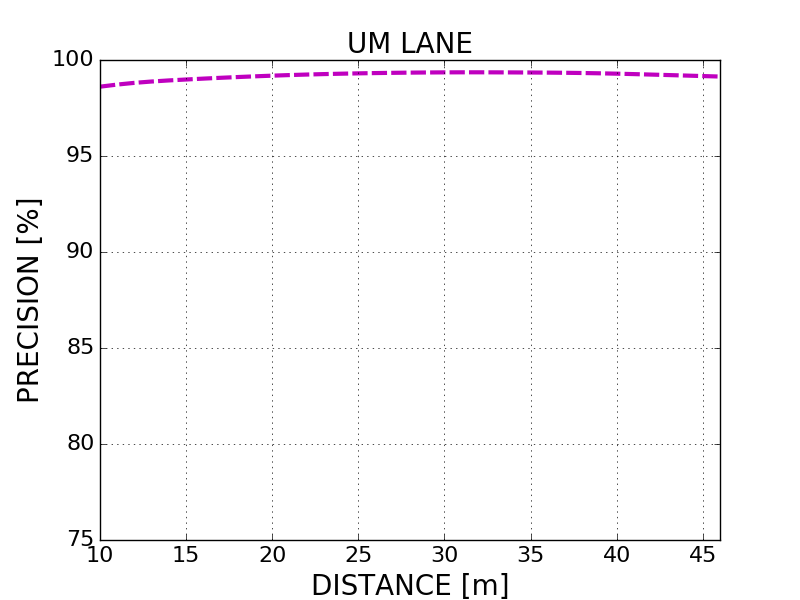

The plots above show precision/recall curves for the bird's eye view evaluation.
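Precision/recall curves of this kind are commonly obtained by thresholding a per-pixel road confidence map at several levels and comparing each binary result with the ground-truth road mask. The following is a minimal pure-Python sketch of that computation, assuming a flattened bird's eye view confidence map and a boolean ground-truth mask in the same pixel order. It is not the official KITTI evaluation code; the names `pr_curve` and `max_f_measure`, and the convention that precision is 1.0 when no pixel is predicted as road, are assumptions made for illustration.

```python
from typing import List, Sequence, Tuple


def pr_curve(scores: Sequence[float],
             labels: Sequence[bool],
             thresholds: Sequence[float]) -> List[Tuple[float, float, float]]:
    """Return (threshold, precision, recall) for each threshold.

    scores: per-pixel road confidence in bird's eye view, flattened.
    labels: per-pixel ground truth (True = road), same order as scores.
    A pixel counts as predicted road when its score >= threshold.
    """
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    positives = sum(1 for y in labels if y)
    curve = []
    for t in thresholds:
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y)
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and not y)
        # Assumed convention: precision is 1.0 when nothing is predicted as road.
        precision = tp / (tp + fp) if tp + fp else 1.0
        recall = tp / positives if positives else 0.0
        curve.append((t, precision, recall))
    return curve


def max_f_measure(curve: Sequence[Tuple[float, float, float]]) -> float:
    """Largest F-measure (harmonic mean of precision and recall) on the curve."""
    best = 0.0
    for _, p, r in curve:
        if p + r > 0:
            best = max(best, 2 * p * r / (p + r))
    return best
```

For example, with scores `[0.9, 0.8, 0.6, 0.4, 0.2]`, labels `[True, True, False, True, False]` and thresholds `[0.5, 0.3]`, the (precision, recall) pairs are (2/3, 2/3) and (0.75, 1.0), so the maximum F-measure over these two thresholds is 6/7 ≈ 0.857. A real evaluation would sweep many more thresholds over full-resolution masks, where a vectorized array library is the more practical choice.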

Distance-dependent Behavior Evaluation

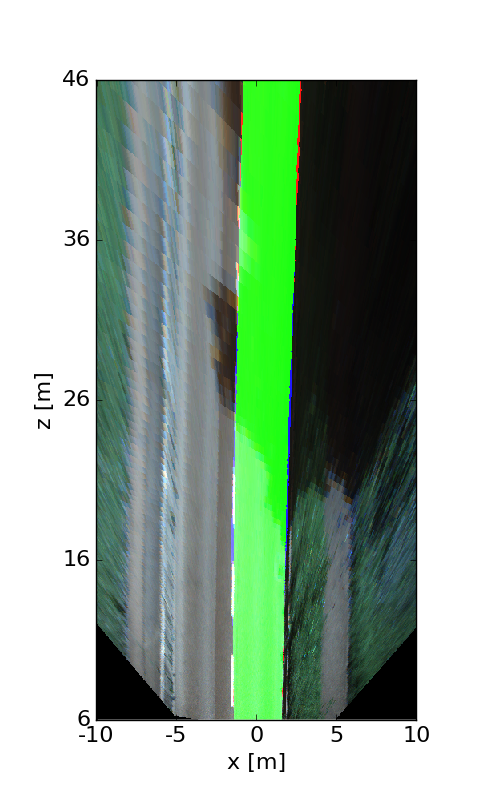

Visualization of Results

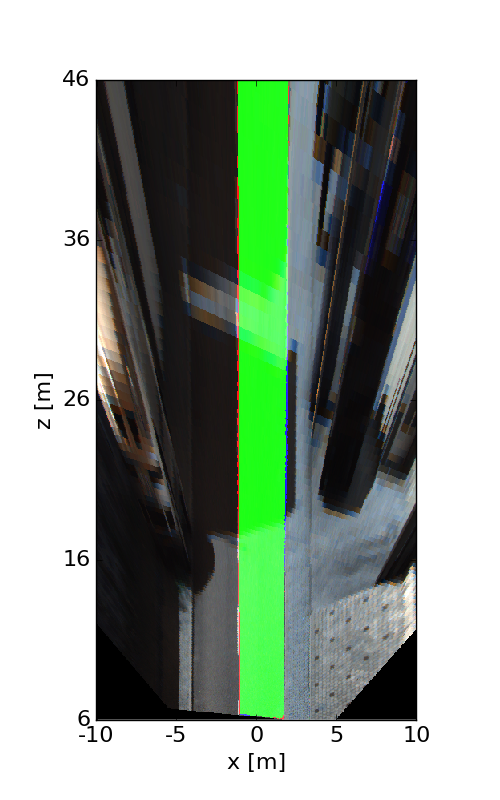

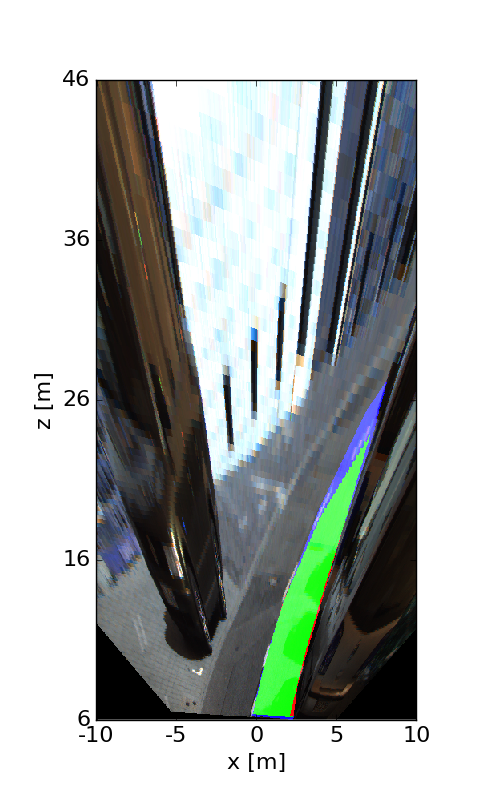

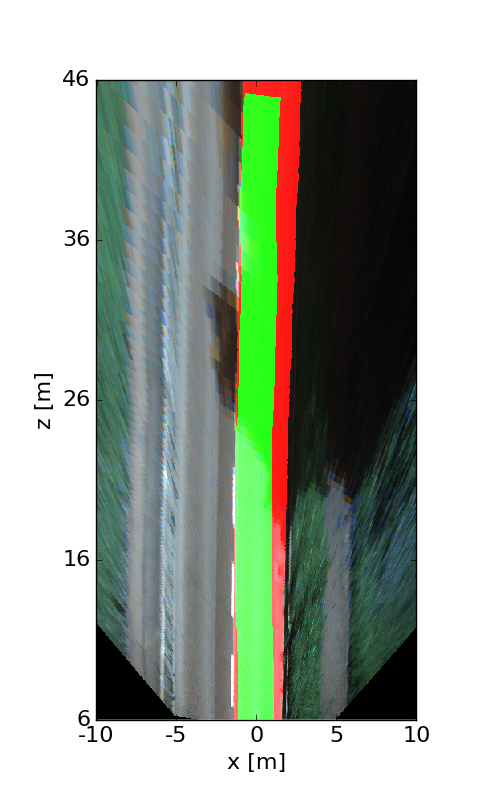

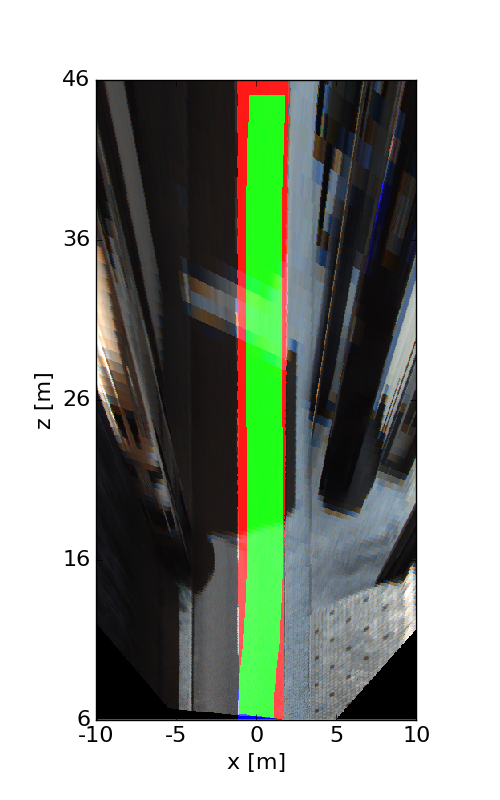

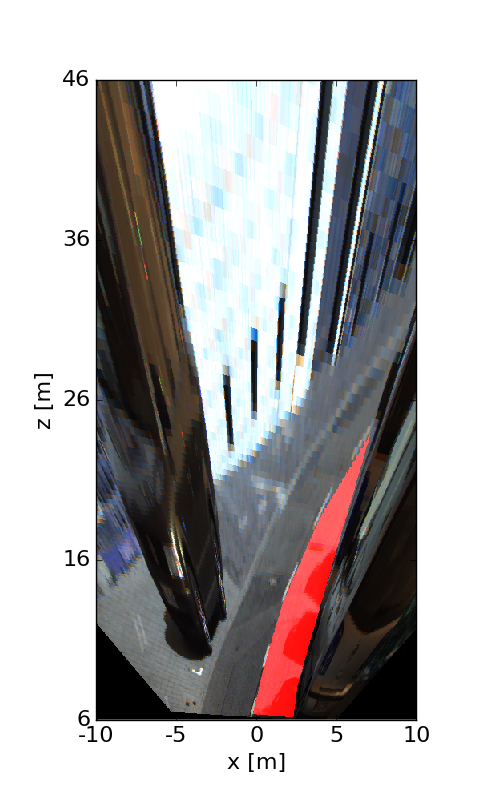

The images above illustrate the method's performance qualitatively on a few test images. We first show results in the perspective image, followed by the evaluation in bird's eye view. Here, red denotes false negatives, blue denotes false positives, and green denotes true positives.
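The color coding described for these visualizations amounts to a per-pixel comparison of the predicted road mask with the ground truth. Below is a minimal sketch, assuming boolean masks aligned pixel-for-pixel with an RGB image stored as nested lists. The function names `outcome_color` and `overlay`, the alpha blending, and leaving true negatives uncolored are hypothetical choices for illustration, not taken from the benchmark's own tooling.

```python
from typing import List, Optional, Sequence, Tuple

RGB = Tuple[int, int, int]

# Color convention described for the result visualizations.
TP_COLOR: RGB = (0, 255, 0)  # green: predicted road, and road in ground truth
FP_COLOR: RGB = (0, 0, 255)  # blue: predicted road, but not road in ground truth
FN_COLOR: RGB = (255, 0, 0)  # red: road in ground truth, but not predicted


def outcome_color(pred: bool, gt: bool) -> Optional[RGB]:
    """Map one pixel's (prediction, ground truth) pair to its overlay color."""
    if pred and gt:
        return TP_COLOR
    if pred:
        return FP_COLOR
    if gt:
        return FN_COLOR
    return None  # true negative: left uncolored (assumption of this sketch)


def overlay(image: Sequence[Sequence[RGB]],
            pred: Sequence[Sequence[bool]],
            gt: Sequence[Sequence[bool]],
            alpha: float = 0.5) -> List[List[RGB]]:
    """Blend outcome colors into the image; alpha=1.0 paints solid colors."""
    result: List[List[RGB]] = []
    for img_row, pred_row, gt_row in zip(image, pred, gt):
        row: List[RGB] = []
        for pixel, p, g in zip(img_row, pred_row, gt_row):
            color = outcome_color(p, g)
            if color is None:
                row.append(tuple(pixel))
            else:
                # Linear blend of the original pixel and the outcome color.
                row.append(tuple(round((1 - alpha) * c + alpha * o)
                                 for c, o in zip(pixel, color)))
        result.append(row)
    return result
```

With `alpha=1.0`, a row whose four pixels are a true positive, a false positive, a false negative and a true negative becomes green, blue, red and the unchanged original color, respectively. The same function applies to either the perspective image or the bird's eye view, as long as the masks are given in that image's coordinates.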