https://github.com/rasd3/3D-Dual-Fusion

Submitted on 19 Aug. 2022 06:38 by Yecheol Kim (Hanyang University)


Method

Detailed Results

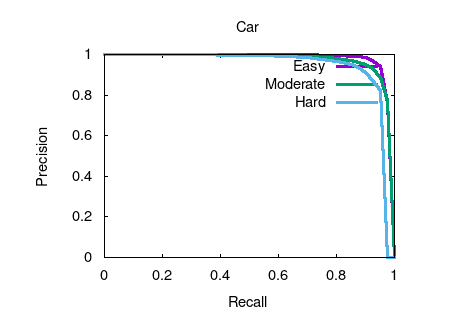

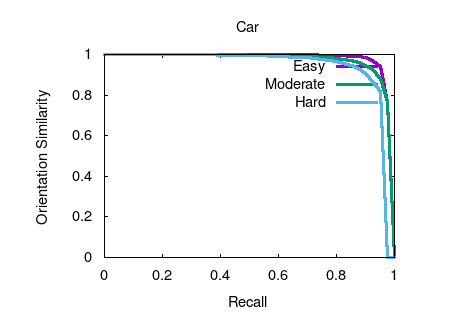

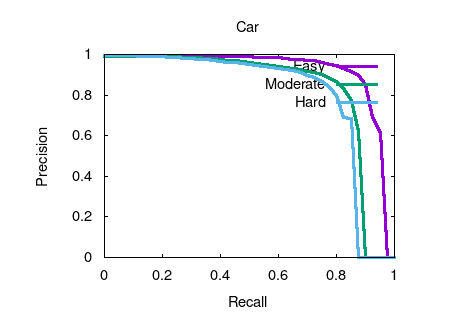

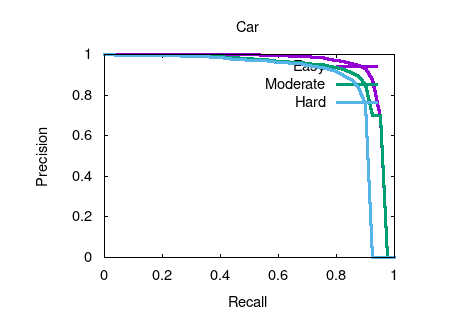

Object detection and orientation estimation results. Results for object detection are given in terms of average precision (AP) and results for joint object detection and orientation estimation are provided in terms of average orientation similarity (AOS).
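For readers unfamiliar with the two metrics named above, the following is a minimal sketch of how an interpolated average over recall thresholds and an orientation similarity term can be computed. It is not the benchmark's official evaluation code: the function names `orientation_similarity` and `interpolated_average` are hypothetical, the inputs are made-up toy values, and the number of recall positions (`num_points`, set to 11 here) is an assumption that may differ from the protocol used for these results.

```python
import math


def orientation_similarity(delta_thetas, matched):
    """Mean of (1 + cos(delta)) / 2 over all detections.

    delta_thetas: angle differences (radians) between predicted and
                  ground-truth orientation, one per detection.
    matched:      booleans; an unmatched detection contributes 0.
    """
    if not delta_thetas:
        return 0.0
    total = sum(
        (1.0 + math.cos(d)) / 2.0 if m else 0.0
        for d, m in zip(delta_thetas, matched)
    )
    return total / len(delta_thetas)


def interpolated_average(recalls, values, num_points=11):
    """Average, over evenly spaced recall thresholds r in [0, 1], of the
    maximum value observed at any recall >= r.

    Passing precision values gives an AP-style score; passing
    orientation similarity values gives an AOS-style score.
    num_points is an assumption, not taken from the source page.
    """
    thresholds = [i / (num_points - 1) for i in range(num_points)]
    total = 0.0
    for r in thresholds:
        candidates = [v for rec, v in zip(recalls, values) if rec >= r]
        total += max(candidates) if candidates else 0.0
    return total / num_points


if __name__ == "__main__":
    # Toy example: one perfectly oriented detection, one flipped by 180 degrees.
    print(orientation_similarity([0.0, math.pi], [True, True]))
    # Toy precision/recall pairs, purely illustrative.
    print(interpolated_average([0.0, 0.5, 1.0], [1.0, 0.8, 0.6]))
```

Because the similarity term is at most 1 per detection and unmatched detections count as 0, an AOS-style score computed this way cannot exceed the corresponding AP-style score when both use the same recall thresholds.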
