Submitted on 24 Jul. 2017 13:01 by David Stutz (Max Planck Institute for Intelligent Systems)


Method

Detailed Results

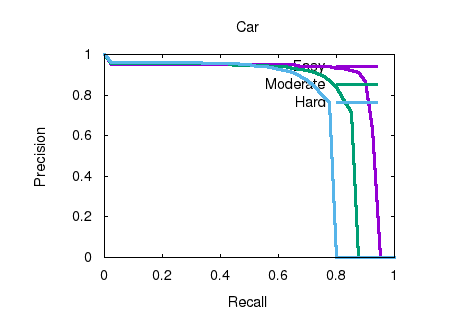

Object detection and orientation estimation results. Object detection is evaluated in terms of average precision (AP); joint object detection and orientation estimation is evaluated in terms of average orientation similarity (AOS).
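AOS follows the same recipe as AP, with one difference. The original KITTI benchmark paper uses 11-point interpolated AP. AOS replaces precision with an orientation similarity: each matched detection contributes (1 + cos Δθ) / 2 instead of 1, where Δθ is the difference between the estimated and ground-truth orientation. This sum is divided by the number of detections ranked so far.

Below is a minimal sketch of both metrics under these assumptions:

- Detections have already been matched to ground truth.
- False positives are marked with `None`.
- Difficulty filtering and "DontCare" regions are ignored.

The function names and input format are hypothetical. This is not the official KITTI devkit code.

```python
import math
from typing import List, Optional, Tuple


def interpolated_average(recalls: List[float], values: List[float], num_points: int = 11) -> float:
    """Average, over equally spaced recall levels r, of the best value at recall >= r (0 if unreachable)."""
    total = 0.0
    for j in range(num_points):
        r = j / (num_points - 1)
        # Small tolerance so that recall exactly equal to r counts despite float rounding.
        candidates = [v for rec, v in zip(recalls, values) if rec >= r - 1e-12]
        total += max(candidates) if candidates else 0.0
    return total / num_points


def ap_and_aos(detections: List[Tuple[float, Optional[float]]], num_ground_truth: int) -> Tuple[float, float]:
    """Hypothetical helper: detections are (score, delta_theta) pairs.

    delta_theta is the orientation error in radians for a detection matched to a
    ground-truth object, or None for a false positive. Assumes num_ground_truth > 0.
    """
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    recalls, precisions, similarities = [], [], []
    true_positives = 0
    similarity_sum = 0.0
    for k, (_, delta) in enumerate(ranked, start=1):
        if delta is not None:
            true_positives += 1
            # Orientation similarity: 1 for a perfect angle, 0 for a flipped (pi) angle.
            similarity_sum += (1.0 + math.cos(delta)) / 2.0
        recalls.append(true_positives / num_ground_truth)
        precisions.append(true_positives / k)
        similarities.append(similarity_sum / k)
    ap = interpolated_average(recalls, precisions)
    aos = interpolated_average(recalls, similarities)
    return ap, aos
```

Some consequences of this definition:

- AOS is bounded above by AP, since each similarity term is at most 1.
- A detector that finds every object but predicts every orientation flipped by π gets a perfect AP and an AOS of 0.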
