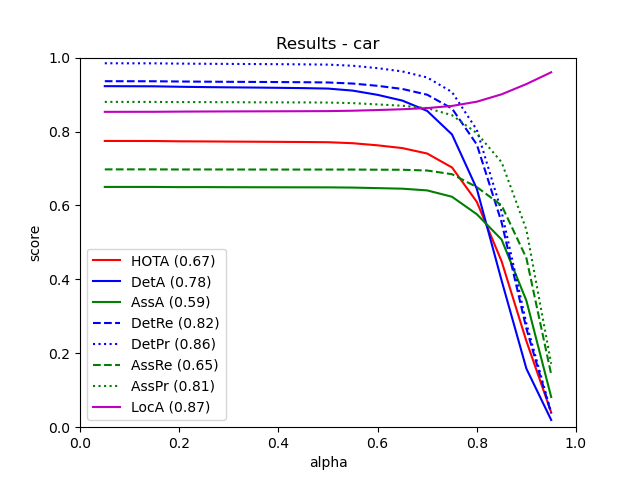

Over all 29 test sequences, our benchmark computes the HOTA tracking metrics (HOTA, DetA, AssA, DetRe, DetPr, AssRe, AssPr, LocA) [1] as well as the CLEAR MOT, MT/PT/ML, identity switch, and fragmentation metrics [2,3].

The tables below list all of these metrics for the CAR benchmark.

| Benchmark | HOTA | DetA | AssA | DetRe | DetPr | AssRe | AssPr | LocA |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| CAR | 67.32 % | 77.69 % | 58.99 % | 81.58 % | 85.81 % | 64.70 % | 80.74 % | 86.94 % |
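As a rough sanity check on the HOTA row, the sketch below relates HOTA to its two components. In [1], HOTA at a single localization threshold is the geometric mean of DetA and AssA at that threshold, and the final score averages these values over a range of thresholds. The helper name `hota_from_components` is hypothetical, and applying the formula to the already averaged DetA and AssA from the table is an approximation, not the benchmark's exact computation.

```python
import math


def hota_from_components(det_a: float, ass_a: float) -> float:
    """Geometric mean of detection and association accuracy (both in [0, 1]).

    Hypothetical helper: this is the per-threshold HOTA formula applied to
    threshold-averaged inputs, so it only approximates the reported score.
    """
    return math.sqrt(det_a * ass_a)


# DetA and AssA for CAR, taken from the table above.
approx_hota = hota_from_components(0.7769, 0.5899)
print(f"approximate HOTA: {100 * approx_hota:.2f} %")
```

The result is about 67.7 %, close to but not equal to the reported 67.32 %. The gap is expected, since averaging per-threshold geometric means is not the same as taking the geometric mean of the averages.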

| Benchmark | TP | FP | FN |
| --- | --- | --- | --- |
| CAR | 32131 | 2261 | 568 |

| Benchmark | MOTA | MOTP | MODA | IDSW | sMOTA |
| --- | --- | --- | --- | --- | --- |
| CAR | 89.59 % | 85.44 % | 91.77 % | 751 | 75.99 % |
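The CLEAR MOT scores can be related to the raw counts with the standard definitions from [2]: MODA = 1 - (FN + FP) / GT and MOTA = 1 - (FN + FP + IDSW) / GT, where GT = TP + FN is the number of ground-truth boxes. The following is a minimal sketch under those definitions; the function name `clear_mot` and its signature are hypothetical and not part of the benchmark's evaluation code.

```python
def clear_mot(tp: int, fp: int, fn: int, idsw: int) -> tuple[float, float]:
    """Return (MOTA, MODA) as fractions, using the standard CLEAR MOT formulas.

    Assumes the number of ground-truth boxes is tp + fn.
    """
    num_gt = tp + fn
    moda = 1.0 - (fn + fp) / num_gt
    mota = 1.0 - (fn + fp + idsw) / num_gt
    return mota, moda


# Counts read literally from the tables: FP = 2261, FN = 568.
mota_literal, moda_literal = clear_mot(tp=32131, fp=2261, fn=568, idsw=751)

# Same counts with the two error columns exchanged: FP = 568, FN = 2261.
mota_swapped, moda_swapped = clear_mot(tp=32131, fp=568, fn=2261, idsw=751)

print(f"literal: MOTA {100 * mota_literal:.2f} %, MODA {100 * moda_literal:.2f} %")
print(f"swapped: MOTA {100 * mota_swapped:.2f} %, MODA {100 * moda_swapped:.2f} %")
```

Under these assumptions, only the second call reproduces the reported 89.59 % MOTA and 91.77 % MODA. This suggests that 2261 acts as the miss count and 568 as the false-alarm count in the evaluation, although the page itself does not say so.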

| Benchmark | MT rate | PT rate | ML rate | FRAG |
| --- | --- | --- | --- | --- |
| CAR | 86.46 % | 11.08 % | 2.46 % | 276 |

| Benchmark | # Dets | # Tracks |
| --- | --- | --- |
| CAR | 32699 | 1134 |


[1] J. Luiten, A. Ošep, P. Dendorfer, P. Torr, A. Geiger, L. Leal-Taixé, B. Leibe: HOTA: A Higher Order Metric for Evaluating Multi-object Tracking. IJCV 2020.

[2] K. Bernardin, R. Stiefelhagen: Evaluating Multiple Object Tracking Performance: The CLEAR MOT Metrics. JIVP 2008.

[3] Y. Li, C. Huang, R. Nevatia: Learning to associate: HybridBoosted multi-target tracker for crowded scene. CVPR 2009.