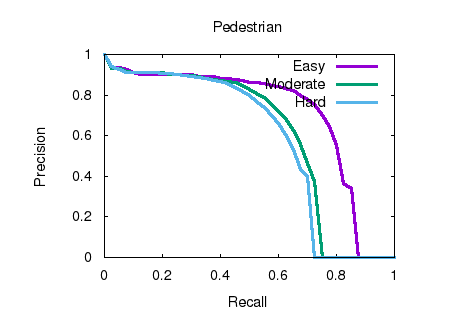

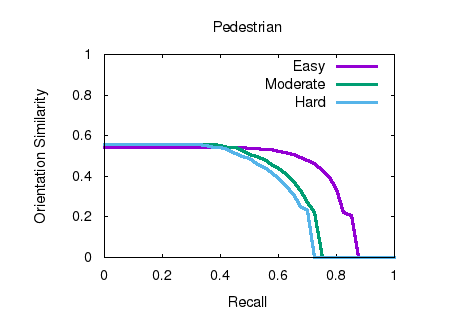

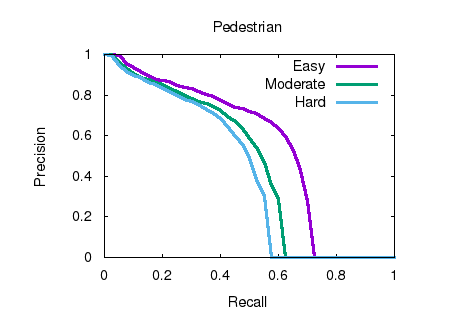

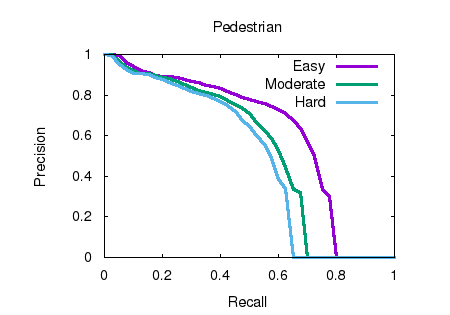

In this paper, we focus on exploring the

robustness of the3D object detection in point

clouds, which has been rarely discussed in

existing approaches. We observe two crucial

phenomena: 1) the detection accuracy of the

hard objects,e.g., Pedestrians, is

unsatisfactory, 2) when adding additional noise

points, the performance of existing

approaches de-creases rapidly. To alleviate these

problems, a novel TANet is introduced in this

paper, which mainly contains a Triple Attention

(TA) module, and a Coarse-to-Fine Regression

(CFR)module. By considering the channel-wise,

point-wise and voxel-wise attention jointly, the

TA module enhances the crucial information of

the target while suppresses the unstable

cloud points. Besides, the novel stacked TA

further exploits the multi-level feature

attention. In addition, the CFR module boosts the

accuracy of localization without excessive

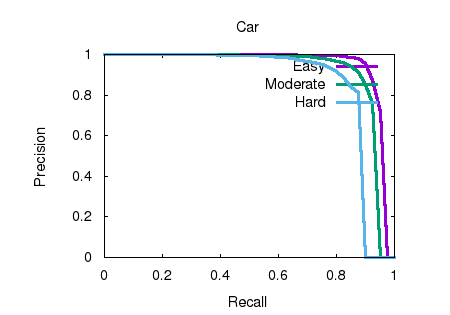

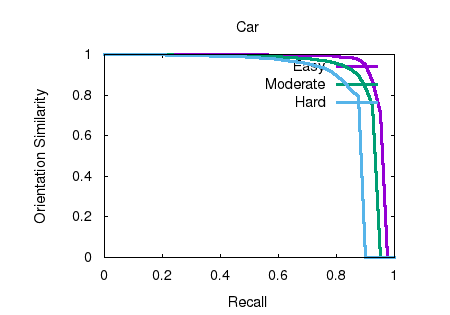

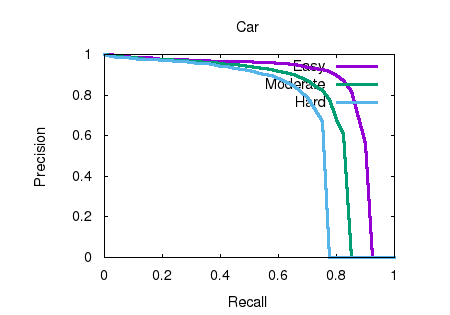

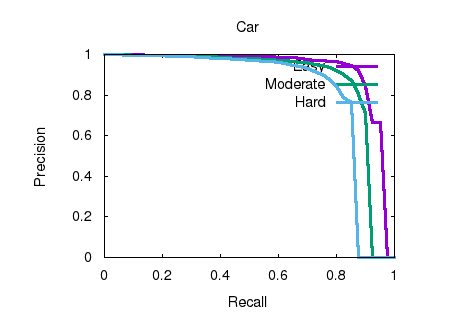

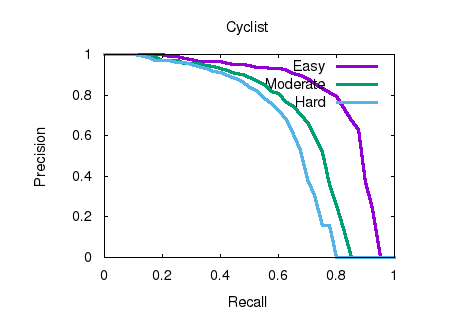

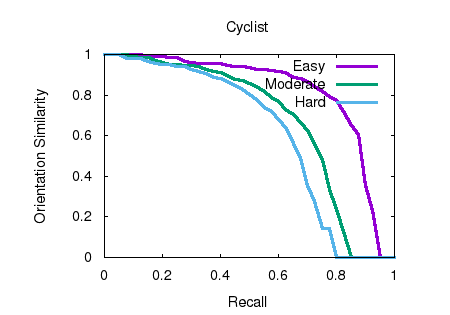

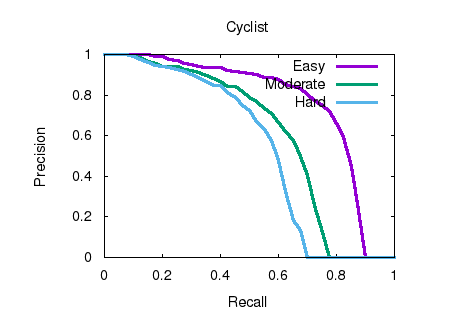

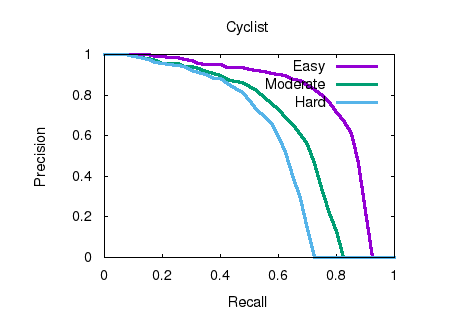

computation cost. Experimental results on the

validation set of KITTI dataset demonstrate that,

in the challenging noisy cases, i.e., adding

additional random noisy points around each object,

the presented approach goes far beyond state-of-

the-art approaches, especially for the Pedestrian

class.The running speed is around 29 frames per

second. |
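The noisy setting mentioned in the abstract (random extra points placed around each object) can be illustrated with a minimal NumPy sketch. This is not the authors' evaluation code: the function name `add_noise_points`, the uniform cube sampling, the `radius` and `num_noise` defaults, and the random intensity channel are all assumptions made for illustration.

```python
import numpy as np


def add_noise_points(points, object_centers, num_noise=100, radius=3.0, rng=None):
    """Append `num_noise` random points around each object center.

    points:         (P, 4) array of x, y, z, intensity.
    object_centers: (M, 3) array of box centers in the same frame.
    The sampling scheme and default values are illustrative assumptions.
    """
    rng = np.random.default_rng() if rng is None else rng
    points = np.asarray(points, dtype=np.float32)
    centers = np.asarray(object_centers, dtype=np.float32).reshape(-1, 3)
    if len(centers) == 0 or num_noise == 0:
        return points.copy()

    # Uniform offsets inside a cube of half-width `radius` around each center.
    offsets = rng.uniform(-radius, radius, size=(len(centers), num_noise, 3))
    xyz = (centers[:, None, :] + offsets).reshape(-1, 3)

    # Synthetic intensity in [0, 1) for the noise points (an assumption).
    intensity = rng.uniform(0.0, 1.0, size=(len(xyz), 1))
    noise = np.concatenate([xyz, intensity], axis=1).astype(np.float32)

    # Original points come first, followed by the noise for each object in order.
    return np.concatenate([points, noise], axis=0)
```

A detector's robustness could then be probed by evaluating it on the returned point cloud for increasing values of `num_noise`.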

@article{liu2019tanet,
  title={TANet: Robust 3D Object Detection from Point Clouds with Triple Attention},
  author={Zhe Liu and Xin Zhao and Tengteng Huang and Ruolan Hu and Yu Zhou and Xiang Bai},
  year={2020},
  journal={AAAI},
  url={https://arxiv.org/pdf/1912.05163.pdf},
  eprint={1912.05163},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
} |