About

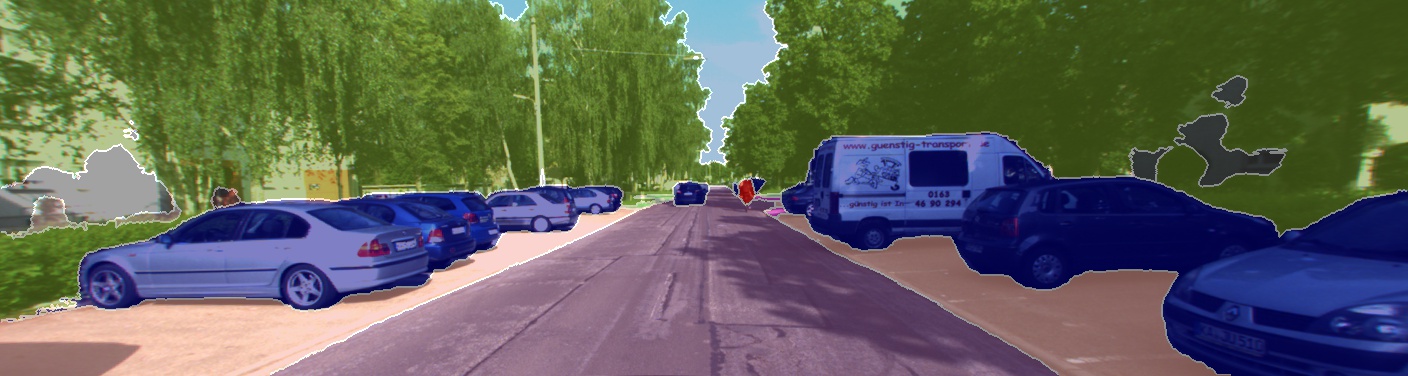

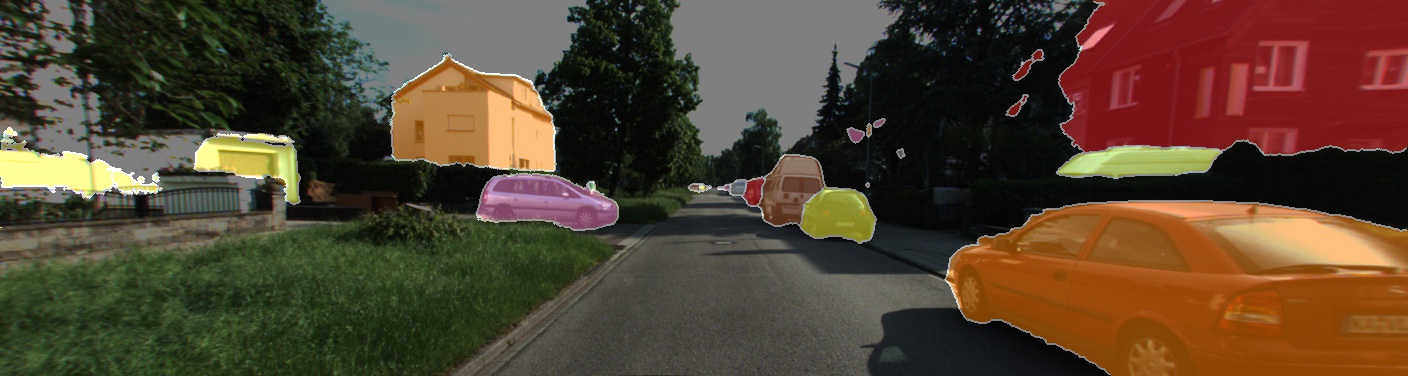

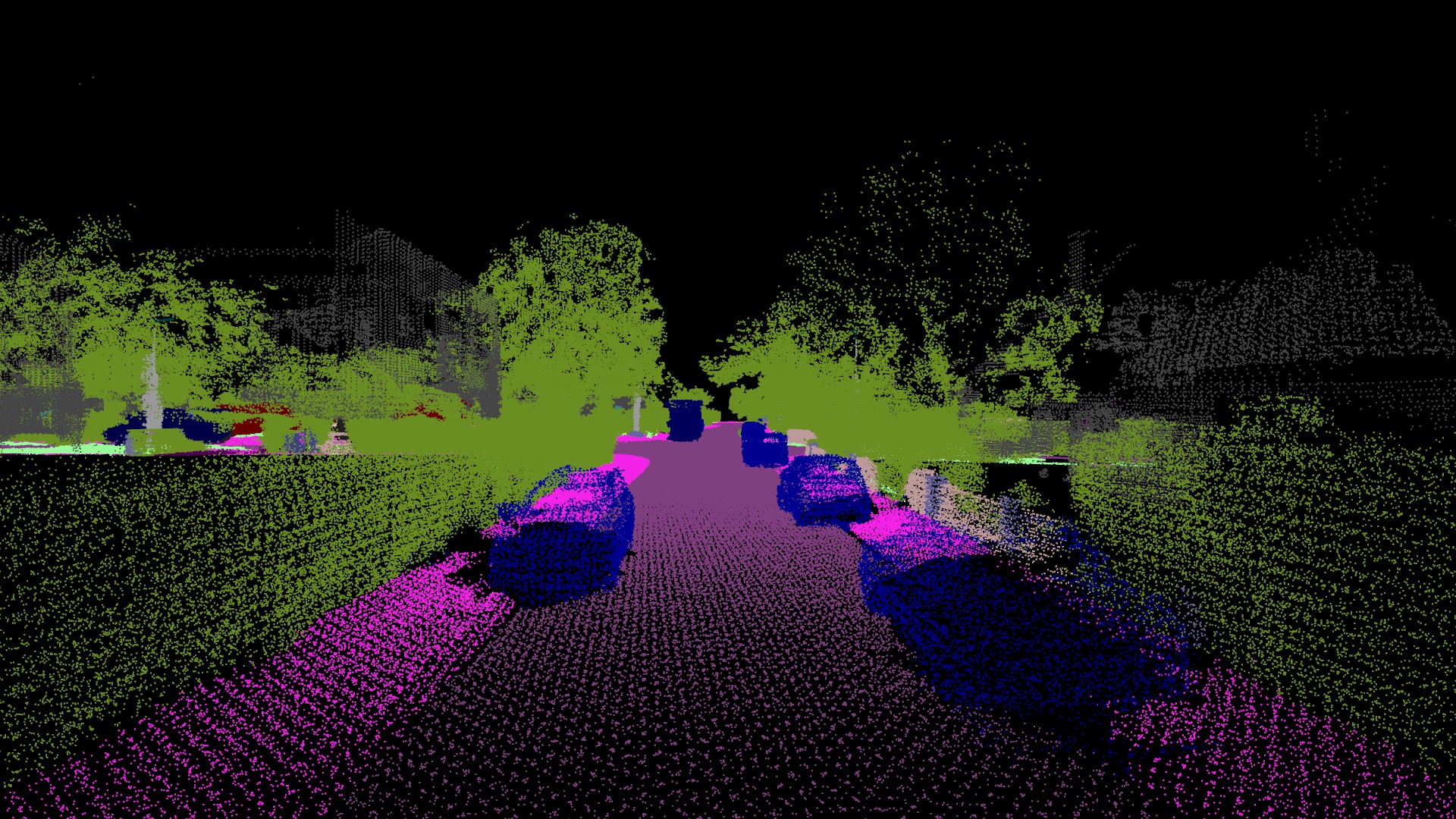

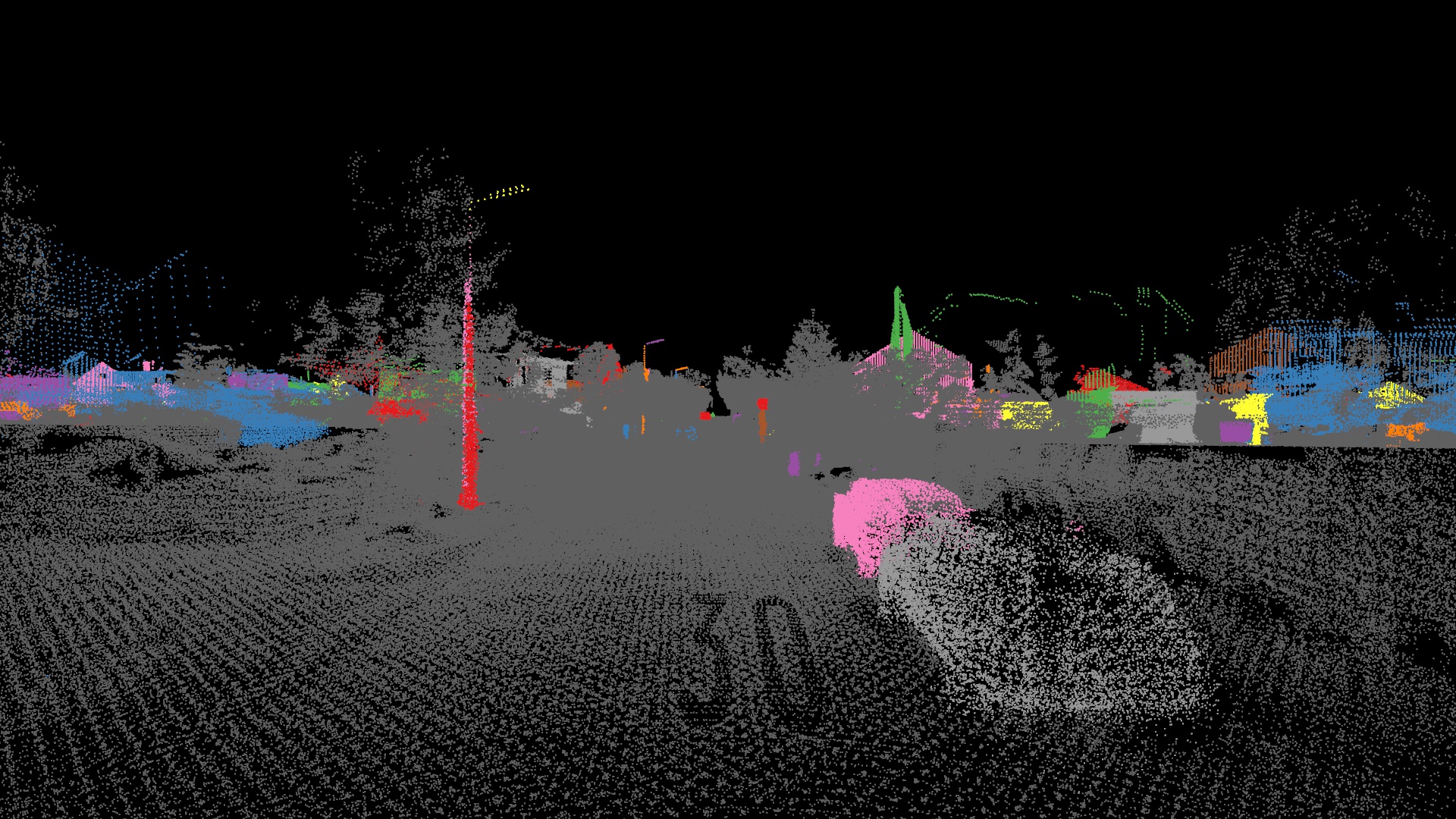

We present a large-scale dataset that contains rich sensory information and full annotations. We recorded several suburbs of Karlsruhe, Germany, collecting over 320k images and 100k laser scans over a driving distance of 73.7 km. We annotate both static and dynamic 3D scene elements with rough bounding primitives and transfer this information into the image domain, resulting in dense semantic and instance annotations for both 3D point clouds and 2D images.
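
Transferring 3D annotations into an image requires projecting labeled 3D geometry into each calibrated camera. As a rough illustration only, the sketch below splats per-point labels into a perspective image using a pinhole model and a z-buffer. It produces a sparse label map and is not the authors' label-transfer procedure, which yields dense annotations. The function name, the `IGNORE` value, and the calibration inputs `K` and `T_cam_world` are hypothetical.

```python
import numpy as np

IGNORE = 255  # hypothetical value for pixels that no labeled point projects to


def project_labels(points_world, labels, K, T_cam_world, height, width):
    """Splat per-point labels into a perspective image (nearest point wins).

    points_world: (N, 3) float array of 3D points in world coordinates.
    labels:       (N,) integer label per point (values below 255).
    K:            (3, 3) pinhole intrinsic matrix.
    T_cam_world:  (4, 4) rigid transform from world to camera coordinates.
    Returns a (height, width) uint8 label image.
    """
    n = points_world.shape[0]
    # Transform homogeneous world points into the camera frame.
    pts_h = np.hstack([points_world, np.ones((n, 1))])
    pts_cam = (T_cam_world @ pts_h.T).T[:, :3]

    # Discard points behind (or on) the camera plane.
    front = pts_cam[:, 2] > 0
    pts_cam, labels = pts_cam[front], np.asarray(labels)[front]

    # Pinhole projection: (u, v) = (K @ X) / Z.
    uvw = (K @ pts_cam.T).T
    u = np.floor(uvw[:, 0] / uvw[:, 2]).astype(int)
    v = np.floor(uvw[:, 1] / uvw[:, 2]).astype(int)
    depth = pts_cam[:, 2]

    # Keep only projections that land inside the image.
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    u, v, depth, labels = u[inside], v[inside], depth[inside], labels[inside]

    # Z-buffer: sort nearest first, then keep the first point per pixel.
    flat = v * width + u
    order = np.argsort(depth, kind="stable")
    _, first = np.unique(flat[order], return_index=True)
    nearest = order[first]

    label_img = np.full(height * width, IGNORE, dtype=np.uint8)
    label_img[flat[nearest]] = labels[nearest]
    return label_img.reshape(height, width)
```

Keeping only the nearest point per pixel is a simple way to respect occlusion. Note that a pinhole model does not describe the fisheye cameras, which would need their own projection model.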

- Driving distance: 73.7 km; frames: 4 × 83,000

- All frames accurately geolocalized (OpenStreetMap)

- Semantic label definition consistent with Cityscapes, 19 classes for evaluation

- Each instance is assigned a consistent instance ID across all frames
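
Because the label definition follows Cityscapes, an evaluation pipeline would typically collapse the full label set to the 19 evaluation classes, and consistent instance IDs make it possible to follow one object across frames. The sketch below assumes the Cityscapes label IDs for these classes and the Cityscapes instance encoding `semantic_id * 1000 + instance_number`; whether KITTI-360 files use exactly these values should be checked against the official tools. All function and constant names are hypothetical.

```python
from collections import defaultdict

import numpy as np

# Cityscapes label IDs of the 19 evaluation classes, listed in trainId order 0..18.
# Assumption: KITTI-360 uses the same IDs for these classes.
EVAL_LABEL_IDS = [
    7, 8, 11, 12, 13,      # road, sidewalk, building, wall, fence
    17, 19, 20, 21, 22,    # pole, traffic light, traffic sign, vegetation, terrain
    23, 24, 25, 26, 27,    # sky, person, rider, car, truck
    28, 31, 32, 33,        # bus, train, motorcycle, bicycle
]
IGNORE_TRAIN_ID = 255  # every non-evaluated class maps here


def to_train_ids(label_img):
    """Map a uint8 semantic label image to the 19 evaluation train IDs."""
    lut = np.full(256, IGNORE_TRAIN_ID, dtype=np.uint8)
    for train_id, label_id in enumerate(EVAL_LABEL_IDS):
        lut[label_id] = train_id
    return lut[label_img]


def split_instance_id(encoded):
    """Decode an assumed Cityscapes-style value semantic_id * 1000 + instance_number."""
    return encoded // 1000, encoded % 1000


def frames_per_instance(instance_maps):
    """Collect, for each global instance value, the frame indices where it appears."""
    frames = defaultdict(list)
    for frame_idx, inst_map in enumerate(instance_maps):
        for value in np.unique(inst_map):
            if value >= 1000:  # assumption: values below 1000 carry no instance
                frames[int(value)].append(frame_idx)
    return dict(frames)
```

A lookup table keeps the class mapping a single vectorized indexing step, and a frame-index dictionary is only meaningful because the same instance keeps the same ID in every frame.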

Sensors

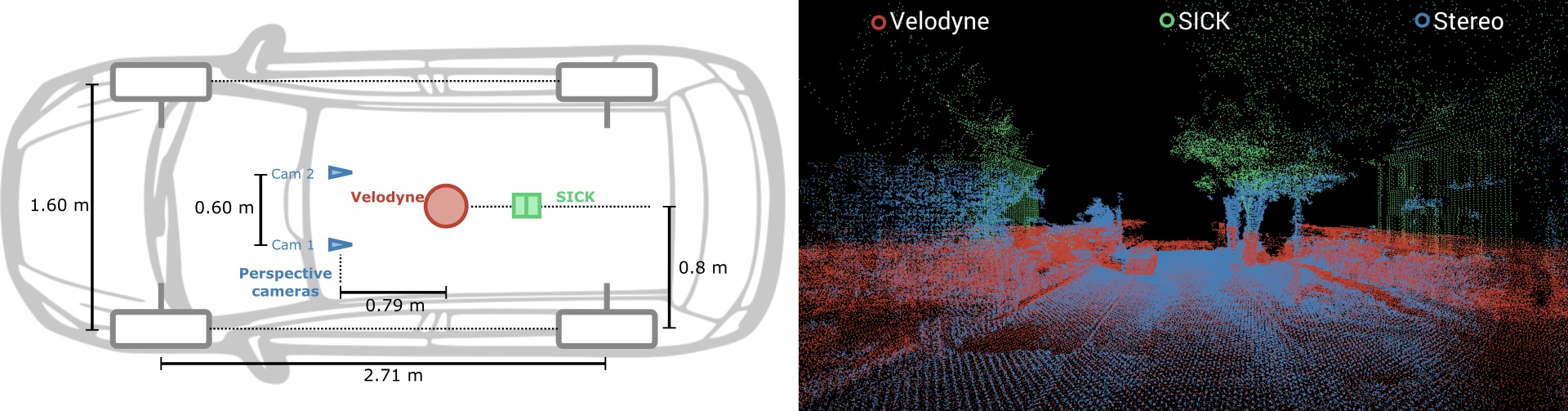

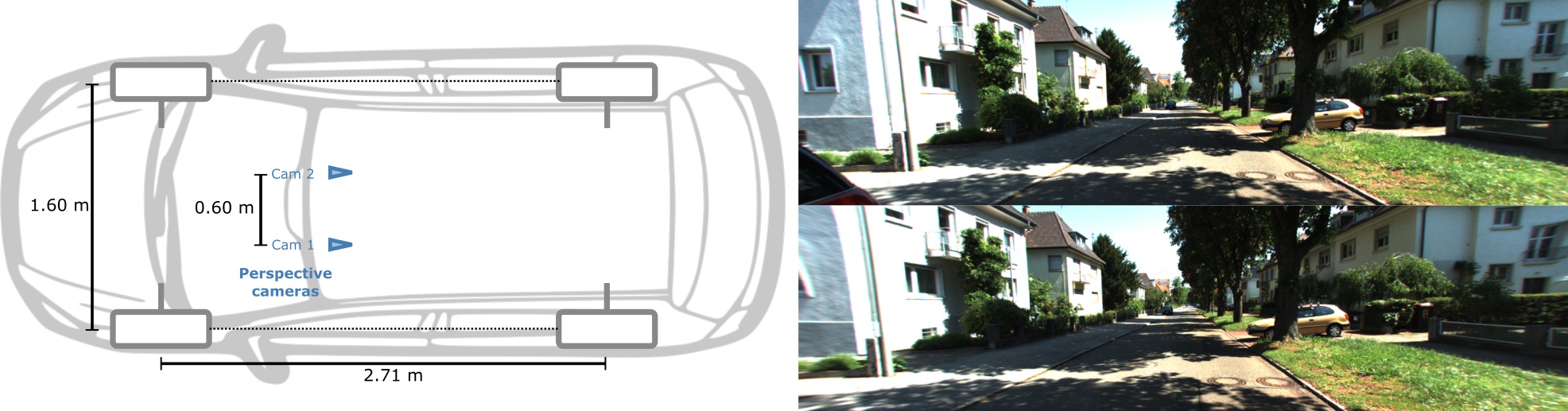

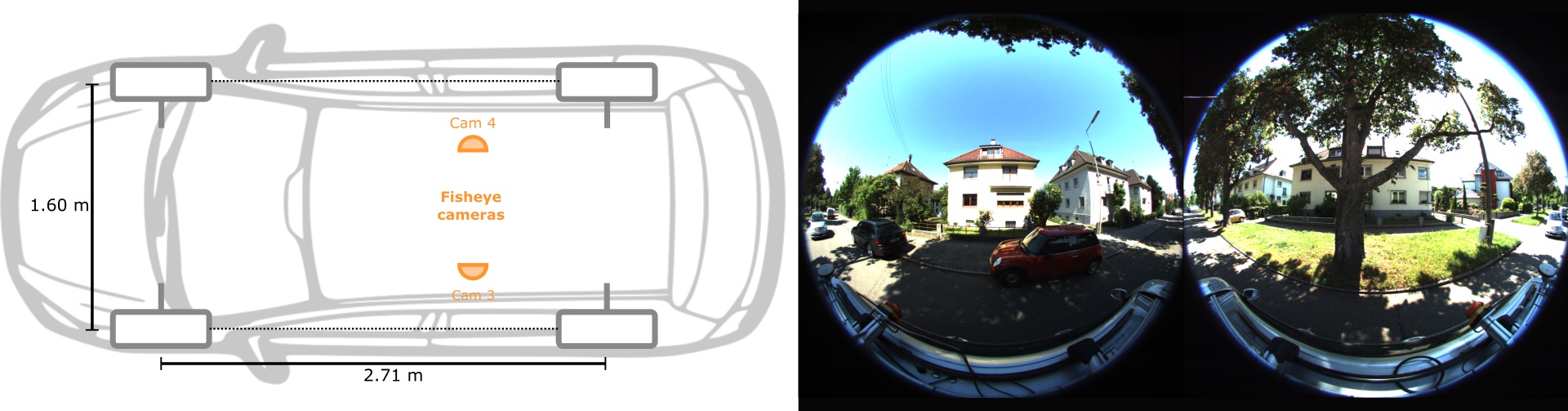

For our data collection, we equipped a station wagon with one 180° fisheye camera on each side and a forward-facing 90° perspective stereo camera (baseline 60 cm). Furthermore, we mounted a Velodyne HDL-64E and a SICK LMS 200 laser scanning unit in pushbroom configuration on top of the roof. This setup is similar to the one used in KITTI, except that we gain a full 360° field of view thanks to the additional fisheye cameras and the pushbroom laser scanner, whereas KITTI only provides perspective images and Velodyne laser scans with a 26.8° vertical field of view. In addition, the vehicle is equipped with an IMU/GPS localization system.
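
The 60 cm baseline determines how precisely the front stereo pair can recover depth. A minimal sketch using the standard rectified-stereo relation Z = f * B / d and its first-order error |dZ| = Z^2 / (f * B) * |dd| follows; the focal length below is a hypothetical placeholder, not a KITTI-360 calibration value, and the function names are illustrative.

```python
BASELINE_M = 0.60  # stereo baseline of the front camera pair (60 cm, from the text)


def depth_from_disparity(disparity_px, focal_px, baseline_m=BASELINE_M):
    """Depth of a point in a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(depth_m, focal_px, baseline_m=BASELINE_M, disparity_err_px=1.0):
    """First-order depth uncertainty for a disparity error: dZ = Z**2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


if __name__ == "__main__":
    f = 550.0  # hypothetical focal length in pixels
    z = depth_from_disparity(33.0, f)
    print(f"depth: {z:.2f} m, error for a 1 px disparity error: {depth_error(z, f):.2f} m")
```

With the assumed 550 px focal length, a 33 px disparity corresponds to 10 m, where a 1 px disparity error already shifts depth by about 0.3 m. Because the error grows with Z^2, a wider baseline pays off mainly at long range.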

Citation

title = {{KITTI}-360: A Novel Dataset and Benchmarks for Urban Scene Understanding in 2D and 3D},
author = {Yiyi Liao and Jun Xie and Andreas Geiger},
journal = {Pattern Analysis and Machine Intelligence (PAMI)},
year = {2022},
}

KITTI-360