Slow Flow: Generating Optical Flow Reference Data

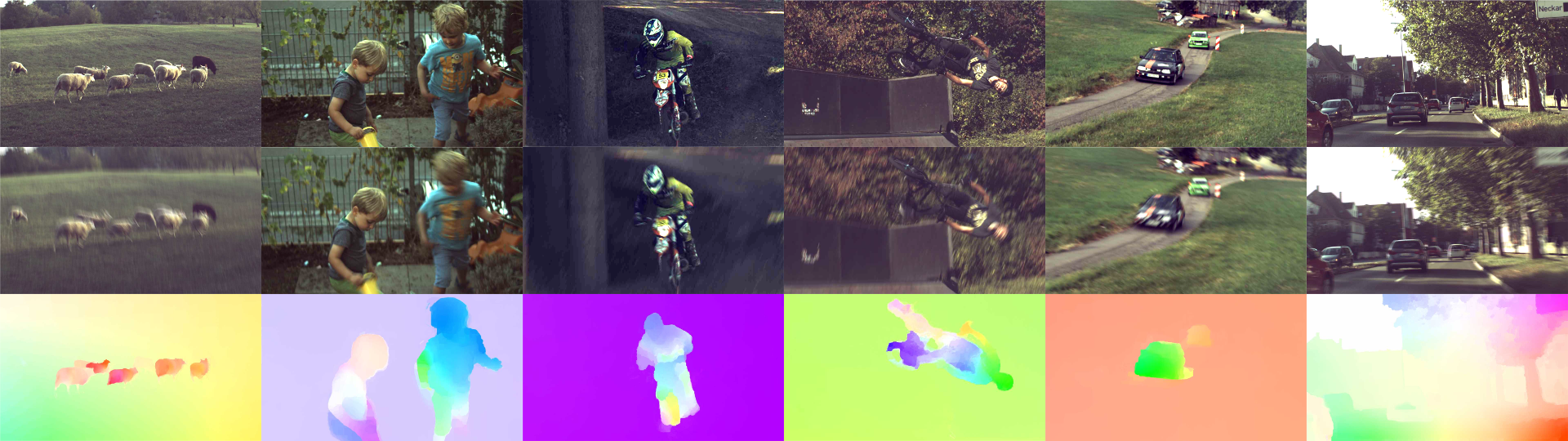

Existing optical flow datasets are limited in size and variability due to the difficulty of capturing dense ground truth. In this paper, we tackle this problem by tracking pixels through densely sampled space-time volumes recorded with a high-speed video camera. Our model exploits the linearity of small motions and reasons about occlusions from multiple frames. Using our technique, we are able to establish accurate reference flow fields outside the laboratory in natural environments. We also show how our predictions can be used to augment the input images with realistic motion blur. We demonstrate the quality of the produced flow fields on synthetic and real-world datasets.
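To give a rough intuition for how high-speed footage relates to motion blur, the sketch below averages a window of consecutive high-speed frames pixel by pixel. This is a deliberate simplification and not the method from the paper, which uses the estimated flow to synthesize blur. The function name `blur_by_averaging`, the list-of-rows image layout, and the treatment of a blur length of 0 as "return the unblurred first frame" are assumptions made for illustration.

```python
def blur_by_averaging(frames, blur_length):
    """Average the first `blur_length` frames pixel by pixel.

    `frames` is a list of grayscale images, each a list of rows of floats.
    A blur_length of 0 (or 1) returns a copy of the first frame, i.e. no blur.
    Hypothetical helper: a simplification, not the paper's blur model.
    """
    if not frames:
        raise ValueError("need at least one frame")
    if blur_length < 0 or blur_length > len(frames):
        raise ValueError("blur_length out of range")
    # Use at least one frame so that blur_length == 0 means "unblurred".
    window = frames[:max(blur_length, 1)]
    height, width = len(window[0]), len(window[0][0])
    return [
        [sum(f[y][x] for f in window) / len(window) for x in range(width)]
        for y in range(height)
    ]


# A single bright pixel moving one pixel to the right per high-speed frame.
frames = [
    [[1.0, 0.0, 0.0, 0.0]],
    [[0.0, 1.0, 0.0, 0.0]],
    [[0.0, 0.0, 1.0, 0.0]],
    [[0.0, 0.0, 0.0, 1.0]],
]
print(blur_by_averaging(frames, 0))  # [[1.0, 0.0, 0.0, 0.0]]
print(blur_by_averaging(frames, 4))  # [[0.25, 0.25, 0.25, 0.25]]
```

Longer windows smear the moving pixel over a longer streak, which is the qualitative effect a longer exposure would have.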

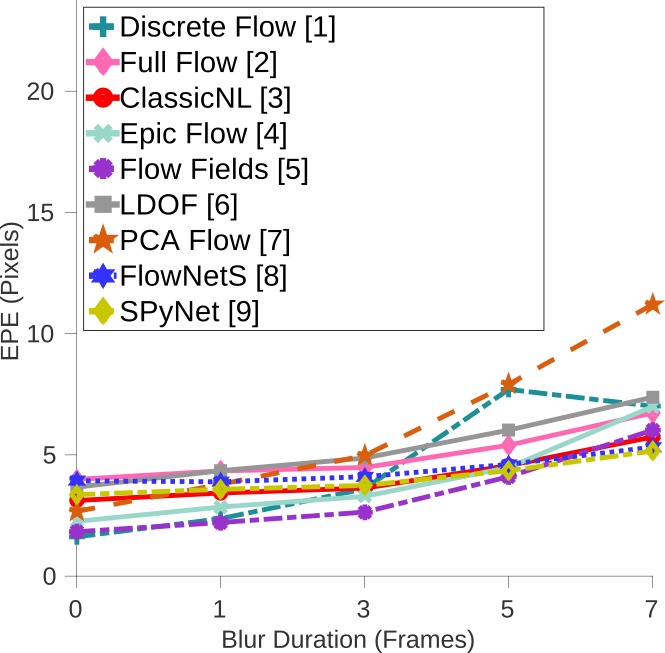

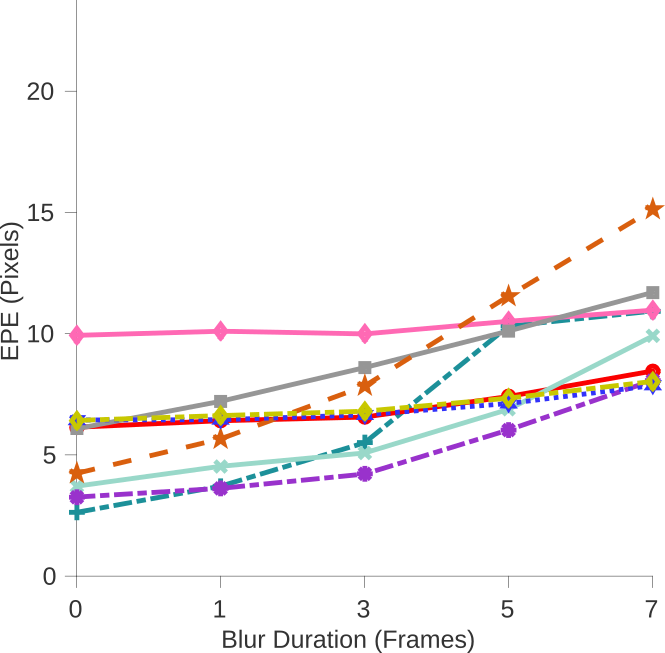

Real-World Benchmark

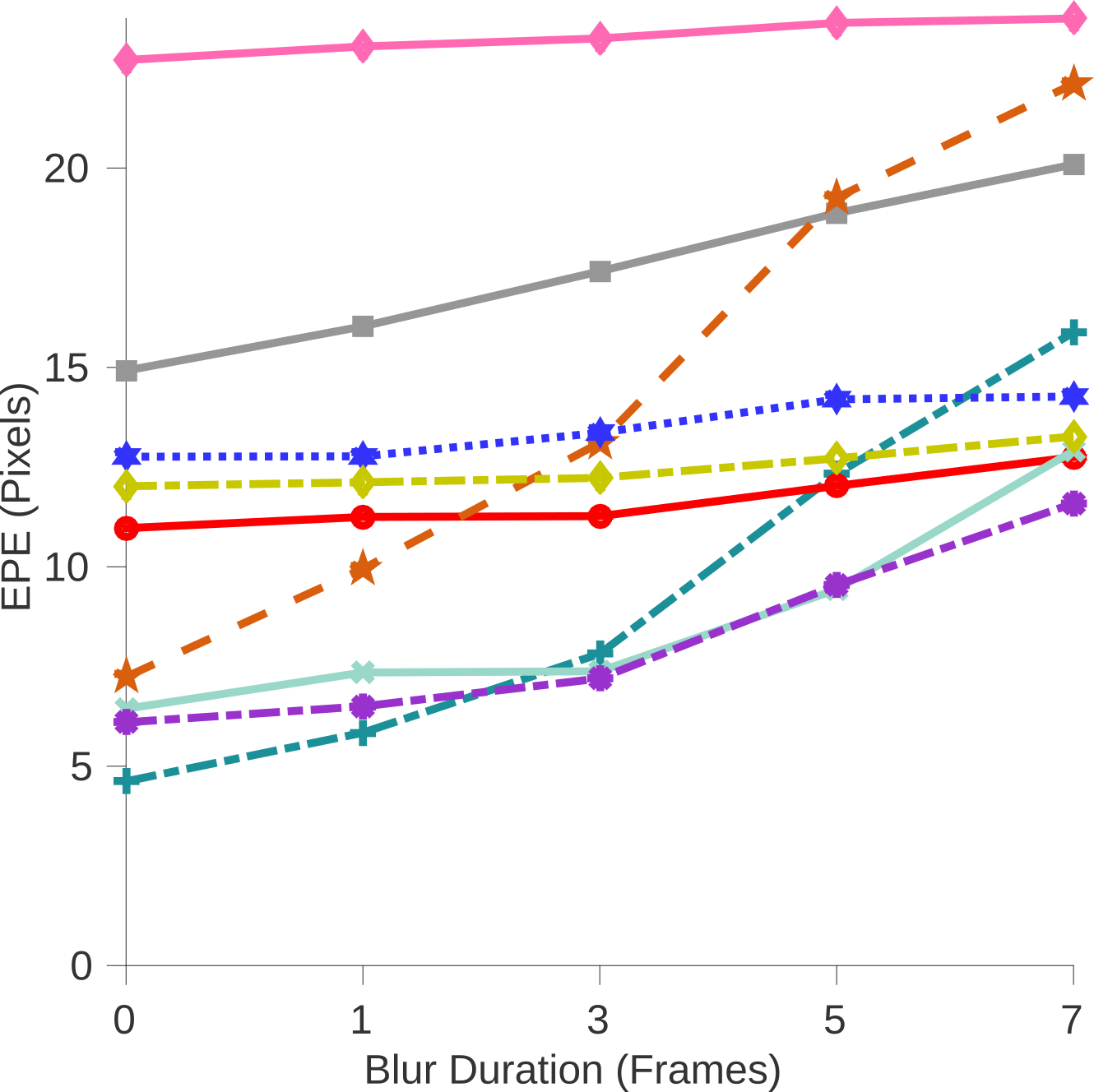

We report evaluation results in terms of average end-point error (EPE) over 160 diverse real-world sequences of dynamic scenes. The performance for different motion magnitudes (100, 200, and 300 pixels) is shown in three separate plots. In each plot, the x-axis varies the blur length, which is specified as the number of blurred frames at the highest temporal resolution (0 indicates the original unblurred images). More details can be found in the paper and supplementary material.
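For readers unfamiliar with the metric, the average end-point error is the mean Euclidean distance between estimated and reference flow vectors. The following is a minimal sketch in plain Python; the function name `average_epe` and the list-of-tuples representation are assumptions for illustration and do not reflect the benchmark's actual evaluation code, which may additionally mask occluded or invalid pixels.

```python
import math


def average_epe(estimated, reference):
    """Mean Euclidean distance between estimated and reference flow vectors.

    Both arguments are lists of (u, v) tuples in pixels, one per evaluated
    pixel. Hypothetical helper, not the benchmark's evaluation code.
    """
    if len(estimated) != len(reference):
        raise ValueError("flow fields must have the same number of vectors")
    if not estimated:
        raise ValueError("flow fields must not be empty")
    total = 0.0
    for (u_est, v_est), (u_ref, v_ref) in zip(estimated, reference):
        # End-point error of one pixel: length of the difference vector.
        total += math.hypot(u_est - u_ref, v_est - v_ref)
    return total / len(estimated)


estimated = [(3.0, 4.0), (1.0, 1.0)]
reference = [(0.0, 0.0), (1.0, 1.0)]
print(average_epe(estimated, reference))  # 2.5
```

In the example, the first vector is off by 5 pixels and the second is exact, giving an average of 2.5 pixels.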

100px Flow Magnitude

200px Flow Magnitude

300px Flow Magnitude

[1] M. Menze, C. Heipke, and A. Geiger. Discrete optimization for optical flow. GCPR 2015.

[2] Q. Chen and V. Koltun. Full flow: Optical flow estimation by global optimization over regular grids. CVPR 2016.

[3] D. Sun, S. Roth, and M. J. Black. A quantitative analysis of current practices in optical flow estimation and the principles behind them. IJCV 2014.

[4] J. Revaud, P. Weinzaepfel, Z. Harchaoui, and C. Schmid. EpicFlow: Edge-preserving interpolation of correspondences for optical flow. CVPR 2015.

[5] C. Bailer, B. Taetz, and D. Stricker. Flow fields: Dense correspondence fields for highly accurate large displacement optical flow estimation. ICCV 2015.

[6] T. Brox and J. Malik. Large displacement optical flow: Descriptor matching in variational motion estimation. PAMI 2011.

[7] J. Wulff and M. J. Black. Efficient sparse-to-dense optical flow estimation using a learned basis and layers. CVPR 2015.

[8] A. Dosovitskiy, P. Fischer, E. Ilg, P. Haeusser, C. Hazirbas, V. Golkov, P. v.d. Smagt, D. Cremers, and T. Brox. FlowNet: Learning optical flow with convolutional networks. ICCV 2015.

[9] A. Ranjan and M. J. Black. Optical flow estimation using a spatial pyramid network. CVPR 2017.

Changelog

- 18.04.2020: Added Slow Flow and Sintel High-Speed datasets!

- 03.08.2017: Teaser dataset online and video added!

- 21.07.2017: Benchmark dataset online!

- 20.07.2017: First version online!

Downloads

- Paper (pdf, 1.5 MB)

- Supplementary Material (pdf, 5 MB)

- Video

- Code (C++, Git repository)

- Benchmark Dataset (zip, 9.6 GB)

- Teaser Dataset (zip, 9.0 GB)

High-Speed Sintel Dataset:

High-Speed Slow Flow Dataset:

Note: slow_flow.zip is a split archive. To extract it, first fuse the zip parts into a single archive, then unzip the fused archive. Use the following commands:

zip -FF slow_flow.zip --out slow_flow_complete.zip

unzip slow_flow_complete.zip

Citation

@inproceedings{Janai2017CVPR,

author = {Joel Janai and Fatma Güney and Jonas Wulff and Michael Black and Andreas Geiger},

title = {Slow Flow: Exploiting High-Speed Cameras for Accurate and Diverse Optical Flow Reference Data},

booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2017}

}