https://github.com/Nicholasli1995/EgoNet Submitted on 29 Mar. 2021 13:22 by Shichao Li (Hong Kong University of Science and Technology)

Method

Detailed Results

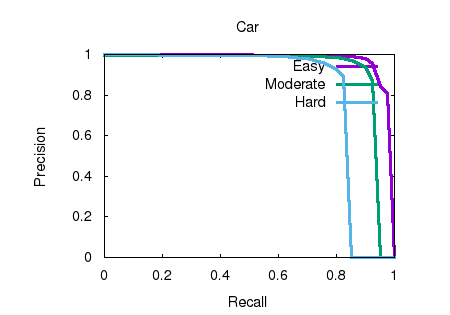

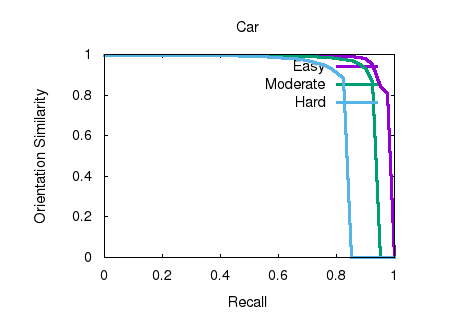

Object detection and orientation estimation results. Results for object detection are given in terms of average precision (AP) and results for joint object detection and orientation estimation are provided in terms of average orientation similarity (AOS).
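AOS weights each detection by how well its predicted orientation agrees with the ground truth. The sketch below shows the idea, assuming the usual KITTI-style definition. Each true positive contributes (1 + cos Δθ)/2, where Δθ is the angle error, and each false positive contributes 0. These contributions are averaged over all detections up to a given rank, and the result is interpolated over sampled recall levels in the same way precision is for AP. The function names are hypothetical. The recall sampling is also an assumption: the current KITTI server is commonly described as using 40 recall positions, while the original definition used 11, and this page does not say which one the figures use. The official evaluation code also handles difficulty levels, ignored regions and IoU matching, and none of that is reproduced here.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical recall sampling grids (assumption: 40-point vs. original 11-point).
R40 = [i / 40 for i in range(1, 41)]
R11 = [i / 10 for i in range(11)]


def pr_and_os_curves(
    detections: Sequence[Tuple[bool, float]], num_gt: int
) -> Tuple[List[float], List[float], List[float]]:
    """Compute recall, precision and orientation similarity at each rank.

    `detections` holds (is_true_positive, delta_theta) pairs, already sorted
    by descending confidence. False positives contribute 0 to the similarity.
    """
    recalls, precisions, sims = [], [], []
    tp = 0
    sim_sum = 0.0
    for k, (is_tp, dtheta) in enumerate(detections, start=1):
        if is_tp:
            tp += 1
            # Cosine similarity of orientations, rescaled to [0, 1].
            sim_sum += (1.0 + math.cos(dtheta)) / 2.0
        recalls.append(tp / num_gt)
        precisions.append(tp / k)
        sims.append(sim_sum / k)
    return recalls, precisions, sims


def interpolated_average(
    recalls: Sequence[float], values: Sequence[float], recall_levels: Sequence[float]
) -> float:
    """Average, over the sampled recall levels r, of max value at recall >= r."""
    total = 0.0
    for r in recall_levels:
        total += max((v for rc, v in zip(recalls, values) if rc >= r), default=0.0)
    return total / len(recall_levels)


if __name__ == "__main__":
    # Toy example: two ground-truth objects, three ranked detections.
    dets = [(True, 0.0), (False, 0.0), (True, math.pi / 2)]
    rec, prec, sim = pr_and_os_curves(dets, num_gt=2)
    ap = interpolated_average(rec, prec, R40)
    aos = interpolated_average(rec, sim, R40)
    print(f"AP={ap:.4f}  AOS={aos:.4f}")
```

At every rank the similarity term is at most the precision, so AOS never exceeds AP. The two are equal only when every true positive has a perfectly estimated orientation. This is why AOS figures in a table like the one above are read against the corresponding AP.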